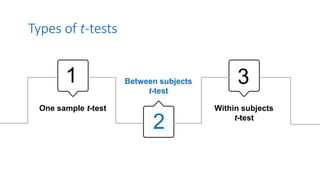

1. The document discusses different types of t-tests, including the between-subjects (independent-samples) t-test.

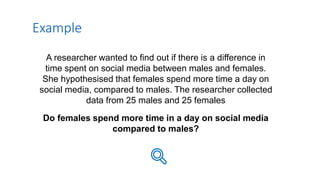

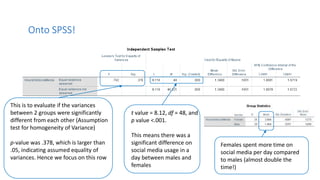

2. It provides an example of a between-subjects t-test comparing time spent on social media between males and females; the results show that females spent significantly more time than males.

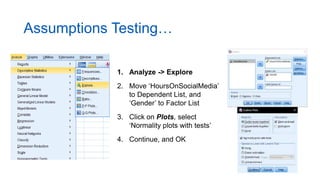

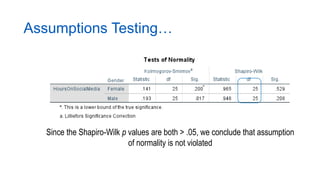

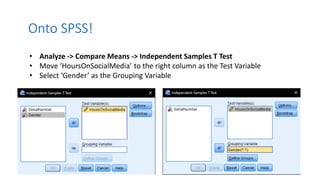

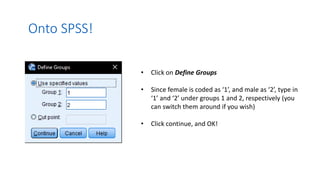

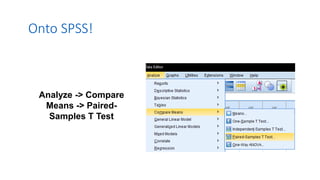

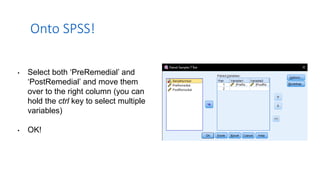

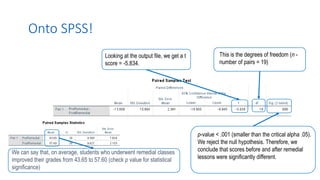

3. It gives guidance on testing the assumptions, running the t-test in SPSS, and interpreting the output, including the significant difference found between the two groups.
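
The calculation behind a between-subjects t-test can be sketched in a few lines of Python. This is only an illustration, not the SPSS procedure the slides walk through: the function name `between_subjects_t`, the `TTestResult` type, and the hours-per-day figures are all hypothetical and are not taken from the document's data. The sketch assumes the pooled-variance (Student's) form of the test, and the variance ratio is a crude stand-in for the Levene's test that SPSS reports.

```python
import math
import statistics
from typing import NamedTuple, Sequence


class TTestResult(NamedTuple):
    t: float          # t statistic
    df: int           # degrees of freedom (n_a + n_b - 2)
    mean_diff: float  # mean of group a minus mean of group b


def between_subjects_t(a: Sequence[float], b: Sequence[float]) -> TTestResult:
    """Student's independent-samples t-test using a pooled variance estimate."""
    n_a, n_b = len(a), len(b)
    var_a = statistics.variance(a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    # Standard error of the difference between the two group means.
    se = math.sqrt(pooled * (1 / n_a + 1 / n_b))
    mean_diff = statistics.mean(a) - statistics.mean(b)
    return TTestResult(mean_diff / se, df, mean_diff)


# Hypothetical hours per day on social media (made up for illustration only).
females = [3.5, 4.0, 2.8, 5.1, 4.4, 3.9]
males = [2.1, 3.0, 2.5, 1.8, 2.9, 2.4]

result = between_subjects_t(females, males)

# Crude homogeneity-of-variance screen: ratio of larger to smaller variance.
# This is NOT Levene's test; it only flags grossly unequal spreads.
variances = [statistics.variance(females), statistics.variance(males)]
variance_ratio = max(variances) / min(variances)

print(f"t({result.df}) = {result.t:.2f}, mean difference = {result.mean_diff:.2f}")
print(f"variance ratio = {variance_ratio:.2f}")
```

The t statistic is then compared with the critical value for the given degrees of freedom (2.228 for a two-tailed test at α = .05 with 10 degrees of freedom), or its p-value is read from the software output, to decide whether the group difference is significant.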