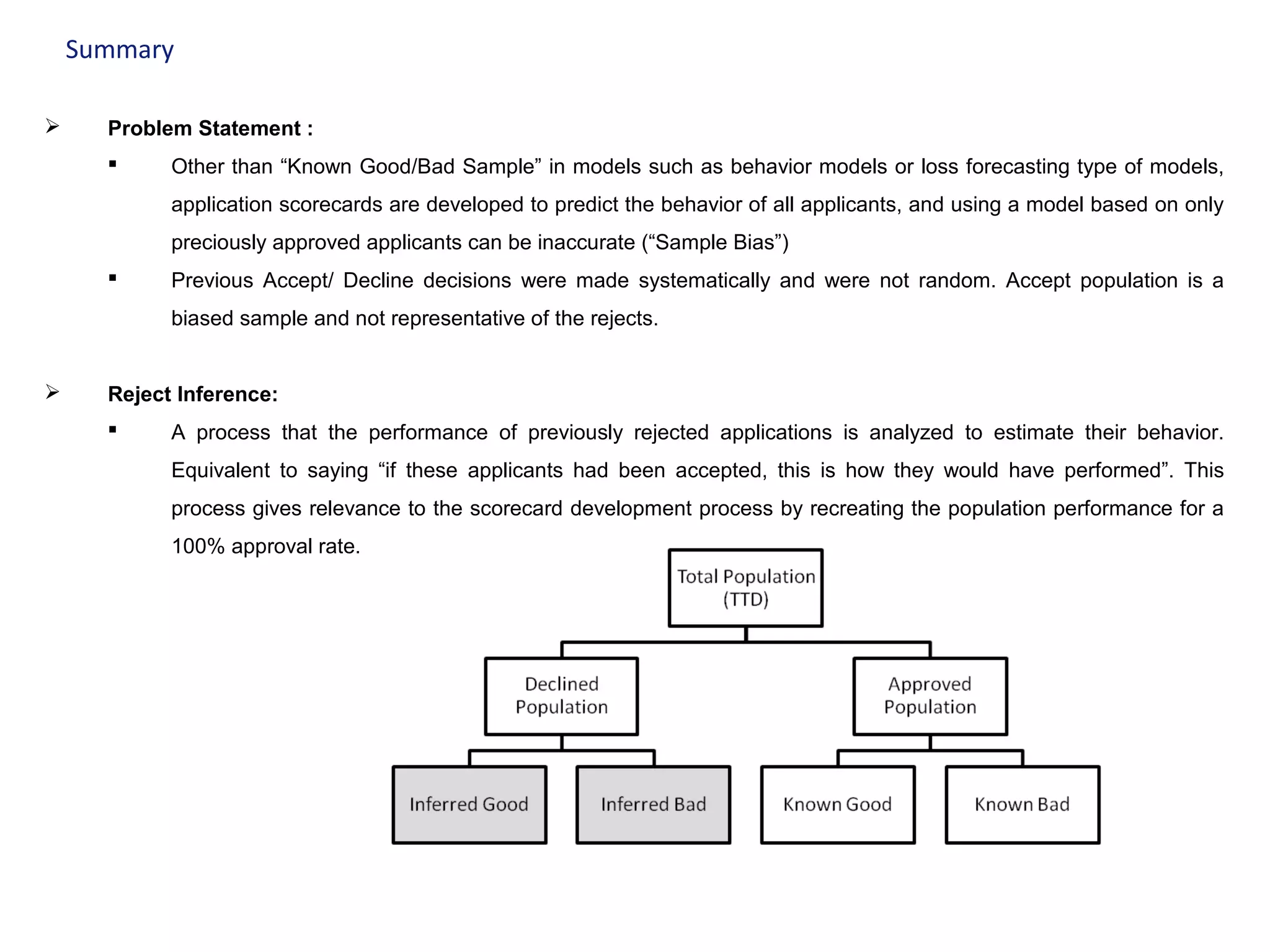

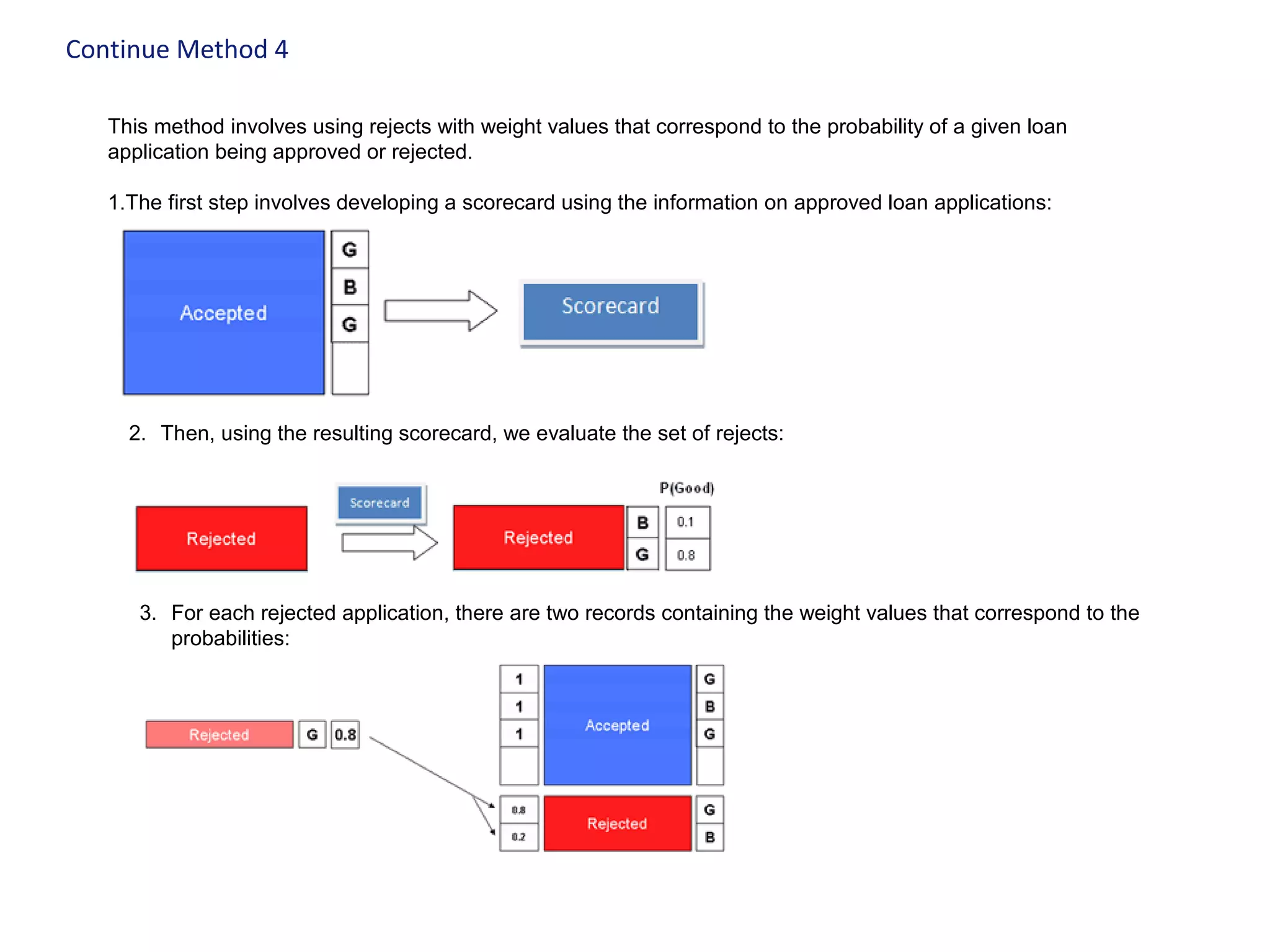

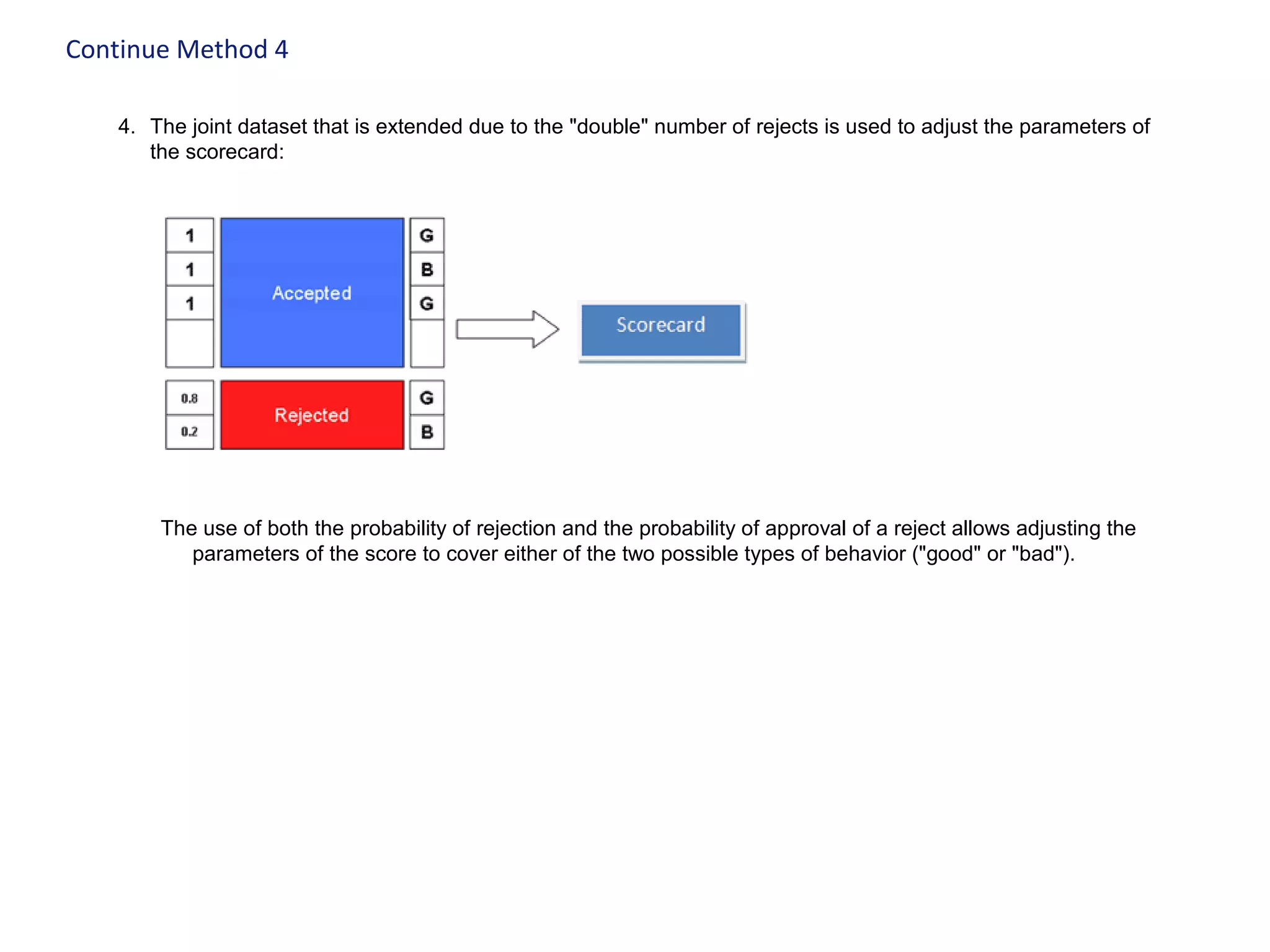

The document discusses techniques for reject inference when developing credit risk models. Reject inference addresses the sample bias that arises when a model is built only on approved applicants, by estimating how previously rejected applicants would have performed had they been approved. Four methods are described: (1) using the performance of rejects who were approved elsewhere, (2) approving all applications, (3) using supplemental bureau data on rejects, and (4) replicating rejects with weights based on predicted approval/rejection probabilities. The last method develops a scorecard on approved applicants, scores the rejects with it, and then replicates each reject with weights equal to its predicted probabilities of good and bad behavior.
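The fourth method can be illustrated with a minimal sketch. It is not the document's implementation: the function names (`fit_logistic`, `fuzzy_augment`), the single feature, and the synthetic data are all hypothetical, and a hand-rolled logistic regression stands in for a real scorecard. The sketch assumes that each reject is duplicated into one "bad" record and one "good" record, weighted by the predicted probability of each outcome, an approach often called fuzzy augmentation.

```python
import math
import random

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def fit_logistic(X, y, sample_weight=None, lr=0.1, epochs=500):
    """Weighted logistic regression via batch gradient descent.
    Returns [intercept, coef_1, ..., coef_d]. Stand-in for a scorecard."""
    n, d = len(X), len(X[0])
    w = sample_weight if sample_weight is not None else [1.0] * n
    total = sum(w)
    beta = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for xi, yi, wi in zip(X, y, w):
            p = sigmoid(beta[0] + sum(b * v for b, v in zip(beta[1:], xi)))
            err = wi * (p - yi)
            grad[0] += err
            for j, v in enumerate(xi):
                grad[j + 1] += err * v
        beta = [b - lr * g / total for b, g in zip(beta, grad)]
    return beta

def predict_bad(beta, x):
    # Predicted probability that an applicant turns out "bad" (y = 1).
    return sigmoid(beta[0] + sum(b * v for b, v in zip(beta[1:], x)))

def fuzzy_augment(X_acc, y_acc, X_rej):
    """Build a scorecard on approvals, score the rejects, and replicate
    each reject as a bad record (weight p_bad) and a good record
    (weight 1 - p_bad). Approved records keep weight 1."""
    beta = fit_logistic(X_acc, y_acc)
    X, y, w = list(X_acc), list(y_acc), [1.0] * len(X_acc)
    for x in X_rej:
        p_bad = predict_bad(beta, x)
        X += [x, x]
        y += [1, 0]
        w += [p_bad, 1.0 - p_bad]
    return X, y, w

# Synthetic illustration only: one feature, with rejects skewed
# toward lower (riskier) values than approvals.
random.seed(0)
X_acc = [[random.gauss(1.0, 1.0)] for _ in range(200)]
y_acc = [1 if random.random() < sigmoid(-x[0]) else 0 for x in X_acc]
X_rej = [[random.gauss(-1.0, 1.0)] for _ in range(100)]

X_aug, y_aug, w_aug = fuzzy_augment(X_acc, y_acc, X_rej)

# Final model fitted on approvals plus weighted, replicated rejects.
final_beta = fit_logistic(X_aug, y_aug, sample_weight=w_aug)
```

Because the two weights for each reject sum to one, every rejected applicant contributes the same total weight as one approved applicant. In practice the weights may be adjusted further, for example to reflect the approval rate, but that step is outside this sketch.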