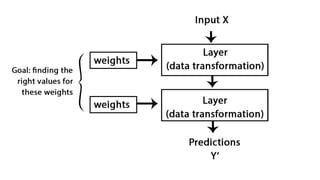

The document gives an overview of deep learning with TensorFlow and Keras, outlining the basic concepts of tensors and neural networks. It includes code examples for building models in R and shows how to compile and fit them. It also discusses where deep learning applies, including image processing and natural language processing.

![> parameters <- model %>%
+   get_layer("parameters") %>%
+   (function(x) x$get_weights())
> parameters
[[1]]
         [,1]
[1,] 1.985788
[[2]]
[1] 1.090006](https://image.slidesharecdn.com/sdss2019-190926064429/85/R-Interface-for-TensorFlow-41-320.jpg)
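The printed list has the shape one would expect from a Keras dense layer with one input and one unit: `[[1]]` is the 1 x 1 kernel (weight) matrix and `[[2]]` is the bias. As a minimal base-R sketch, assuming the `parameters` layer uses a linear activation (the slide does not show how that layer was defined), the layer's output for an input `x` is `kernel * x + bias`. The numbers are copied from the output above; `predict_linear` is a hypothetical helper written for illustration, not a keras function.

```r
# Values copied from the printed output above.
parameters <- list(
  matrix(1.985788, nrow = 1, ncol = 1),  # [[1]]: kernel (weights), 1 x 1
  1.090006                               # [[2]]: bias
)

kernel <- parameters[[1]]
bias   <- parameters[[2]]

# Hypothetical helper: what a single-unit dense layer with a linear
# activation computes for a vector of scalar inputs (assumption: linear
# activation, which the slide does not confirm).
predict_linear <- function(x) {
  as.vector(matrix(x, ncol = 1) %*% kernel) + bias
}

predictions <- predict_linear(c(0, 1, 2))
print(predictions)
```

Read this way, the fitted layer is an ordinary straight line with a slope of roughly 2 and an intercept of roughly 1.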

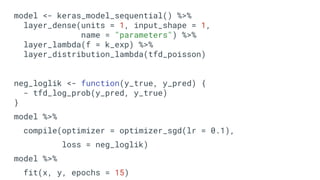

![model <- keras_model_sequential() %>%
  layer_dense(units = 8675309, input_shape = 1,
              name = "parameters") %>%
  layer_dense_variational(
    units = 2,
    make_posterior_fn = posterior_mean_field,
    make_prior_fn = prior_trainable,
    kl_weight = 1 / n_rows,
    activation = "linear"
  ) %>%
  layer_distribution_lambda(function(t) {
    tfd_normal(t[,1], k_softplus(t[,2]))
  })
HETEROSCEDASTIC ERRORS OMGWTFBBQ](https://image.slidesharecdn.com/sdss2019-190926064429/85/R-Interface-for-TensorFlow-47-320.jpg)
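The final layer in the slide turns a two-column tensor into a normal distribution: the first column is used as the mean and the second, passed through softplus, as the standard deviation. Because the standard deviation comes from the network output, it can differ from one observation to the next, which is what makes the errors heteroscedastic. The base-R sketch below illustrates only that mapping. The matrix `t_out` (standing in for `t`) and the responses `y` are made-up values, and scoring with the negative log-likelihood is an assumption, since the slide does not show the loss.

```r
# Softplus maps any real number to a strictly positive one: log(1 + exp(x)).
softplus <- function(x) log1p(exp(x))

# Hypothetical two-column output of the previous layer for three observations
# (made-up numbers). matrix() fills column by column, so column 1 is
# c(1, 3, 5) and column 2 is c(-2, 0, 2).
t_out <- matrix(c(1.0, 3.0, 5.0,
                  -2.0, 0.0, 2.0), ncol = 2)

mu    <- t_out[, 1]            # per-observation mean
sigma <- softplus(t_out[, 2])  # per-observation standard deviation, always > 0

# Hypothetical observed responses, scored under Normal(mu, sigma).
y   <- c(1.2, 2.5, 6.0)
nll <- -sum(dnorm(y, mean = mu, sd = sigma, log = TRUE))

print(sigma)
print(nll)
```

Softplus is a common choice here because the scale of a normal distribution must be positive while the raw layer output is unconstrained.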