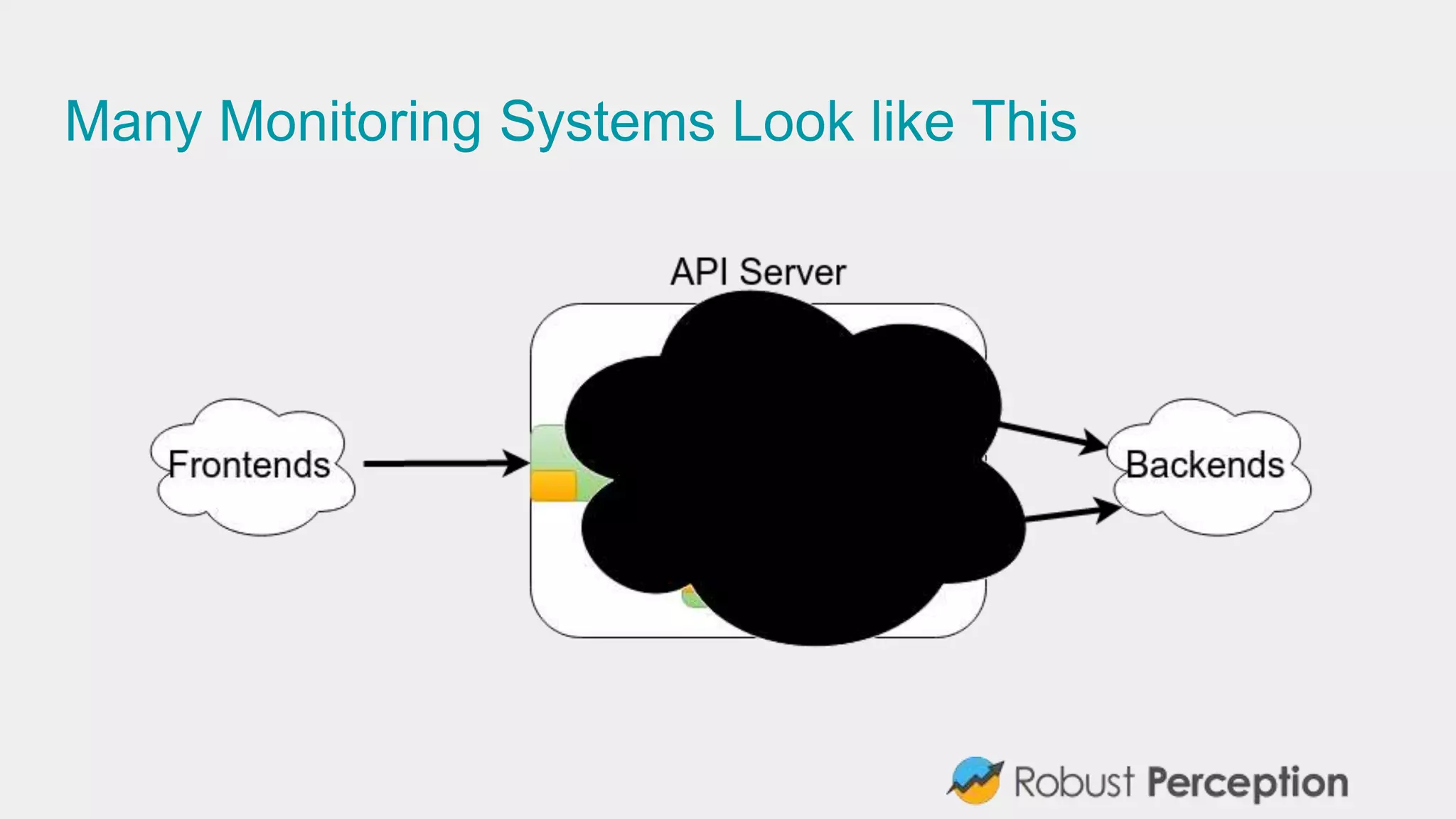

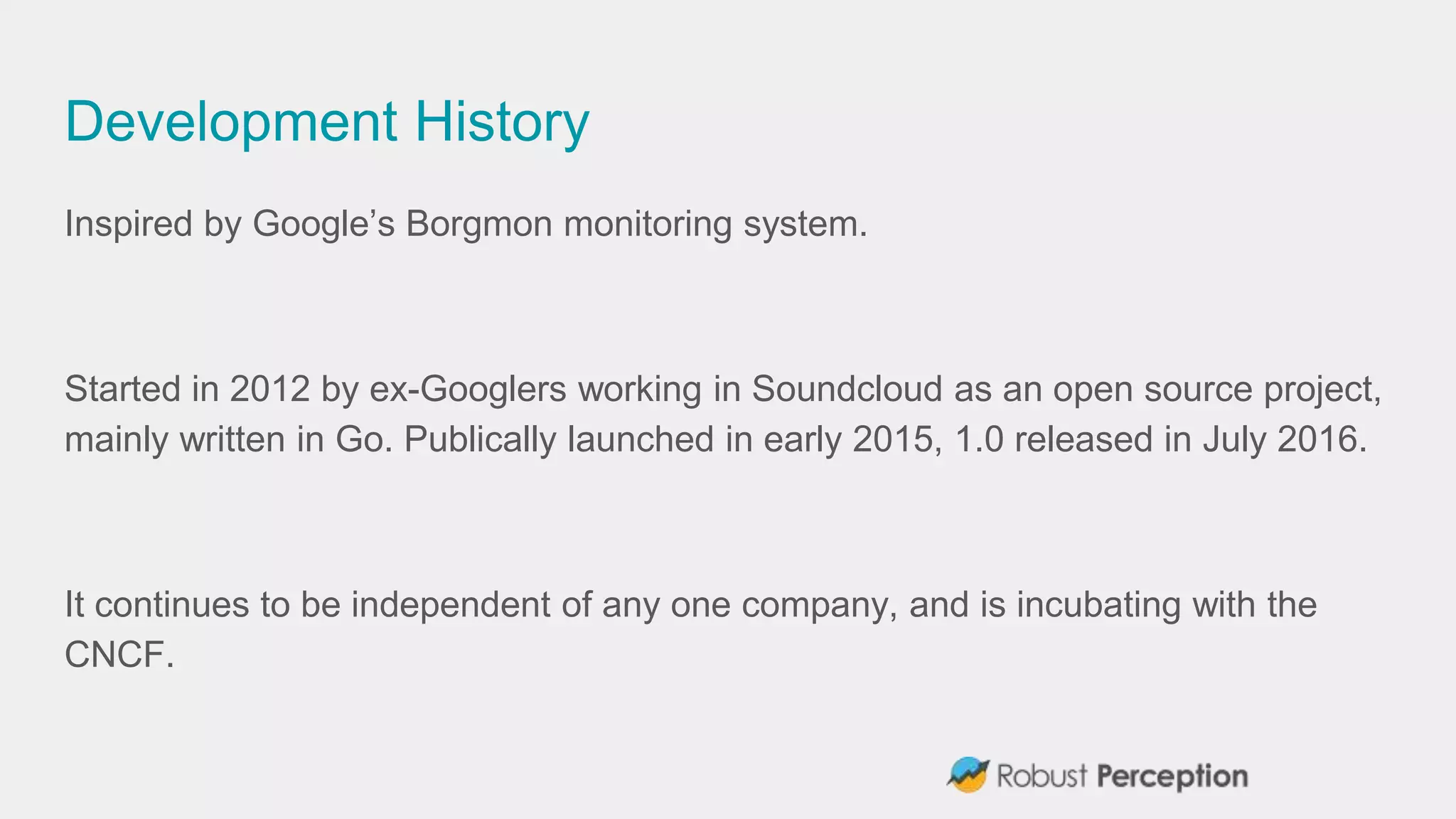

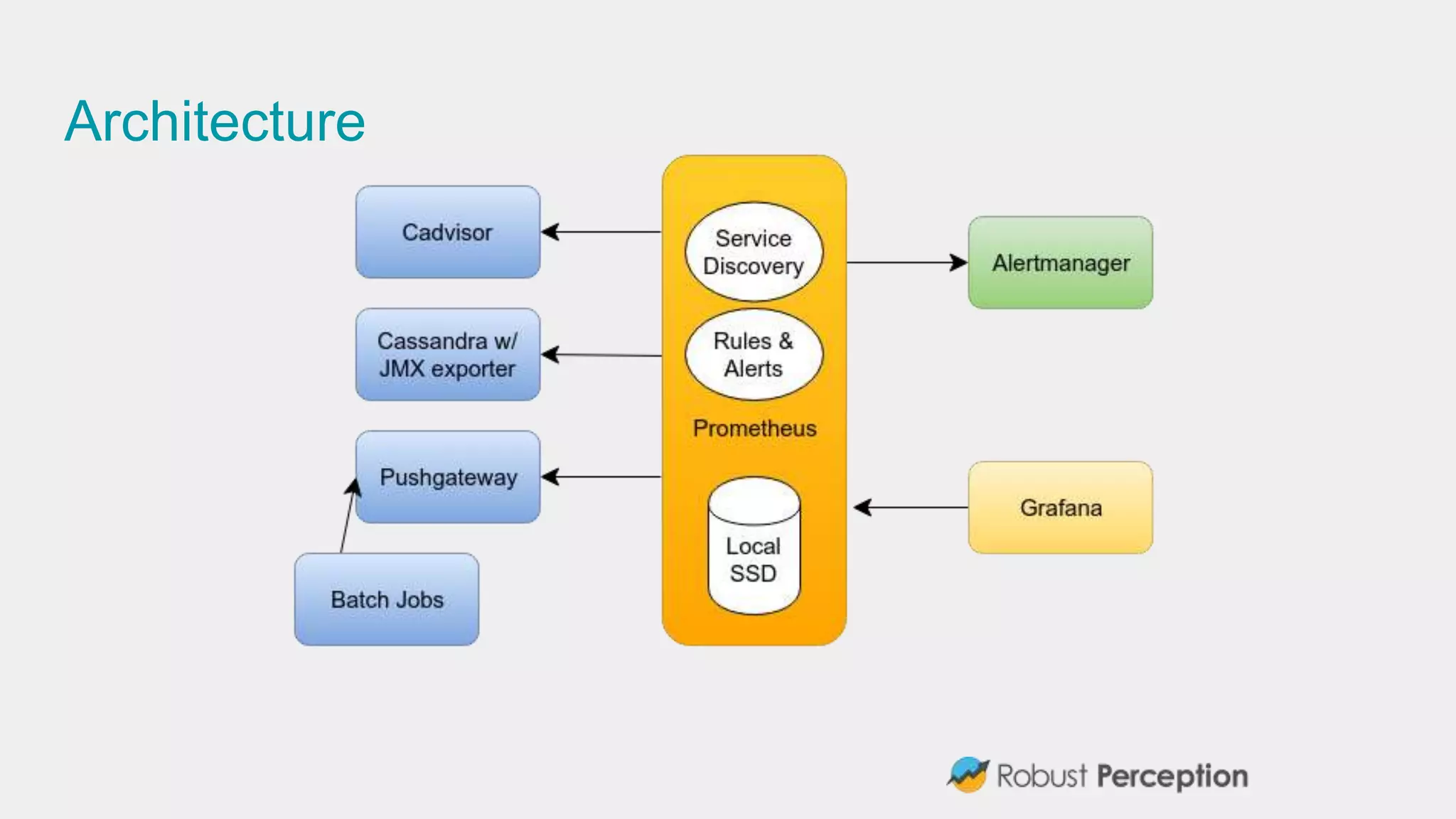

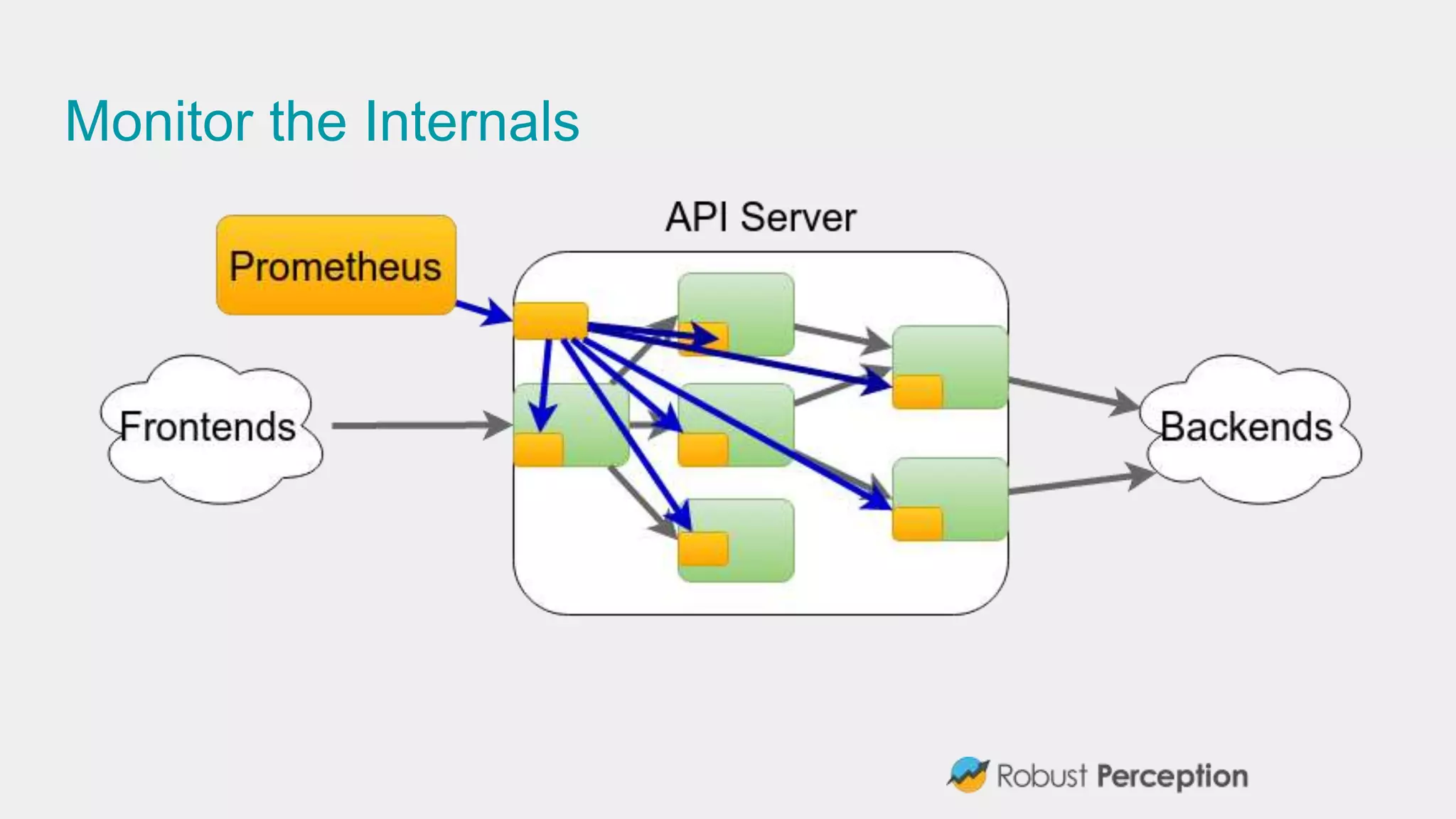

The document introduces Brian Brazil, founder of Prometheus and core developer, highlighting the importance of monitoring systems for reliable operations and the challenges faced by many organizations. Prometheus, an open-source metrics-based time series database, provides efficient monitoring solutions and has a robust community with over 500 users. It emphasizes best practices for effective monitoring while suggesting ways to avoid common pitfalls, focusing on key metrics and simplicity in analysis.

![Great for Aggregation

topk(5,

sum by (image)(

rate(container_cpu_usage_seconds_total{

id=~"/system.slice/docker.*"}[5m]

)

)

)](https://image.slidesharecdn.com/prometheus-japan-161115062158/75/Prometheus-Open-Source-Forum-Japan-16-2048.jpg)