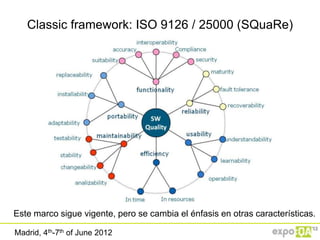

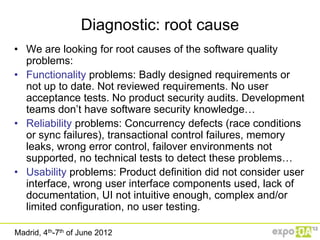

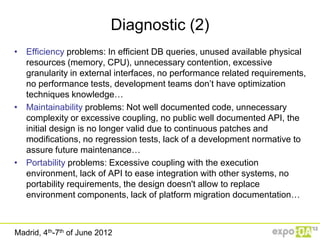

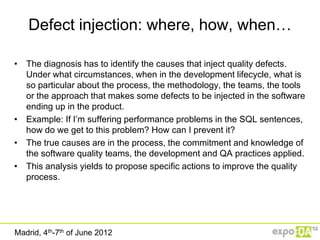

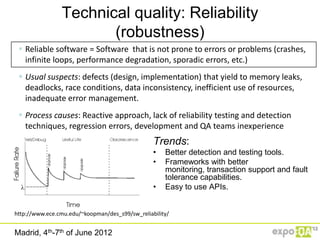

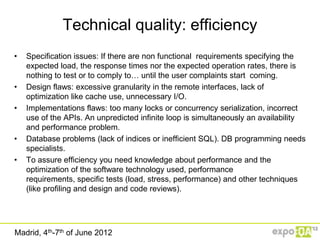

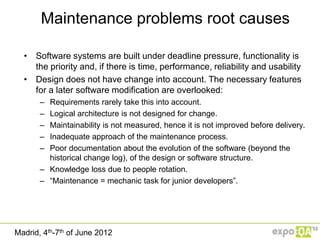

The document discusses the impact of the global economic crisis on software quality, emphasizing the need for improved processes amid reduced investment in software development and maintenance. It outlines trends in software quality and the increasing complexity of software systems, and offers a diagnostic framework for identifying and addressing quality issues. Recommendations include integrating quality into the development process, leveraging open-source frameworks, and applying efficient technical solutions to improve software quality.

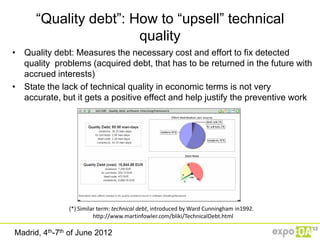

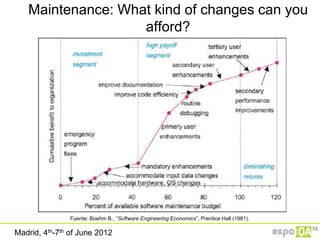

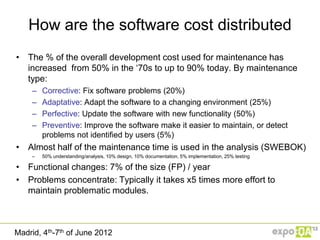

![Technical quality: Maintainability

• A characteristic hidden from the end user, unless the user has to pay the corrective/perfective maintenance cost

• A significant share of software cost is due to maintenance. Corrective maintenance is important, but statistically, perfective maintenance consumes a bigger slice of the cake.

• Any maintainability improvement will have a significant impact on software cost and on the ability to respond to changing demands.

• Software maintenance depends on the organization, the infrastructure, and how the software is built.

• “The software is not usually designed for change. Even when it complies with the operational specification, its design and documentation is usually poor for maintenance”. Review “Lehman’s laws” of software evolution [*]

• In times of crisis, is it useful to do preventive maintenance to improve this characteristic and reduce cost?

[*] http://en.wikipedia.org/wiki/Software_evolution

Madrid, 4th-7th of June 2012](https://image.slidesharecdn.com/15filefinalpresentationoptimyth-120620094007-phpapp02/85/Process-Improvement-for-better-Software-Technical-Quality-under-Global-Crisis-Scenario-18-320.jpg)
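
The closing question on this slide can be framed as a simple break-even calculation. The sketch below is a minimal illustration, assuming that a preventive (perfective) refactoring has a one-off cost and then saves a fixed amount of effort on every later change; the function names and the person-day figures are hypothetical and are not taken from the presentation.

```python
# Hypothetical break-even sketch for preventive maintenance.
# All inputs are illustrative assumptions, not data from the presentation.


def payback_months(refactoring_cost: float,
                   saving_per_change: float,
                   changes_per_month: float) -> float:
    """Months until a one-off refactoring pays for itself.

    refactoring_cost:  one-off effort spent improving maintainability
    saving_per_change: effort saved on each later change thanks to it
    changes_per_month: expected rate of change requests
    """
    monthly_saving = saving_per_change * changes_per_month
    if monthly_saving <= 0:
        return float("inf")  # the refactoring never pays back
    return refactoring_cost / monthly_saving


def worth_it(refactoring_cost: float,
             saving_per_change: float,
             changes_per_month: float,
             horizon_months: float) -> bool:
    """True if the refactoring pays back within the planning horizon."""
    months = payback_months(refactoring_cost, saving_per_change,
                            changes_per_month)
    return months <= horizon_months


if __name__ == "__main__":
    # Illustrative inputs in person-days: 20 days of refactoring,
    # half a day saved per change, 8 changes per month.
    months = payback_months(20, 0.5, 8)
    print(f"payback: {months:.1f} months")  # payback: 5.0 months
    print(worth_it(20, 0.5, 8, horizon_months=6))  # True
```

The planning horizon is the lever that matters under budget pressure: the same refactoring that pays back in five months is rejected when only a four-month return is acceptable.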

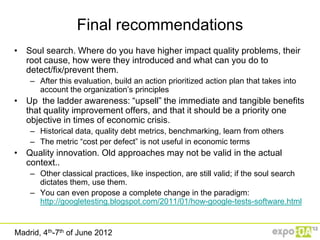

![Quality toolbox: prevention techniques

• Reuse of certified components

• [*] Training and software quality awareness

• [*] Formal inspections or reviews. http://www.gilb.com/Inspection

• Specialized techniques: Poka-Yoke (ポカヨケ “error proof”), QFD (Quality Function Deployment)

• [*] Prototyping

• Patterns (design, requirements, code, architecture, documentation…)

• [*] Quality analysis (and management) tools

• [*] Include quality scores in status reports

• Agile development (TDD, pair programming)

In these times of economic crisis, implement the practices that produce value in the short/mid term (marked [*])

](https://image.slidesharecdn.com/15filefinalpresentationoptimyth-120620094007-phpapp02/85/Process-Improvement-for-better-Software-Technical-Quality-under-Global-Crisis-Scenario-26-320.jpg)
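
Poka-Yoke comes from manufacturing. In software, one common reading is to design interfaces so that a mistake cannot be expressed in the first place, rather than detected later in testing. The sketch below is a minimal illustration of that reading, not the presenters' method; the `Severity` and `Defect` names are hypothetical. A closed enumeration replaces free-form strings, and validation at construction time means an invalid object never exists.

```python
# Minimal poka-yoke ("mistake-proofing") sketch: make invalid input
# impossible to pass along instead of detecting it later.
# Severity and Defect are hypothetical names for illustration only.
from dataclasses import dataclass
from enum import Enum


class Severity(Enum):
    # A closed set of values replaces free-form strings such as "hgih".
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Defect:
    title: str
    severity: Severity

    def __post_init__(self):
        # Reject bad values at construction time, so no invalid Defect exists.
        if not self.title.strip():
            raise ValueError("title must not be empty")
        if not isinstance(self.severity, Severity):
            raise TypeError("severity must be a Severity member")


if __name__ == "__main__":
    ok = Defect("Null pointer on login", Severity.HIGH)
    print(ok.severity.name)  # HIGH
    try:
        Defect("Typo in label", "hgih")  # a misspelled string is caught at once
    except TypeError as err:
        print(err)  # severity must be a Severity member
```

Because the object is frozen, the checked state cannot be changed afterwards, so every later consumer can rely on it without re-validating.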