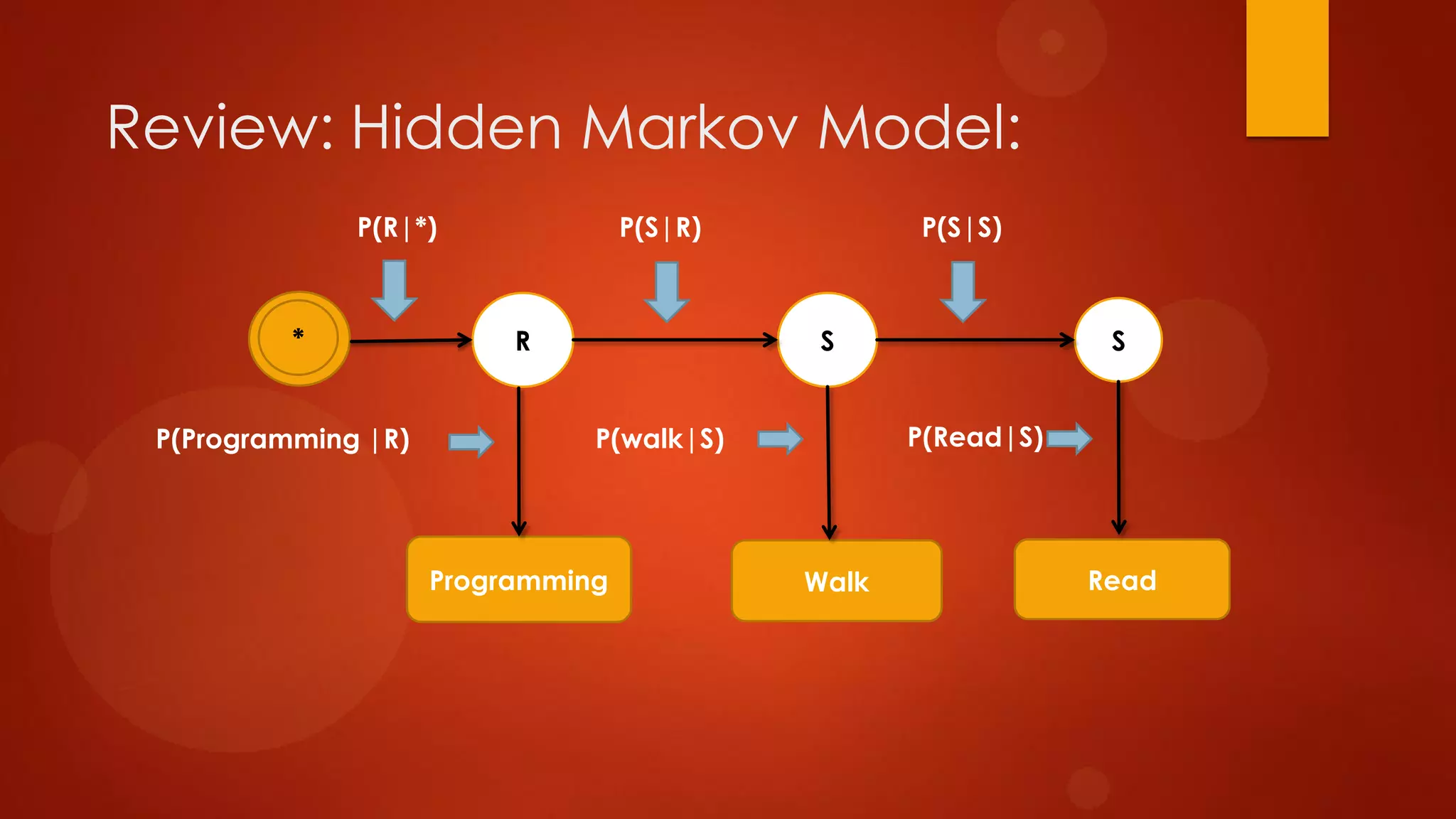

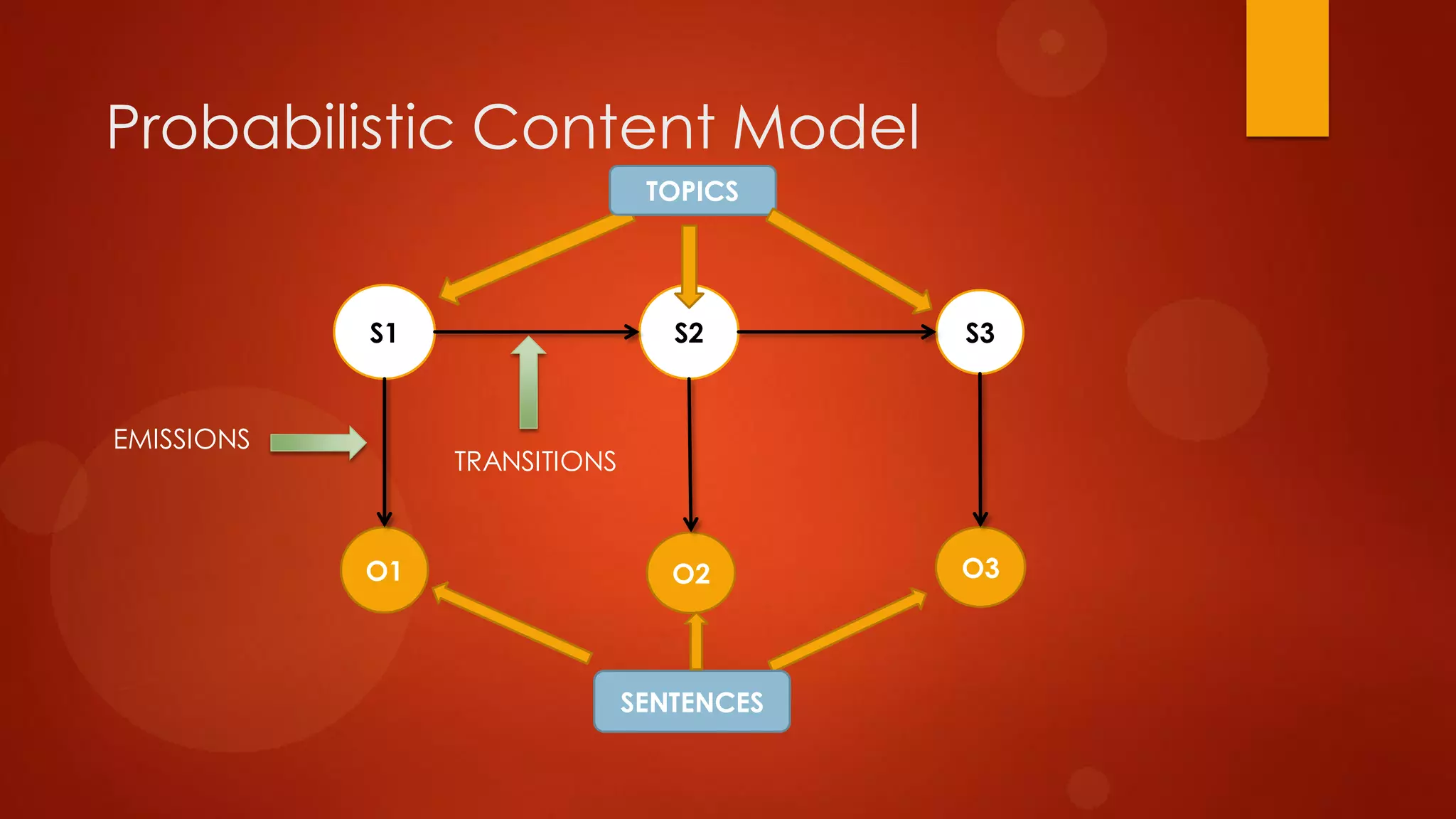

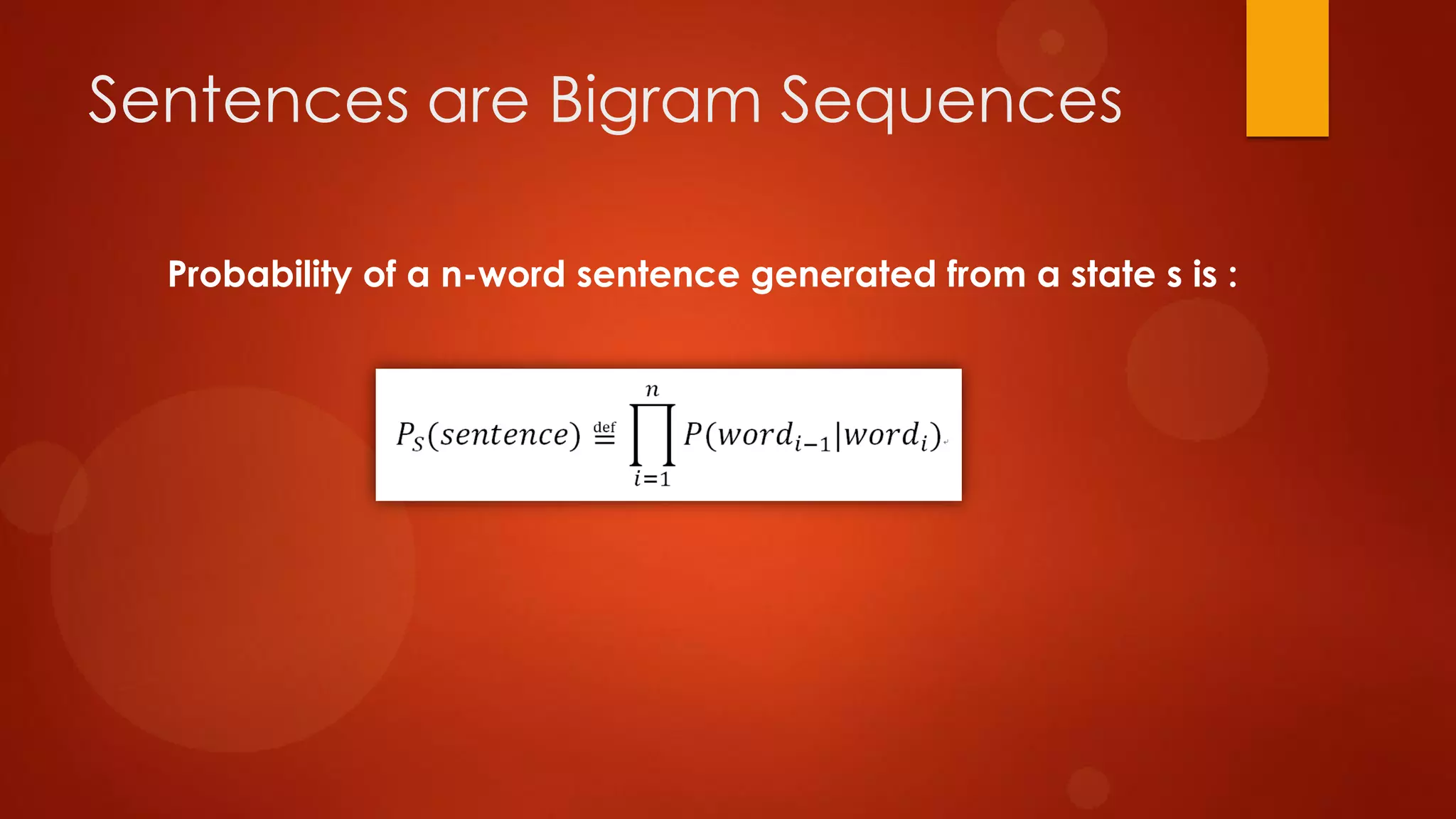

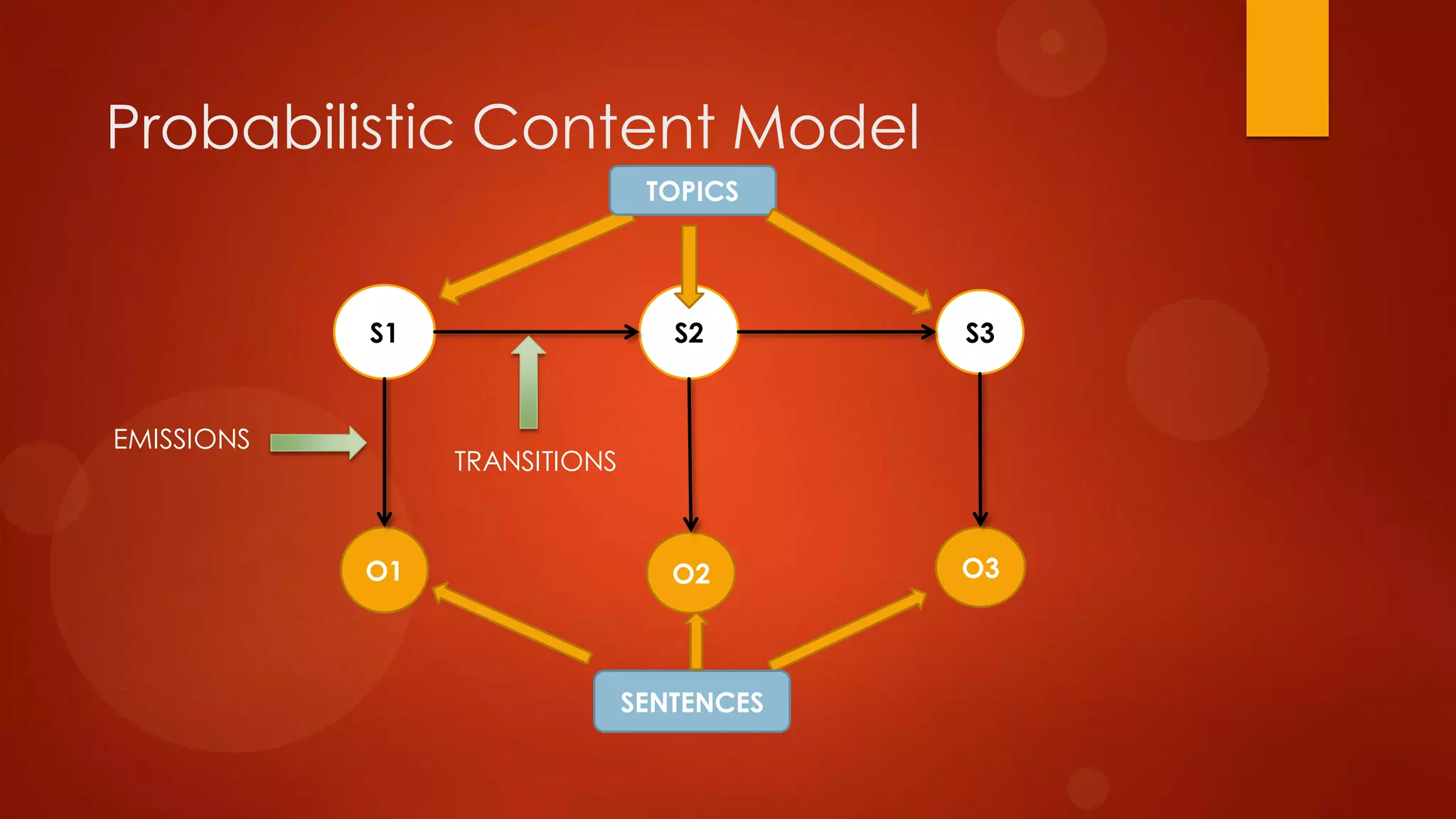

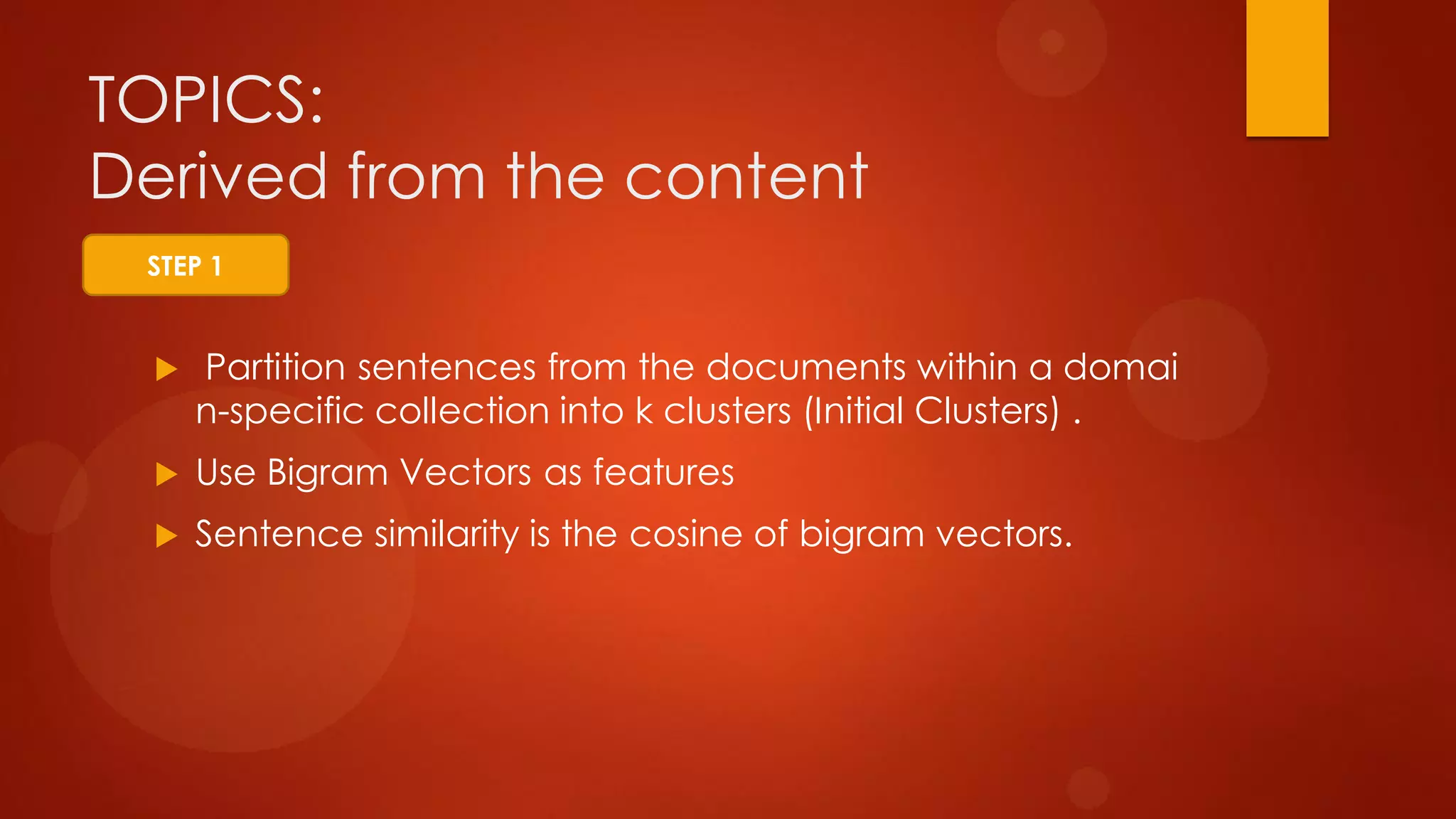

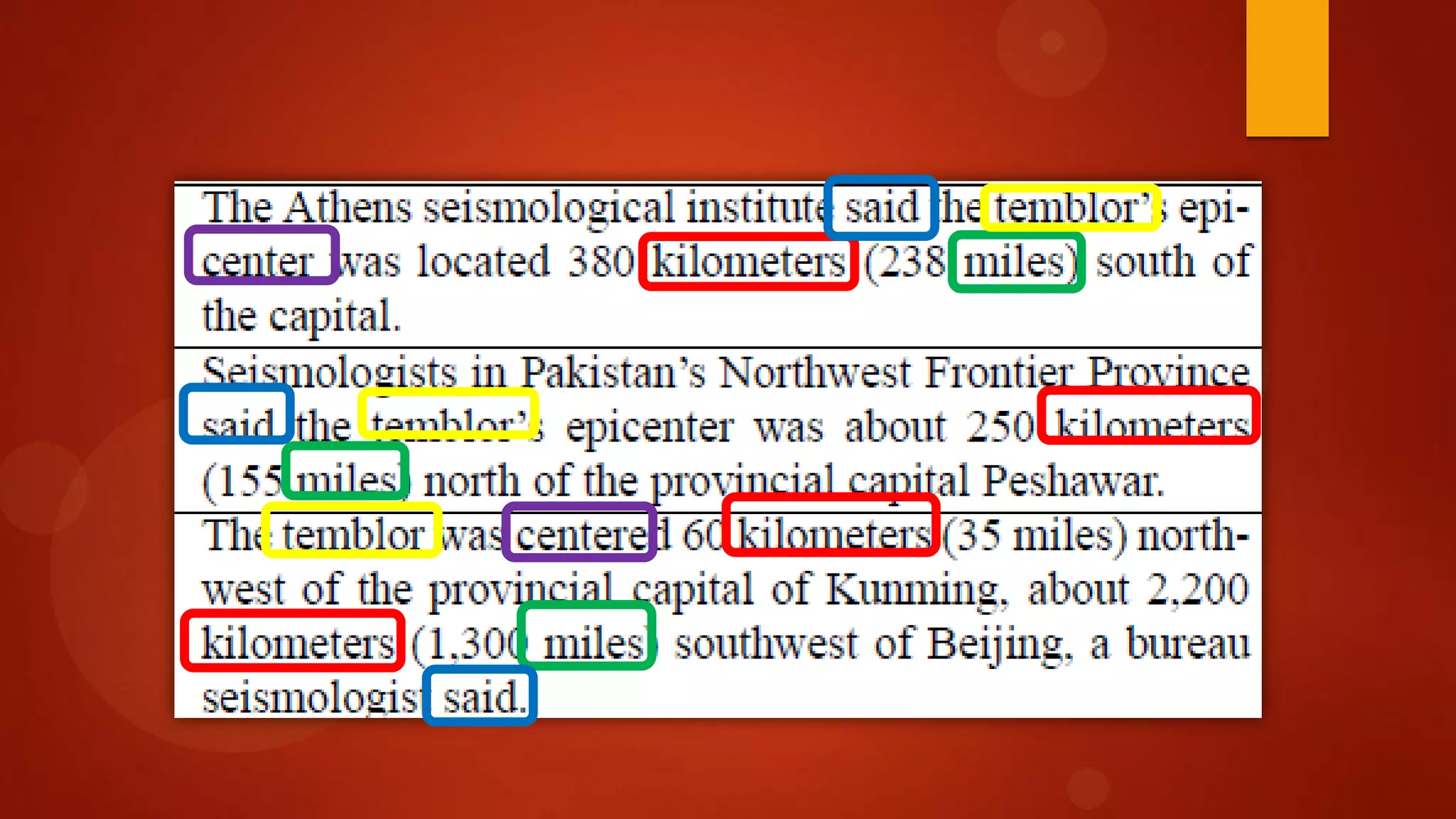

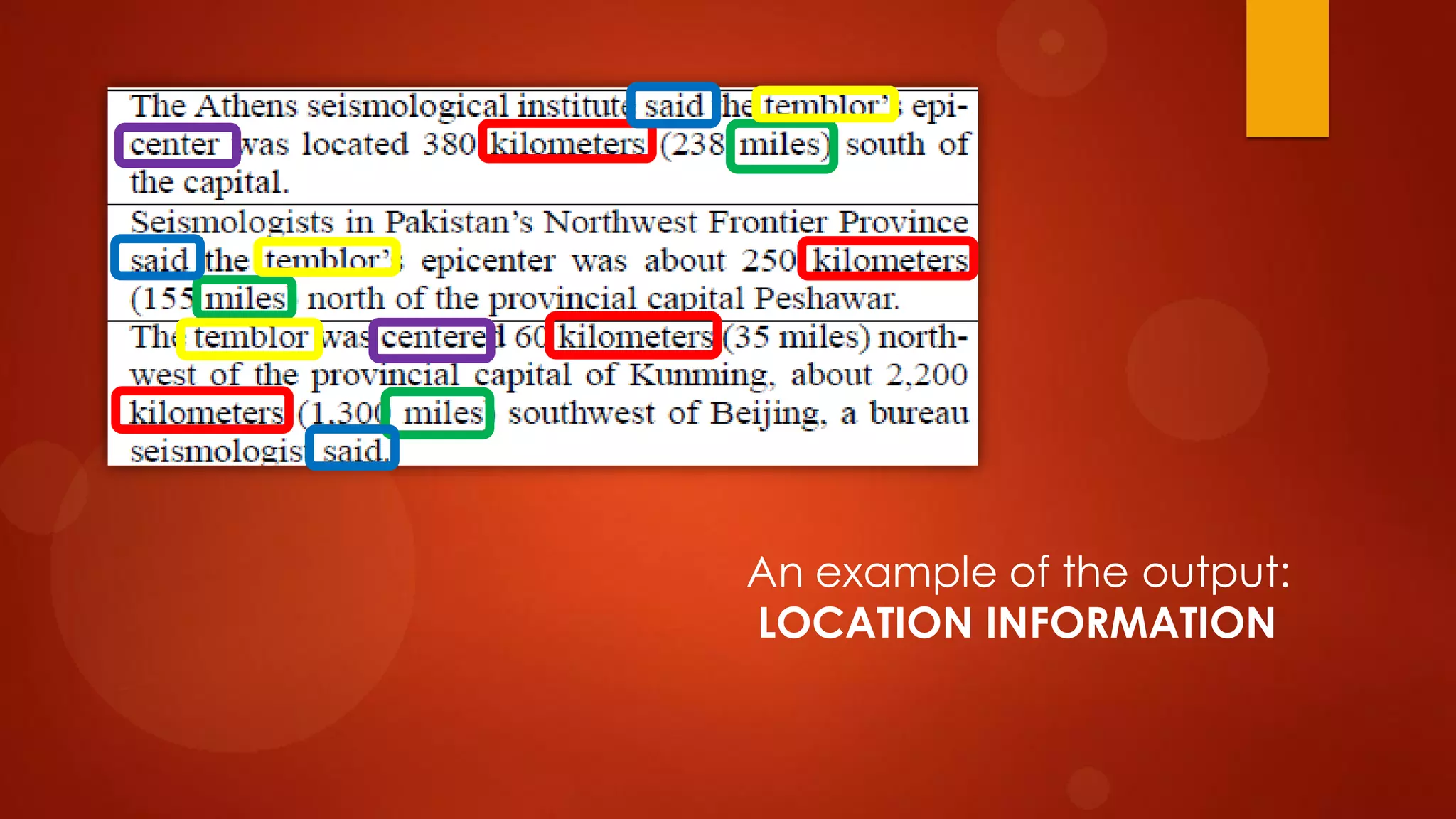

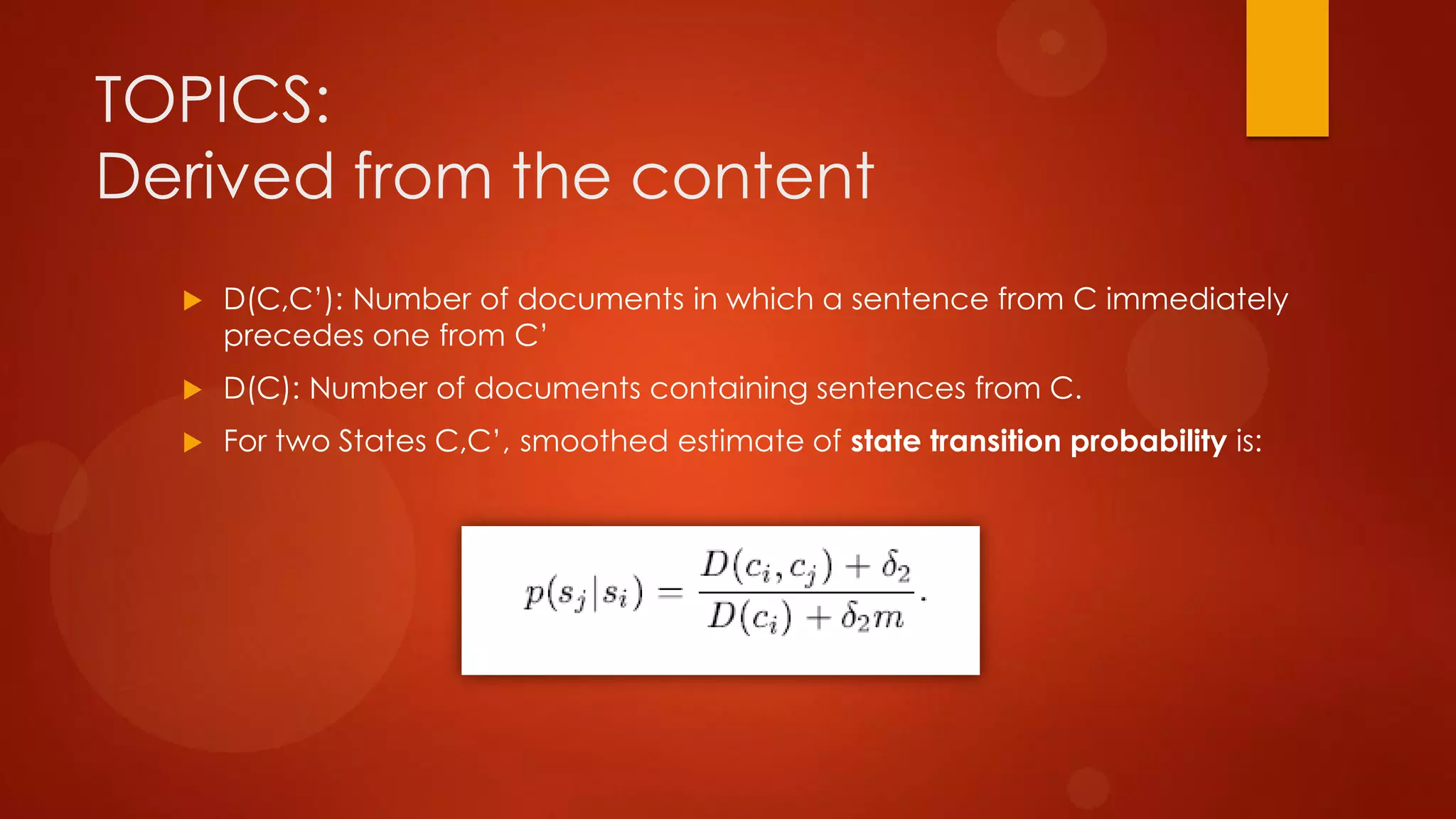

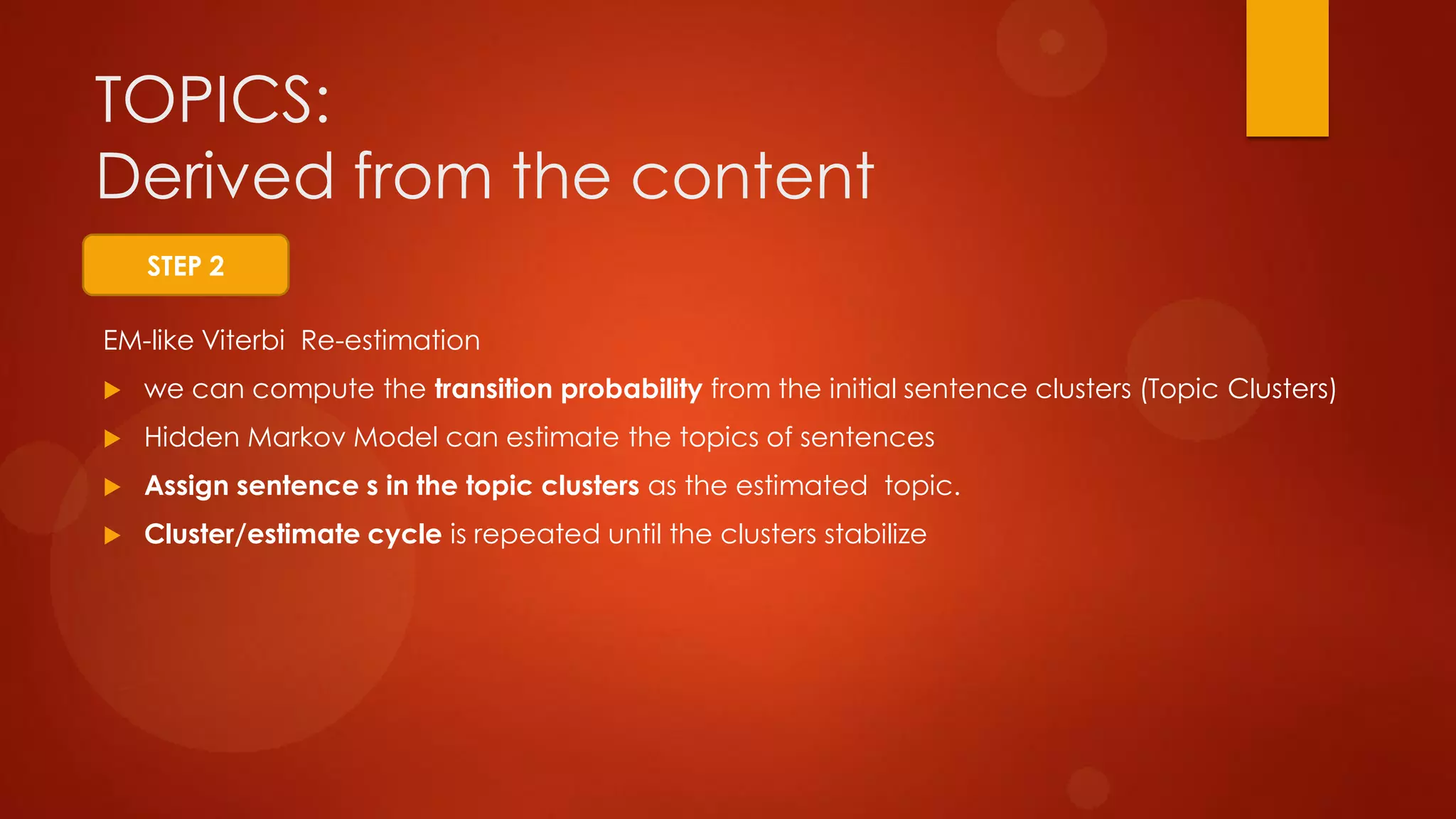

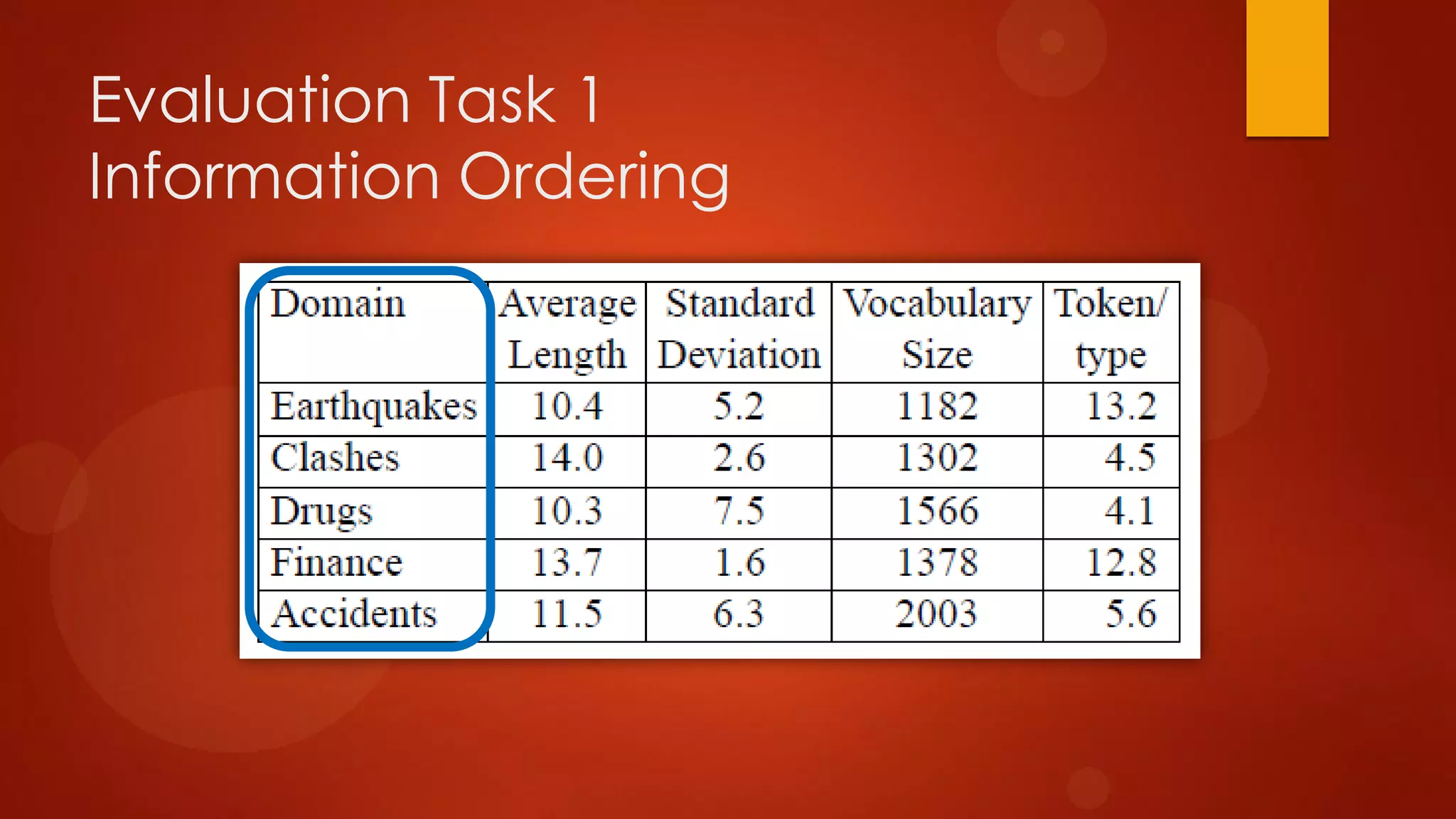

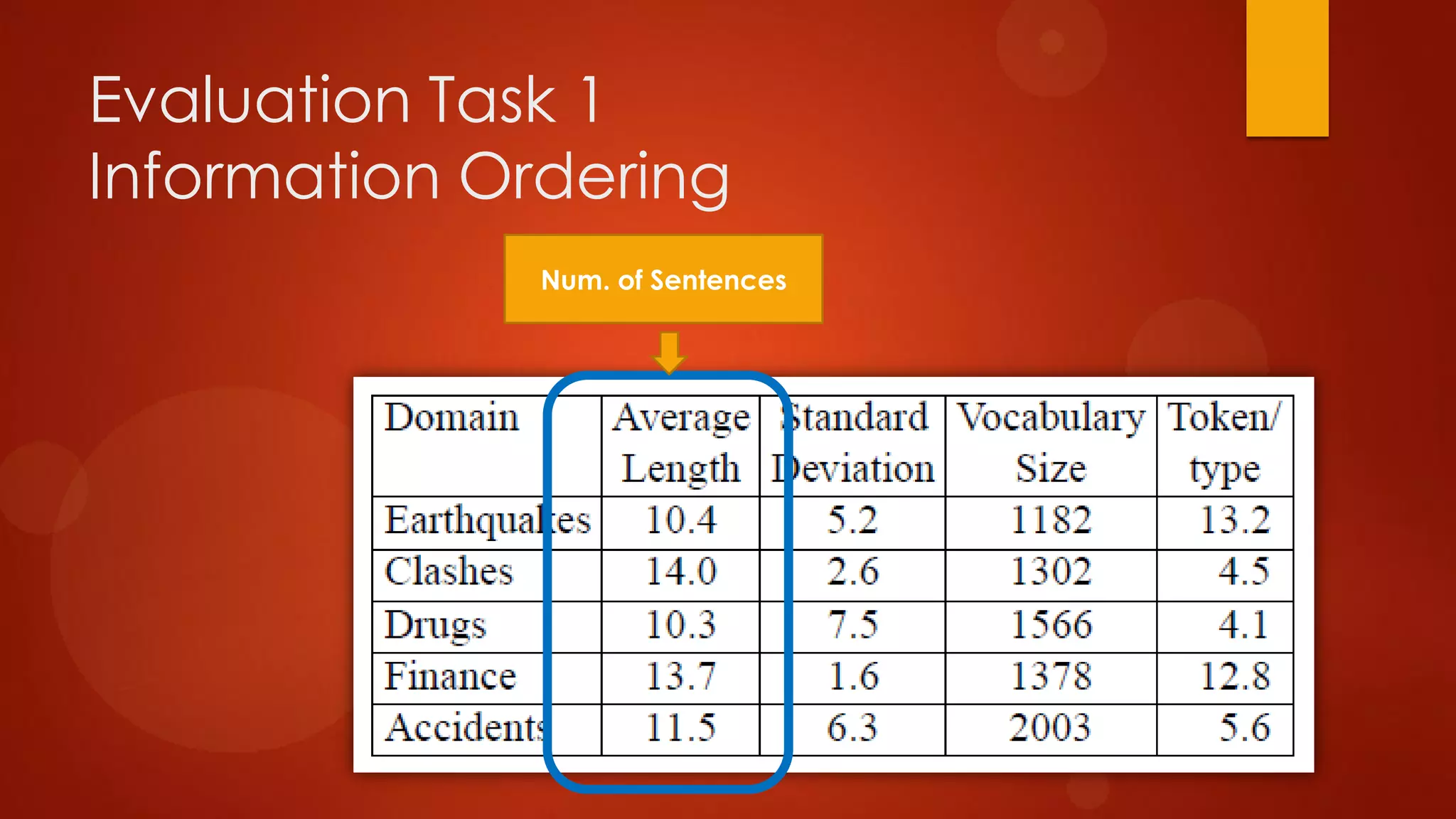

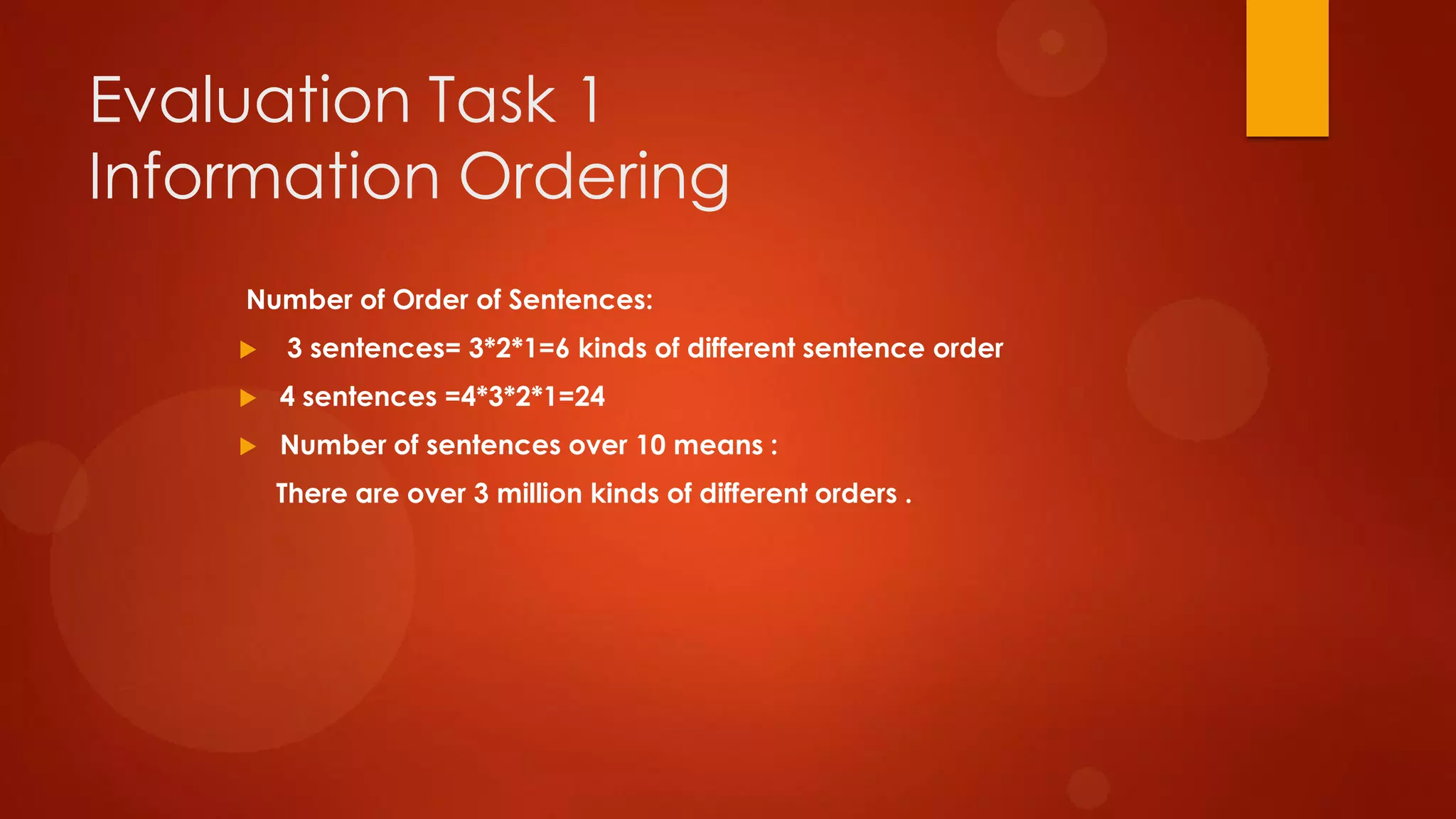

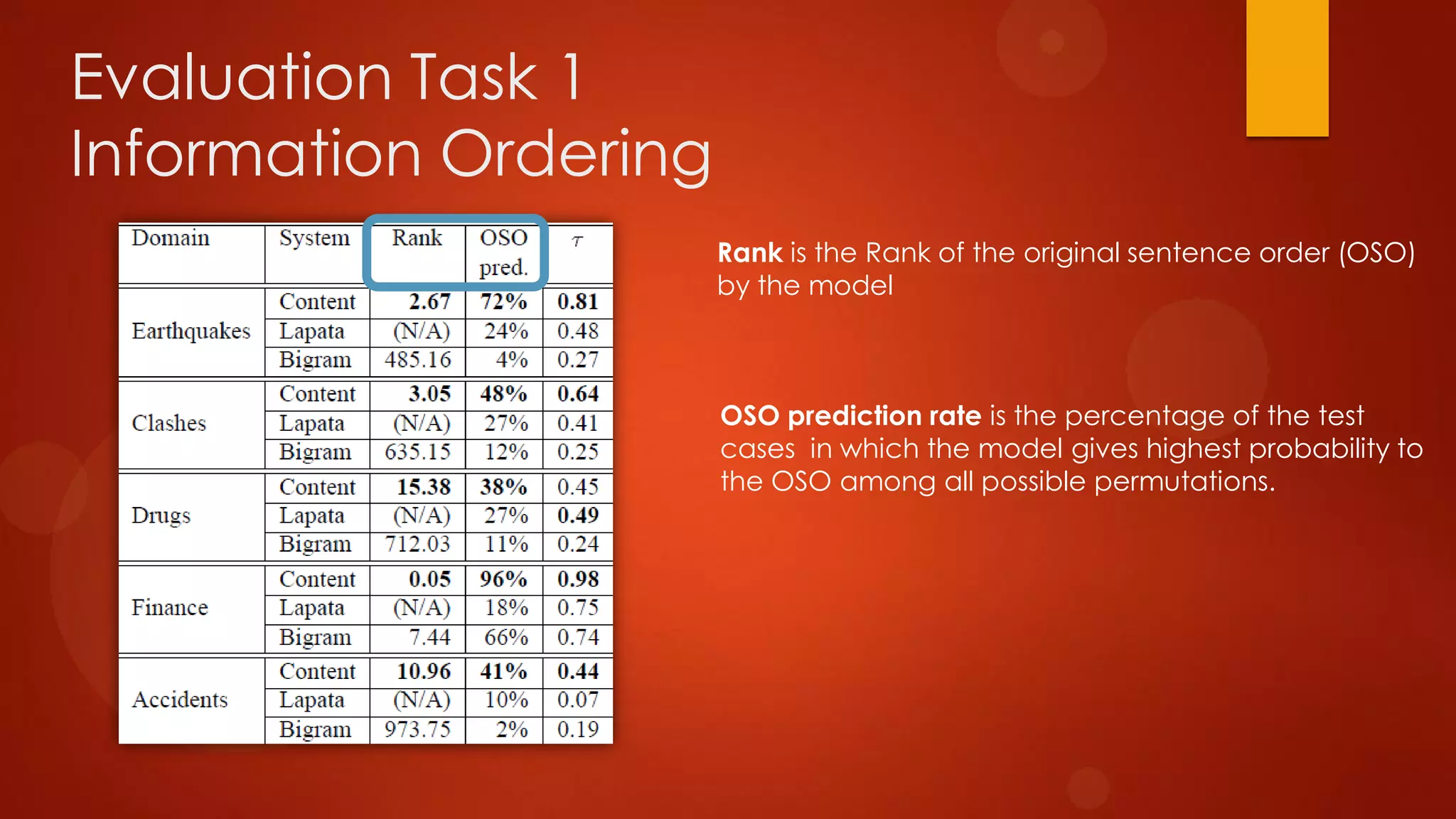

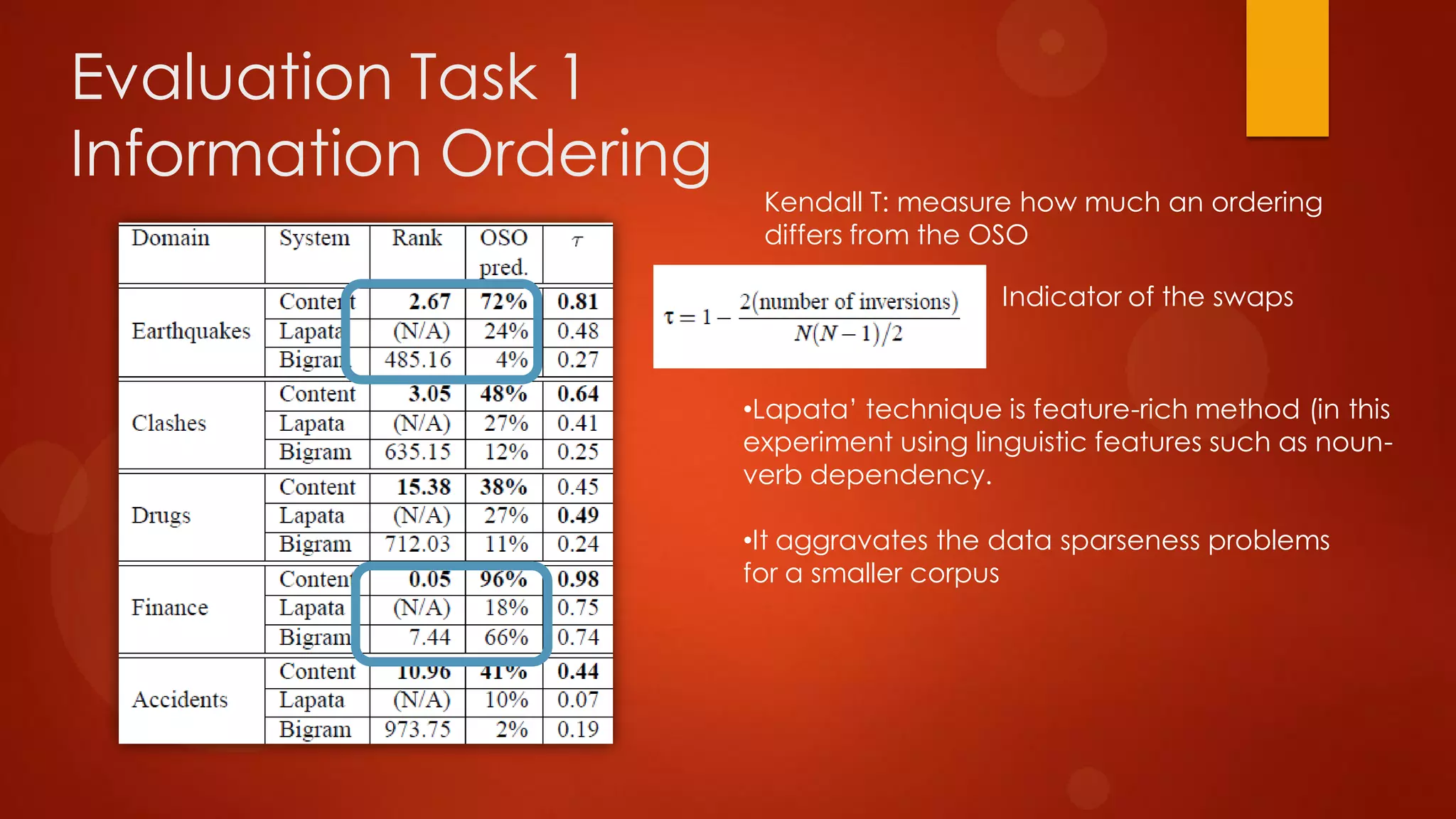

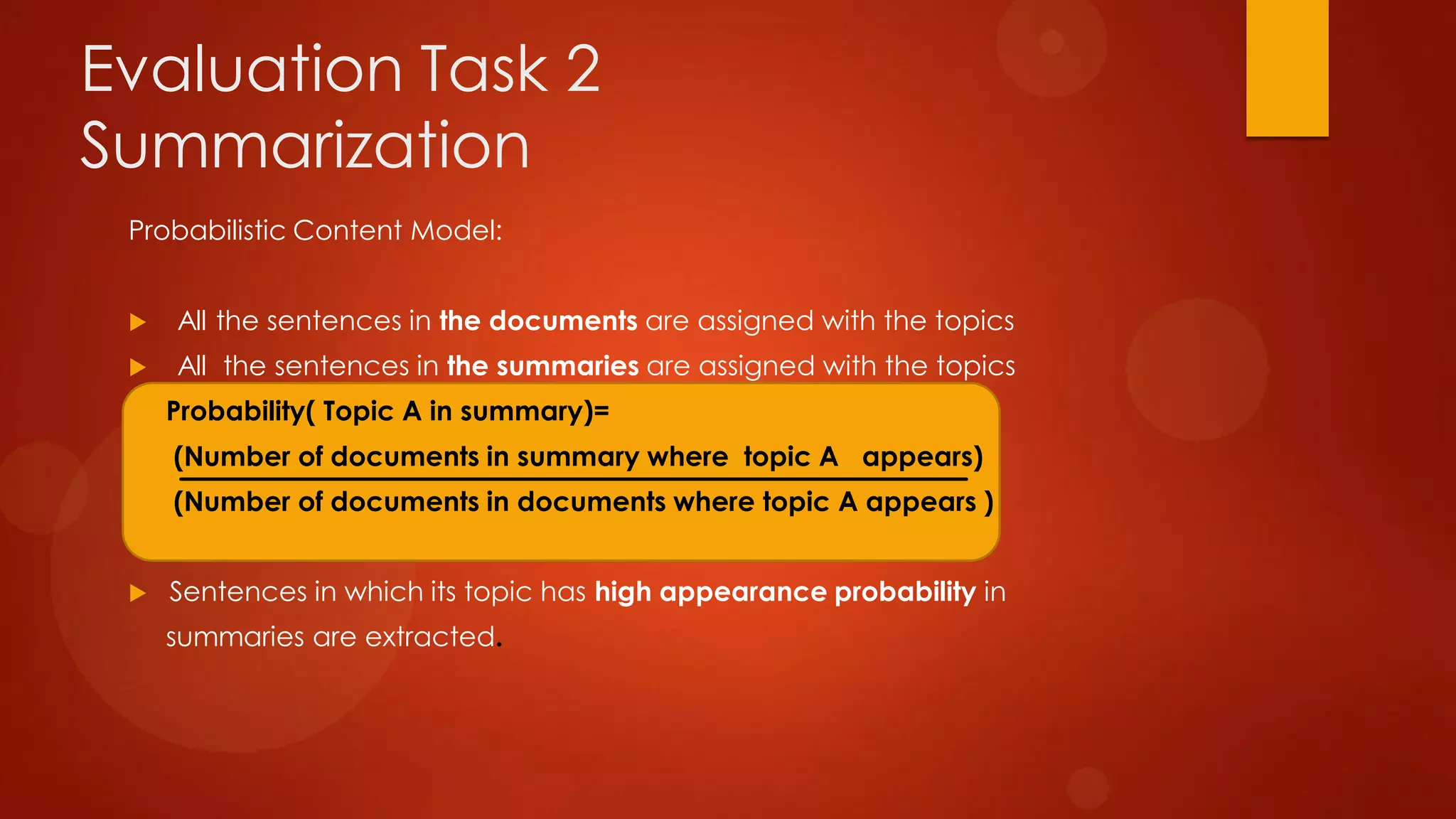

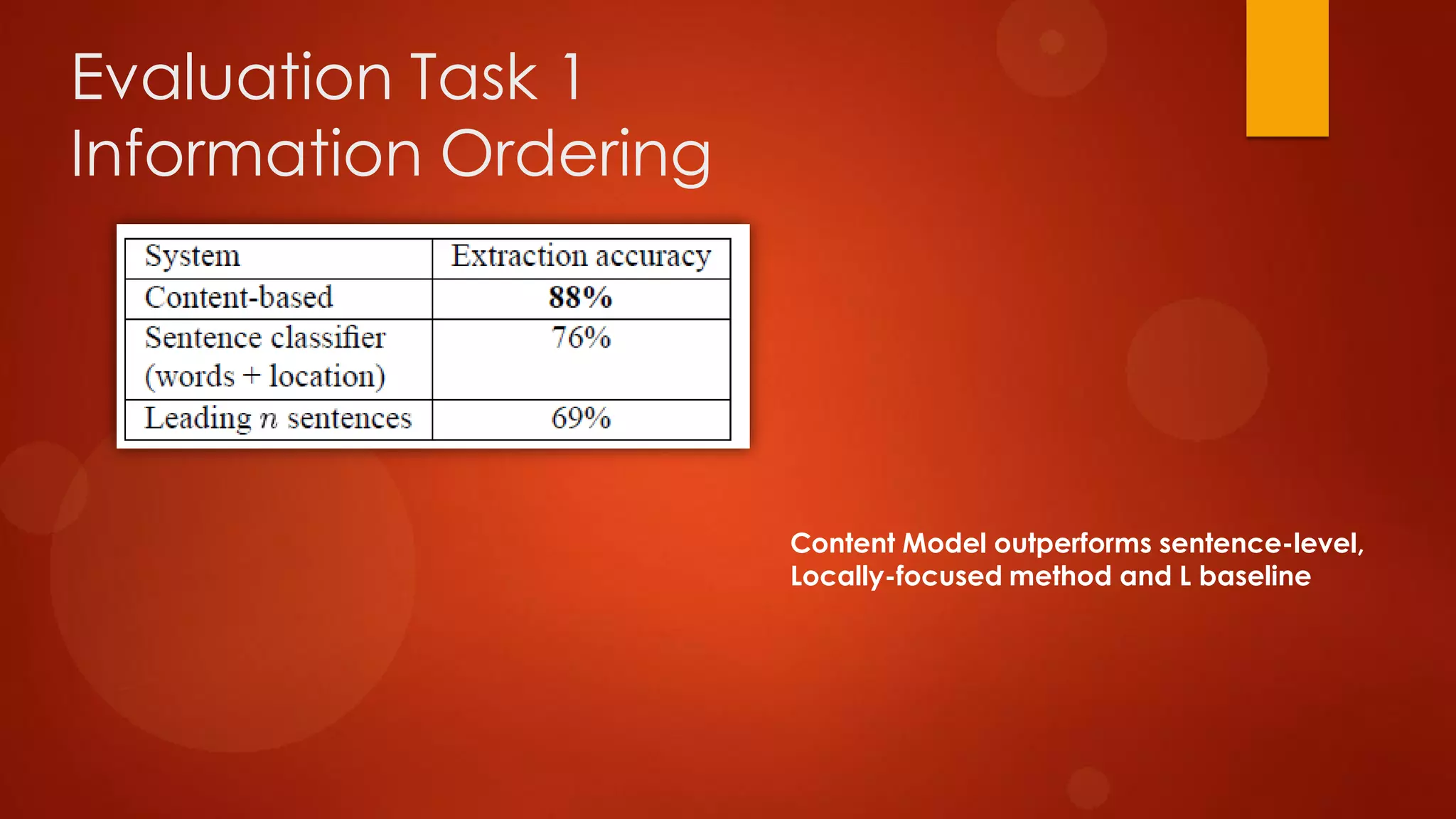

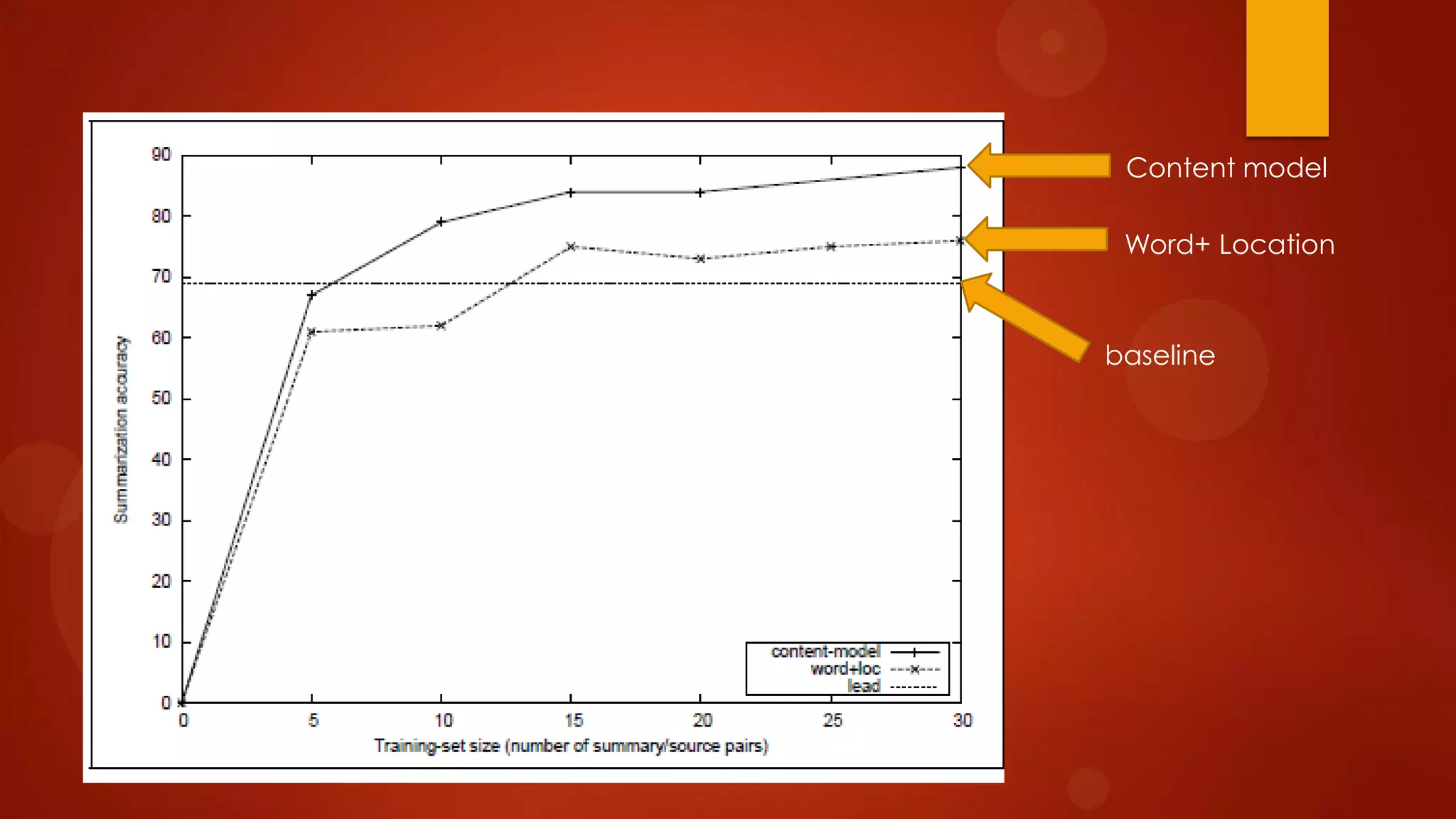

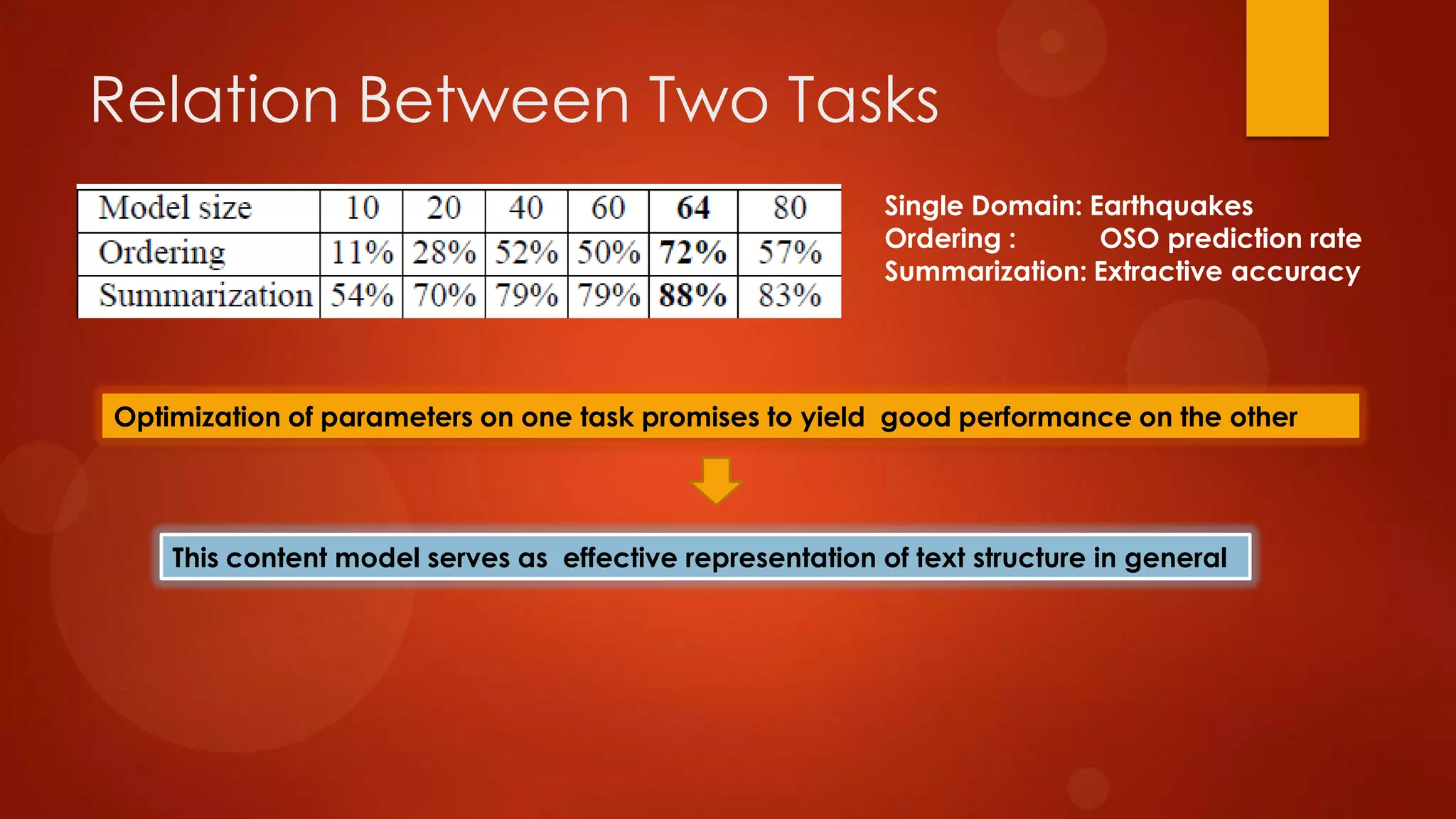

This document presents a probabilistic content model that uses a hidden Markov model (HMM) to capture the topic structure of texts. The model is applied to two tasks: sentence ordering and extractive summarization. For sentence ordering, it generates all possible orderings of a text's sentences, computes the probability of each under the model, and ranks them; the evaluation measures how well the original sentence order is recovered. For summarization, it assigns topic probabilities to sentences based on topic distributions and extracts the sentences with high topic probabilities to form the summary. In the evaluation, the content model outperforms the baselines on both tasks, indicating that it captures text structure effectively.
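
The sentence-ordering procedure described above (enumerate the orderings, score each under the hidden Markov model, rank them) can be illustrated with a minimal sketch. Everything concrete here is an assumption made for illustration and is not taken from the slides: the three-topic toy model, the probability values in `START`, `TRANS`, and `EMIT`, the function names, and the use of the forward algorithm to sum over topic paths (a Viterbi best-path score would be another reasonable choice). Exhaustive enumeration is only feasible for short texts, since the number of orderings grows factorially.

```python
import itertools
import math

# Toy model (all numbers are illustrative assumptions, not values from the source).
# Three hidden topics; START[s] is P(first topic = s), TRANS[p][s] is P(s | p).
START = [0.8, 0.1, 0.1]
TRANS = [
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
    [0.1, 0.1, 0.8],
]
# EMIT[i][s] is P(sentence i | topic s); sentence i is most typical of topic i.
EMIT = [
    [0.8, 0.1, 0.1],
    [0.1, 0.8, 0.1],
    [0.1, 0.1, 0.8],
]


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    if m == float("-inf"):
        return m
    return m + math.log(sum(math.exp(v - m) for v in values))


def sequence_log_prob(order, start=START, trans=TRANS, emit=EMIT):
    """Forward algorithm: log-probability of the sentences in the given order,
    summed over all hidden topic paths."""
    n_states = len(start)
    alpha = [math.log(start[s]) + math.log(emit[order[0]][s])
             for s in range(n_states)]
    for sent in order[1:]:
        alpha = [
            log_sum_exp([alpha[p] + math.log(trans[p][s])
                         for p in range(n_states)])
            + math.log(emit[sent][s])
            for s in range(n_states)
        ]
    return log_sum_exp(alpha)


def rank_orderings(n_sentences):
    """Score every permutation and return them sorted from most to least probable."""
    orders = itertools.permutations(range(n_sentences))
    return sorted(orders, key=sequence_log_prob, reverse=True)


def rank_of_original(n_sentences):
    """1-based position of the original order (0, 1, ..., n-1) in the ranking."""
    ranking = rank_orderings(n_sentences)
    return ranking.index(tuple(range(n_sentences))) + 1


if __name__ == "__main__":
    for order in rank_orderings(3):
        print(order, round(sequence_log_prob(order), 3))
    print("rank of original order:", rank_of_original(3))
```

In this toy setup the original order is recovered at rank 1 because the transition probabilities favour the topic sequence 0, 1, 2 and each sentence is most likely under its own topic. With a model trained on real text, the rank of the original order among all permutations is the kind of quantity the ordering evaluation would report.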