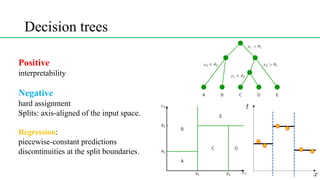

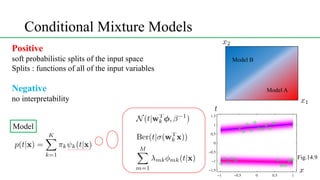

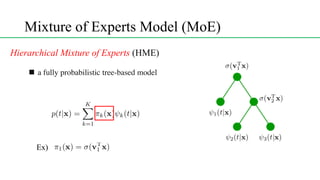

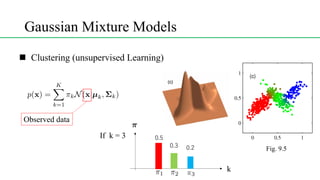

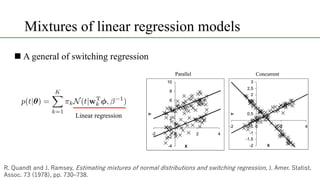

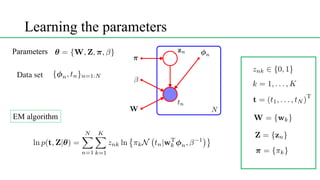

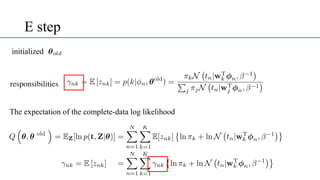

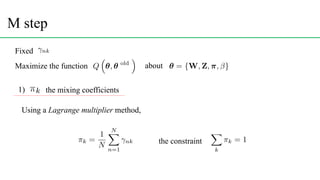

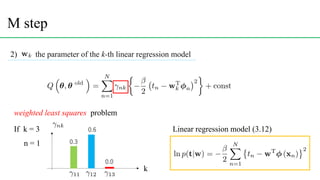

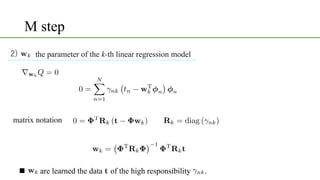

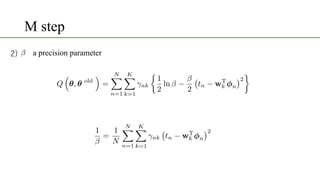

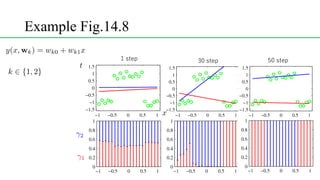

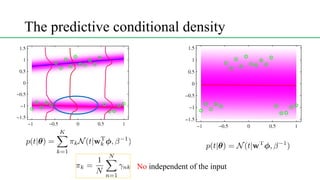

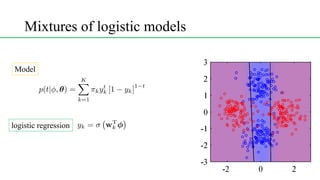

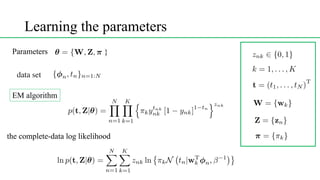

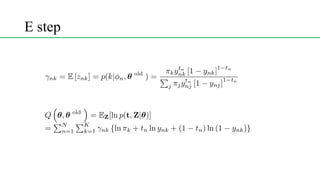

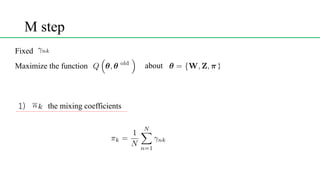

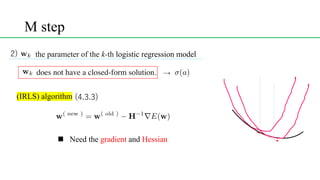

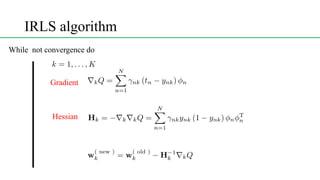

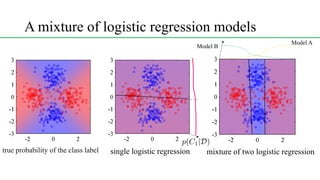

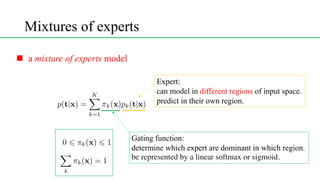

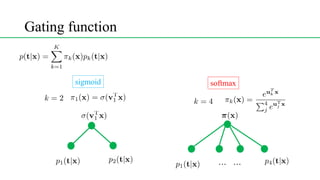

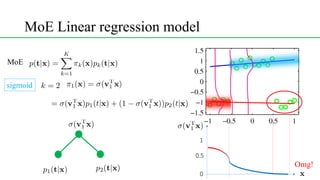

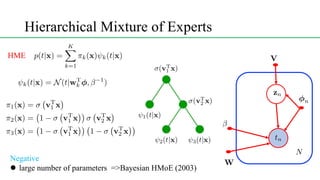

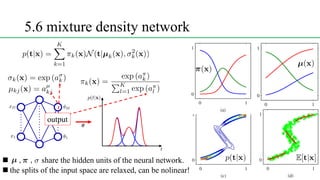

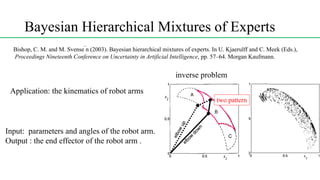

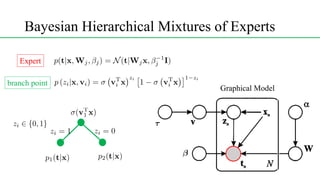

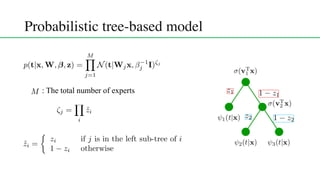

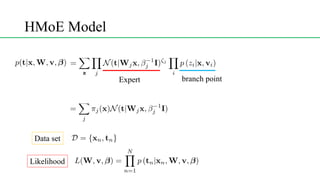

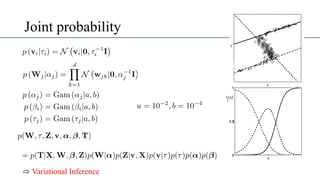

This document discusses conditional mixture models, including mixtures of linear regression models, mixtures of logistic regression models, and mixtures of experts. It describes how the parameters of these models are learned with the EM algorithm. In a mixture of experts, the mixing coefficients become functions of the input: a gating network determines which expert network is responsible for each region of the input space. Hierarchical mixtures of experts extend this idea with multiple levels of gating networks, so that each expert can itself be a mixture of experts.
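To make the EM step concrete, here is a minimal NumPy sketch for a mixture of K linear regression models. It assumes Gaussian noise with a single precision β shared by all components and constant mixing coefficients. The function name `em_mixture_of_linear_regressions`, the basis φ(x) = (1, x), and the synthetic two-line data are illustrative choices, not taken from the slides. The E step computes responsibilities. The M step then updates the mixing coefficients, solves one weighted least-squares problem per component, and re-estimates β. The function also records the log likelihood so you can check that it never decreases.

```python
import numpy as np

def em_mixture_of_linear_regressions(Phi, t, K, n_iter=200, seed=0):
    """EM for p(t|phi) = sum_k mix_k * N(t | w_k^T phi, 1/beta), beta shared by all components.

    Illustrative sketch: the name, initialisation and stopping rule are assumptions.
    """
    rng = np.random.default_rng(seed)
    N, M = Phi.shape
    W = rng.normal(size=(K, M))          # row k is the weight vector w_k
    mix = np.full(K, 1.0 / K)            # mixing coefficients pi_k
    beta = 1.0 / np.var(t)               # shared noise precision
    history = []                         # log likelihood before each M step
    for _ in range(n_iter):
        # E step: log of mix_k * N(t_n | w_k^T phi_n, 1/beta) for every (n, k)
        resid = t[:, None] - Phi @ W.T                        # shape (N, K)
        log_joint = (np.log(mix) + 0.5 * np.log(beta / (2 * np.pi))
                     - 0.5 * beta * resid**2)
        m = log_joint.max(axis=1, keepdims=True)              # log-sum-exp for stability
        log_norm = m + np.log(np.exp(log_joint - m).sum(axis=1, keepdims=True))
        history.append(float(log_norm.sum()))                 # incomplete-data log likelihood
        gamma = np.exp(log_joint - log_norm)                  # responsibilities, rows sum to 1
        # M step: closed-form maximisers of the expected complete-data log likelihood
        mix = gamma.mean(axis=0)
        for k in range(K):
            sw = np.sqrt(gamma[:, k])                         # weighted least squares via sqrt weights
            W[k] = np.linalg.lstsq(sw[:, None] * Phi, sw * t, rcond=None)[0]
        resid = t[:, None] - Phi @ W.T
        beta = N / np.sum(gamma * resid**2)                   # 1/beta = weighted mean squared residual
    return mix, W, beta, history

# Hypothetical usage: data drawn from two noisy lines, t = 2x and t = -2x + 1
rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, size=400)
z = rng.integers(0, 2, size=400)                              # hidden component label
t = np.where(z == 0, 2 * x, -2 * x + 1) + rng.normal(scale=0.1, size=400)
Phi = np.column_stack([np.ones_like(x), x])                   # basis phi(x) = (1, x)
mix, W, beta, history = em_mixture_of_linear_regressions(Phi, t, K=2)
print(mix, W, beta)
```

Because the mixing coefficients here do not depend on x, every M step has a closed form. In a mixture of experts they would be replaced by gating outputs π_k(x). The gating parameters then no longer have a closed-form update and are typically fitted iteratively, for example with IRLS.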