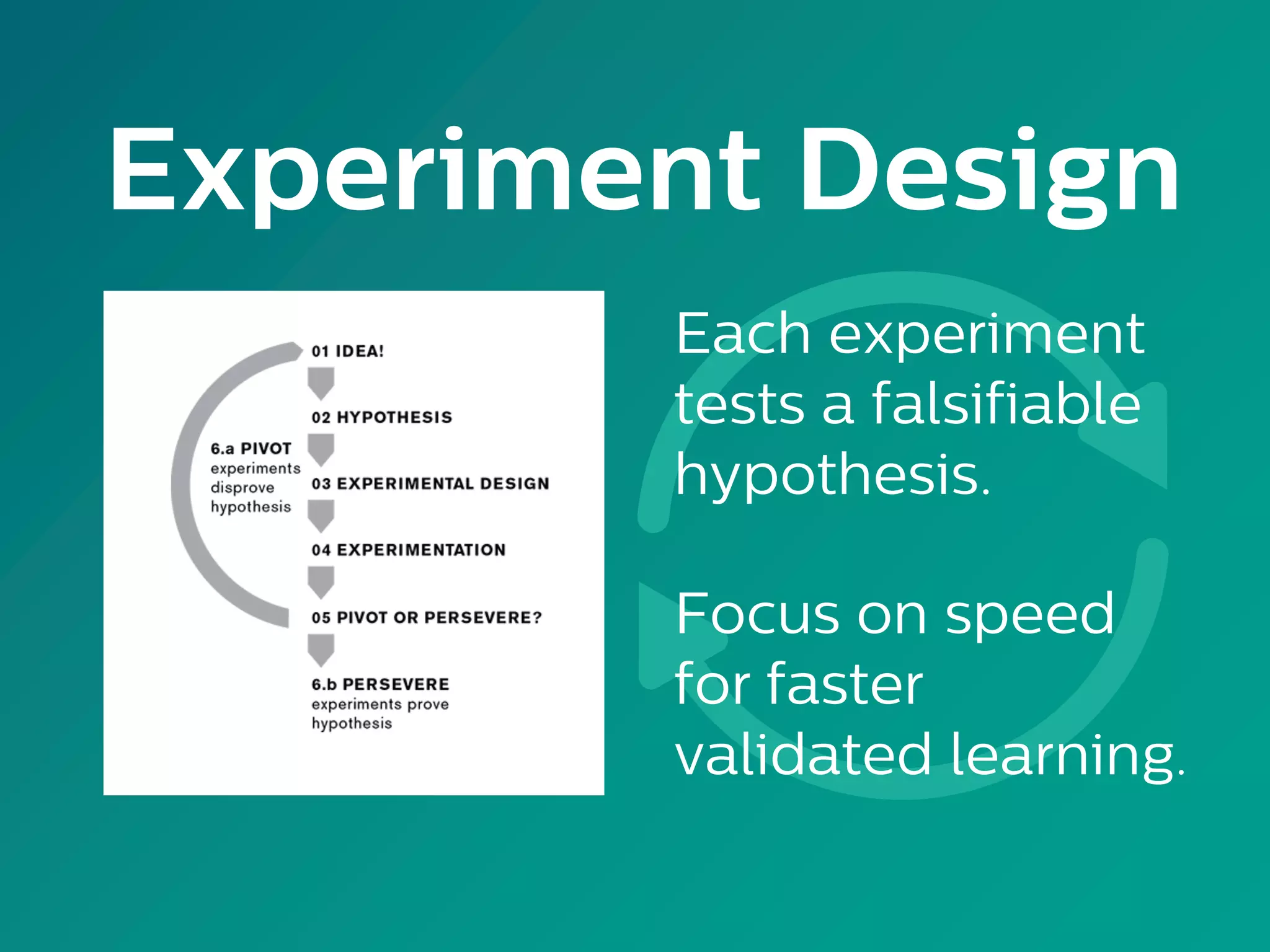

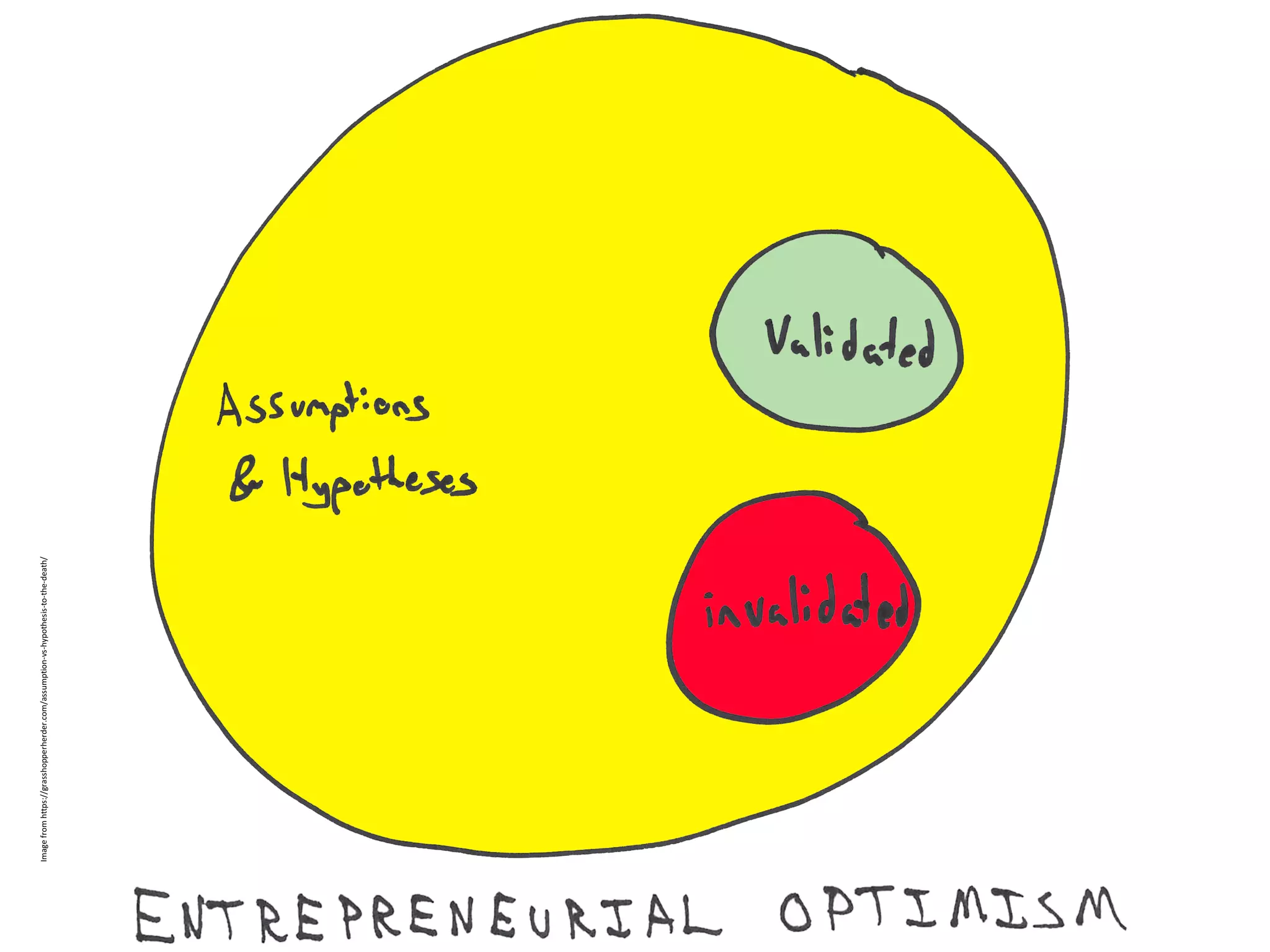

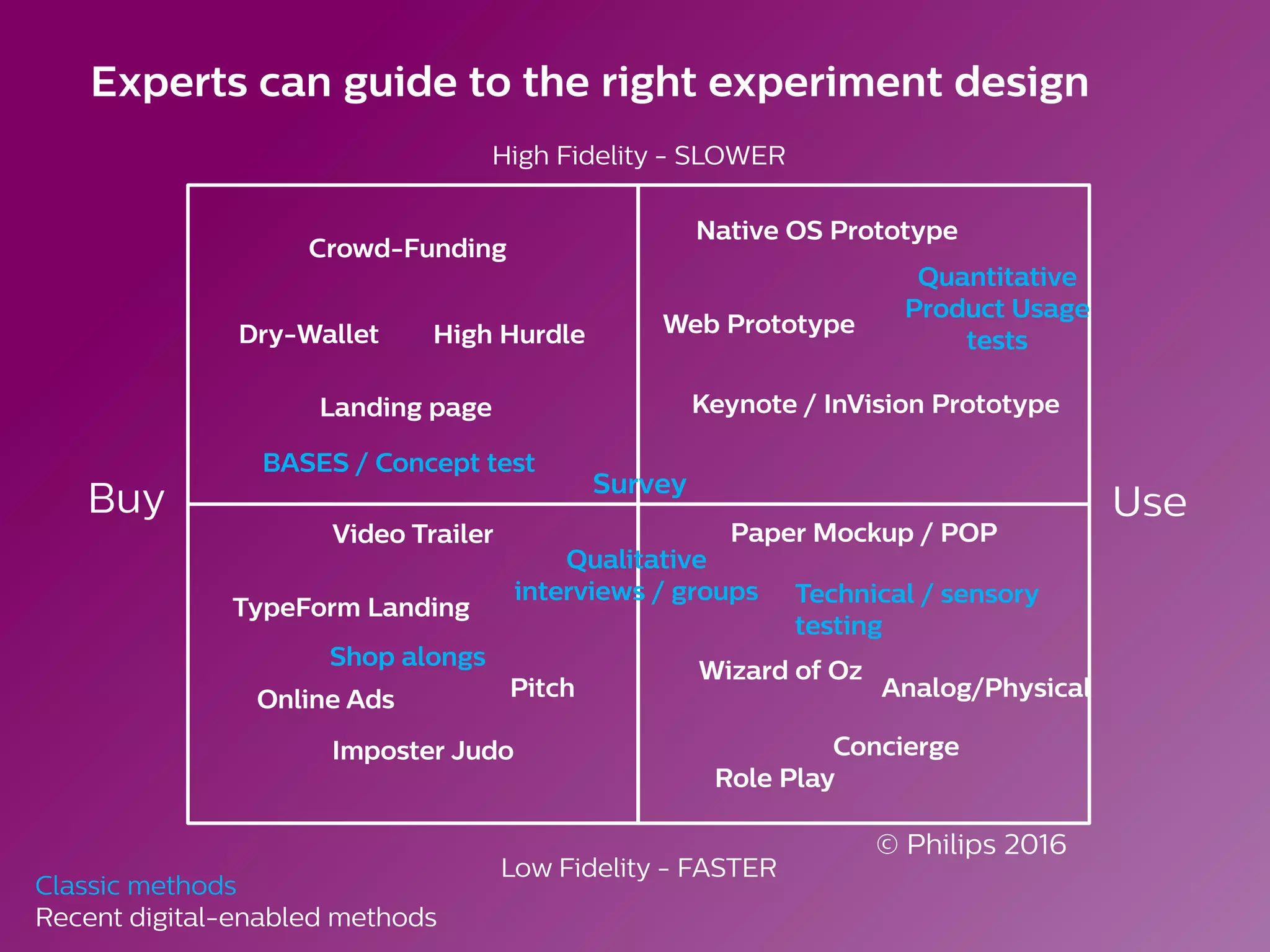

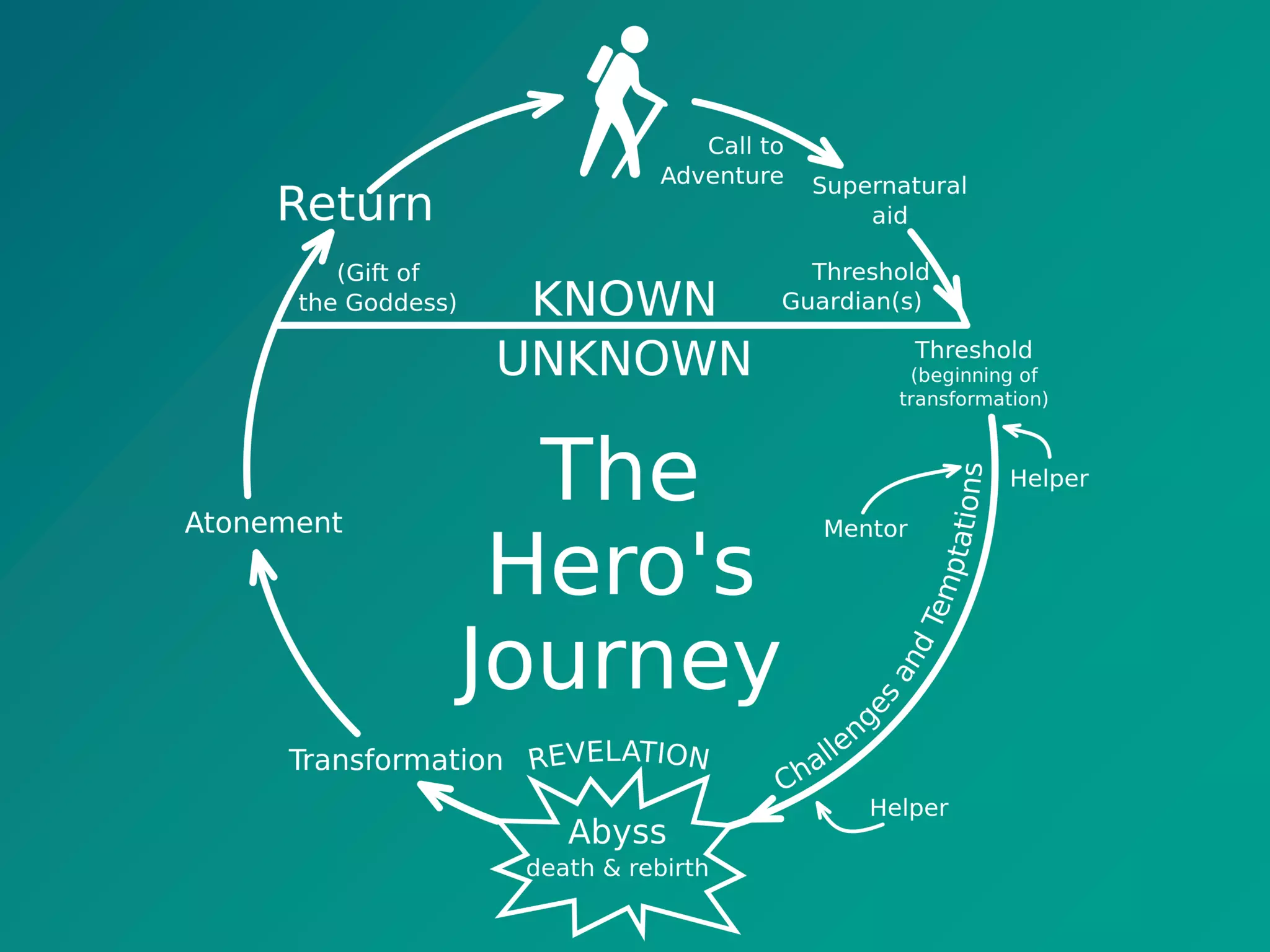

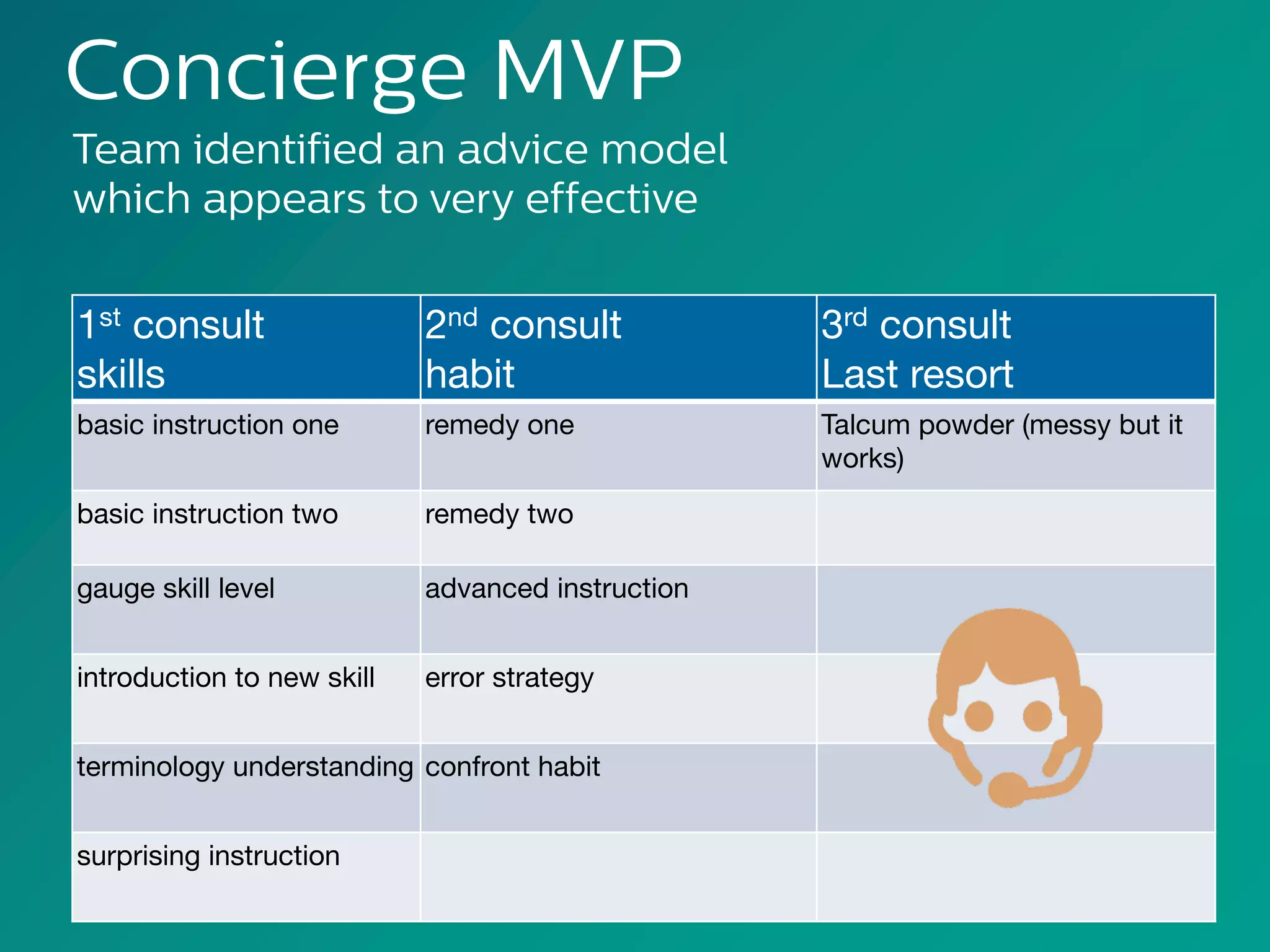

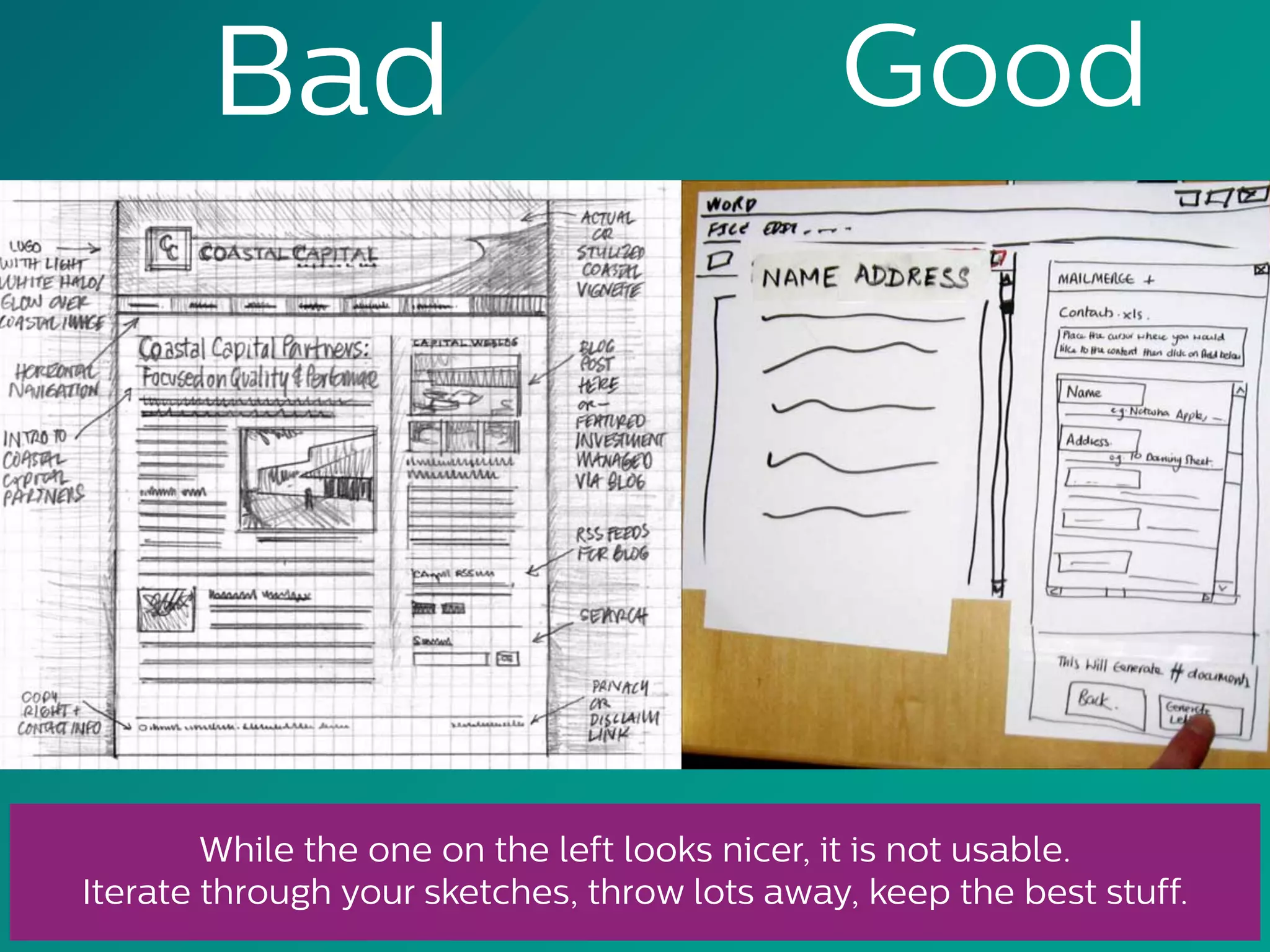

This document discusses why experimentation matters when turning ideas into business results. It argues that people tend to overestimate their chances of success and inflate the expected impact of their ideas, and that experimentation helps counter these biases. Validated learning means measuring the actual effect of a change and using those measurements to inform decisions. Each experiment should test a falsifiable hypothesis and be designed for speed, so that the team learns faster. The document outlines testing methods ranging from paper prototypes to A/B tests of digital versions. Key principles for effective experimentation are that tests should be simple, close to reality, prioritized, and statistically significant, so that the results either validate a hypothesis or show that a pivot is needed.
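The summary says experiments should be statistically significant. The sketch below assumes a simple A/B test that compares conversion rates between two variants and uses a two-proportion z-test. That test is one common choice, not necessarily the method the original slides use. The function name `two_proportion_z_test`, the visitor counts, and the 5% threshold are all hypothetical and serve only as illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Hypothetical helper: return (z, two-sided p-value) for H0 'A and B share one conversion rate'."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    # Standard error of the difference in proportions under the null hypothesis
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: P(|Z| >= |z|) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical data: variant A converts 200 of 5,000 visitors, variant B 260 of 5,000
z, p = two_proportion_z_test(200, 5000, 260, 5000)
significant = p < 0.05  # conventional 5% threshold, assumed here for illustration
print(f"z = {z:.2f}, p = {p:.4f}, significant at 5%: {significant}")
```

A small p-value suggests the difference is unlikely to be noise and supports the hypothesis. A large one means the test has not validated it, which may point to a pivot or to a larger sample.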