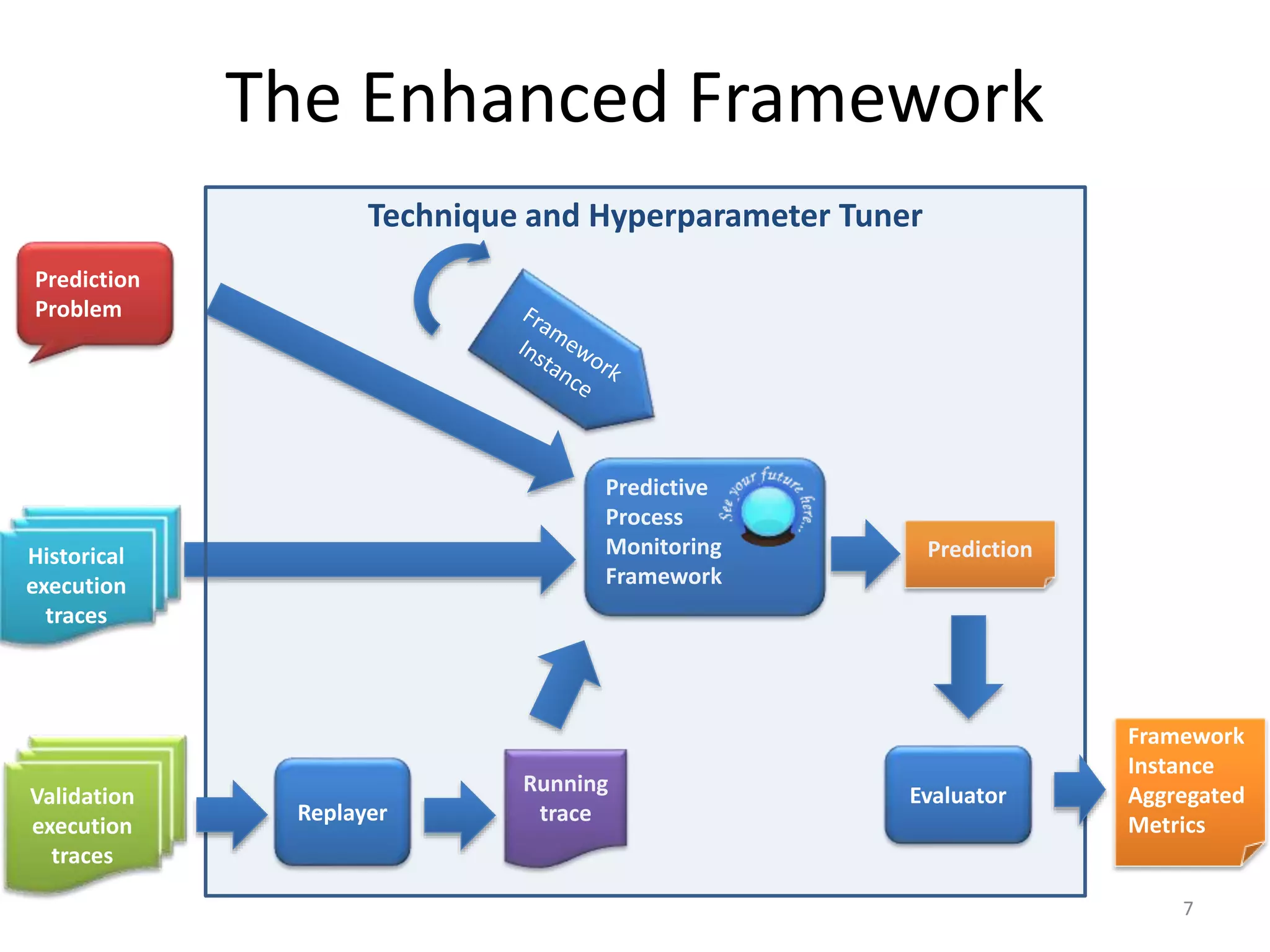

1. The document presents a predictive process monitoring framework extended with automatic selection of machine learning techniques and optimization of their hyperparameters.

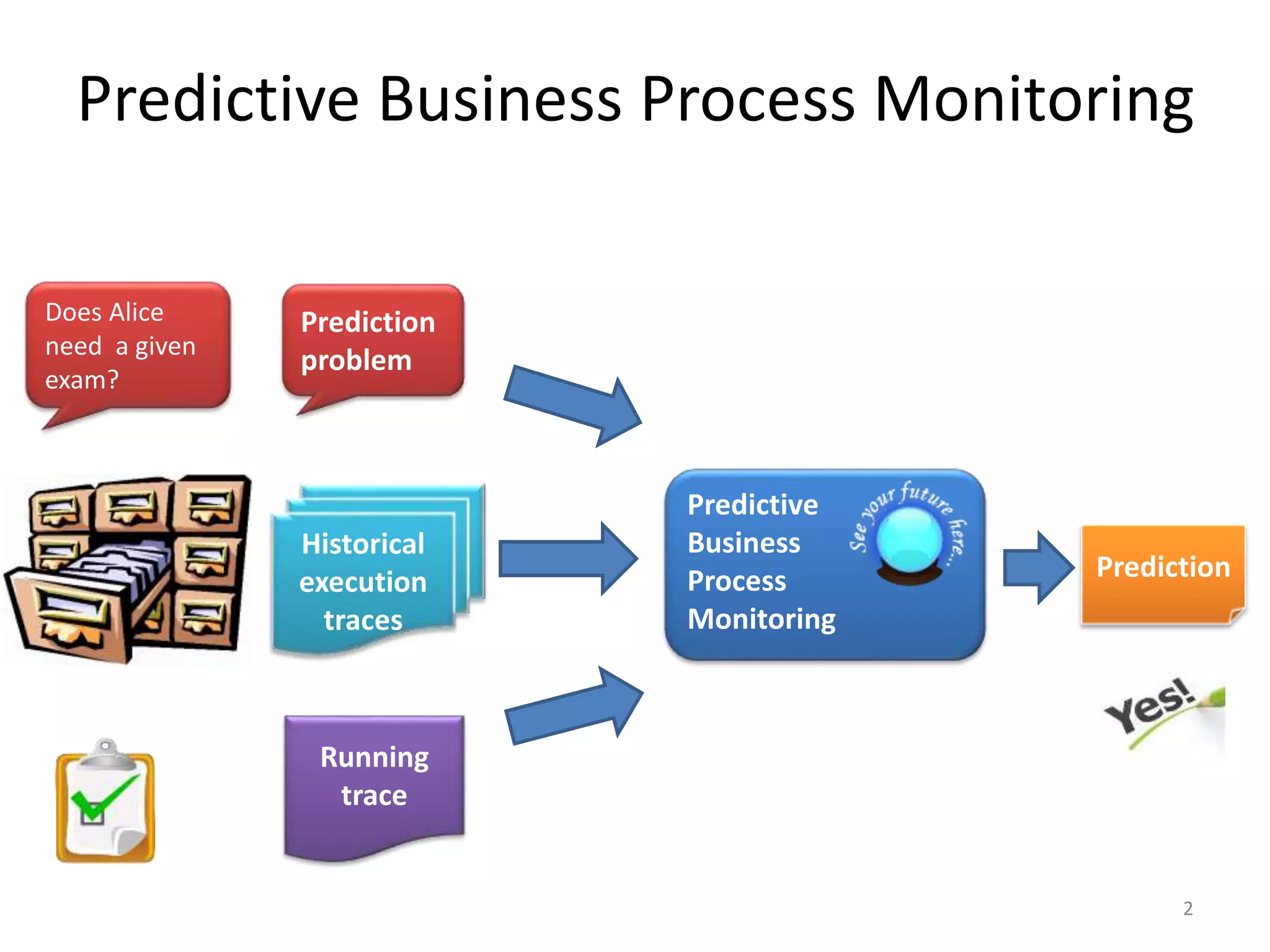

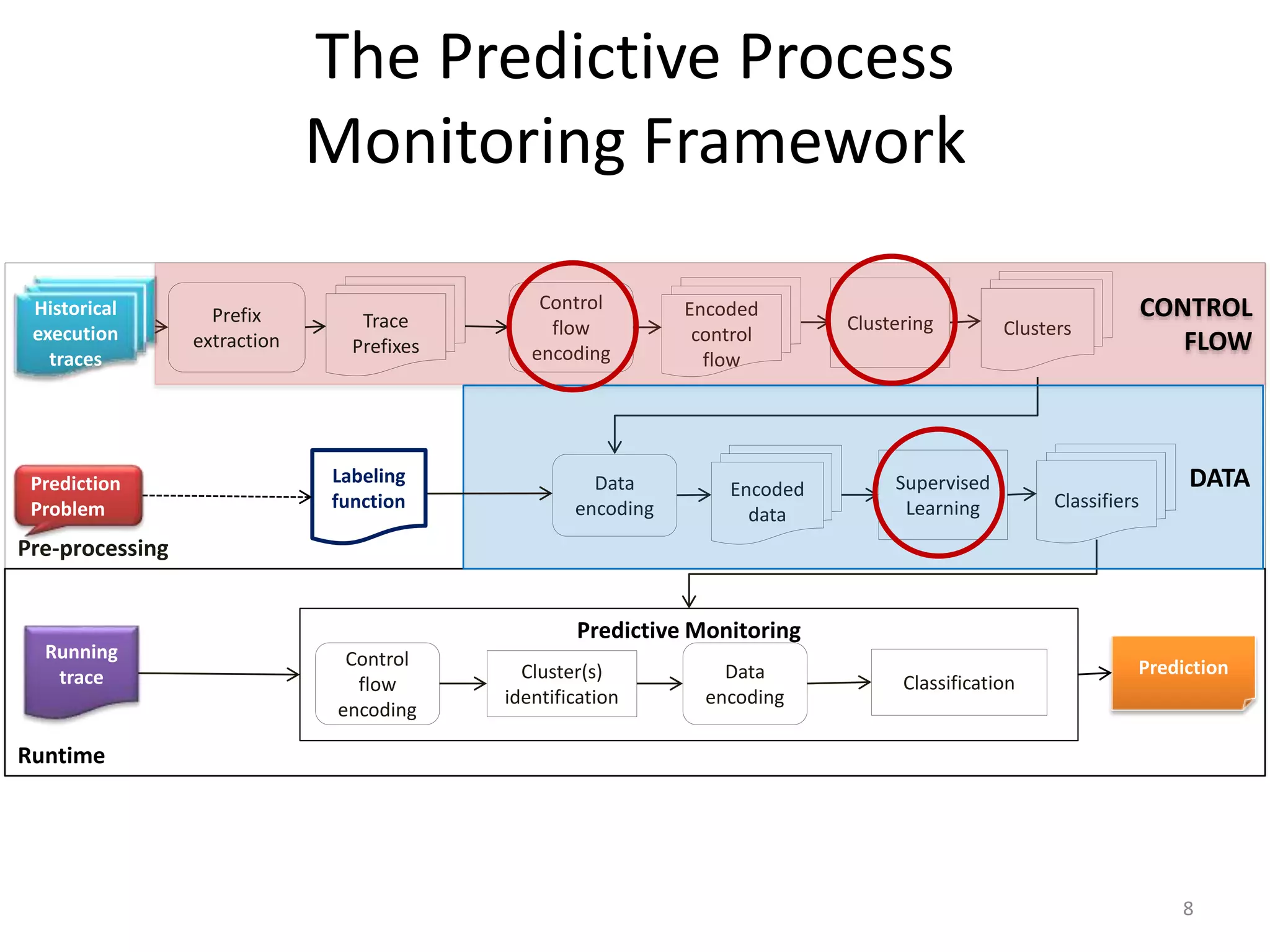

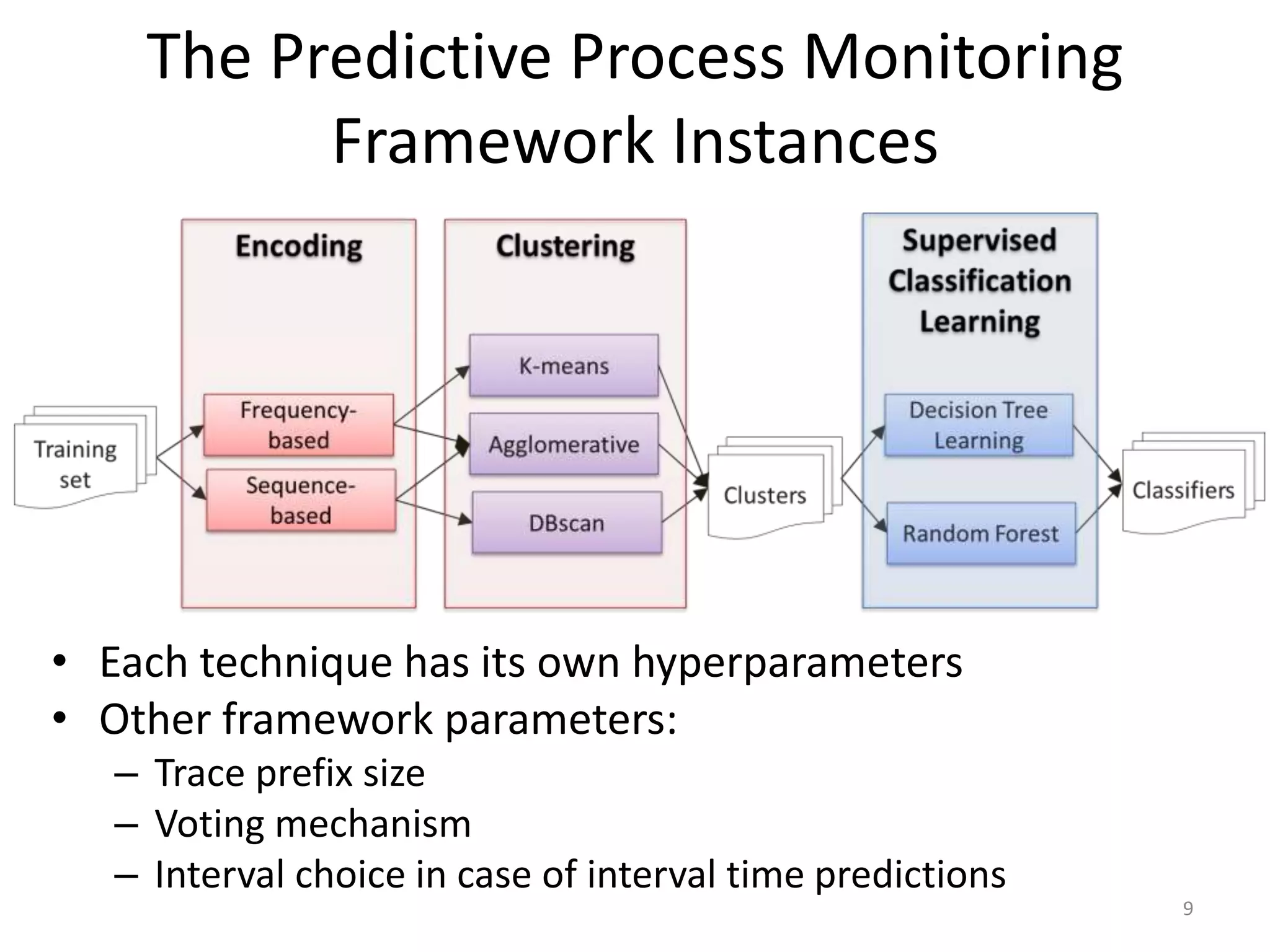

2. The framework evaluates multiple configurations of machine learning techniques and their hyperparameters on historical execution traces to identify the most suitable configuration for a given prediction problem and dataset.

3. An evaluation of the framework on two prediction problems, each with its own dataset, found that it identified suitable configurations within a reasonable time frame, though the best configuration varied with the prediction problem and the users' performance criteria.
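
The configuration search described in point 2 can be illustrated with a minimal sketch. It is not the framework's actual implementation: the toy traces, the two techniques (`majority` and `knn`), the hyperparameter grid, the frequency encoding, and the accuracy criterion are all assumptions chosen only to show the idea of scoring each (technique, hyperparameters) pair on historical traces and keeping the best one.

```python
from itertools import product

# Toy historical traces: (activity sequence, outcome label). Illustrative data only.
TRACES = [
    (["a", "b", "c"], 1), (["a", "b", "d"], 0),
    (["a", "c", "c"], 1), (["a", "d", "d"], 0),
    (["a", "b", "c"], 1), (["a", "d", "b"], 0),
    (["a", "c", "b"], 1), (["a", "d", "c"], 0),
]
ALPHABET = ("a", "b", "c", "d")


def encode(prefix):
    """Frequency encoding: how often each activity occurs in the prefix."""
    return [prefix.count(activity) for activity in ALPHABET]


def train_majority(train, prefix_len):
    """Baseline technique: always predict the most frequent training label."""
    labels = [label for _trace, label in train]
    majority = max(set(labels), key=labels.count)
    return lambda prefix: majority


def train_knn(train, prefix_len, k):
    """k-nearest-neighbour technique over frequency-encoded prefixes."""
    encoded = [(encode(trace[:prefix_len]), label) for trace, label in train]

    def predict(prefix):
        vector = encode(prefix)
        nearest = sorted(
            encoded,
            key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], vector)),
        )[:k]
        votes = [label for _vec, label in nearest]
        return 1 if 2 * sum(votes) >= len(votes) else 0

    return predict


# Hypothetical search space: technique name -> (trainer, hyperparameter grid).
SEARCH_SPACE = {
    "majority": (train_majority, {}),
    "knn": (train_knn, {"k": [1, 3]}),
}


def accuracy(predict, validation, prefix_len):
    """Fraction of validation traces whose outcome is predicted correctly."""
    hits = sum(predict(trace[:prefix_len]) == label for trace, label in validation)
    return hits / len(validation)


def select_configuration(train, validation, prefix_len=2):
    """Score every configuration and return the best one found."""
    best = None
    for technique, (trainer, grid) in SEARCH_SPACE.items():
        names = list(grid)
        for values in product(*(grid[name] for name in names)):
            params = dict(zip(names, values))
            model = trainer(train, prefix_len, **params)
            score = accuracy(model, validation, prefix_len)
            if best is None or score > best["score"]:
                best = {"technique": technique, "params": params, "score": score}
    return best


if __name__ == "__main__":
    print(select_configuration(TRACES[:6], TRACES[6:]))
```

Because the best configuration depends on the users' performance criteria (point 3), `accuracy` is kept as a separate function here so that a different criterion, such as a score that also weighs how early a prediction is made, could be swapped in without changing the search loop.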