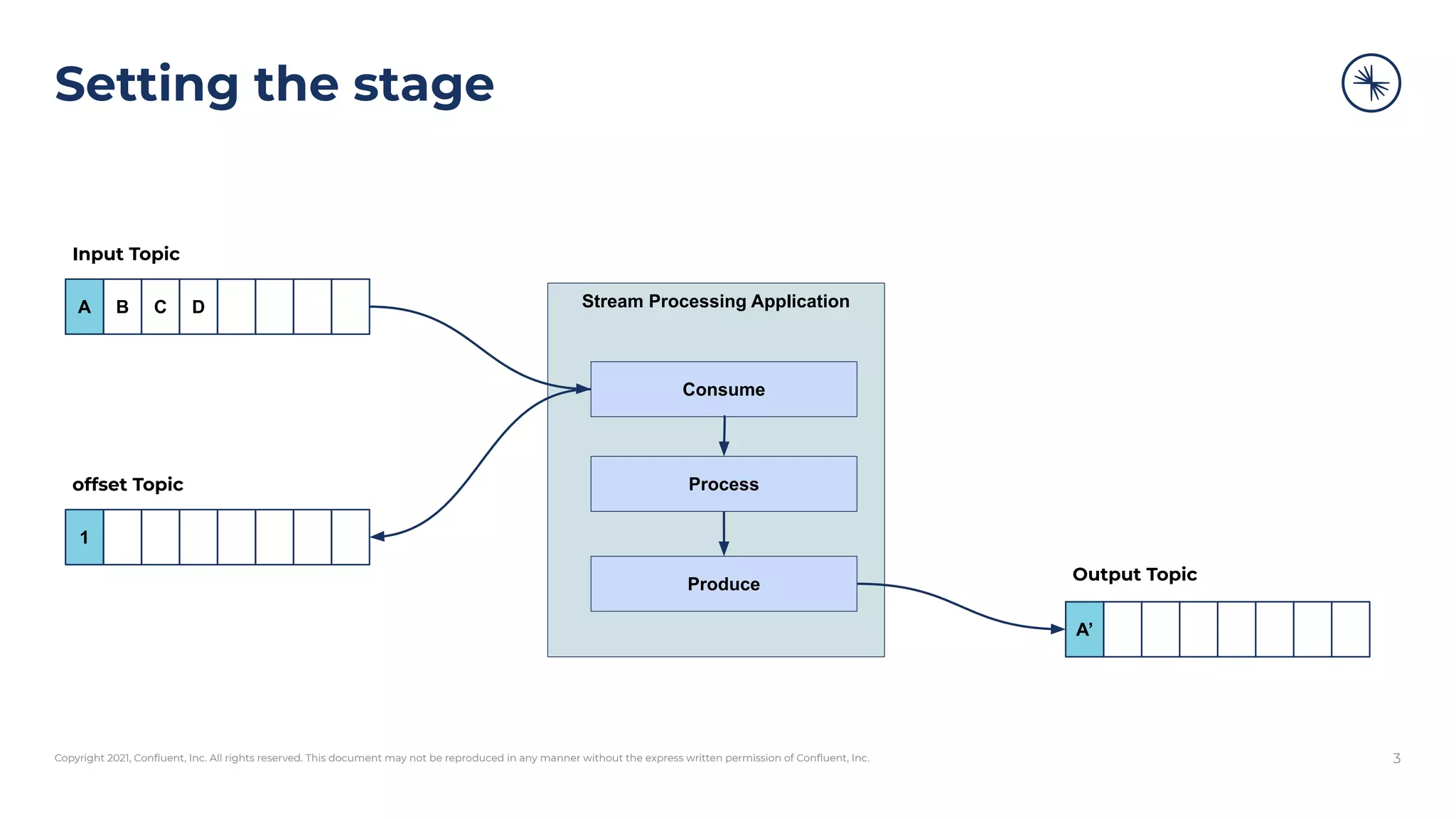

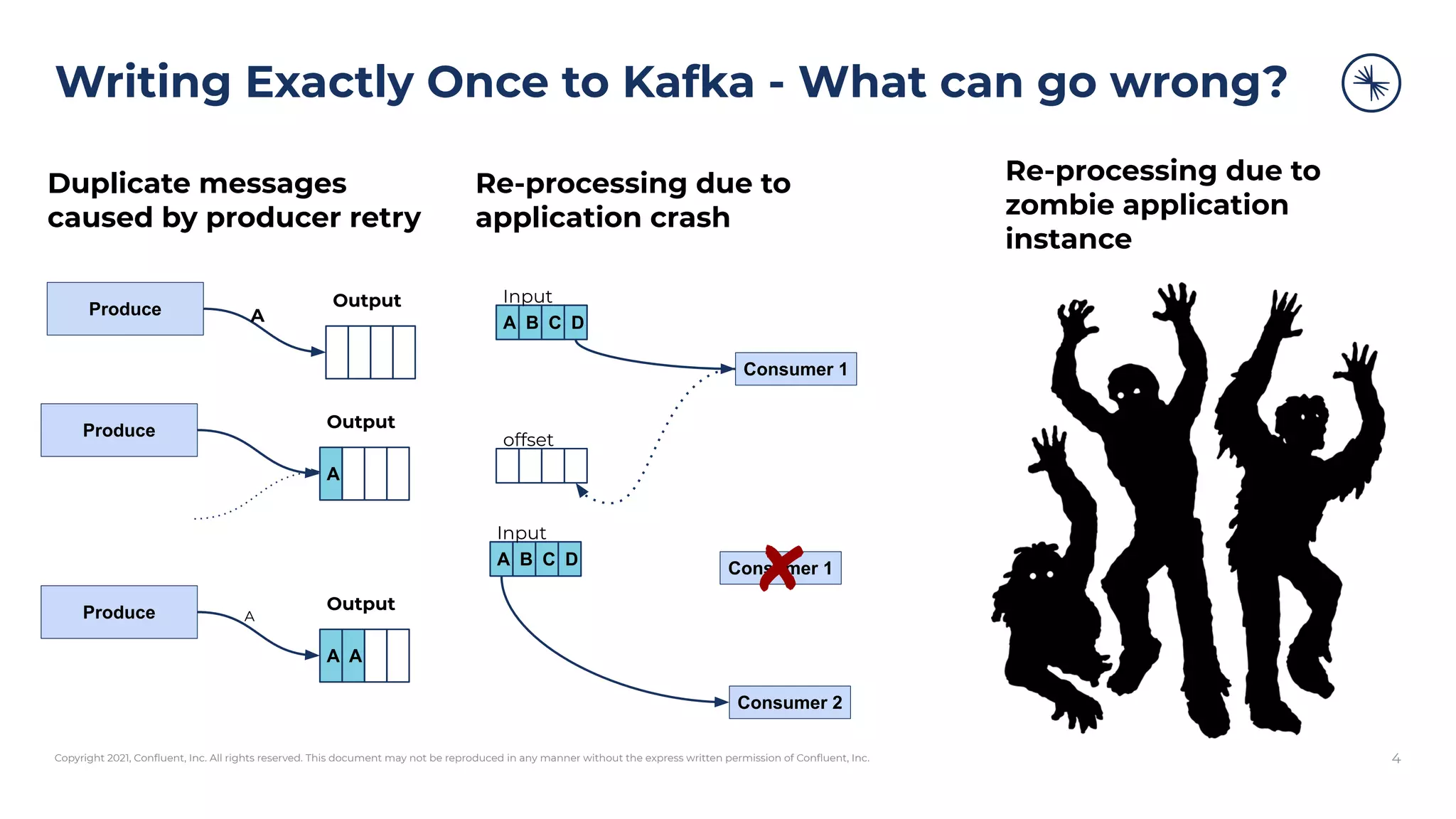

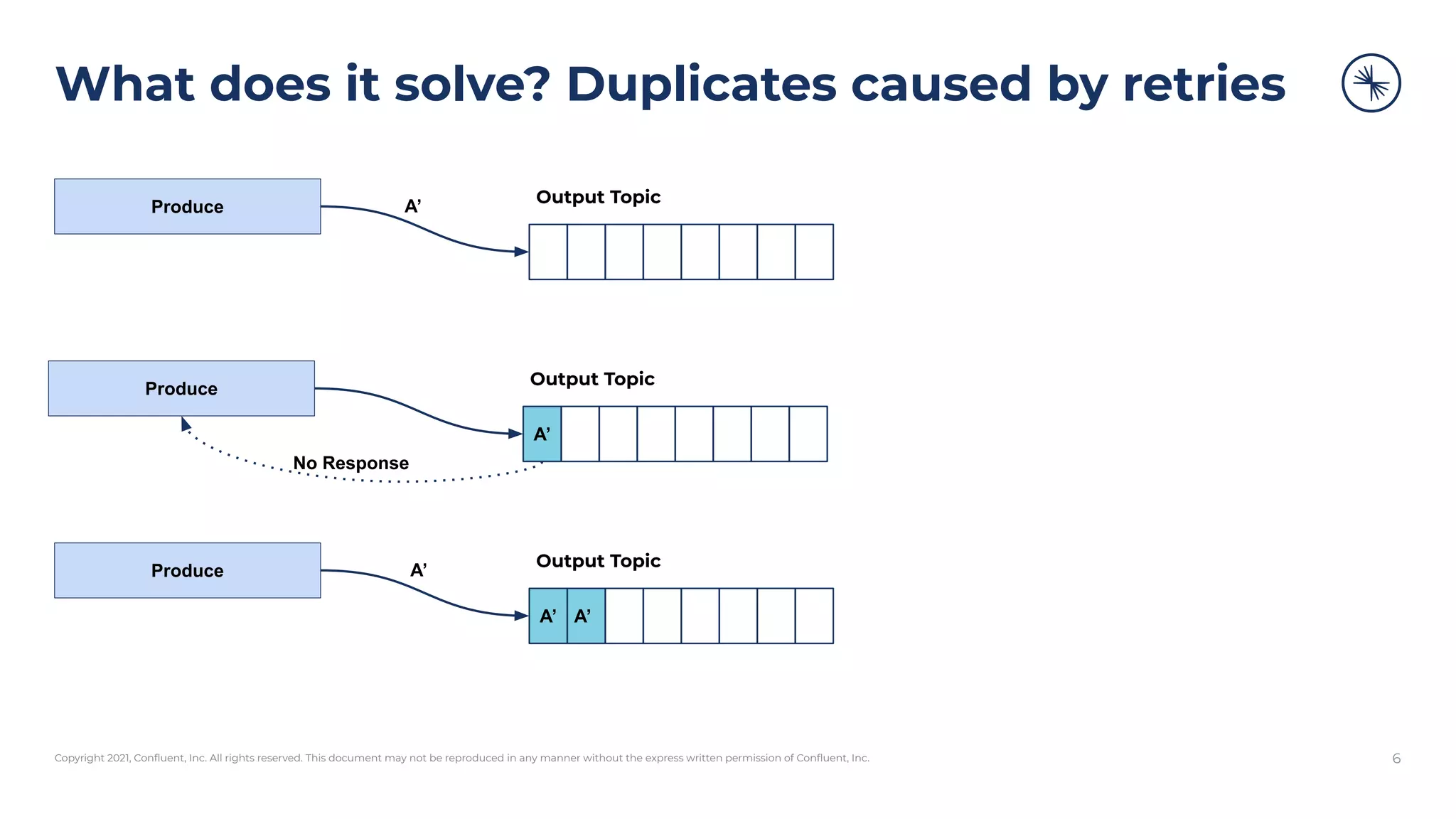

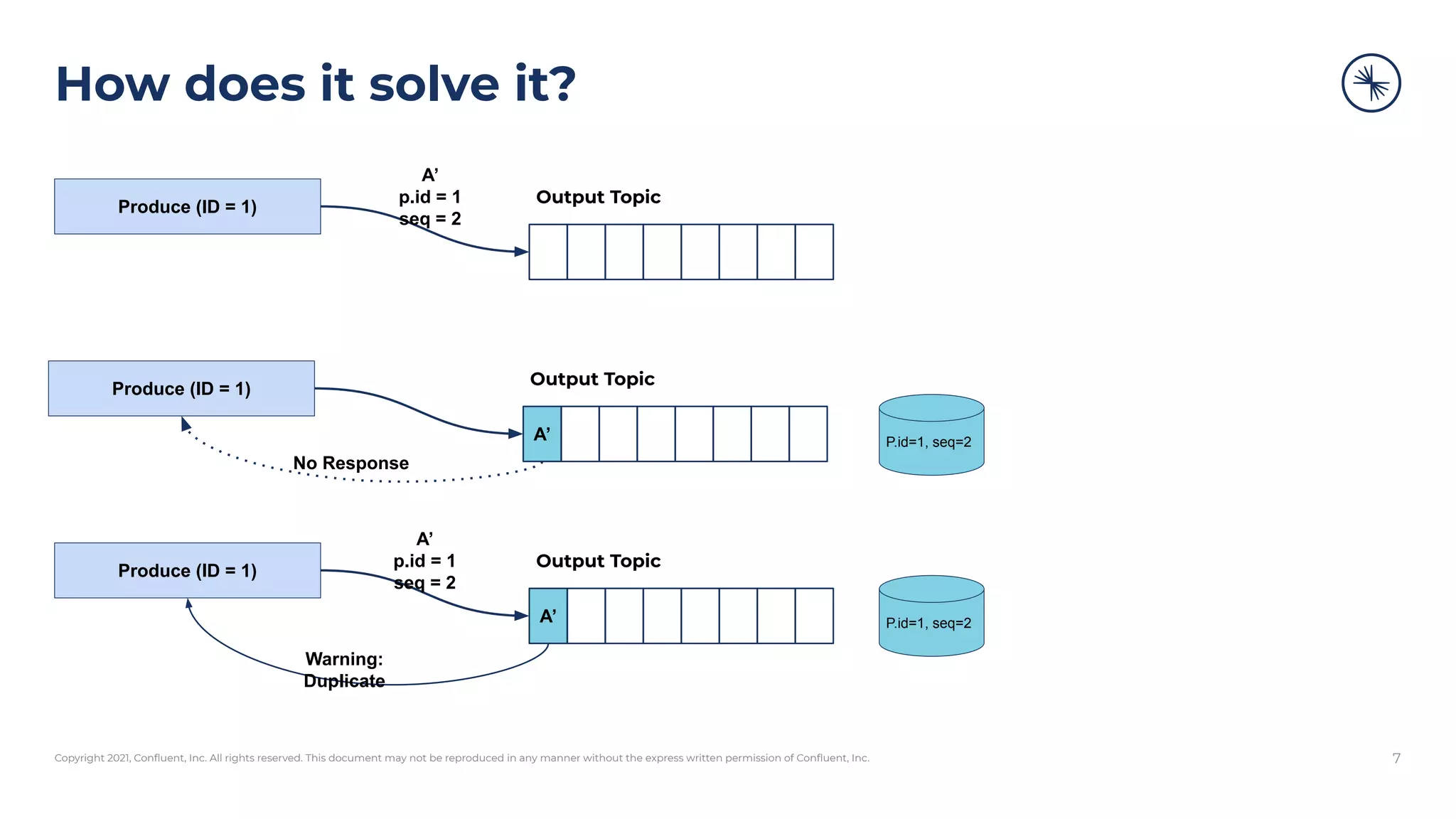

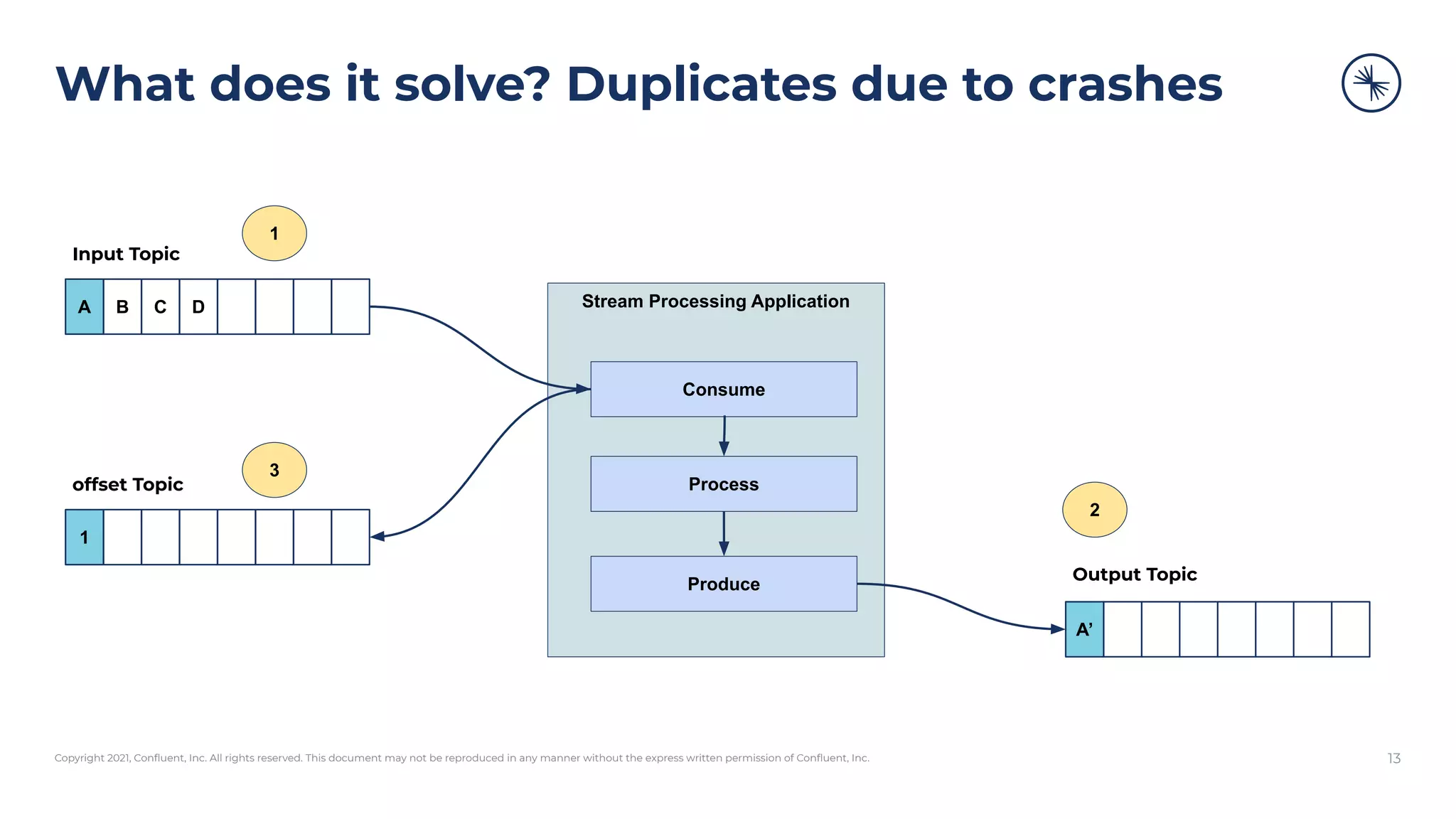

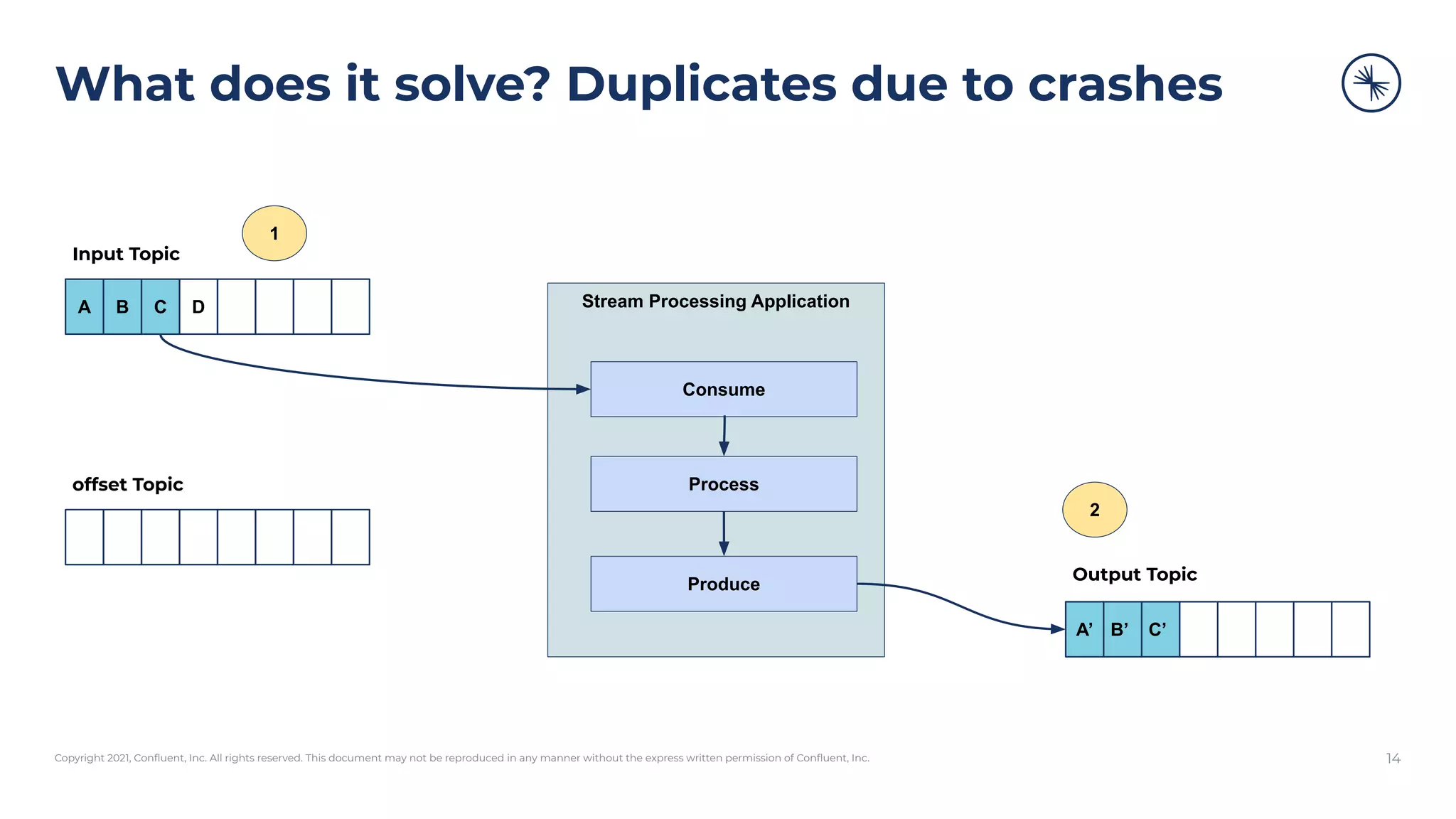

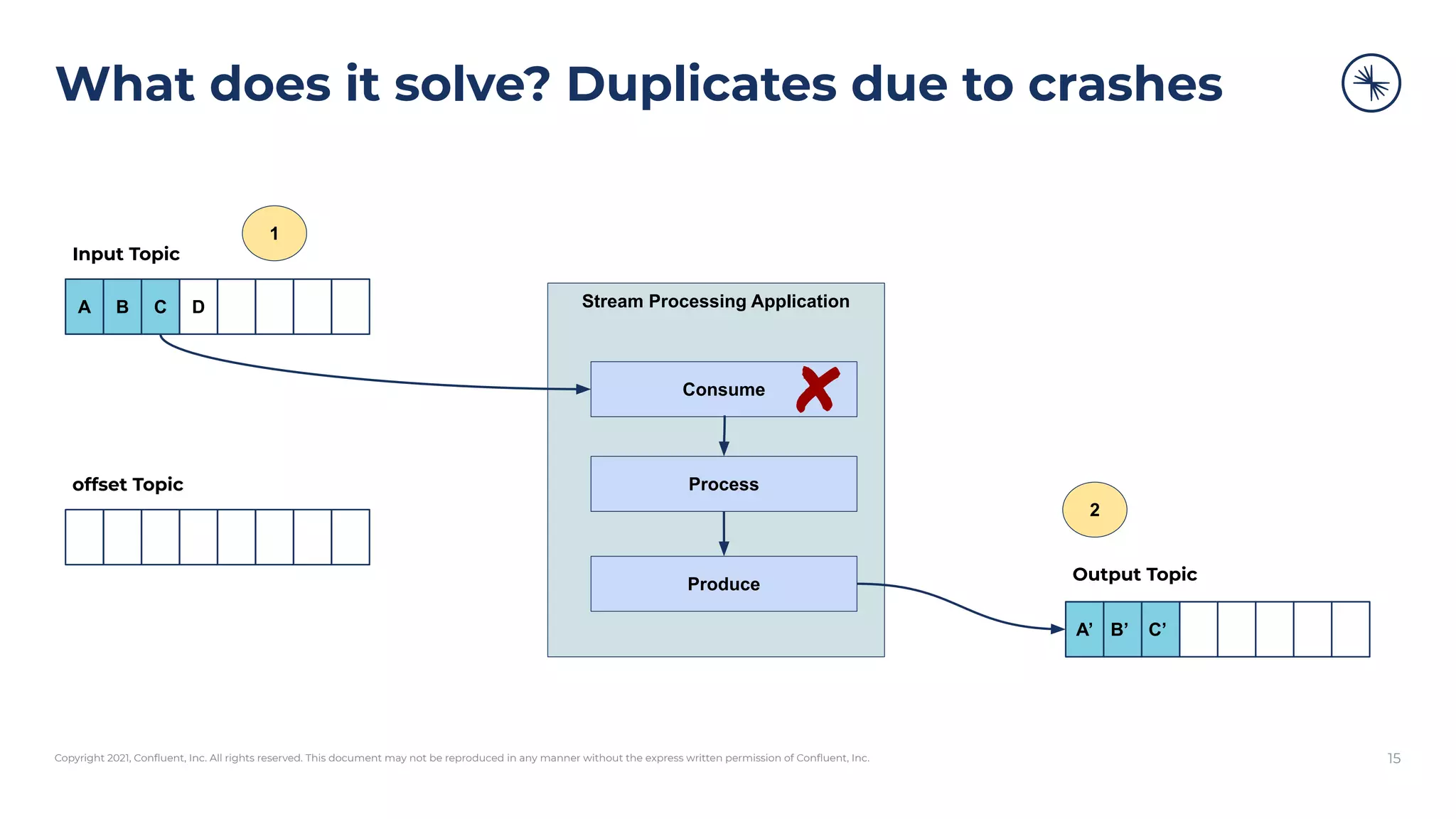

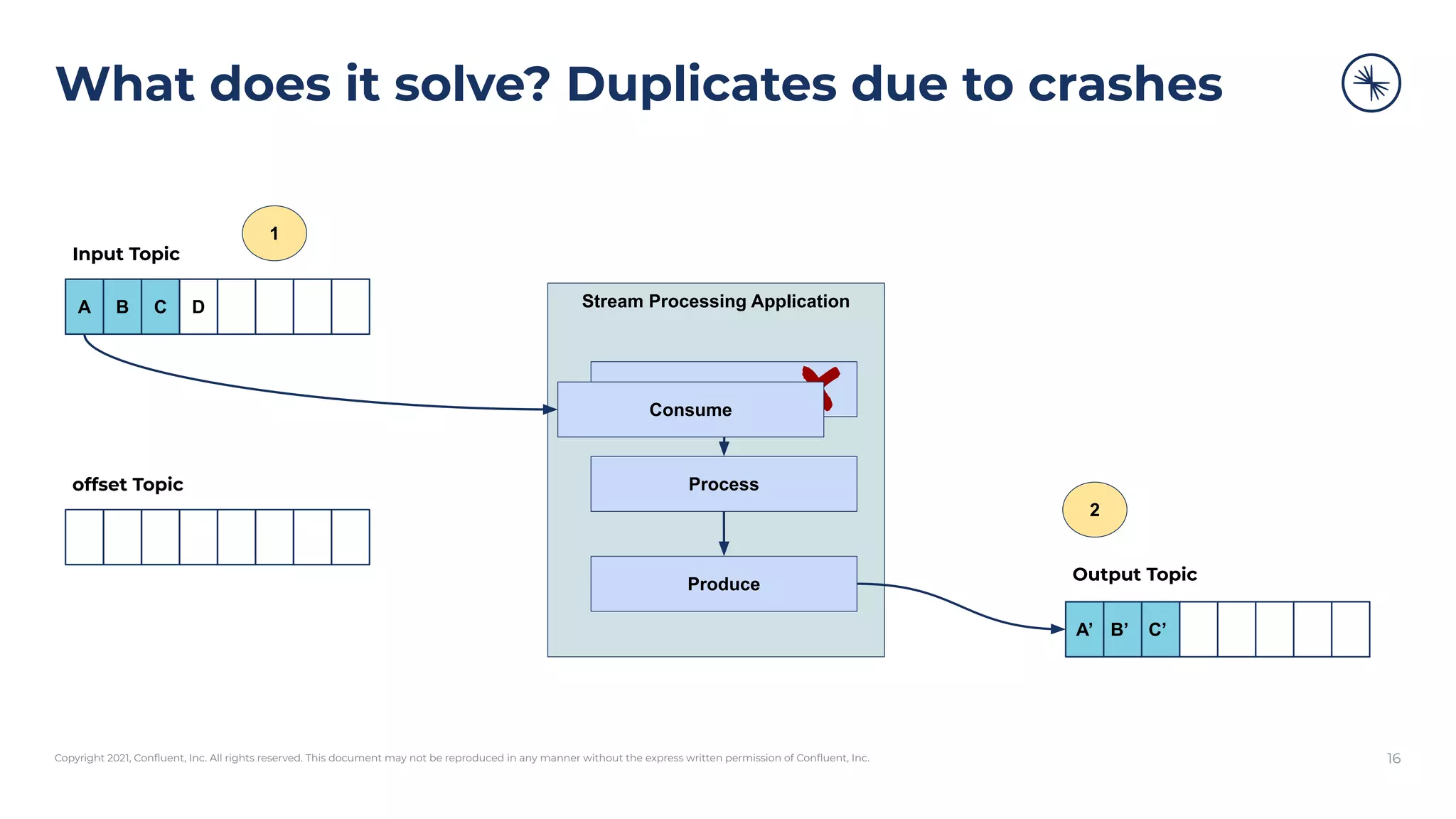

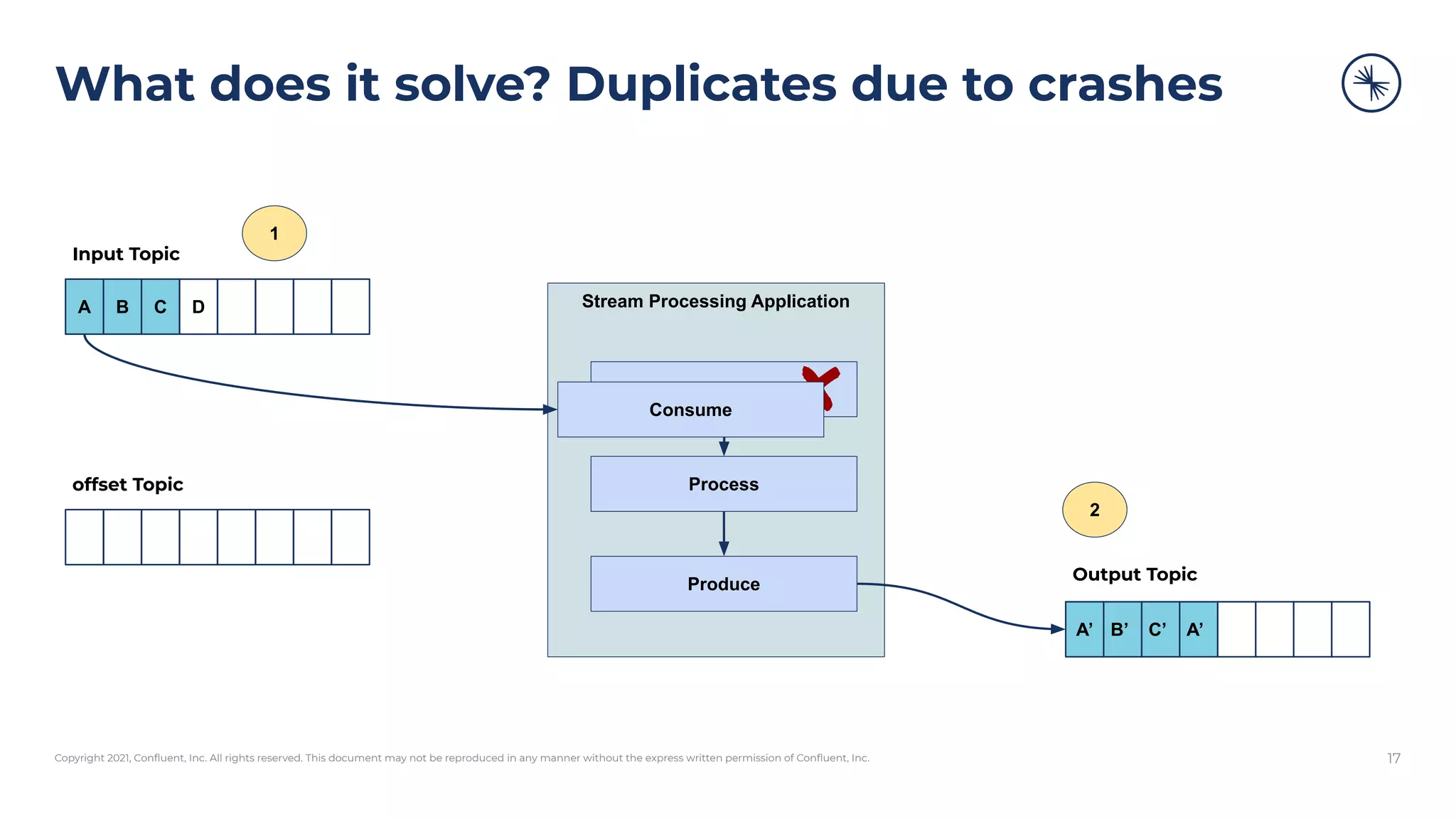

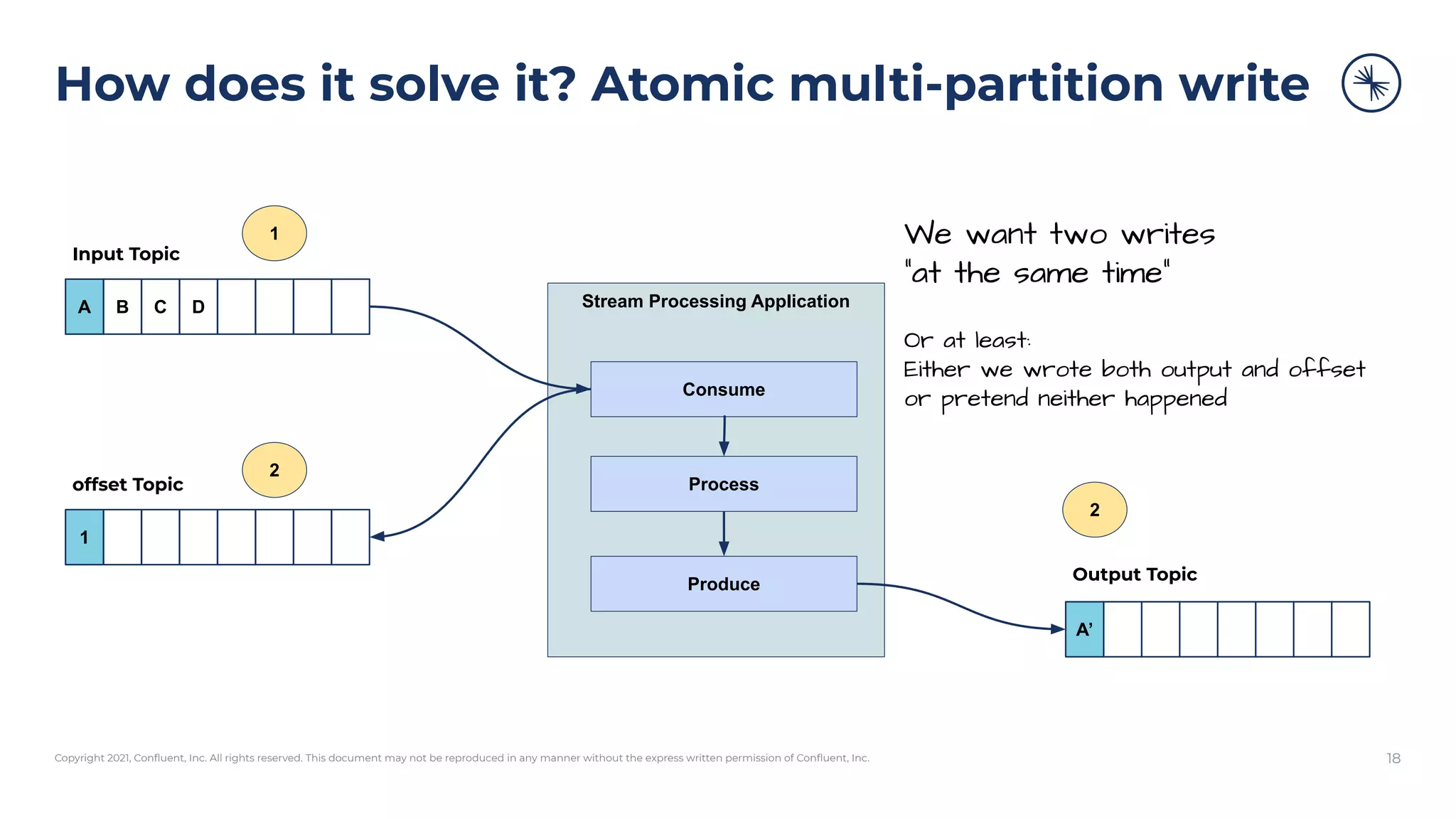

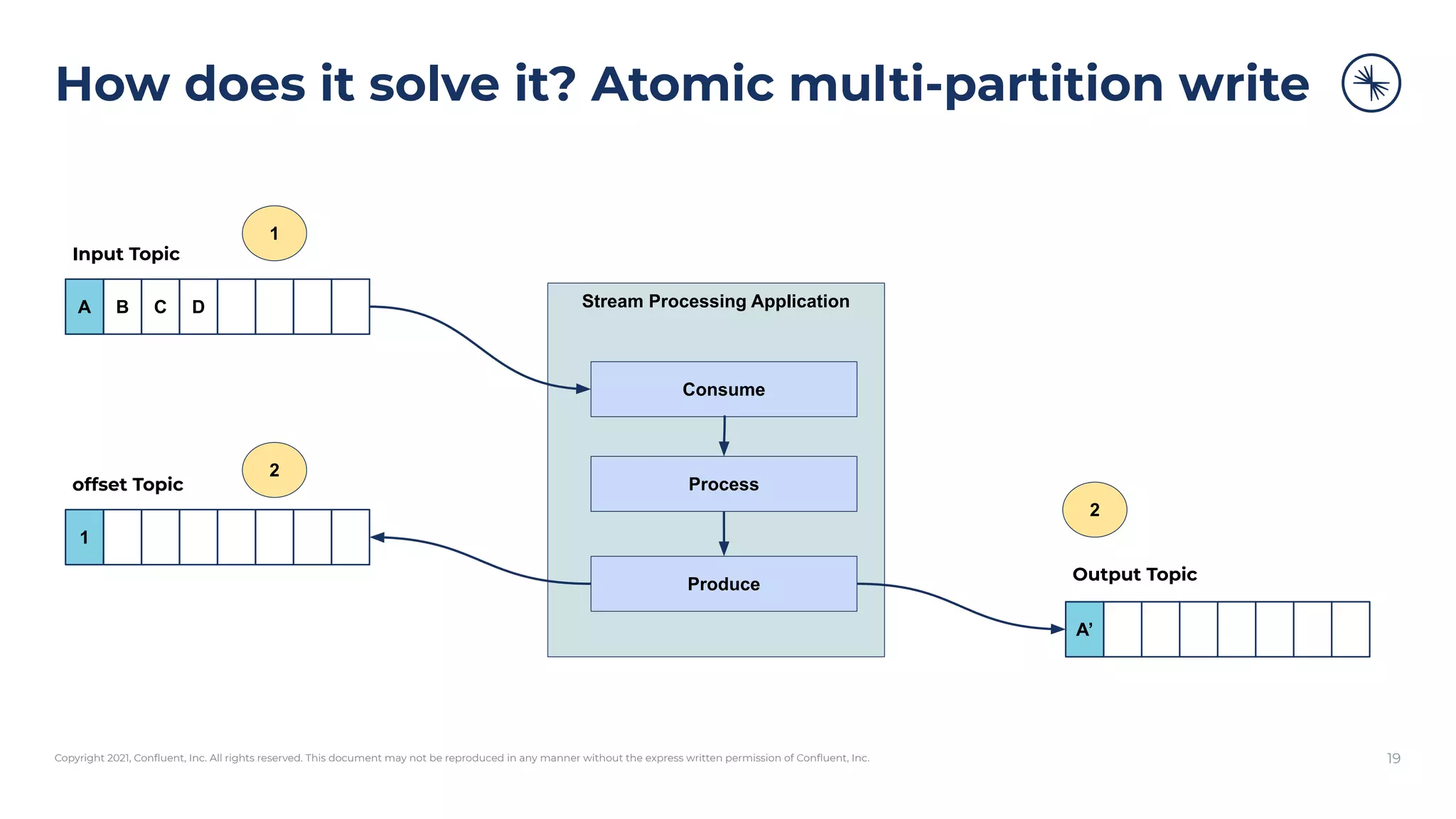

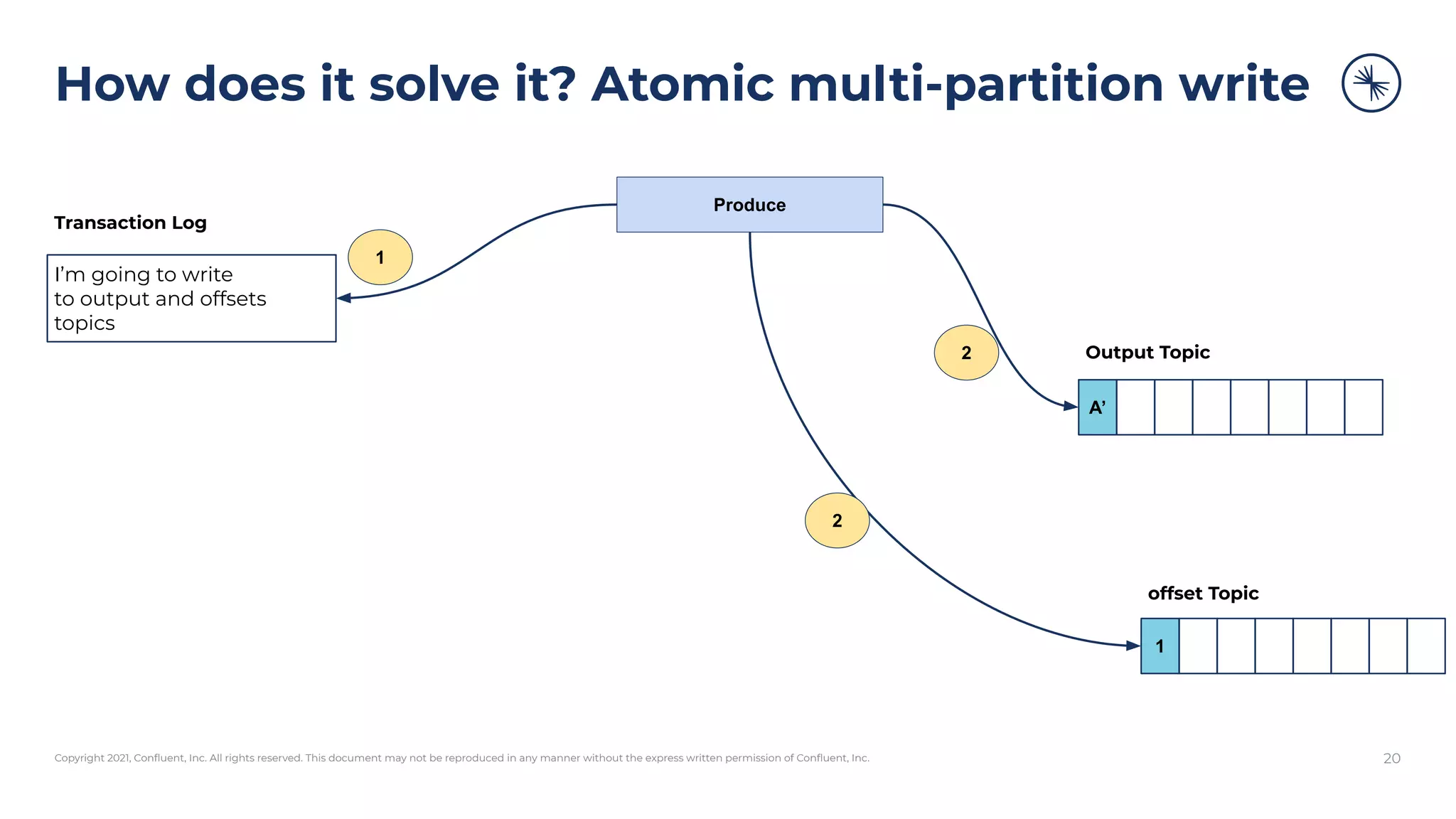

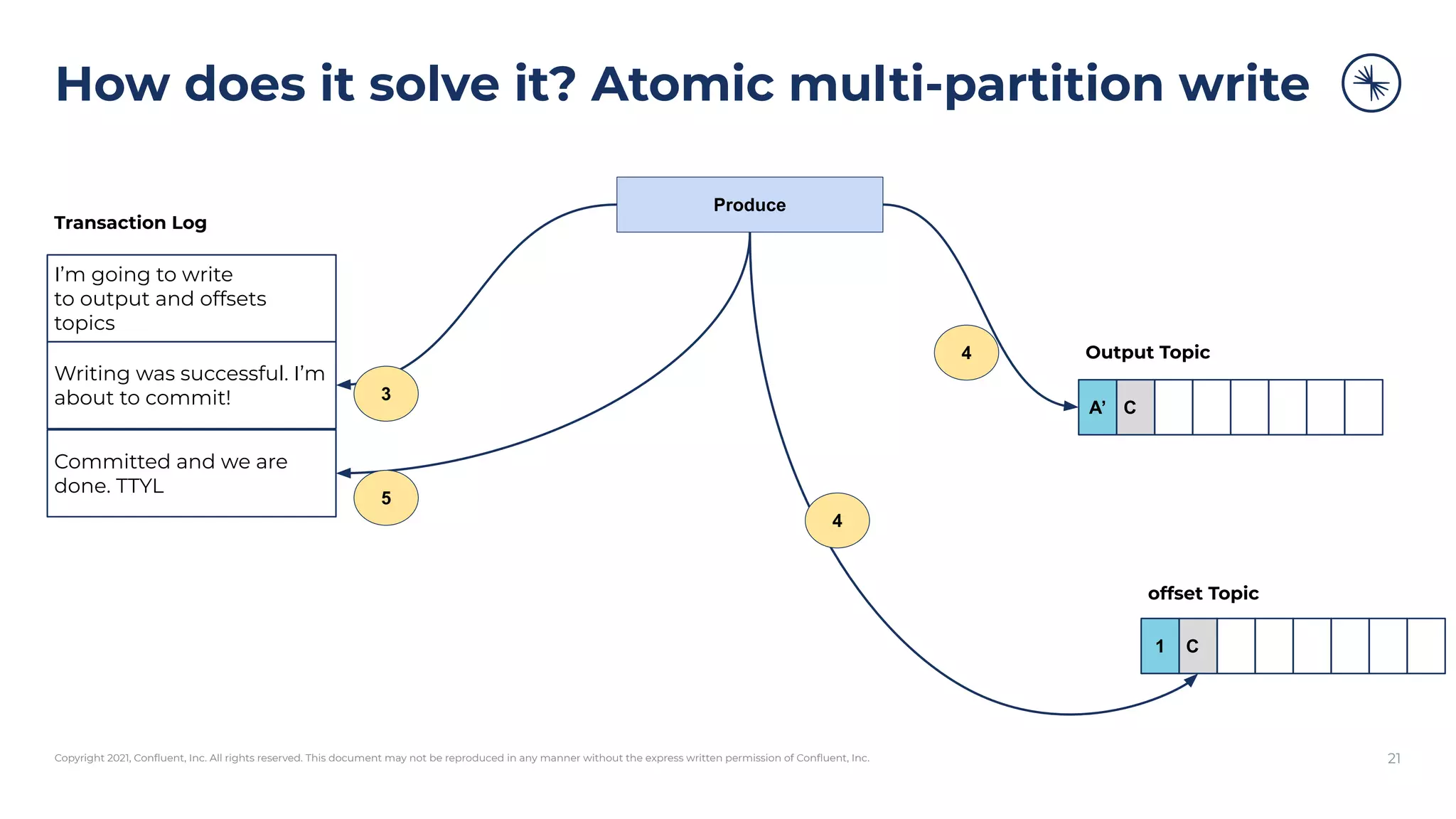

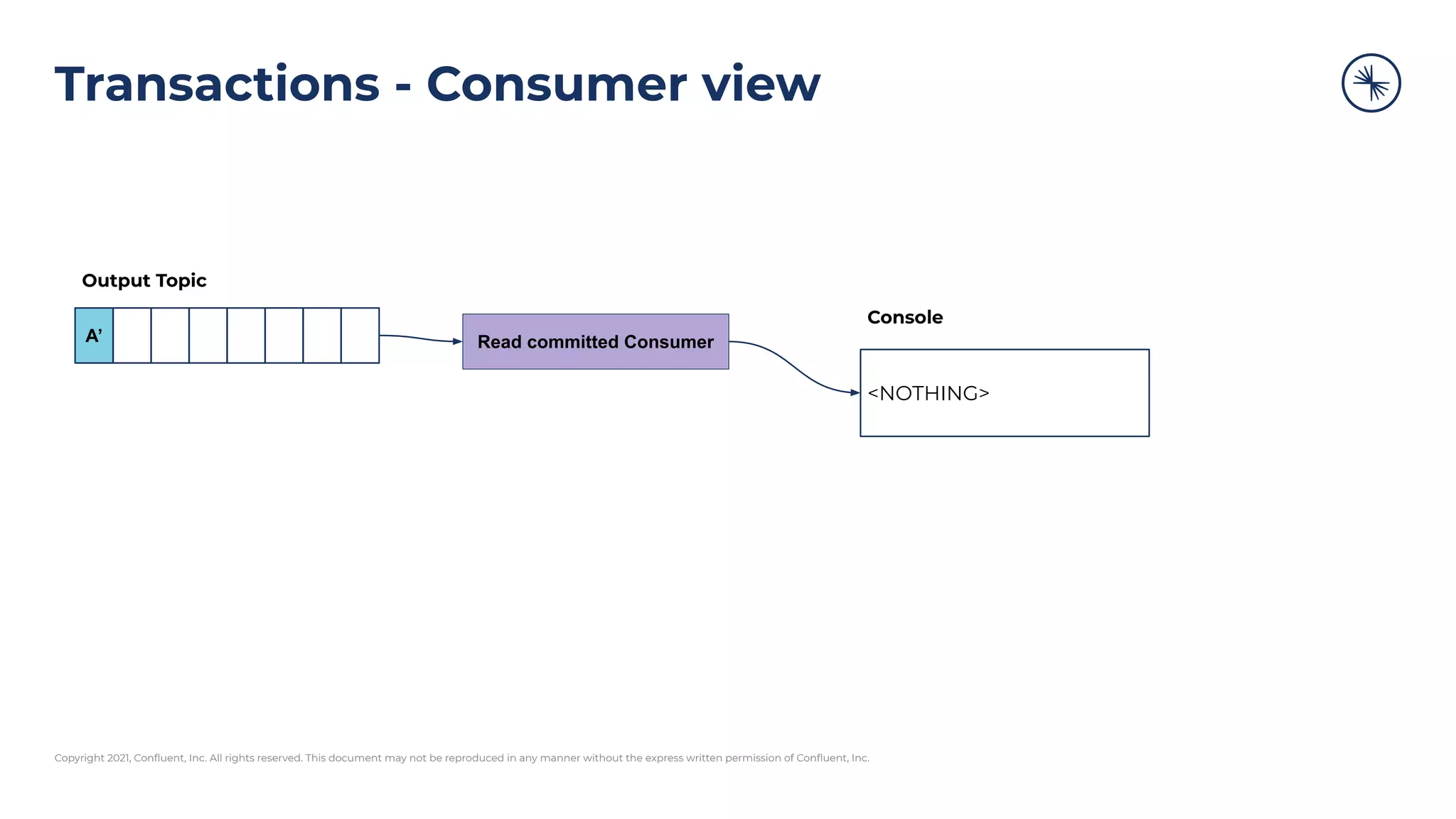

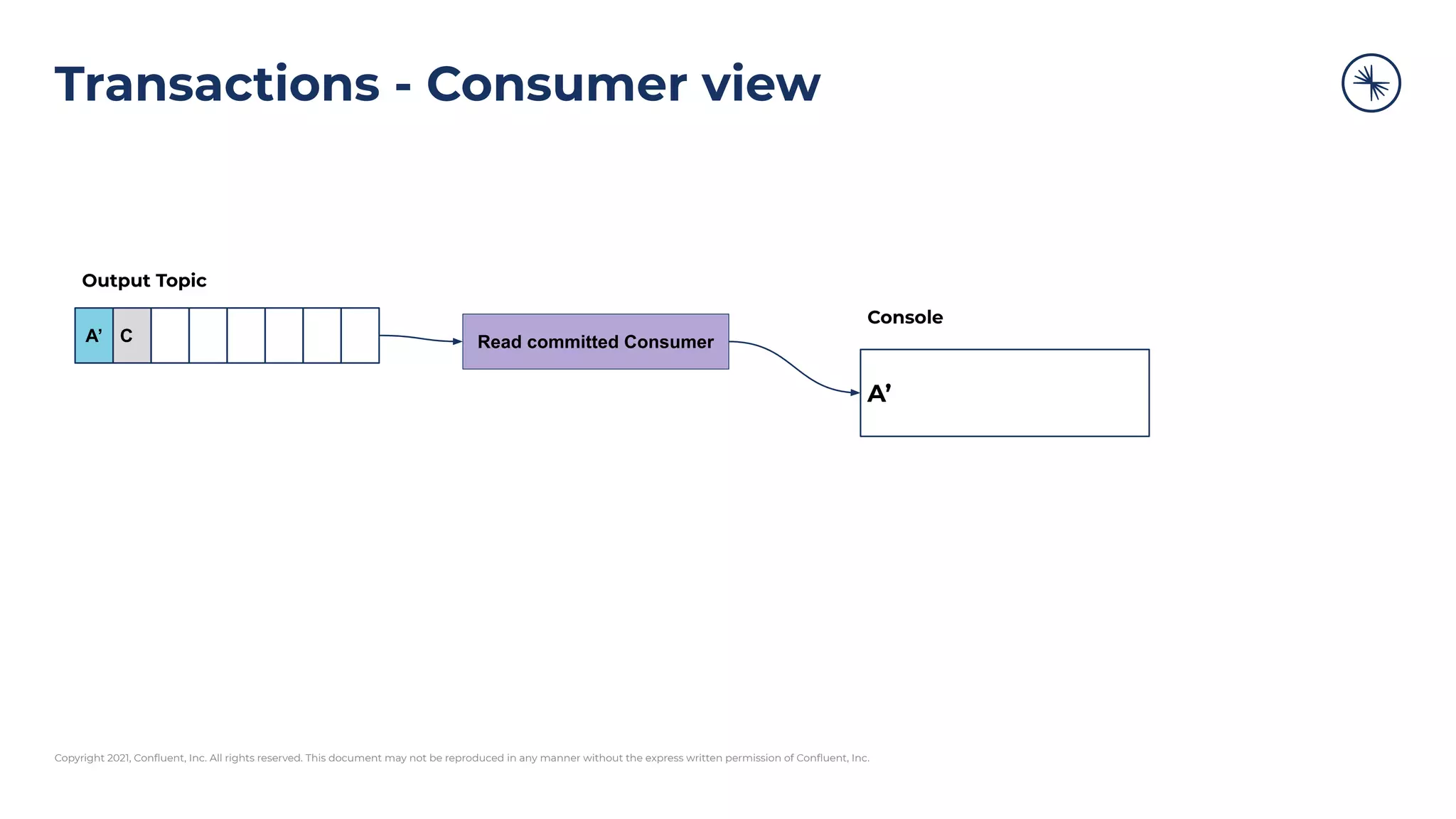

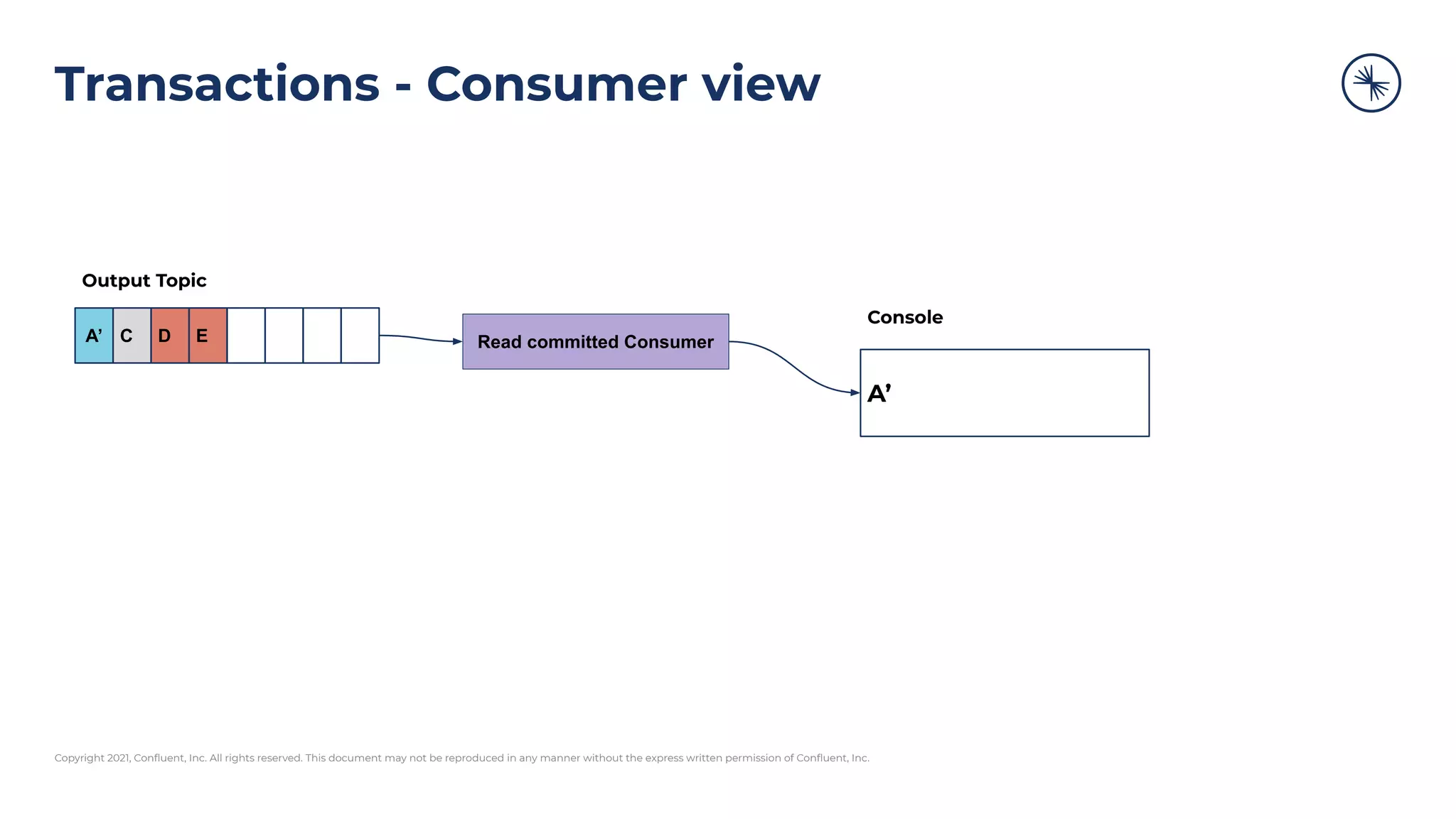

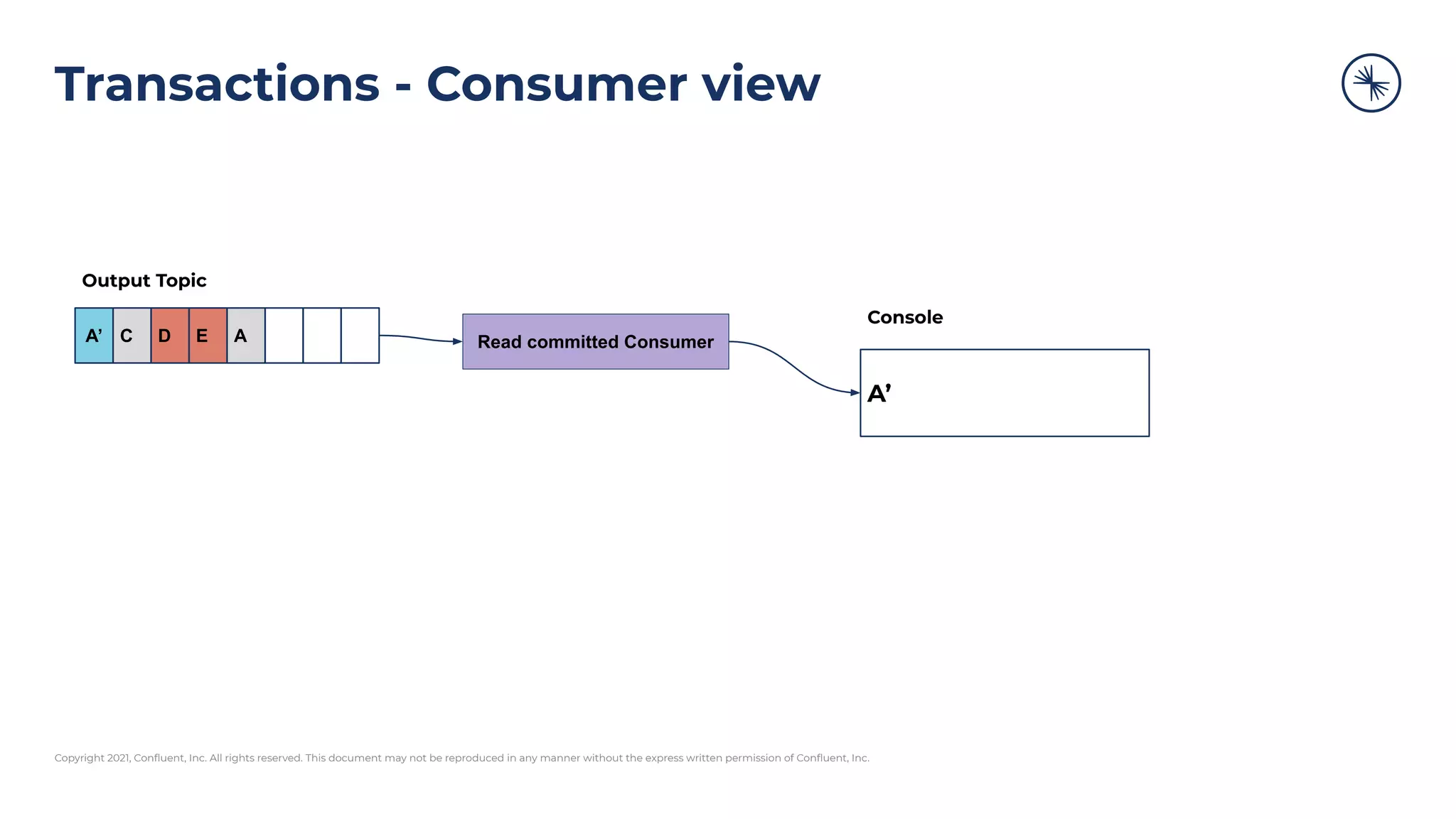

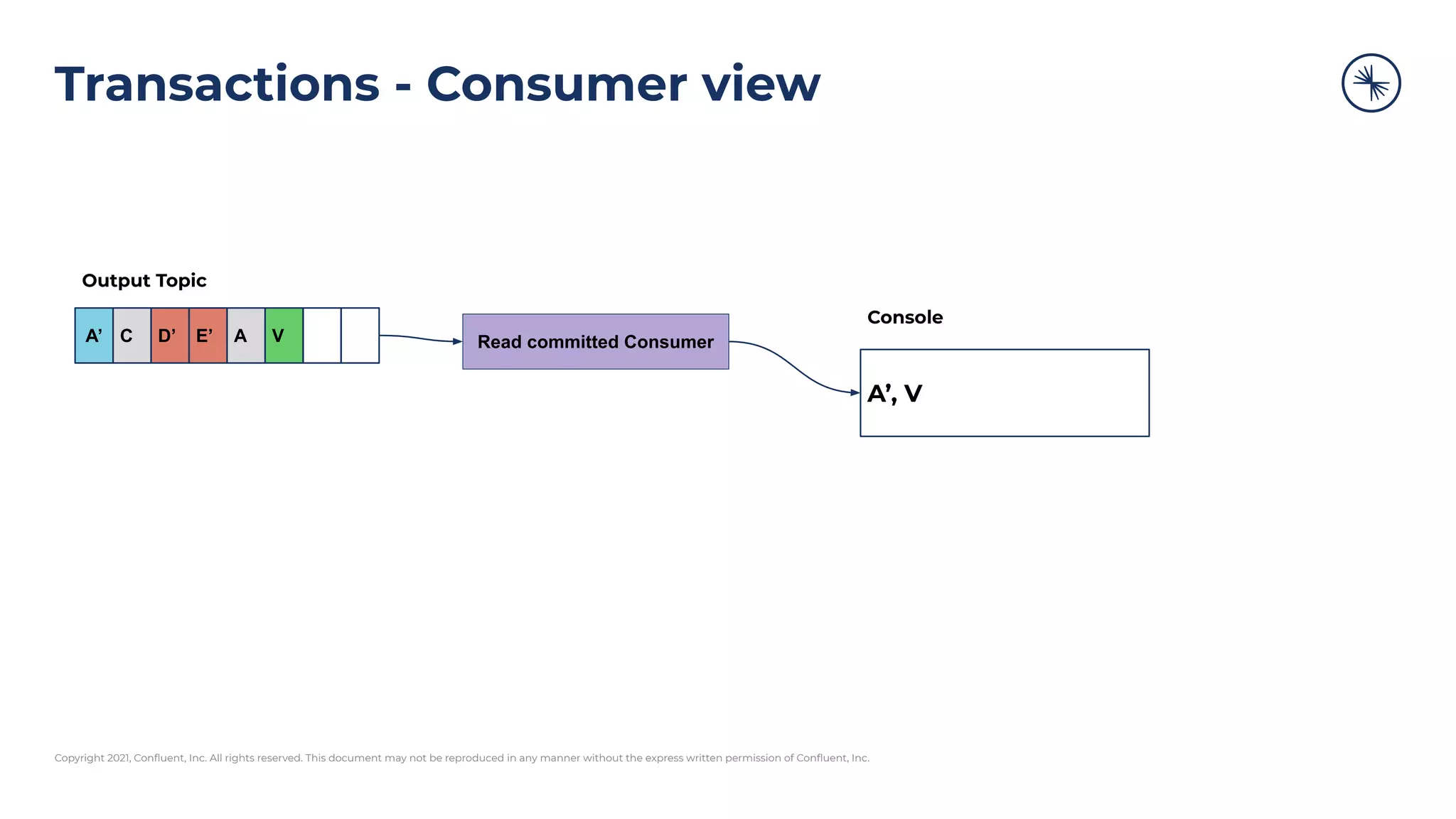

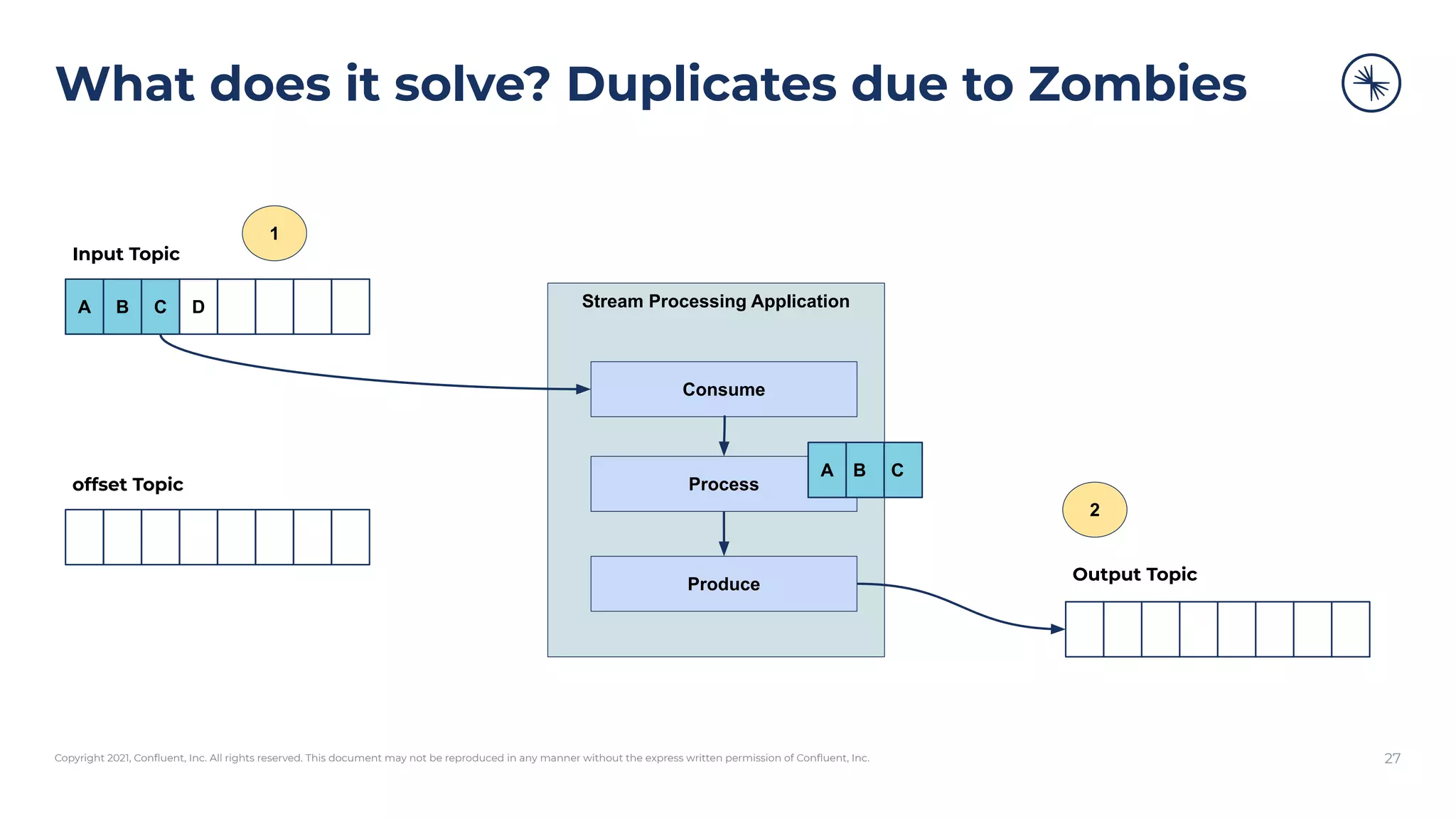

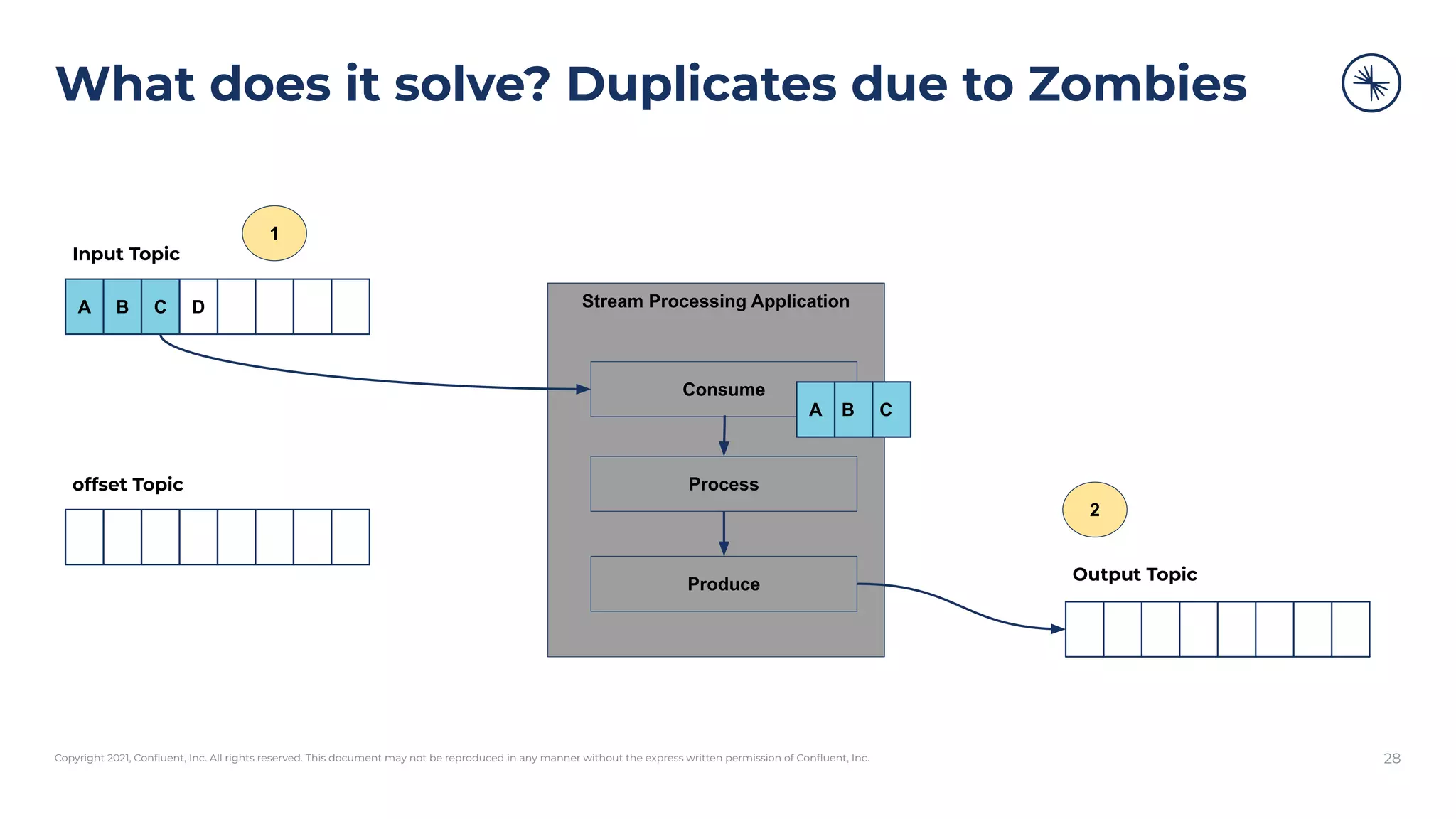

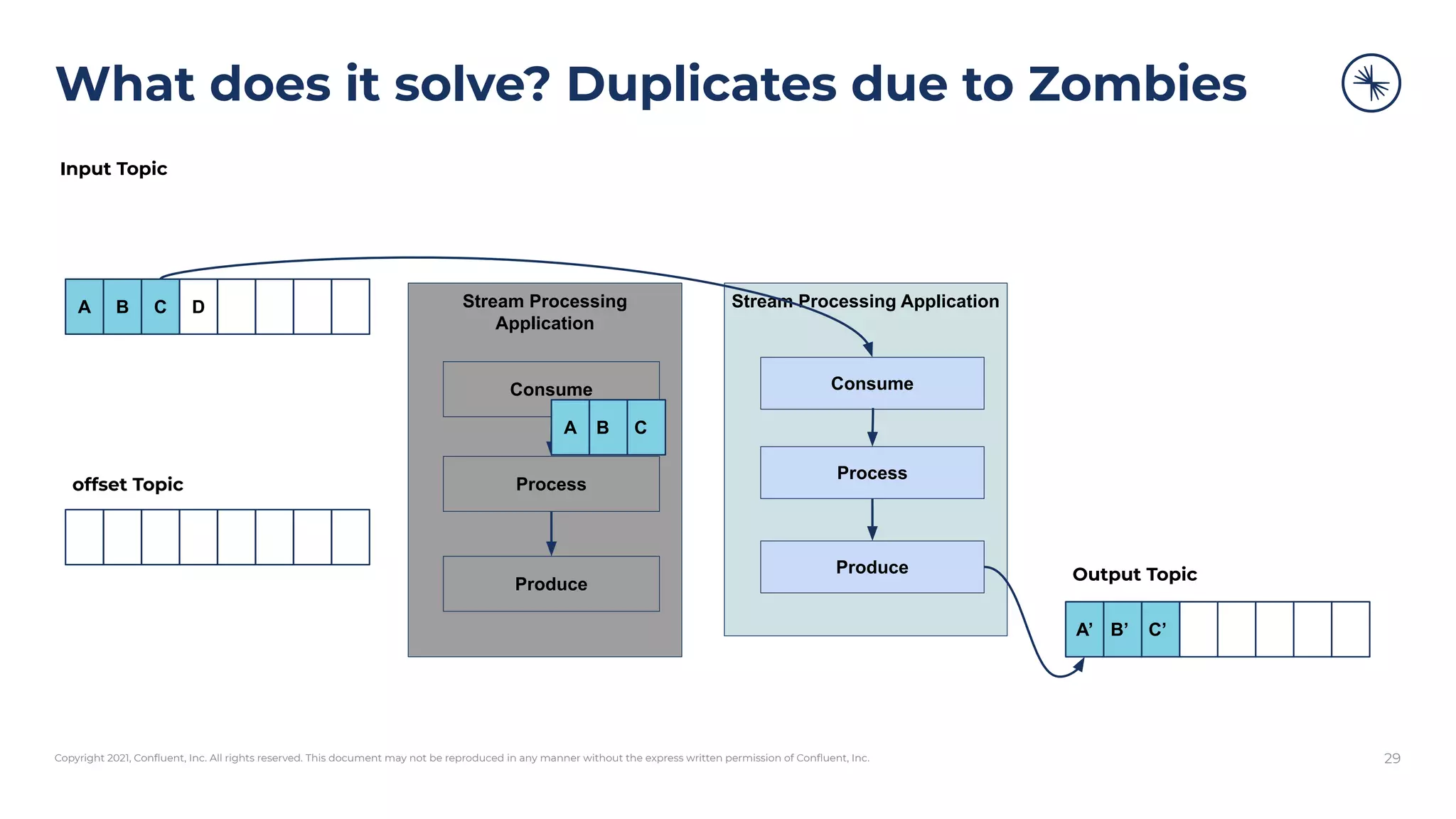

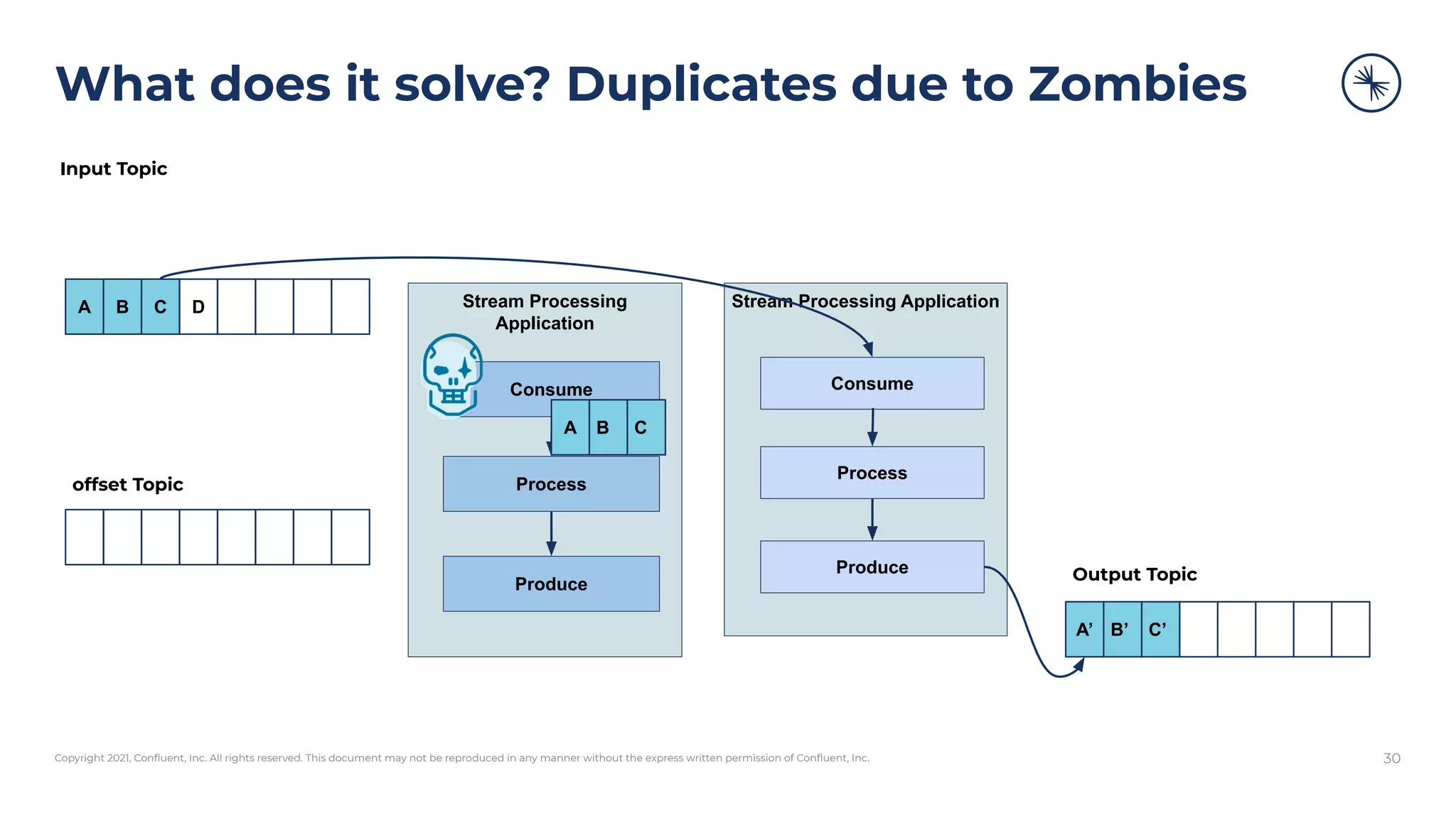

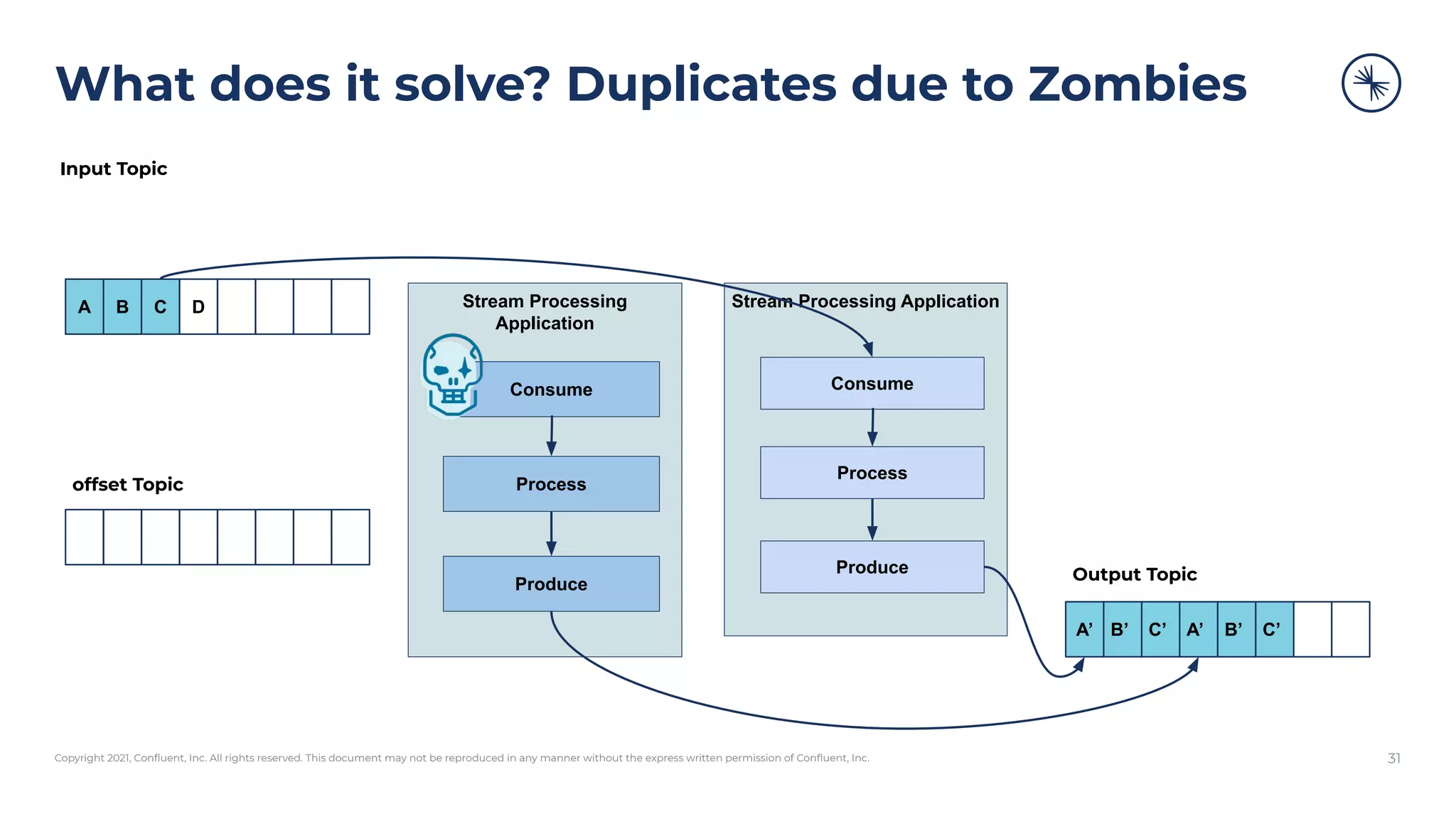

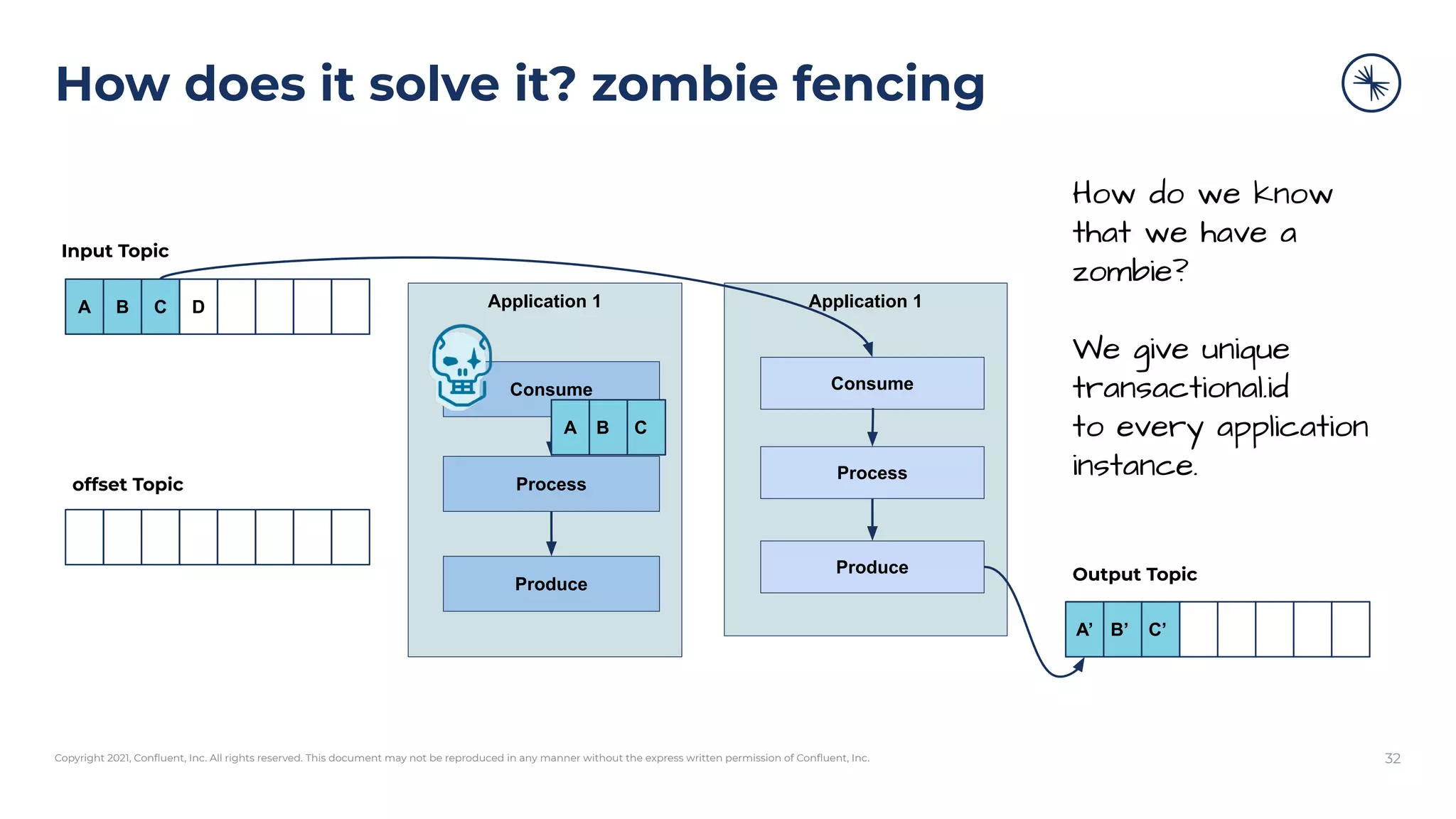

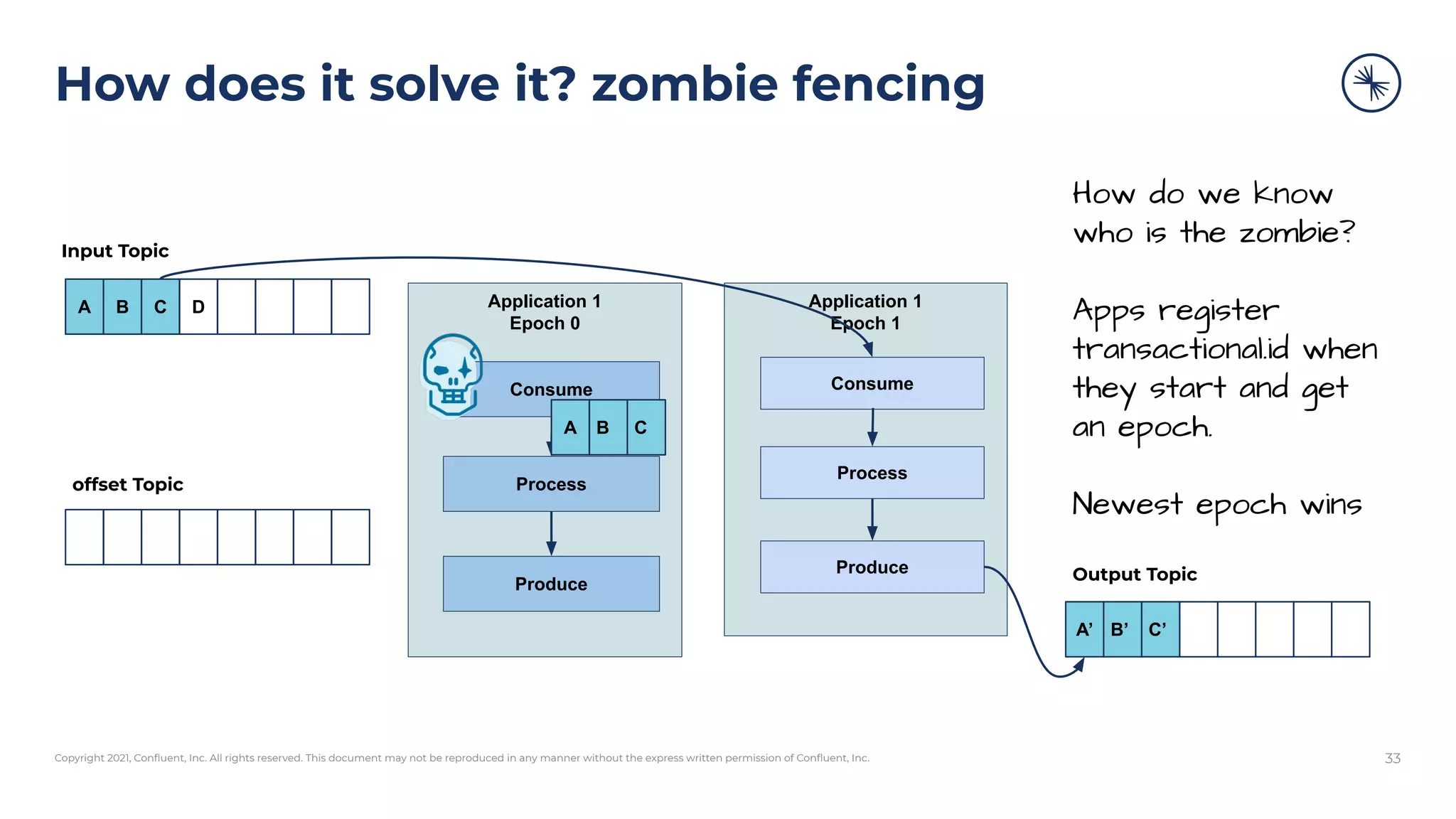

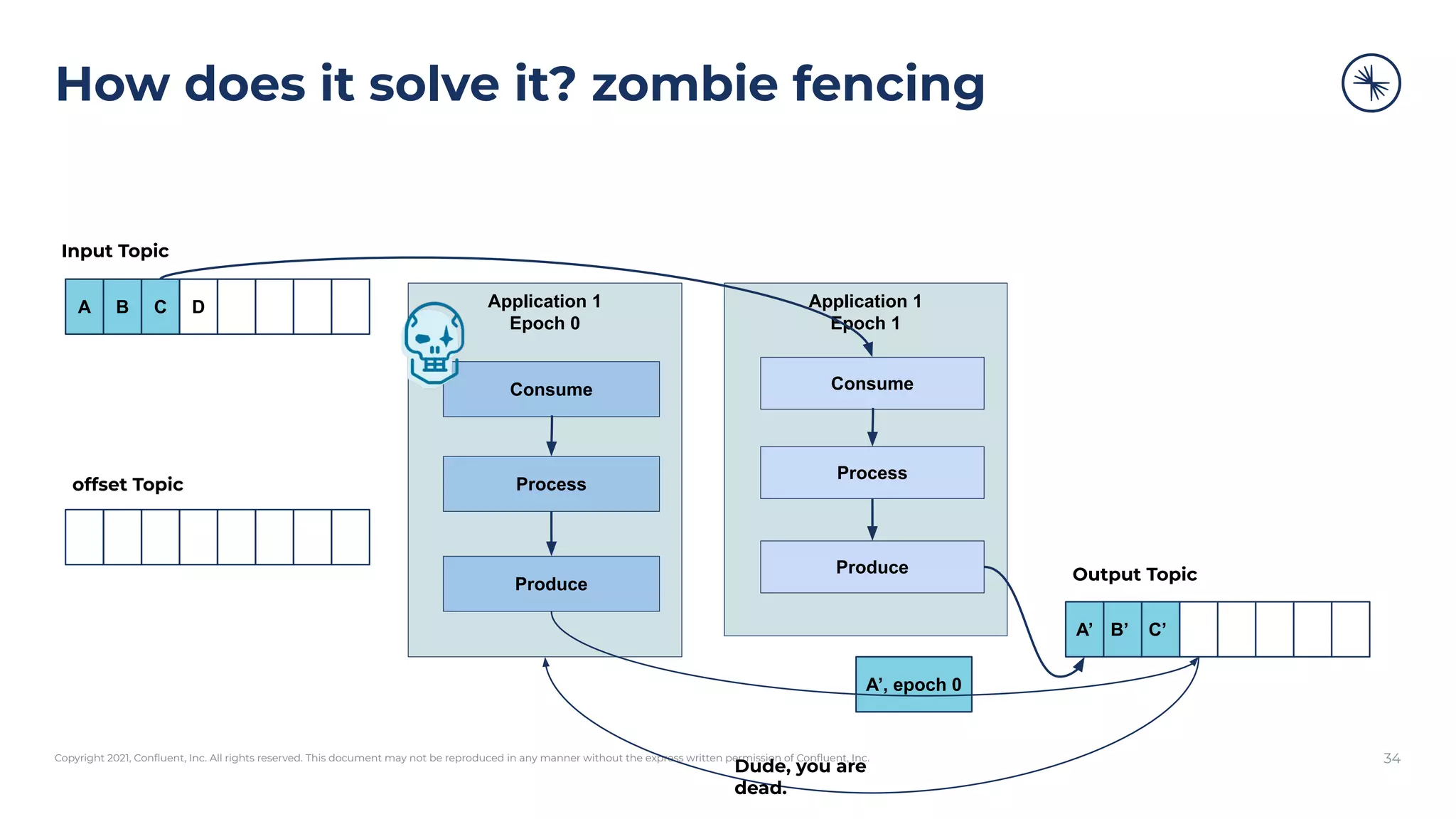

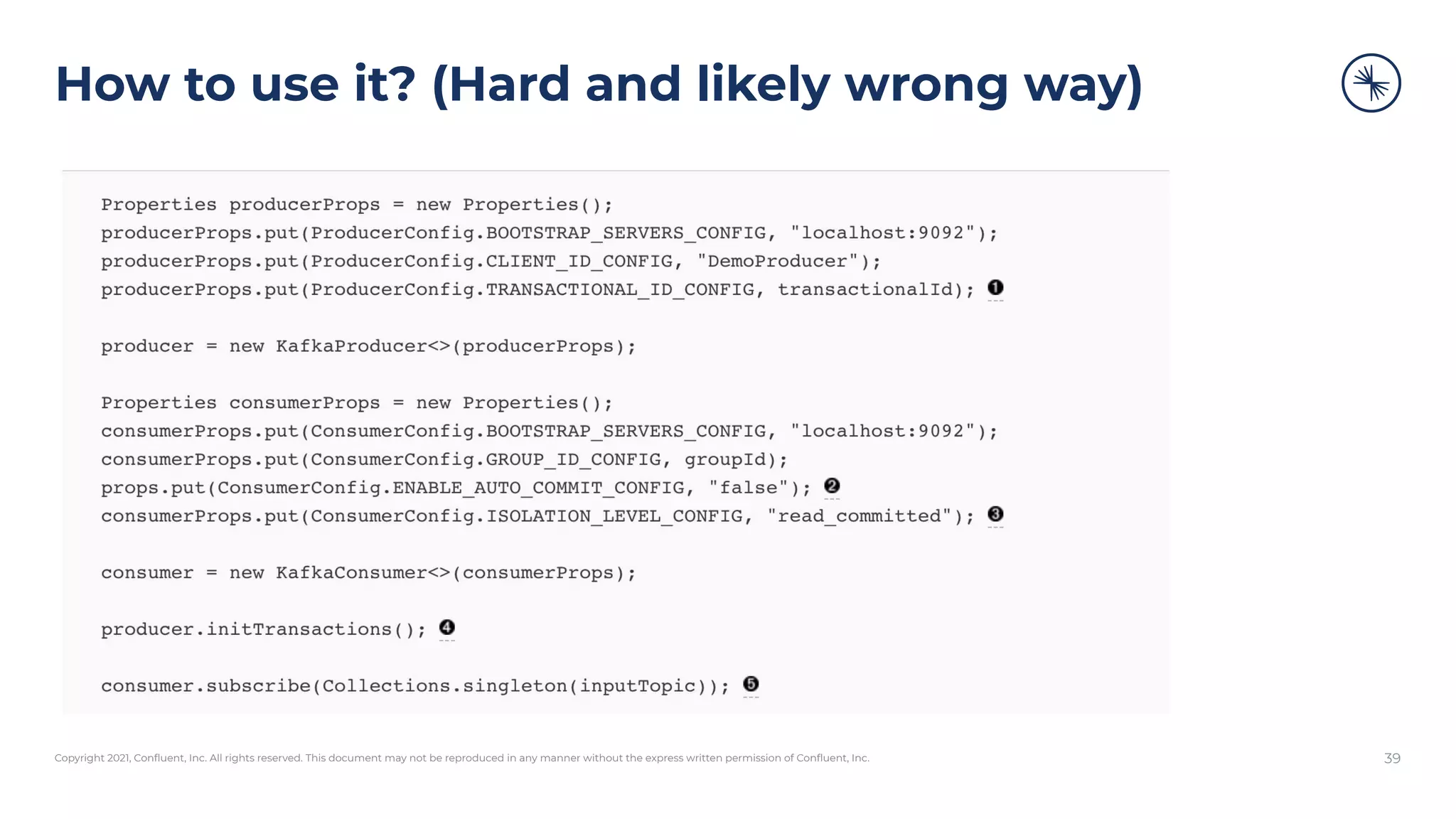

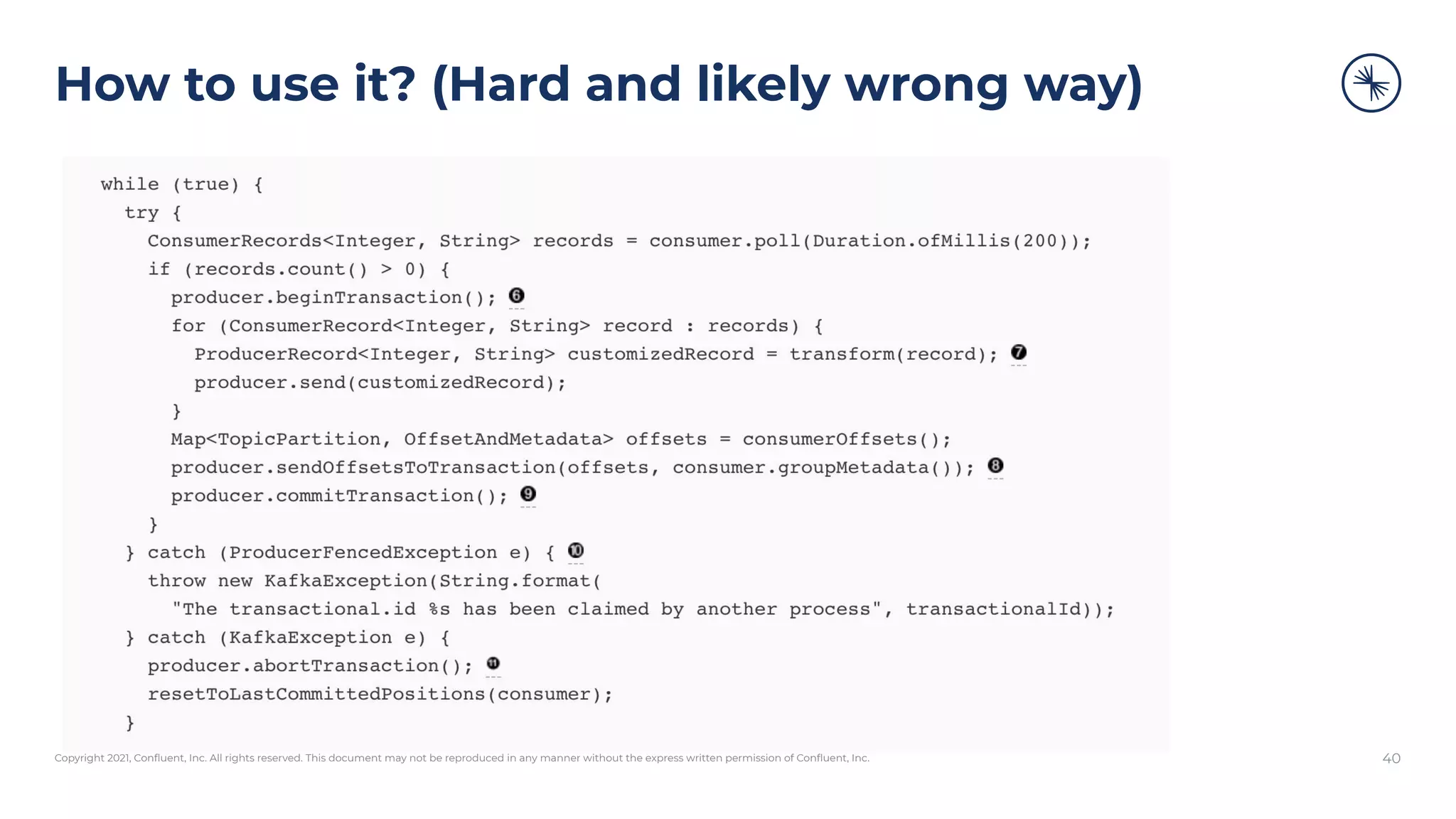

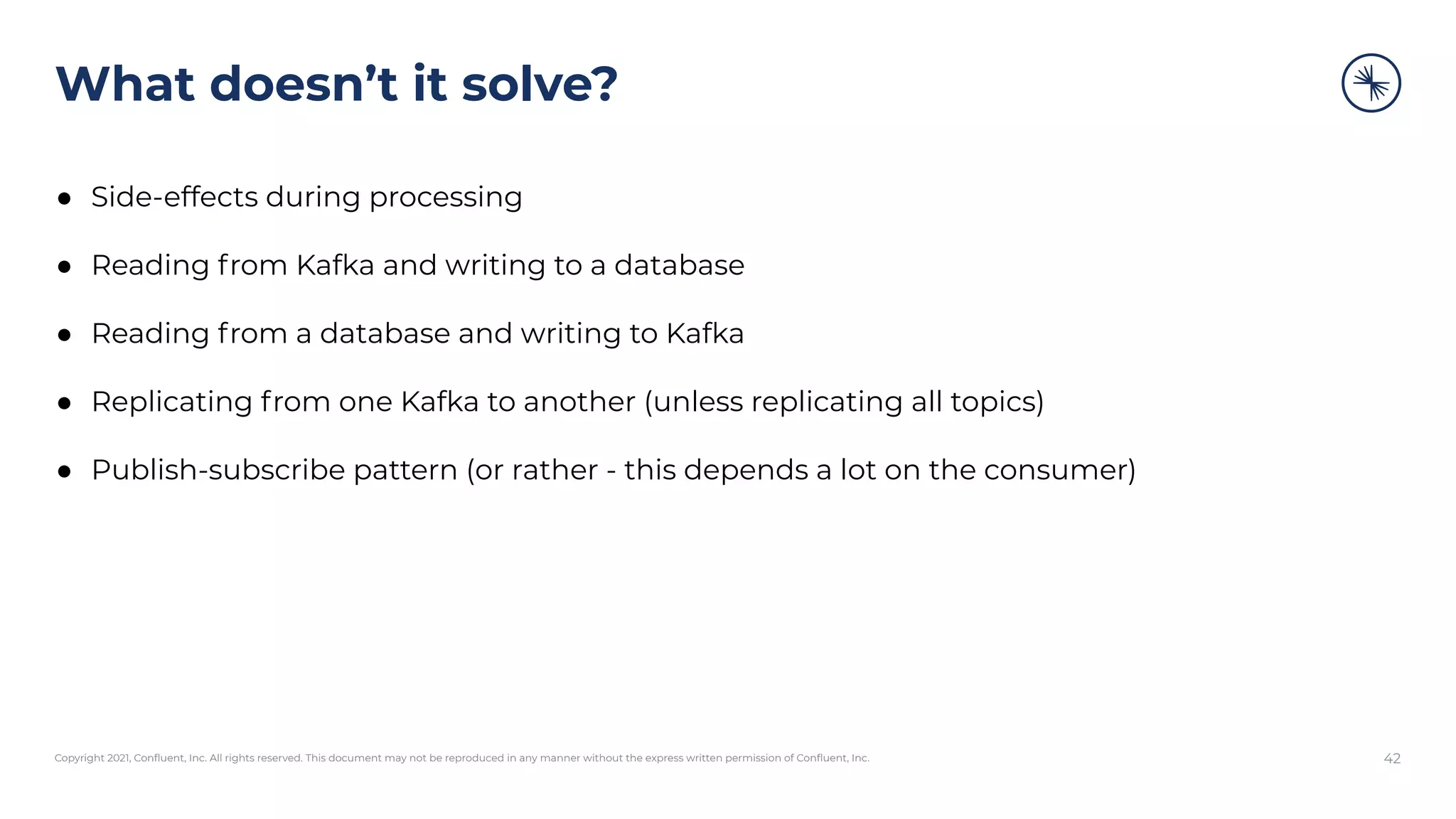

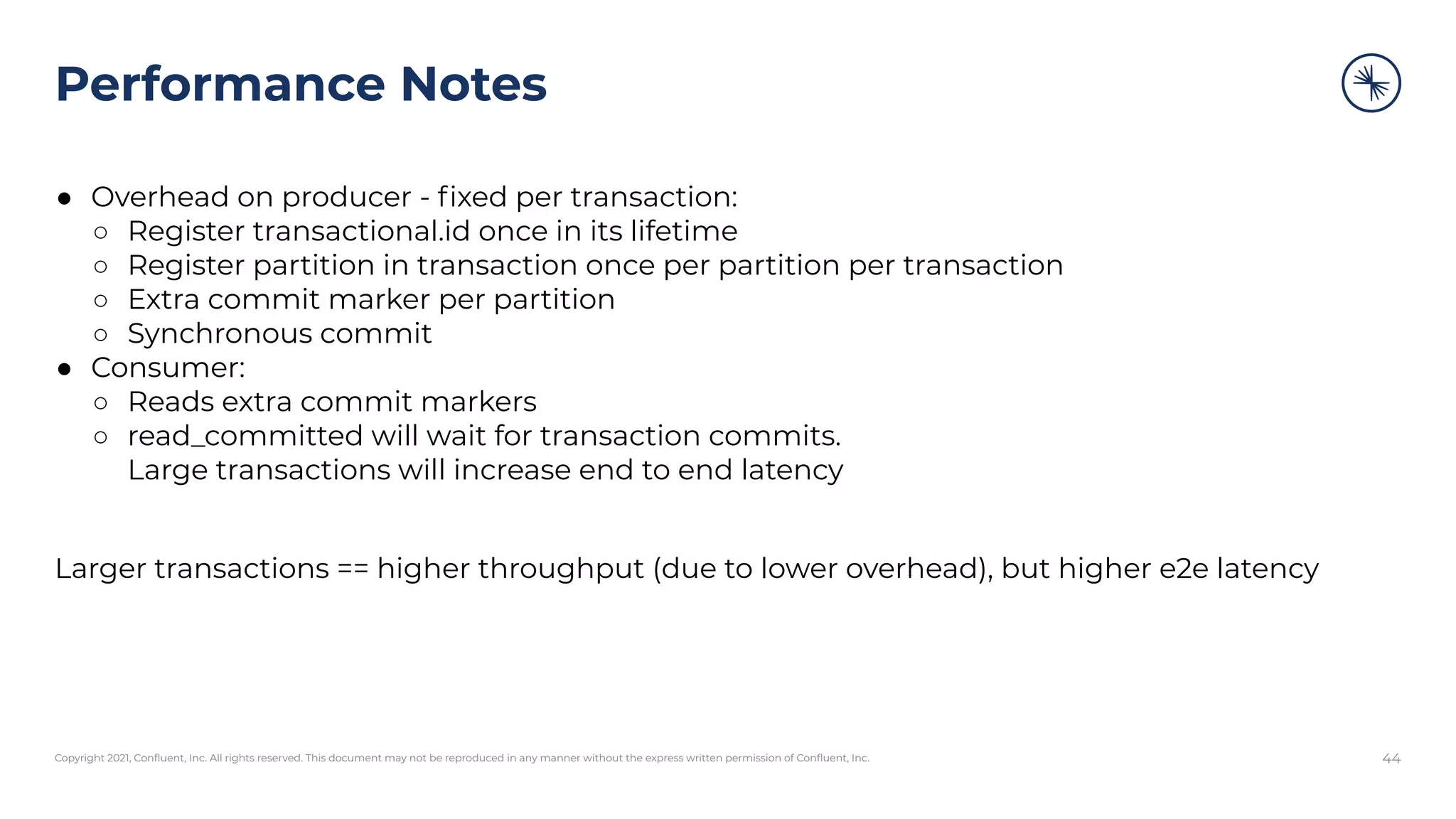

The document is a guide to implementing exactly-once semantics in Apache Kafka, covering idempotent producers and transactions. It explains how duplicates arise from producer retries, crashes, and "zombie" application instances, how to handle each case, and which configuration settings are needed to use these features correctly. It recommends using Kafka Streams with the `exactly_once` processing guarantee, and it cautions that some scenarios do not need this level of reliability.
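The two duplicate-prevention ideas summarized above can be illustrated with a toy model. This is a heavily simplified sketch, not Kafka's implementation. It assumes that the broker tracks, per producer ID, the producer's current epoch and the last sequence number it appended. Under that assumption, a retried record is dropped as a duplicate, and a write from an older epoch (a zombie) is rejected. In the real client, the first behaviour is enabled with `enable.idempotence=true`, and epoch fencing applies to producers that share a `transactional.id`. The names `ToyPartition`, `ProducerFencedError`, and `OutOfOrderSequenceError` are hypothetical and only loosely mirror the Java client's exceptions.

```python
from dataclasses import dataclass, field


class ProducerFencedError(Exception):
    """Hypothetical: write rejected because a newer producer epoch exists (zombie)."""


class OutOfOrderSequenceError(Exception):
    """Hypothetical: write rejected because a sequence number was skipped."""


@dataclass
class ToyPartition:
    """Hypothetical, simplified model of one partition's log on a broker."""
    log: list = field(default_factory=list)
    # producer_id -> (current epoch, last sequence number appended in that epoch)
    state: dict = field(default_factory=dict)

    def append(self, producer_id: int, epoch: int, seq: int, record: str) -> bool:
        """Append a record; return False if it was a duplicate retry and was dropped."""
        last_epoch, last_seq = self.state.get(producer_id, (epoch, -1))
        if epoch < last_epoch:
            # An older instance with the same identity is still writing: fence it.
            raise ProducerFencedError(f"epoch {epoch} < current epoch {last_epoch}")
        if epoch > last_epoch:
            last_seq = -1  # a new epoch restarts sequence numbering in this toy model
        if seq <= last_seq:
            return False  # already appended: acknowledge the retry without re-appending
        if seq != last_seq + 1:
            raise OutOfOrderSequenceError(f"expected {last_seq + 1}, got {seq}")
        self.log.append(record)
        self.state[producer_id] = (epoch, seq)
        return True


if __name__ == "__main__":
    p = ToyPartition()
    # Producer 7 (epoch 0) sends two records; the second is retried after a lost ack.
    assert p.append(7, 0, 0, "a") is True
    assert p.append(7, 0, 1, "b") is True
    assert p.append(7, 0, 1, "b") is False  # the retry is deduplicated
    # A restarted instance with the same identity is assigned epoch 1 ...
    assert p.append(7, 1, 0, "c") is True
    # ... so the old zombie instance, still on epoch 0, is fenced off.
    try:
        p.append(7, 0, 2, "zombie")
    except ProducerFencedError as exc:
        print("fenced:", exc)
    print(p.log)  # ['a', 'b', 'c']
```

The model omits several parts of the real system: the transaction coordinator, commit and abort markers, consumers reading with `isolation.level=read_committed`, and the broker's limited window of remembered batches. It only illustrates why sequence numbers remove retry duplicates and why epochs stop zombies.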