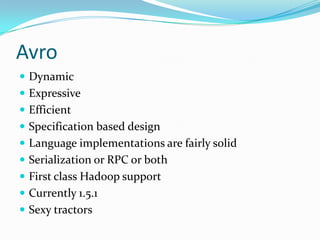

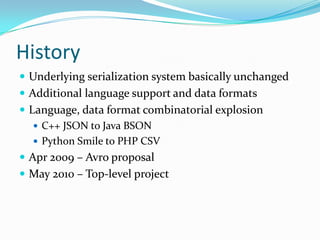

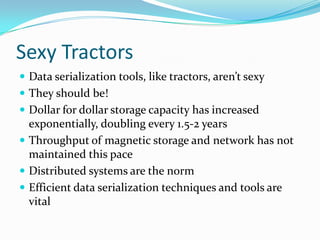

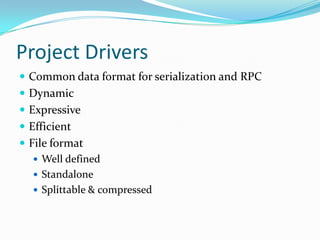

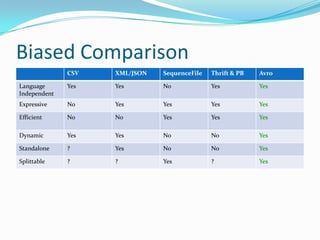

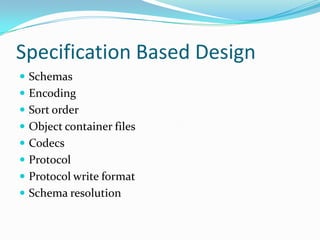

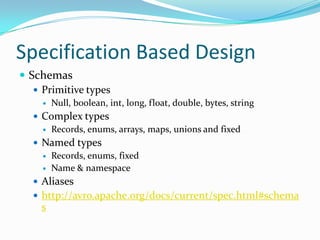

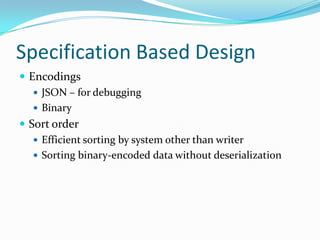

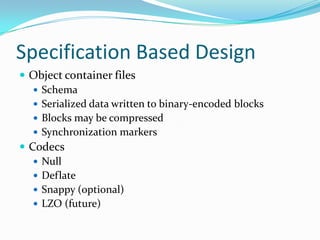

Avro is a data serialization system that provides dynamic typing, a schema-based design, and efficient encoding. It supports serialization, RPC, and has implementations in many languages with first-class support for Hadoop. The project aims to make data serialization tools more useful and "sexy" for distributed systems.

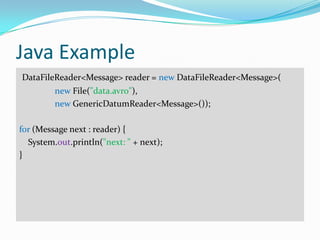

![Schema Example

log-message.avpr

{

"namespace": "com.emoney",

"name": "LogMessage",

"type": "record",

"fields": [

{"name": "level", "type": "string", "comment" : "this is ignored"},

{"name": "message", "type": "string", "description" : "this is the message"},

{"name": "dateTime", "type": "long"},

{"name": "exceptionMessage", "type": ["null", "string"]}

]

}](https://image.slidesharecdn.com/avro-public-111028124708-phpapp01/85/Avro-12-320.jpg)

![Protocol

{

"namespace": "com.acme",

"protocol": "HelloWorld",

"doc": "Protocol Greetings",

"types": [

{"name": "Greeting", "type": "record", "fields": [ {"name": "message", "type": "string"}]},

{"name": "Curse", "type": "error", "fields": [ {"name": "message", "type": "string"}]} ],

"messages": {

"hello": {

"doc": "Say hello.",

"request": [{"name": "greeting", "type": "Greeting" }],

"response": "Greeting",

"errors": ["Curse"]

}

}

}](https://image.slidesharecdn.com/avro-public-111028124708-phpapp01/85/Avro-16-320.jpg)