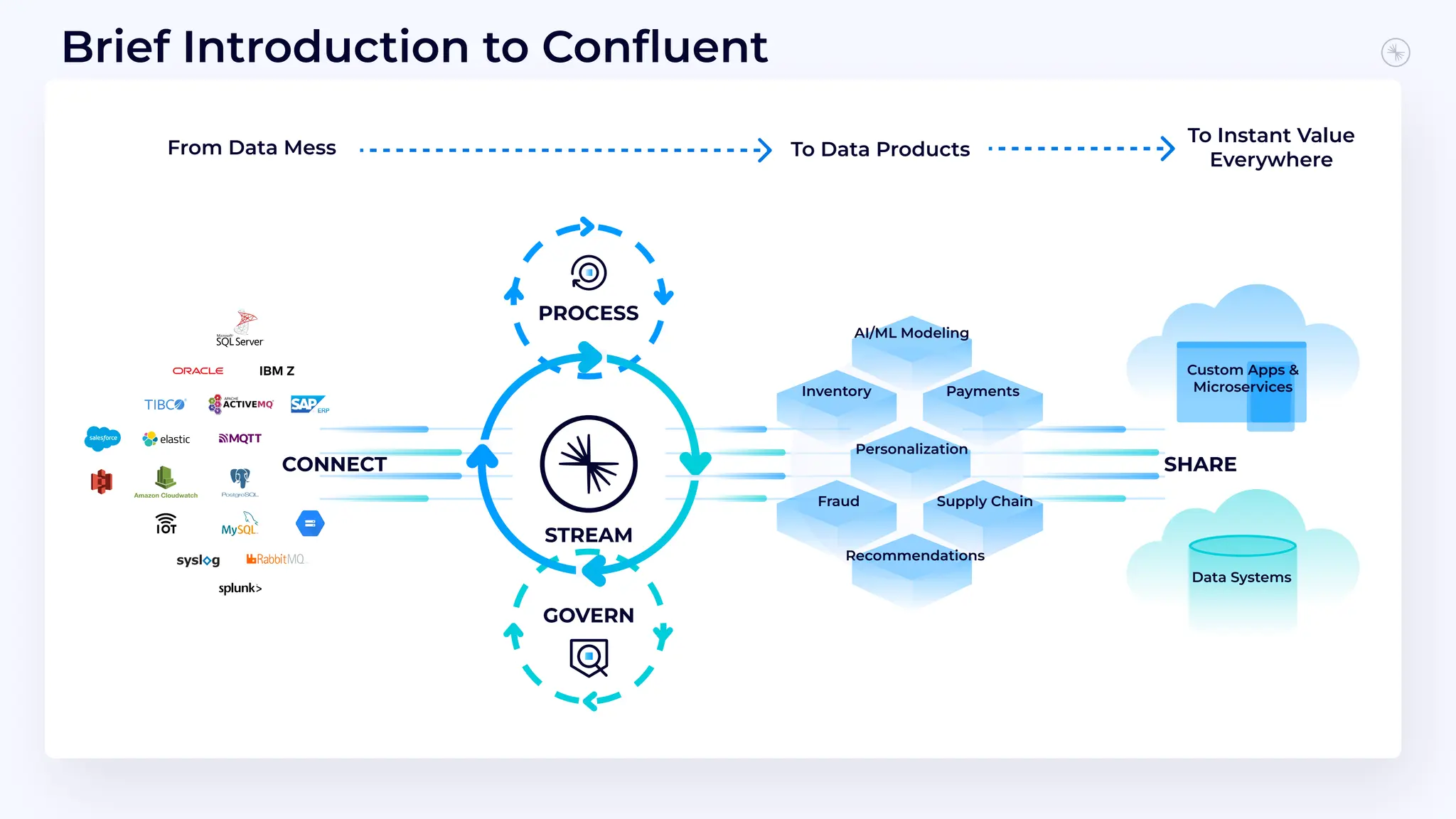

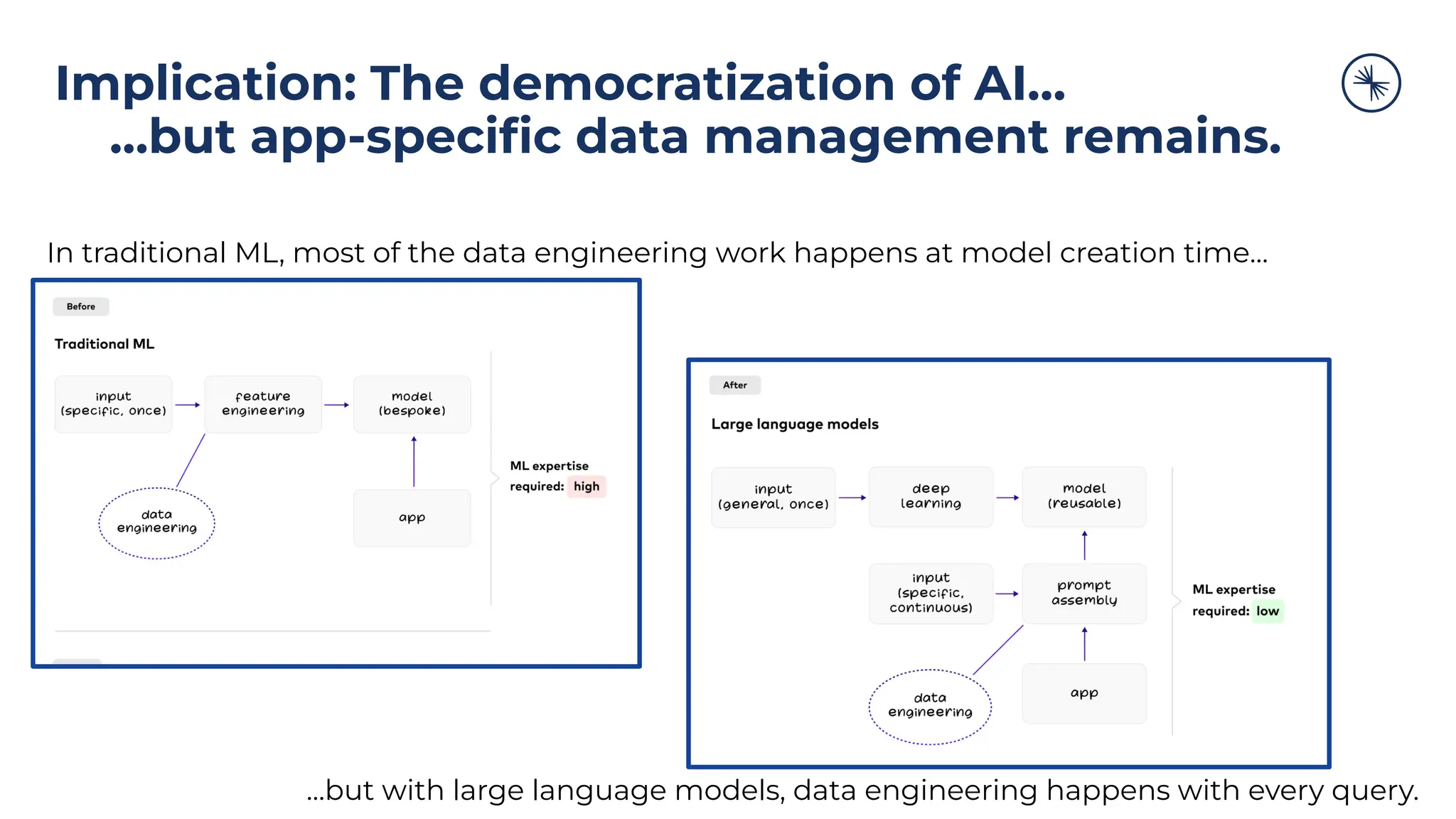

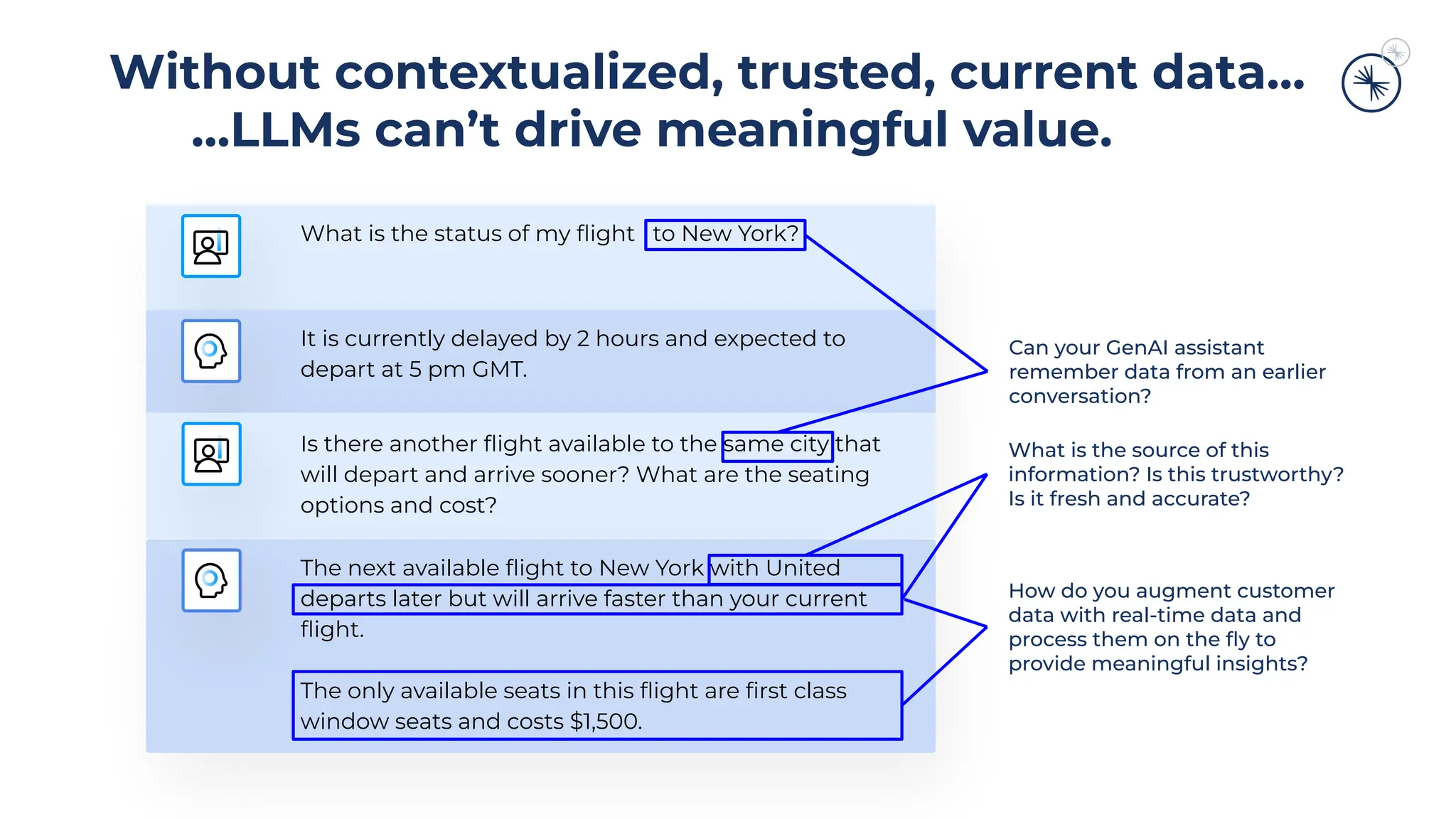

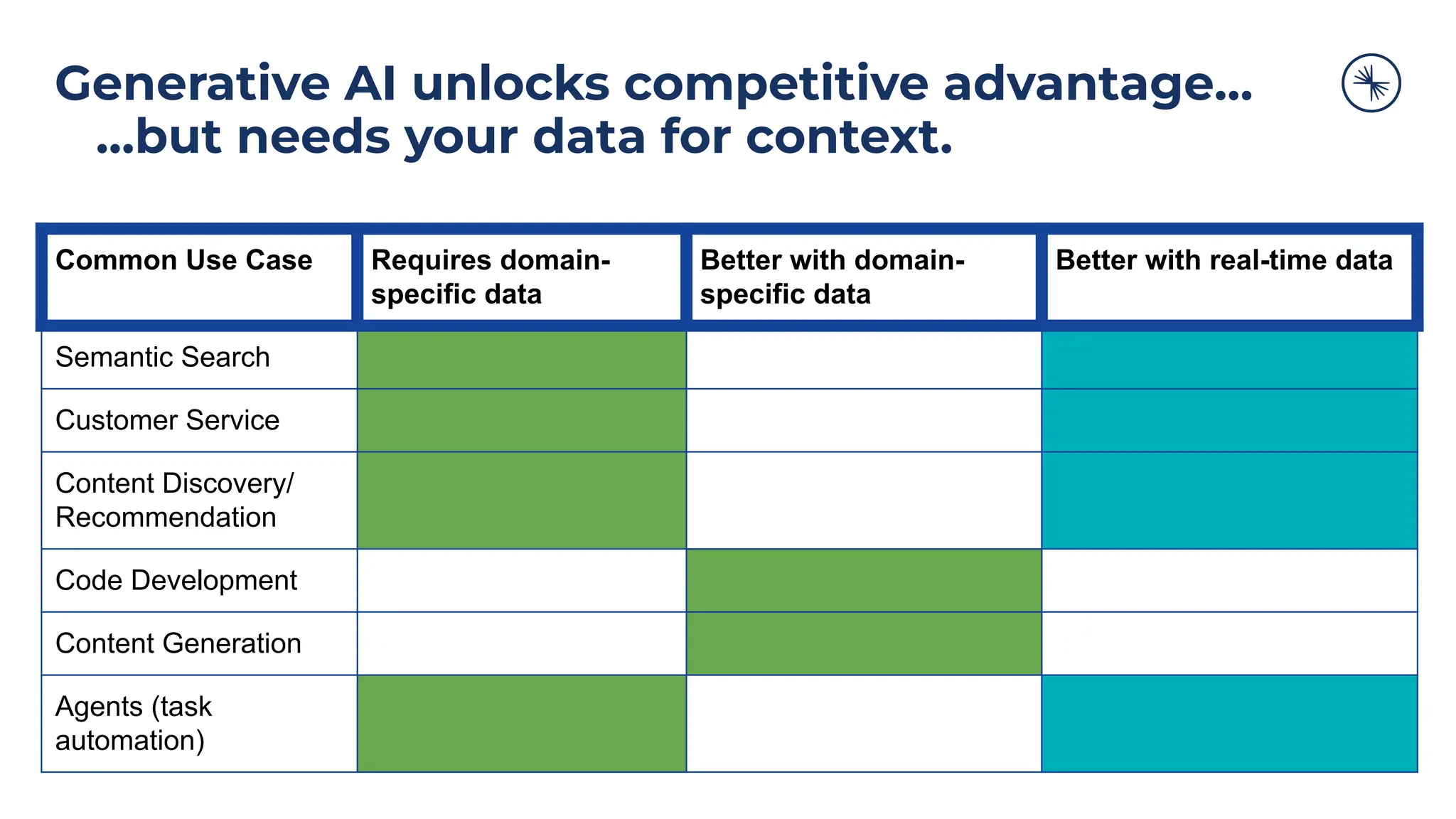

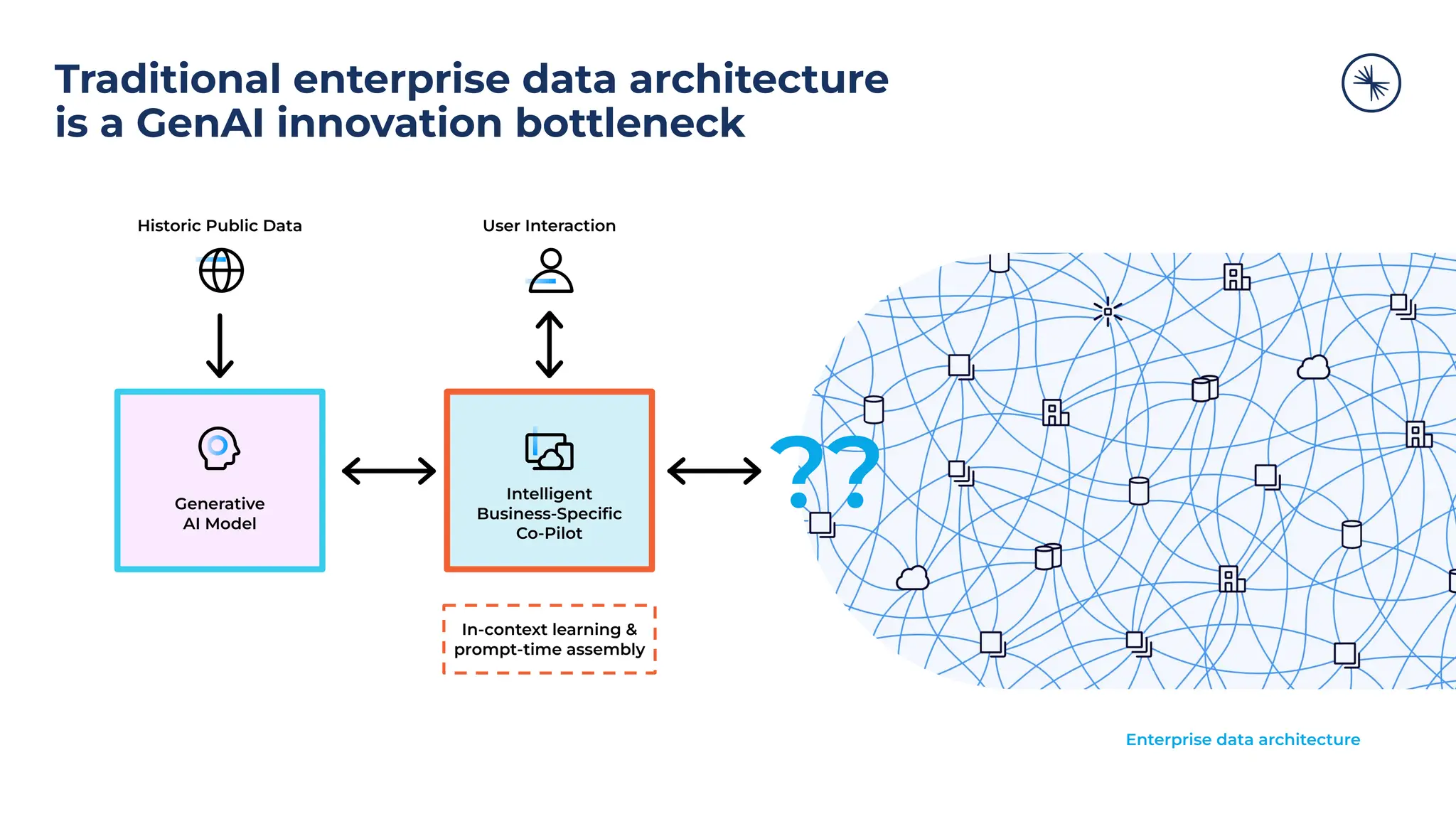

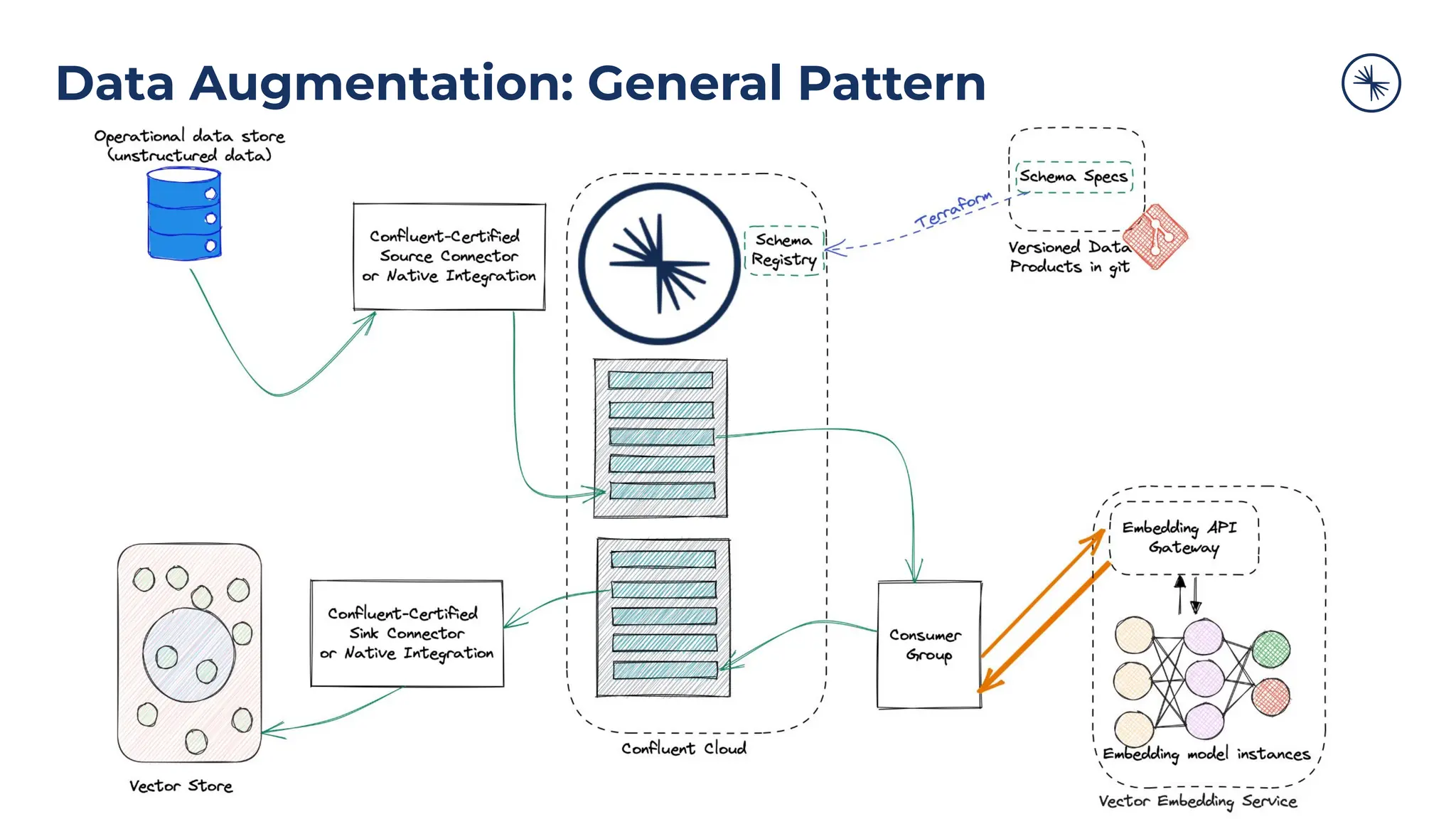

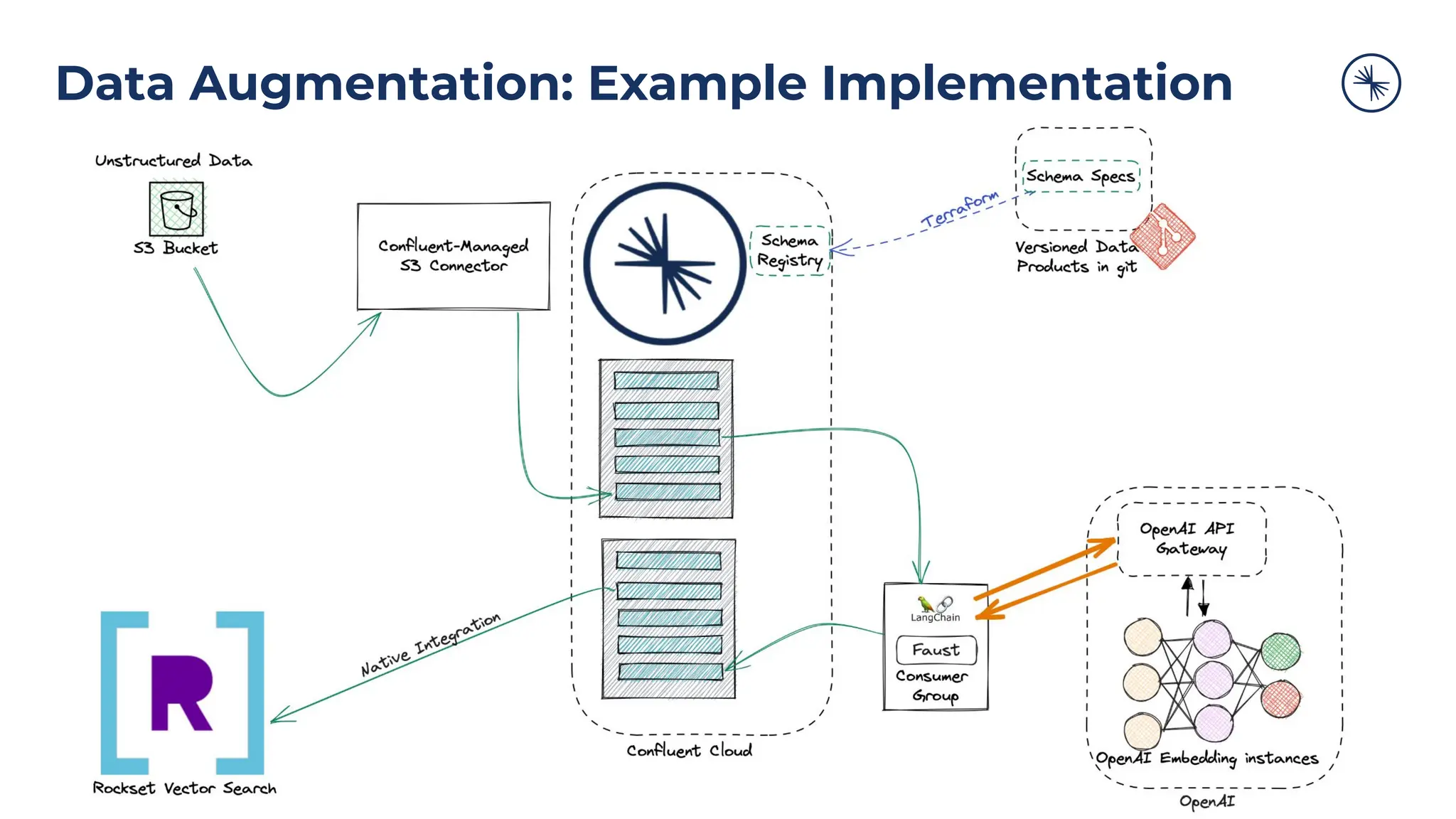

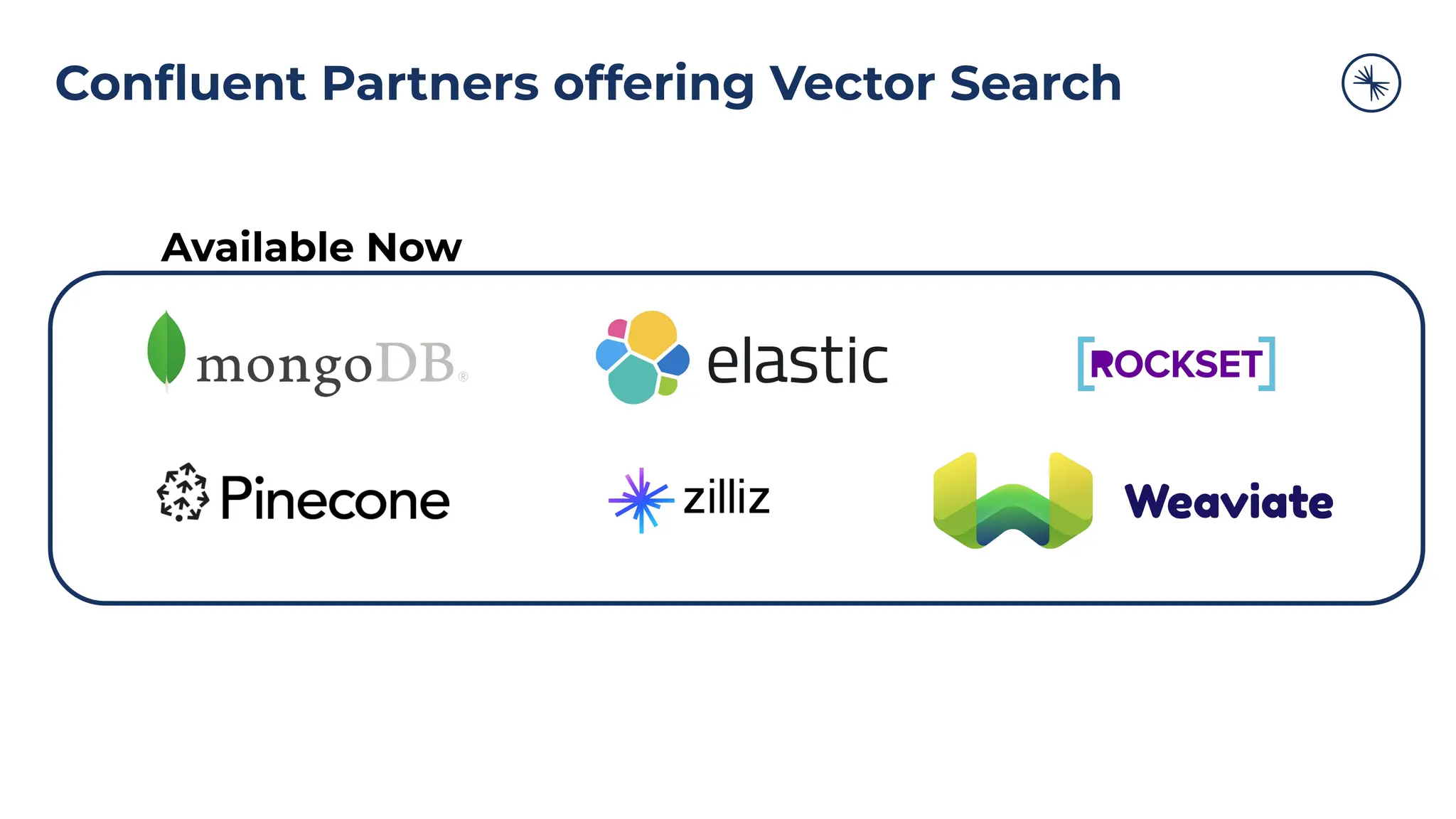

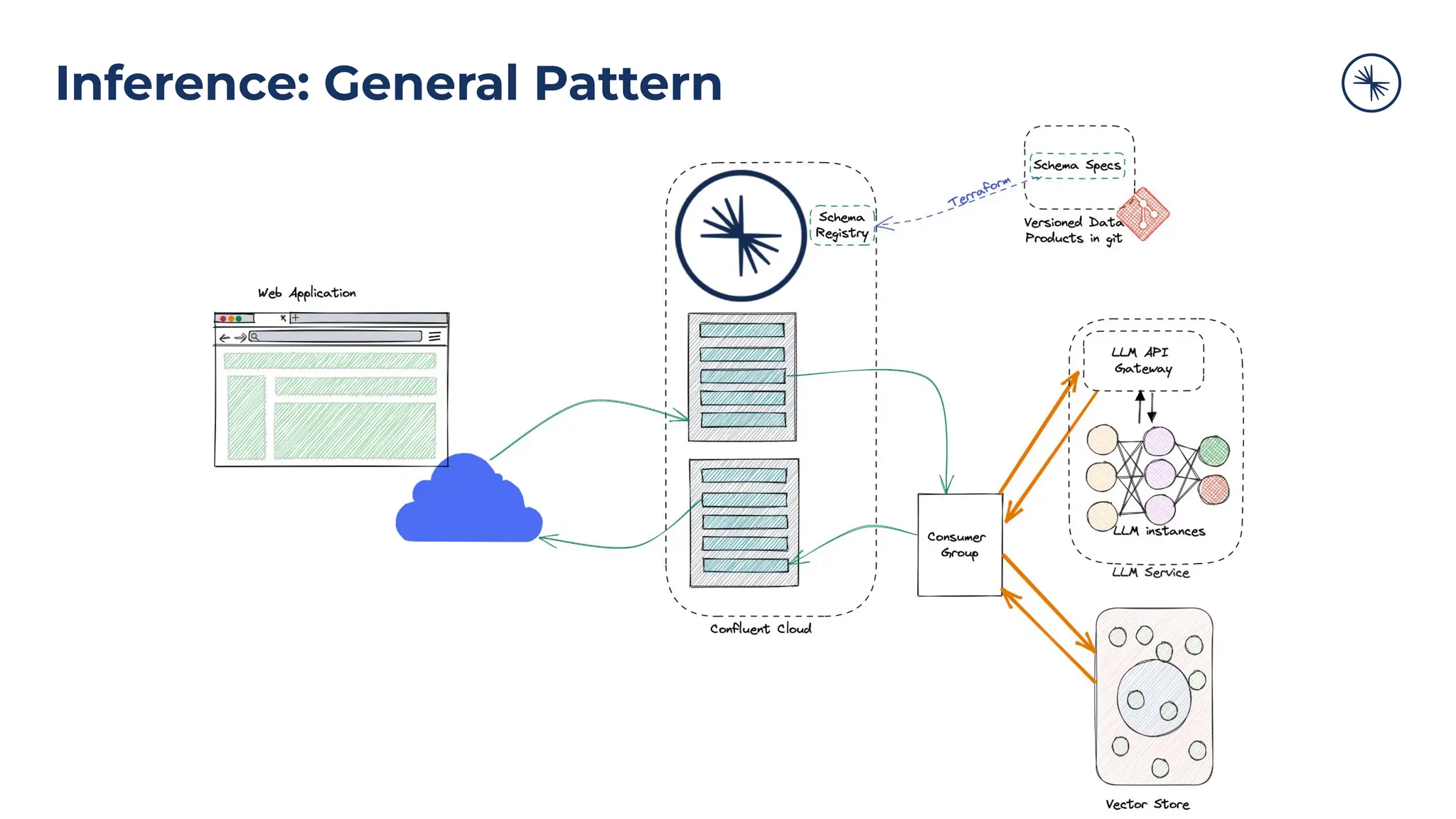

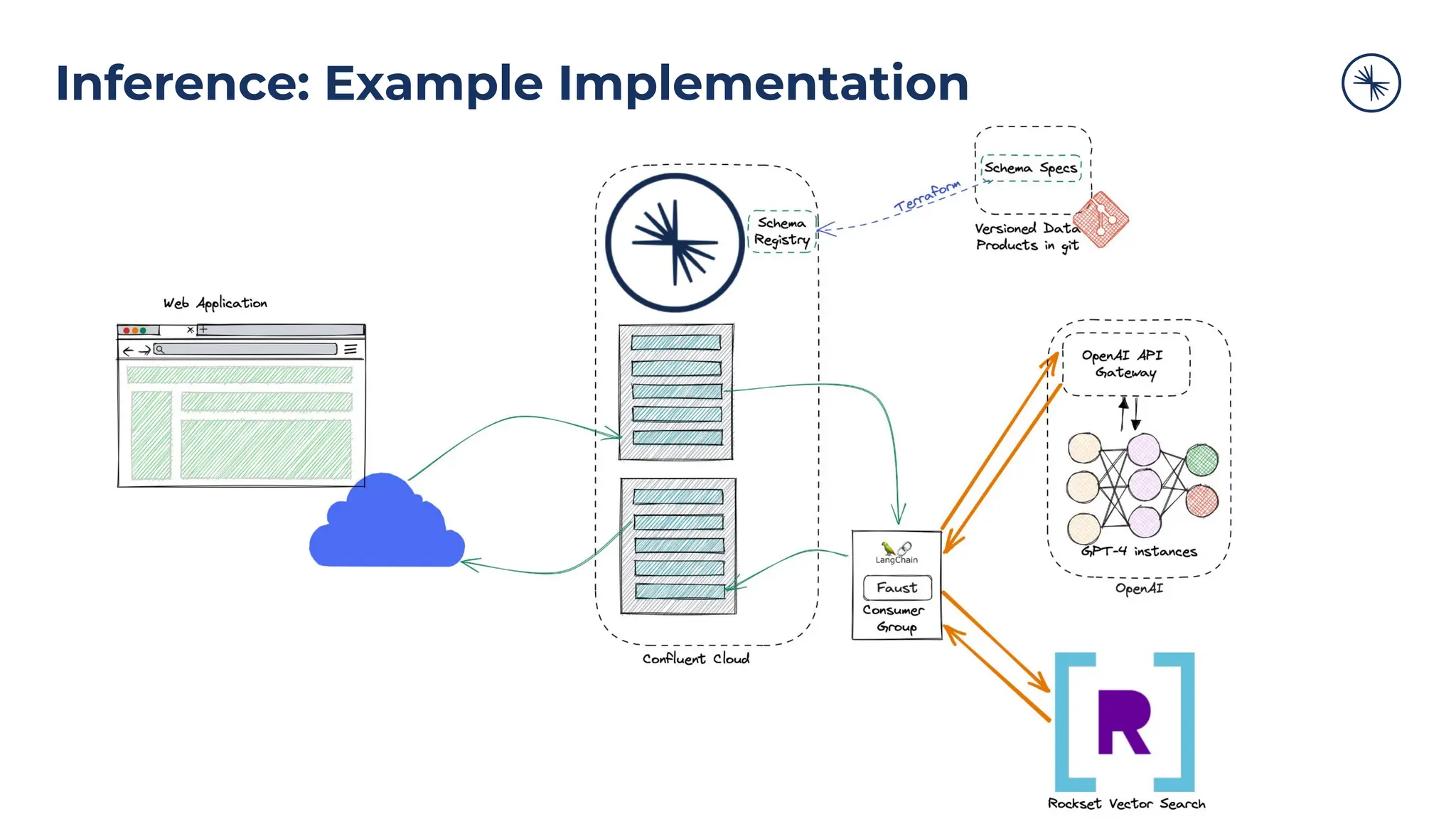

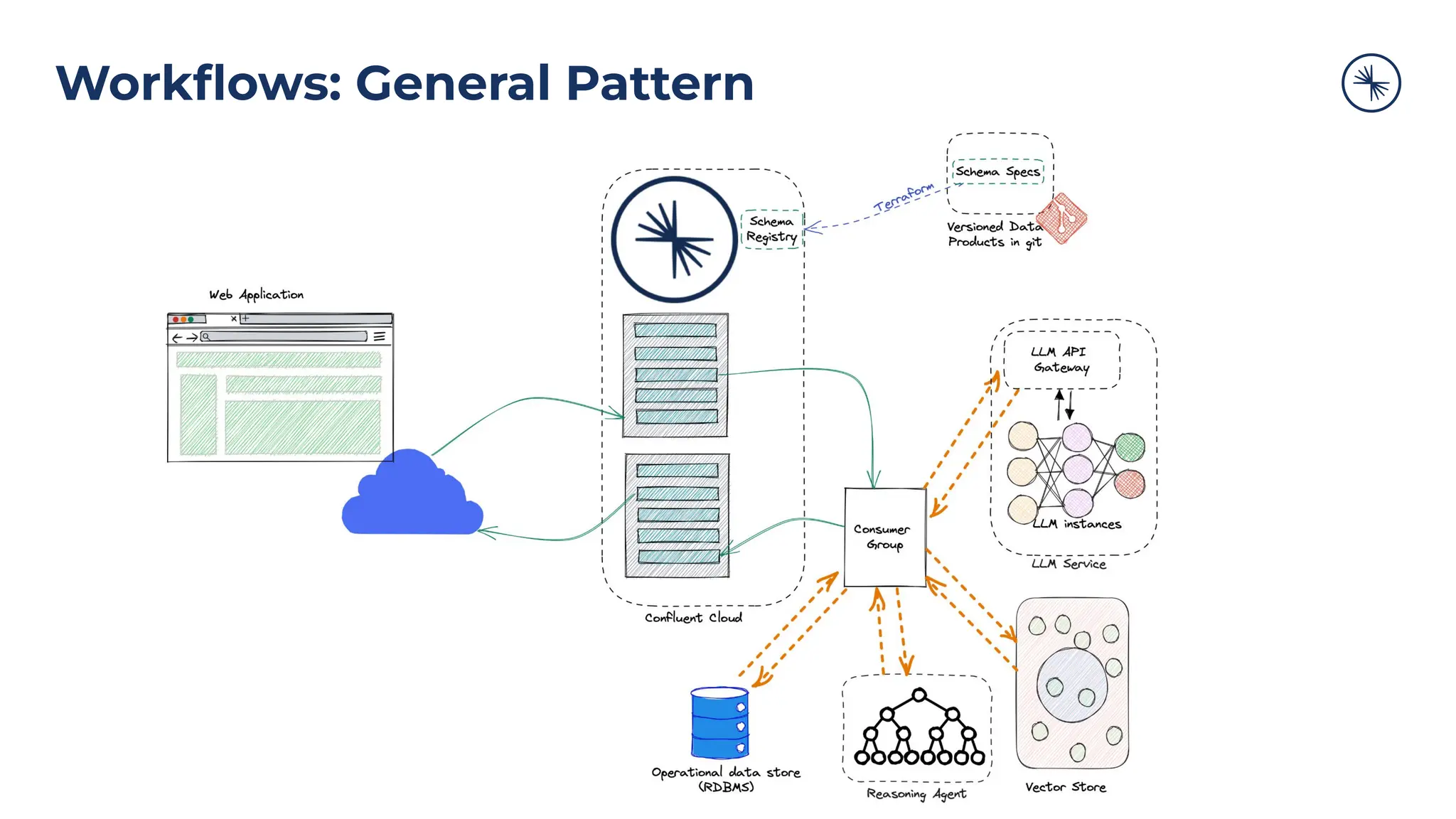

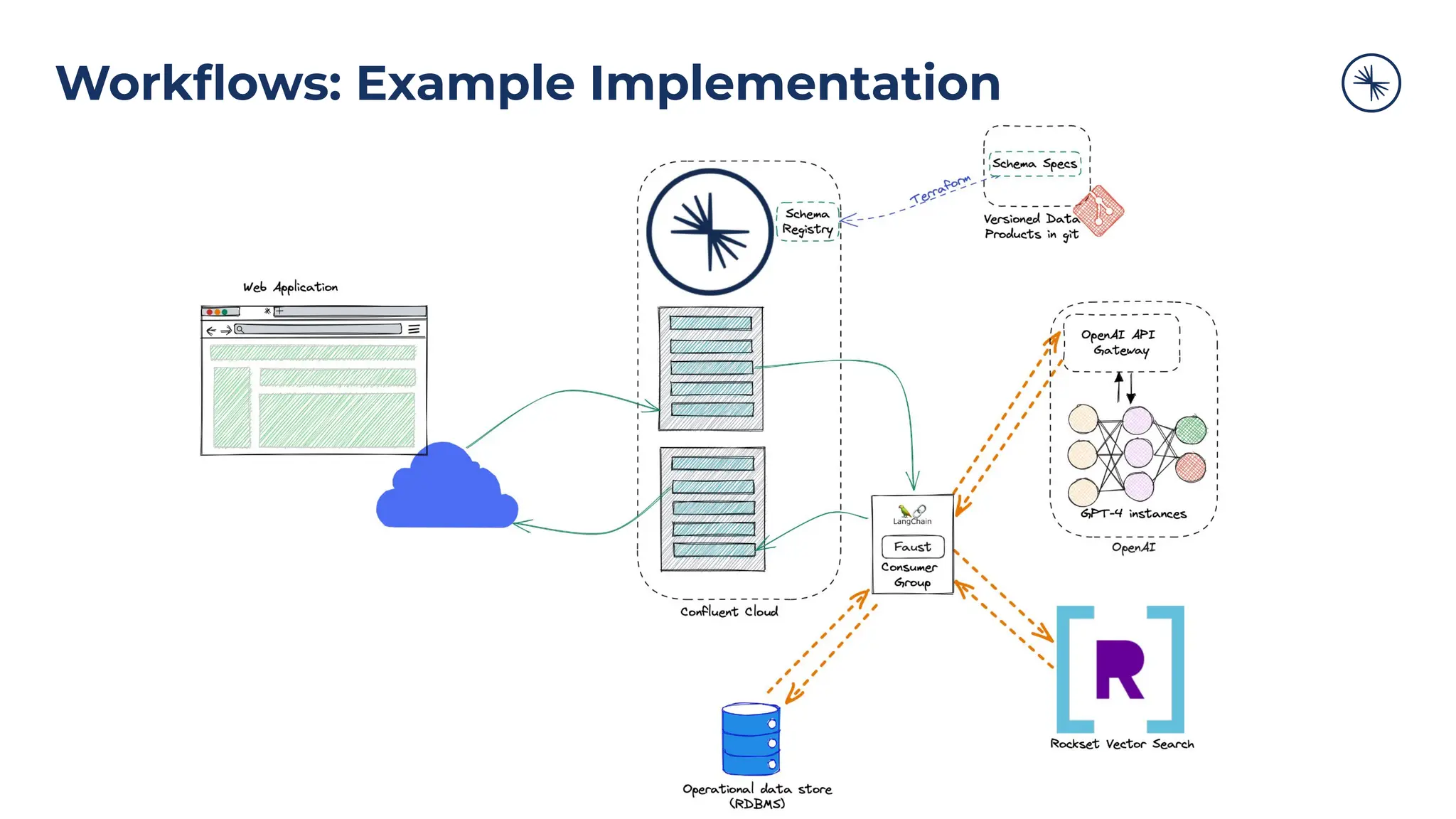

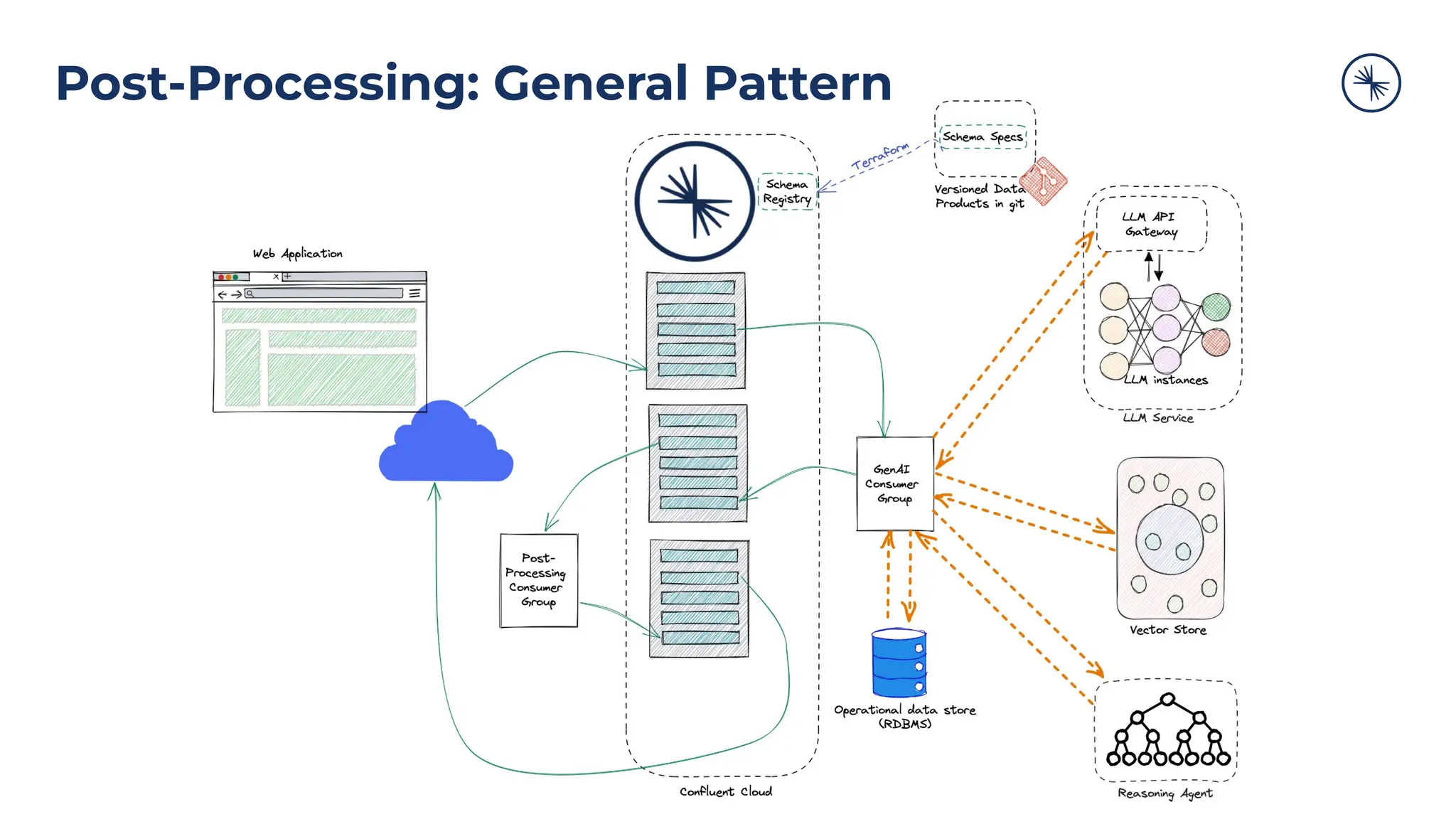

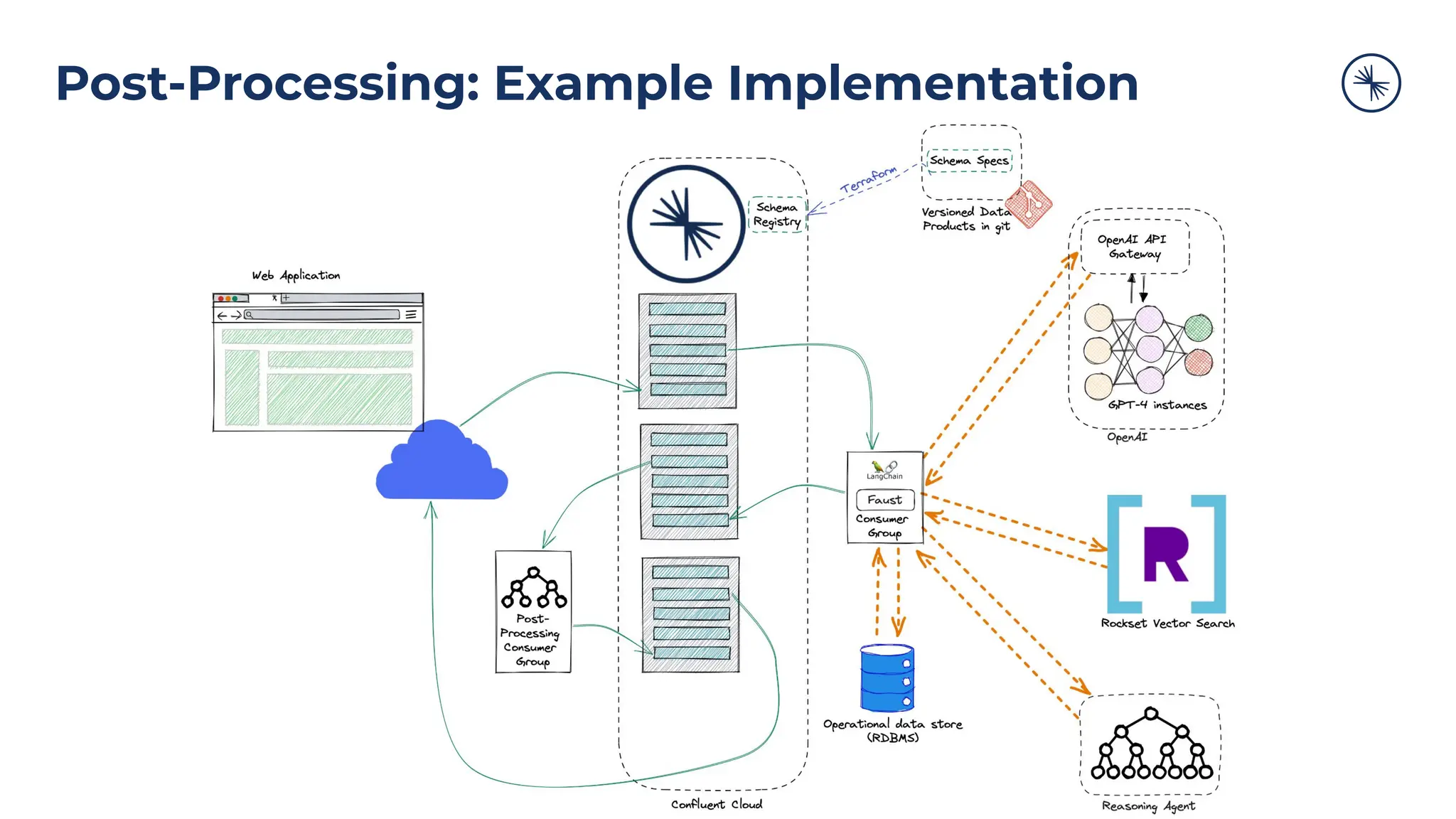

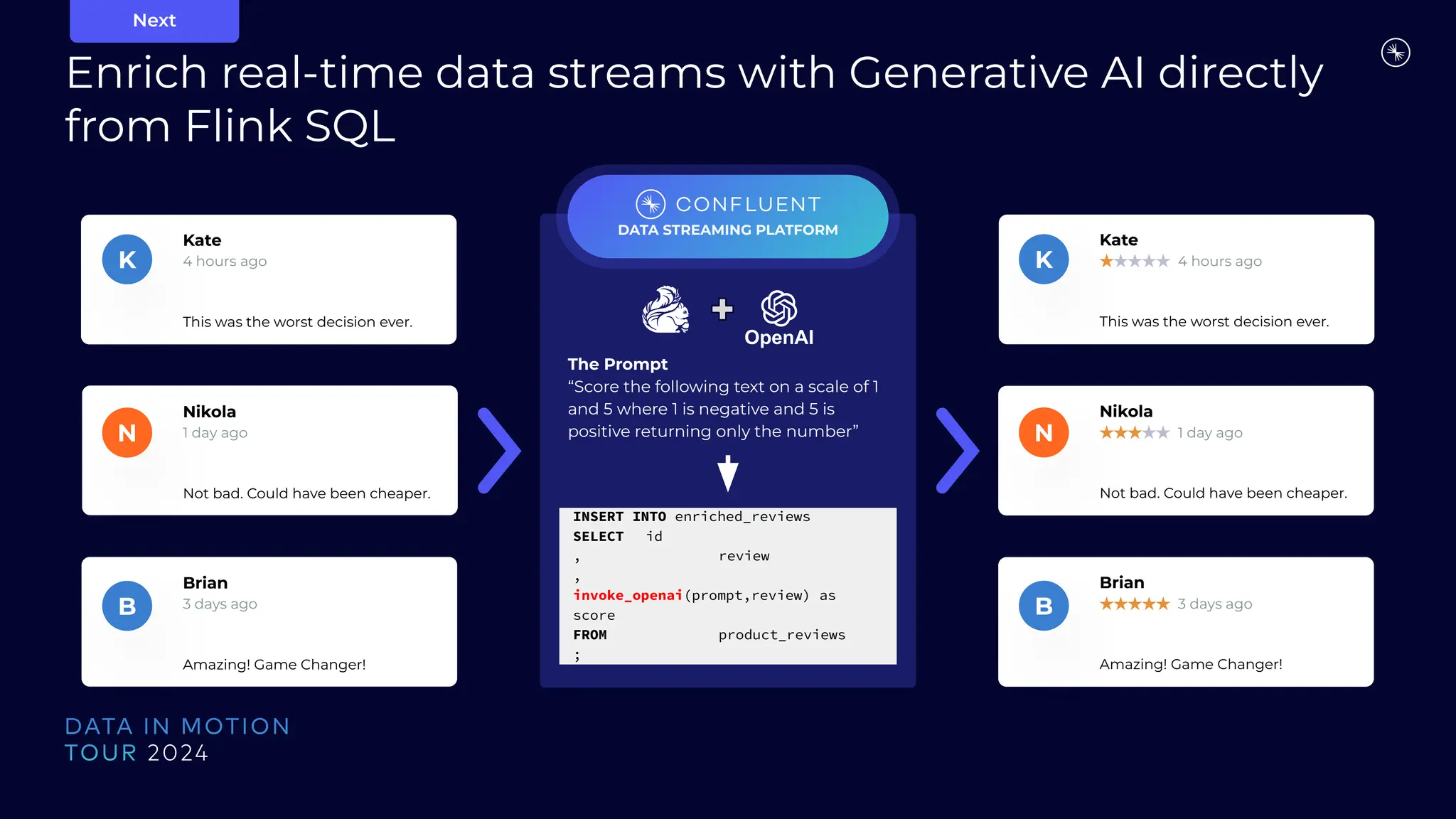

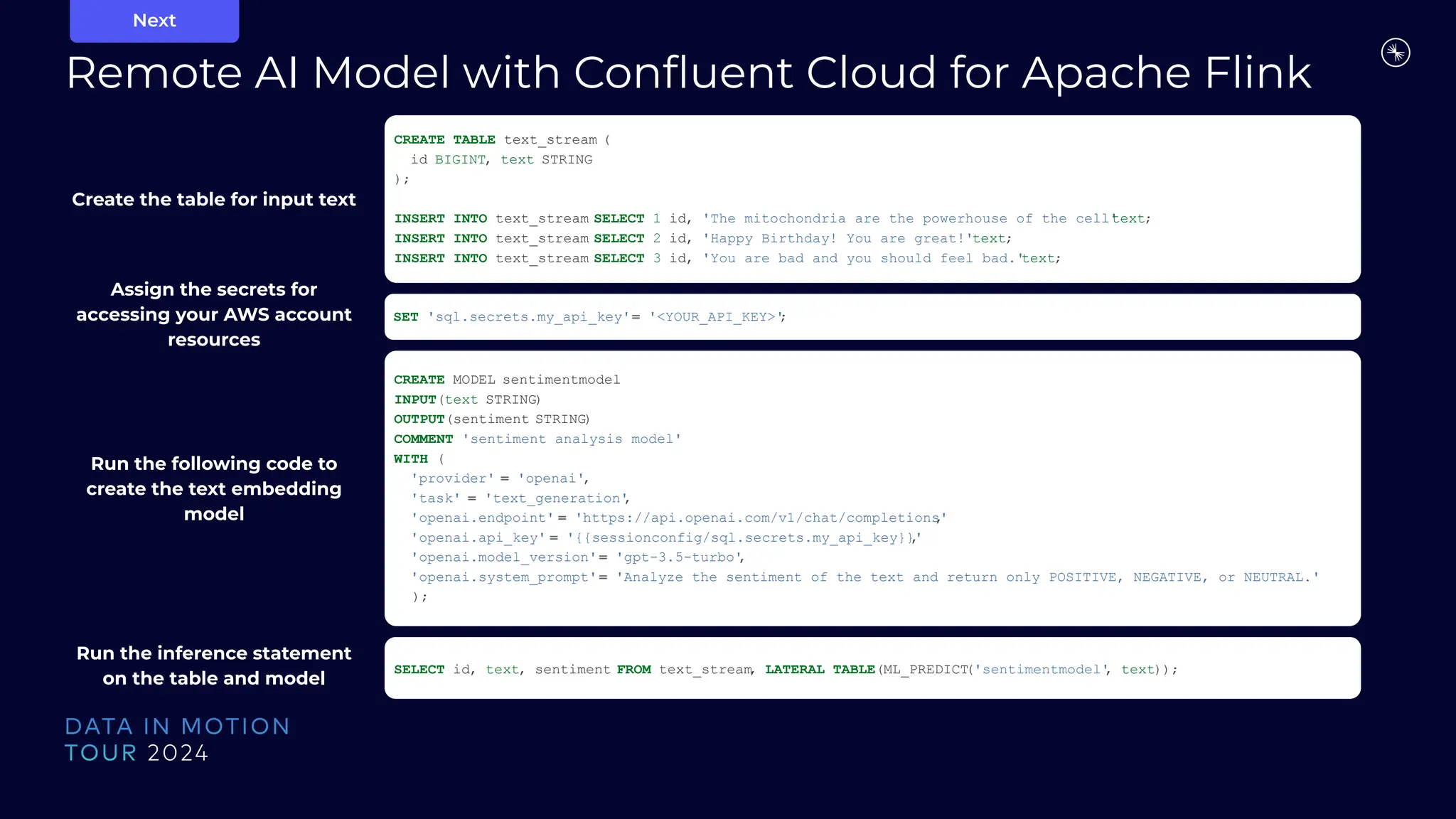

The document outlines why real-time data streaming matters for generative AI applications, emphasizing that operational data must be integrated across the enterprise to deliver reliable, scalable insights. It describes how large language models (LLMs) and vector stores can be treated as modular components to improve performance, and notes that both depend on contextual, trustworthy data to deliver value. It also details the stages of AI application development on Confluent's platform: data augmentation, inference, workflows, and post-processing.
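
The four stages named above can be illustrated with a minimal, in-memory Python sketch. Everything in it is an assumption made for illustration: `Event`, `ToyVectorStore`, `embed`, `augment`, `infer`, `post_process`, `run_workflow`, and `fake_llm` are hypothetical names, the "embedding" is a toy word-overlap measure, and a plain Python list stands in for the event stream. It does not use Confluent's APIs or any real LLM or vector database.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """A single record from a hypothetical operational data stream."""
    key: str
    text: str


def embed(text):
    """Toy 'embedding': the set of lowercase words (a stand-in for a real model)."""
    return frozenset(text.lower().split())


class ToyVectorStore:
    """In-memory stand-in for a vector store, ranked by word overlap."""

    def __init__(self):
        self._docs = {}

    def upsert(self, event):
        # Re-indexing by key keeps only the latest version of each record.
        self._docs[event.key] = (event.text, embed(event.text))

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self._docs.values(),
                        key=lambda doc: len(q & doc[1]),
                        reverse=True)
        return [text for text, _ in ranked[:k]]


def augment(stream, store):
    """Data augmentation: index each incoming event as it arrives."""
    for event in stream:
        store.upsert(event)


def infer(question, store, llm):
    """Inference: retrieve context, build a prompt, and call the model."""
    context = store.search(question, k=1)
    prompt = f"Context: {' '.join(context)}\nQuestion: {question}"
    return llm(prompt)


def post_process(answer, banned=("guarantee",)):
    """Post-processing: a trivial check that rejects answers with banned words."""
    return not any(word in answer.lower() for word in banned)


def run_workflow(question, store, llm):
    """Workflow: chain inference and post-processing into one call."""
    answer = infer(question, store, llm)
    return {"answer": answer, "approved": post_process(answer)}


def fake_llm(prompt):
    """Placeholder model that echoes the context line of the prompt."""
    return "Answer based on -> " + prompt.splitlines()[0]


if __name__ == "__main__":
    store = ToyVectorStore()
    augment([Event("order-42", "order 42 ships on Friday"),
             Event("order-7", "order 7 was cancelled")], store)
    print(run_workflow("When does order 42 ship?", store, fake_llm))
```

In a streaming setup, `augment` would run continuously against a live topic rather than a list, so the store always reflects the latest operational data when `infer` is called.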