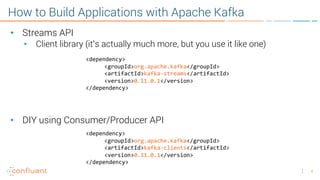

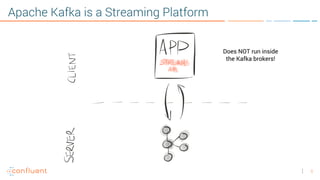

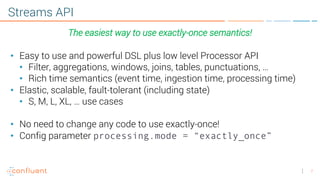

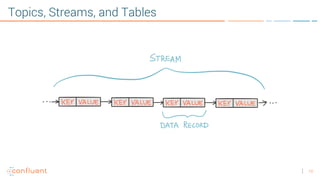

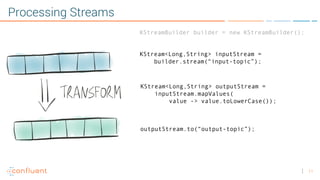

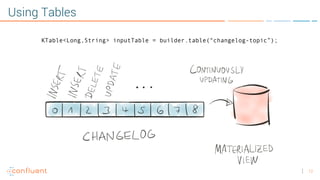

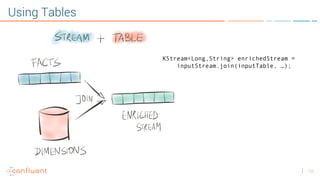

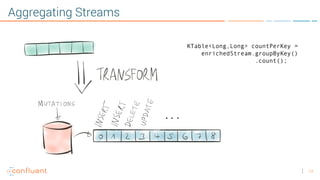

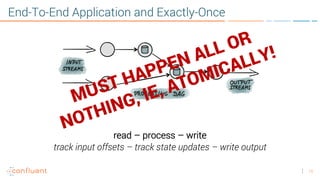

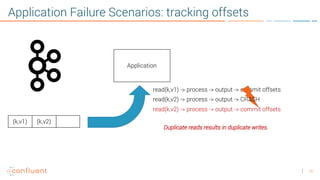

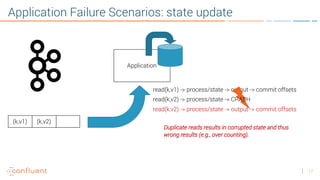

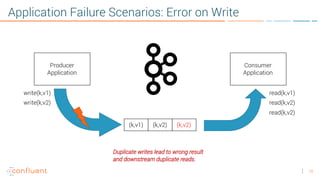

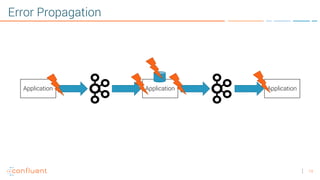

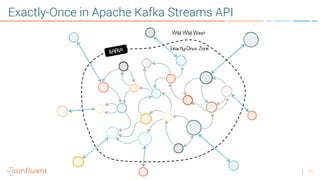

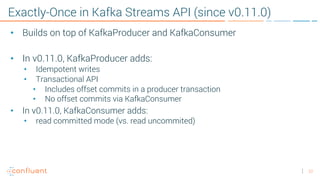

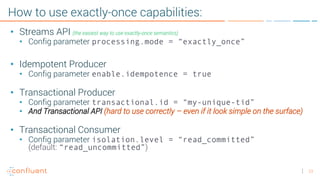

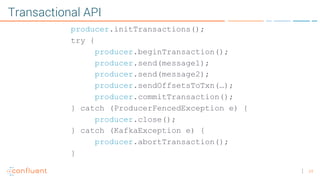

The document gives an overview of building stream processing applications with Apache Kafka, focusing on how the Streams API achieves exactly-once processing guarantees. It covers the main components (topics, streams, and tables) and several error handling scenarios, and shows why offset tracking and state management are needed for strong consistency. It also discusses the configuration parameters and transactional capabilities used to implement these guarantees.
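As a rough illustration of the configuration side, the sketch below assembles the properties a Kafka Streams application would typically need to request exactly-once processing. It is a minimal sketch, not taken from the slides: the class name `ExactlyOnceConfigSketch`, the application id, and the broker address are hypothetical placeholders. The keys `application.id`, `bootstrap.servers`, and `processing.guarantee` are standard Kafka Streams settings; the value `exactly_once_v2` assumes a recent Kafka release, and older releases use `exactly_once` instead. Plain string keys are used so the example compiles without the Kafka client library on the classpath.

```java
import java.util.Properties;

public class ExactlyOnceConfigSketch {

    // Builds the properties that would be passed to a Kafka Streams application.
    // applicationId and bootstrapServers are supplied by the caller (placeholders here).
    static Properties build(String applicationId, String bootstrapServers) {
        Properties props = new Properties();
        // Identifies the application; Streams derives consumer group and internal names from it.
        props.setProperty("application.id", applicationId);
        // Initial broker list used to discover the cluster.
        props.setProperty("bootstrap.servers", bootstrapServers);
        // Requests exactly-once processing (assumption: a release that supports
        // "exactly_once_v2"; older releases use "exactly_once"). The default is "at_least_once".
        props.setProperty("processing.guarantee", "exactly_once_v2");
        return props;
    }

    public static void main(String[] args) {
        // Hypothetical application id and broker address, for illustration only.
        Properties props = build("exactly-once-sketch", "localhost:9092");
        props.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```

In a real application these properties would be handed to the `KafkaStreams` constructor together with a topology. With the guarantee enabled, the library is expected to commit consumed offsets, state store updates, and produced records together in a transaction, which is the role of the transactional capabilities mentioned above.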