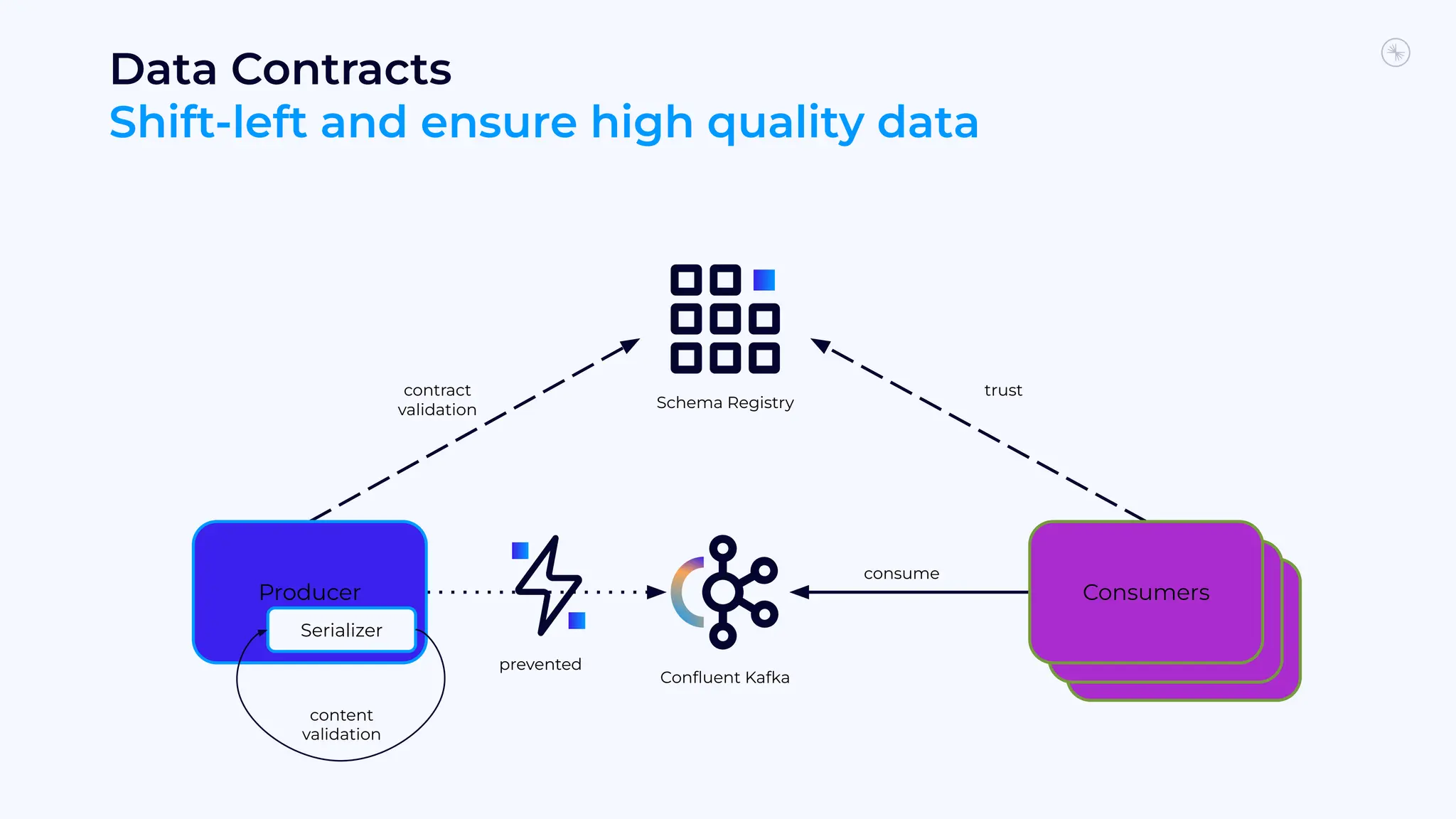

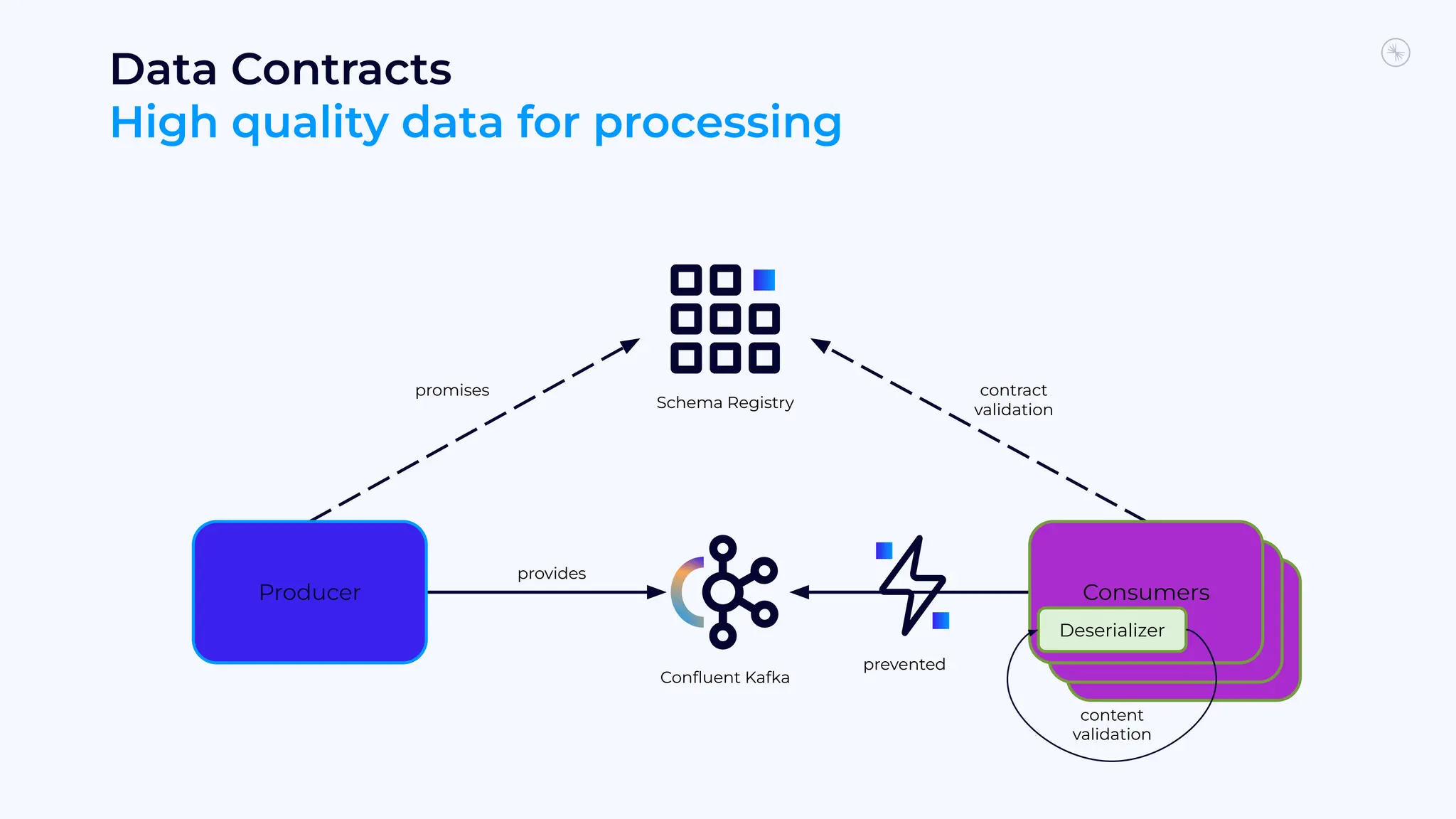

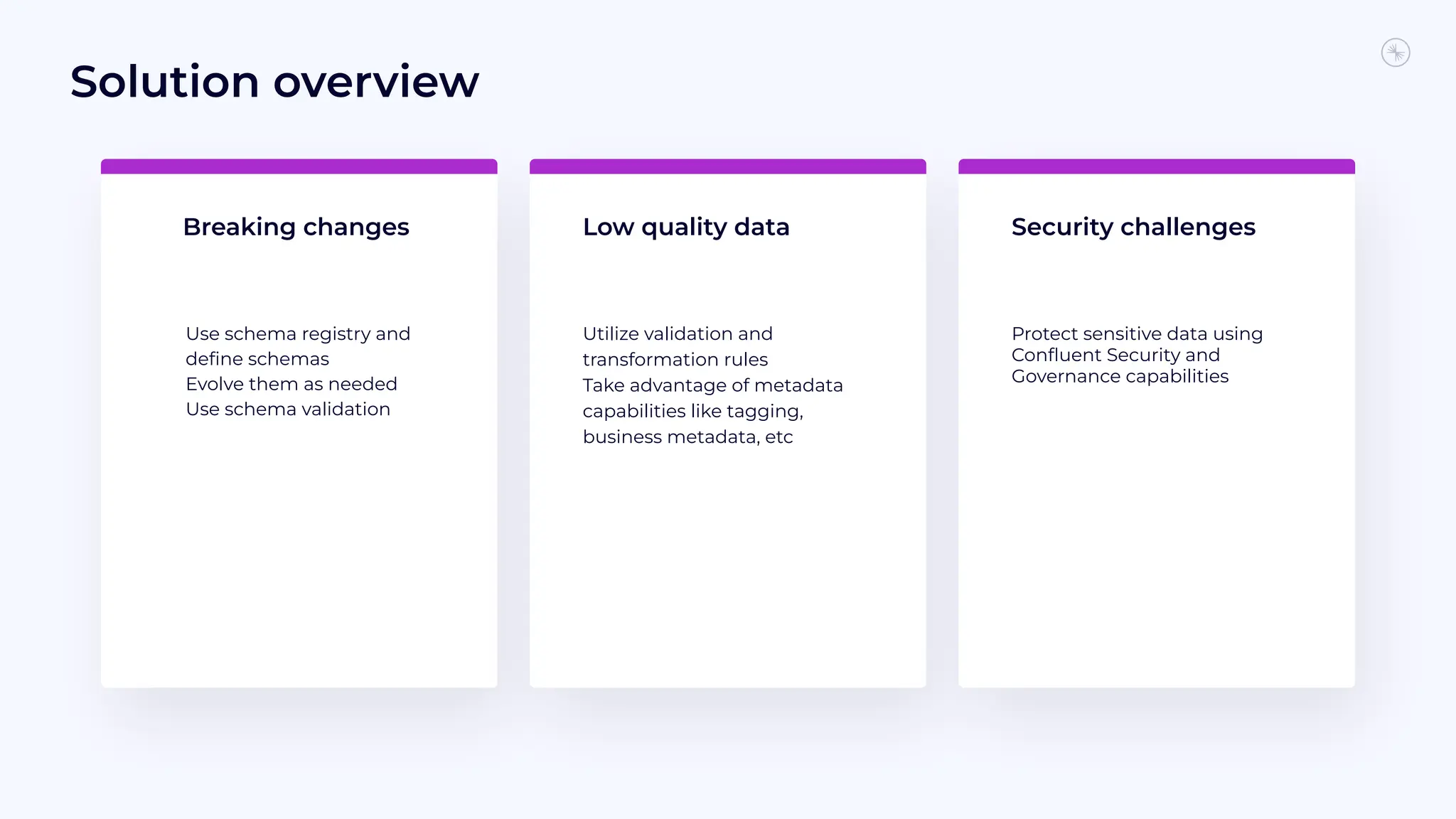

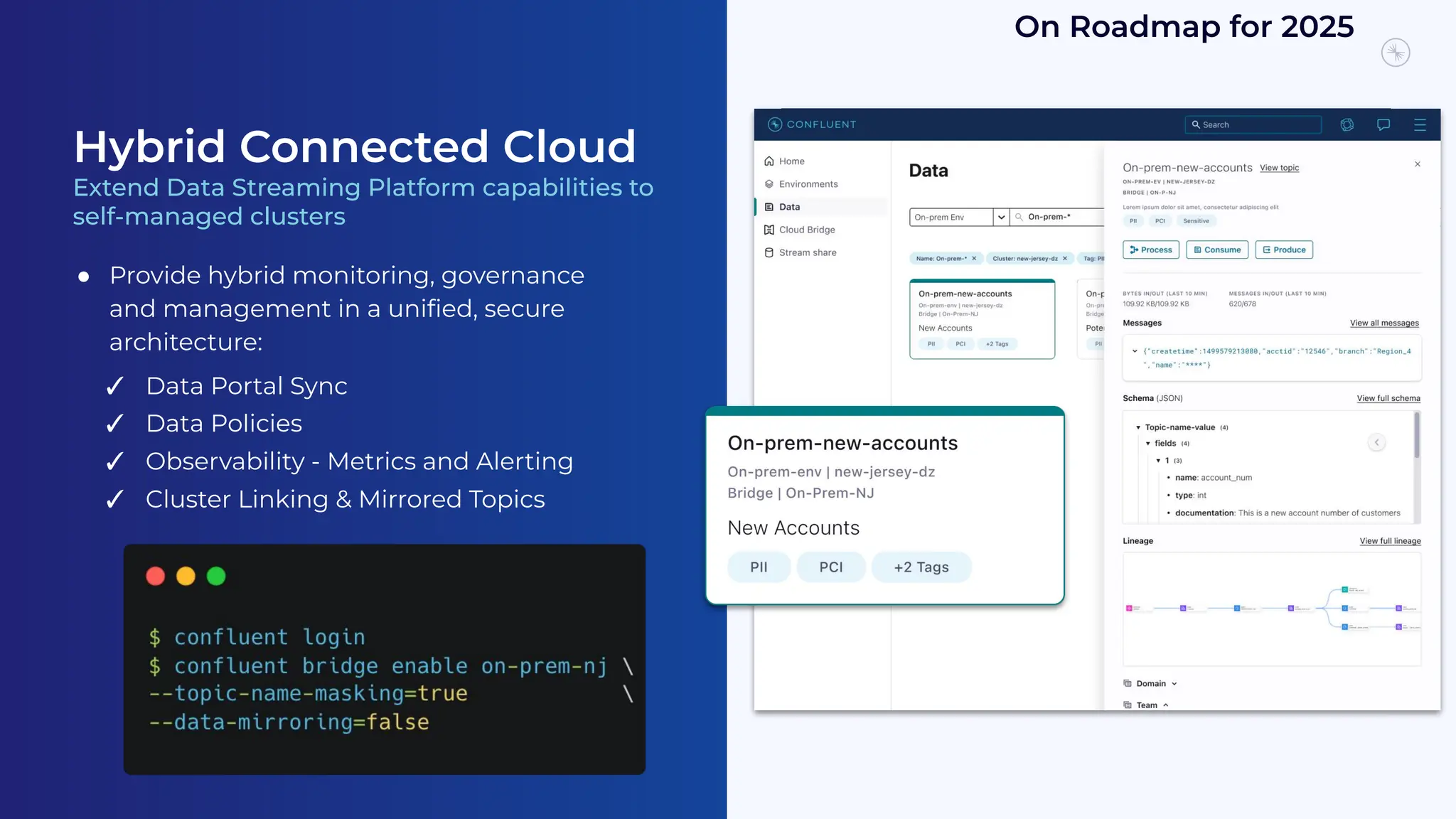

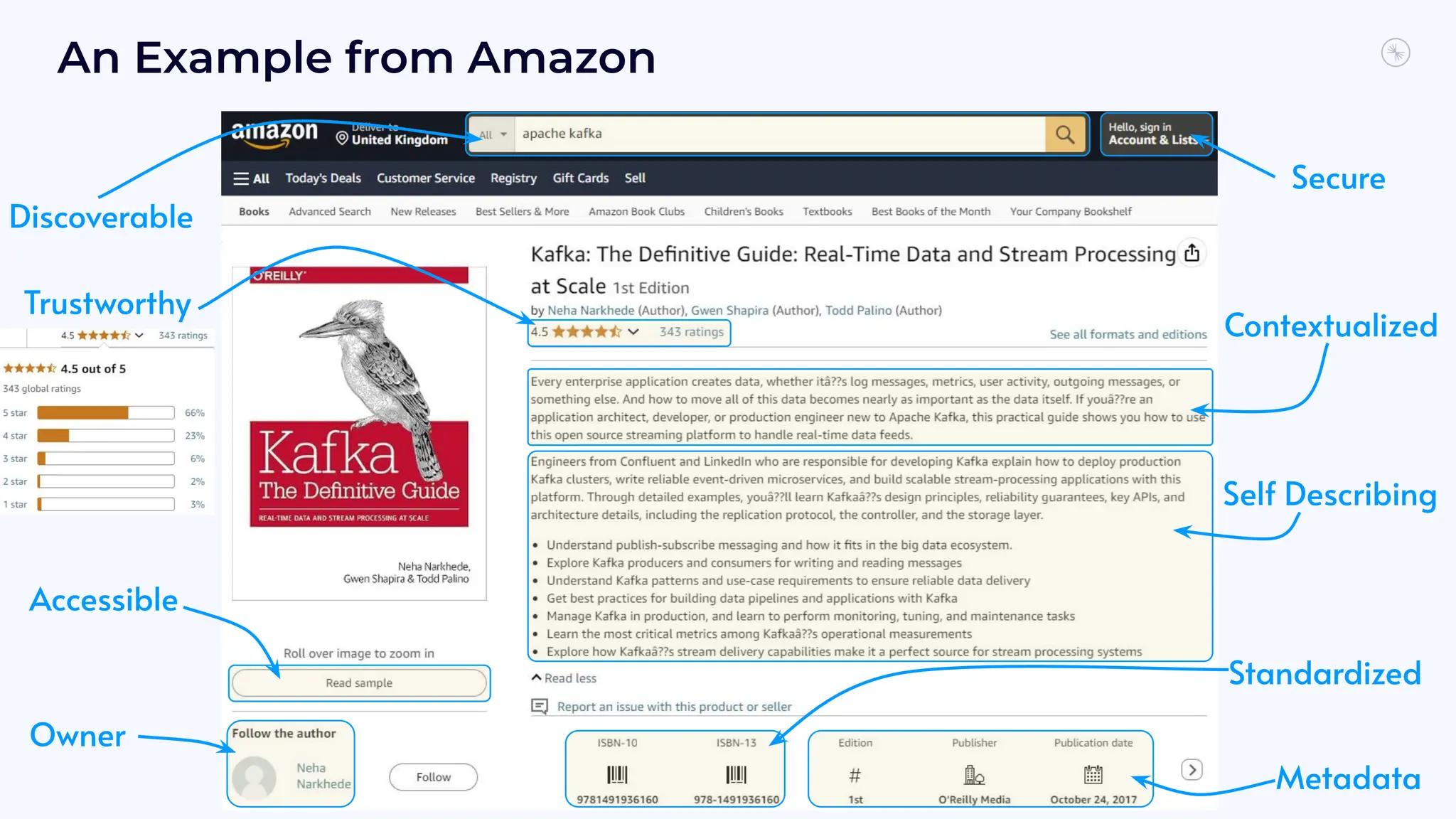

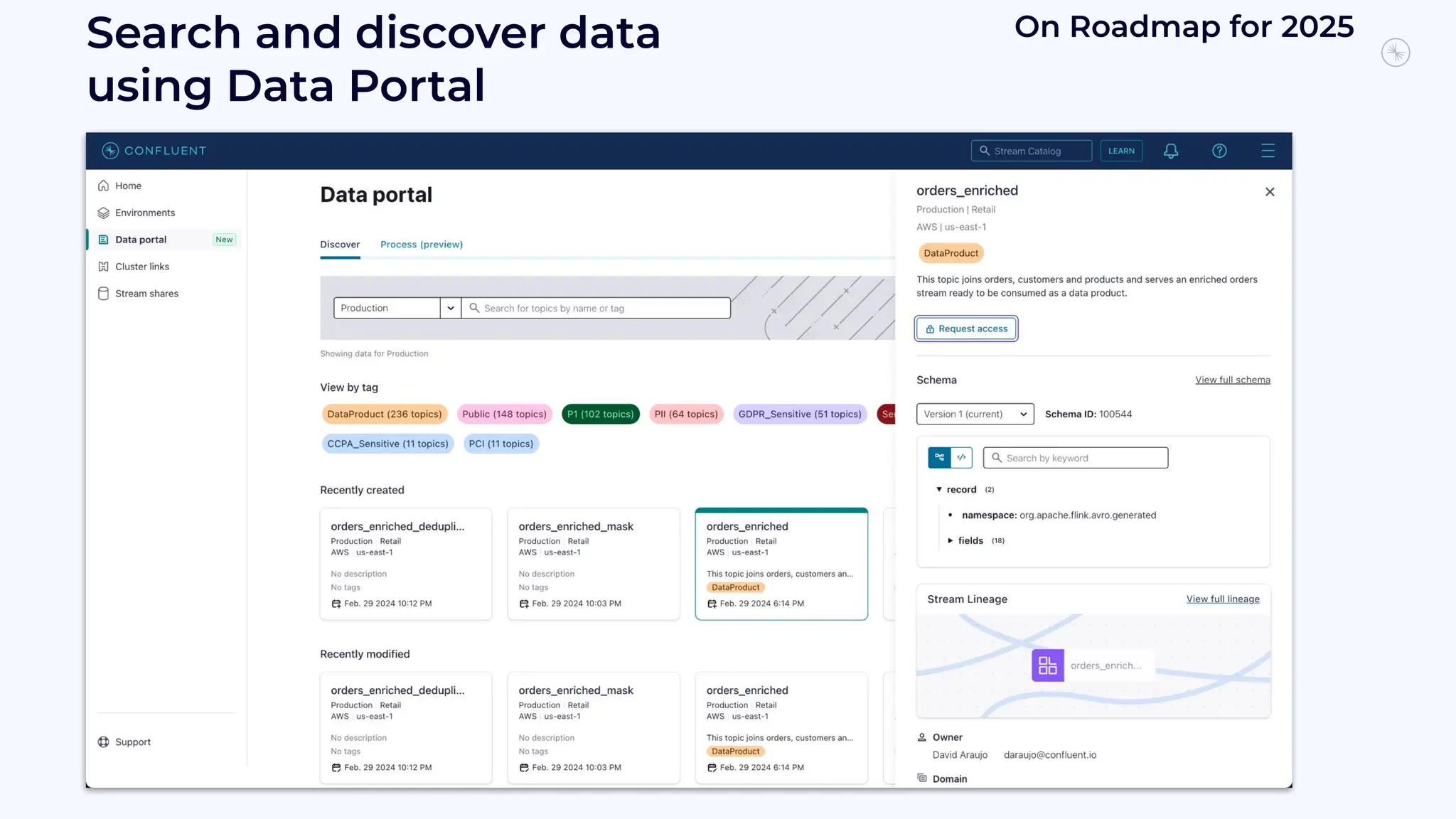

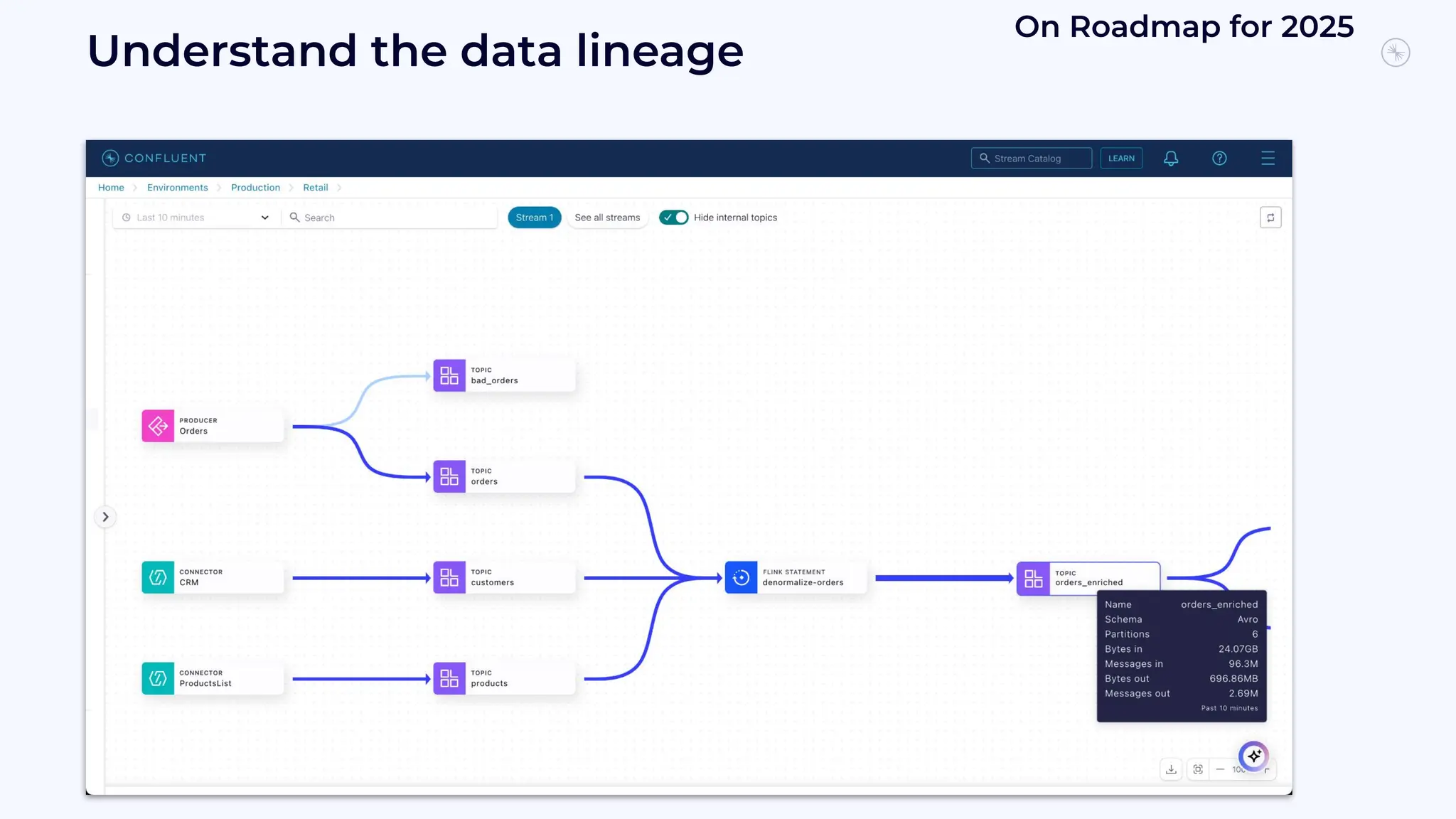

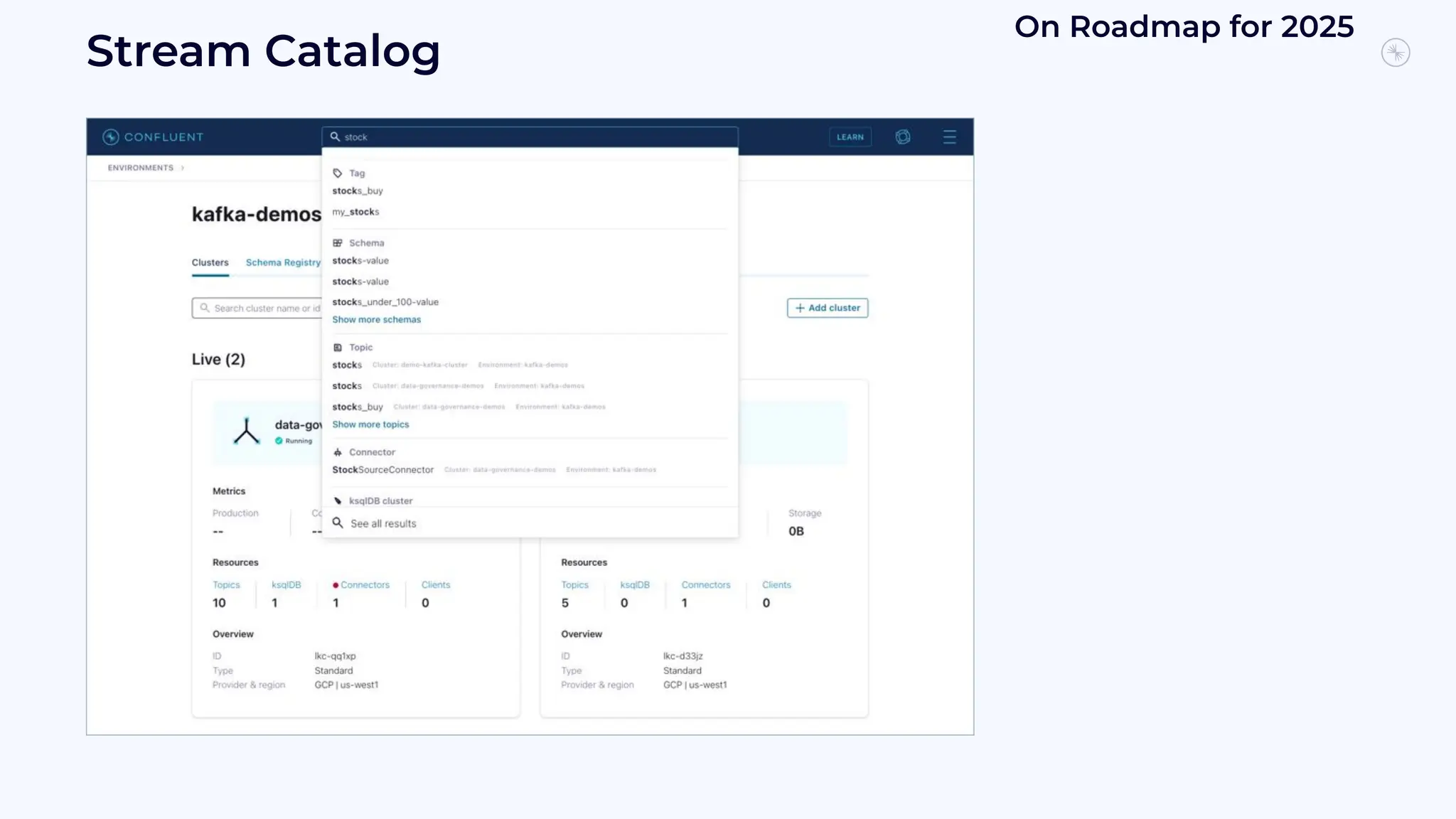

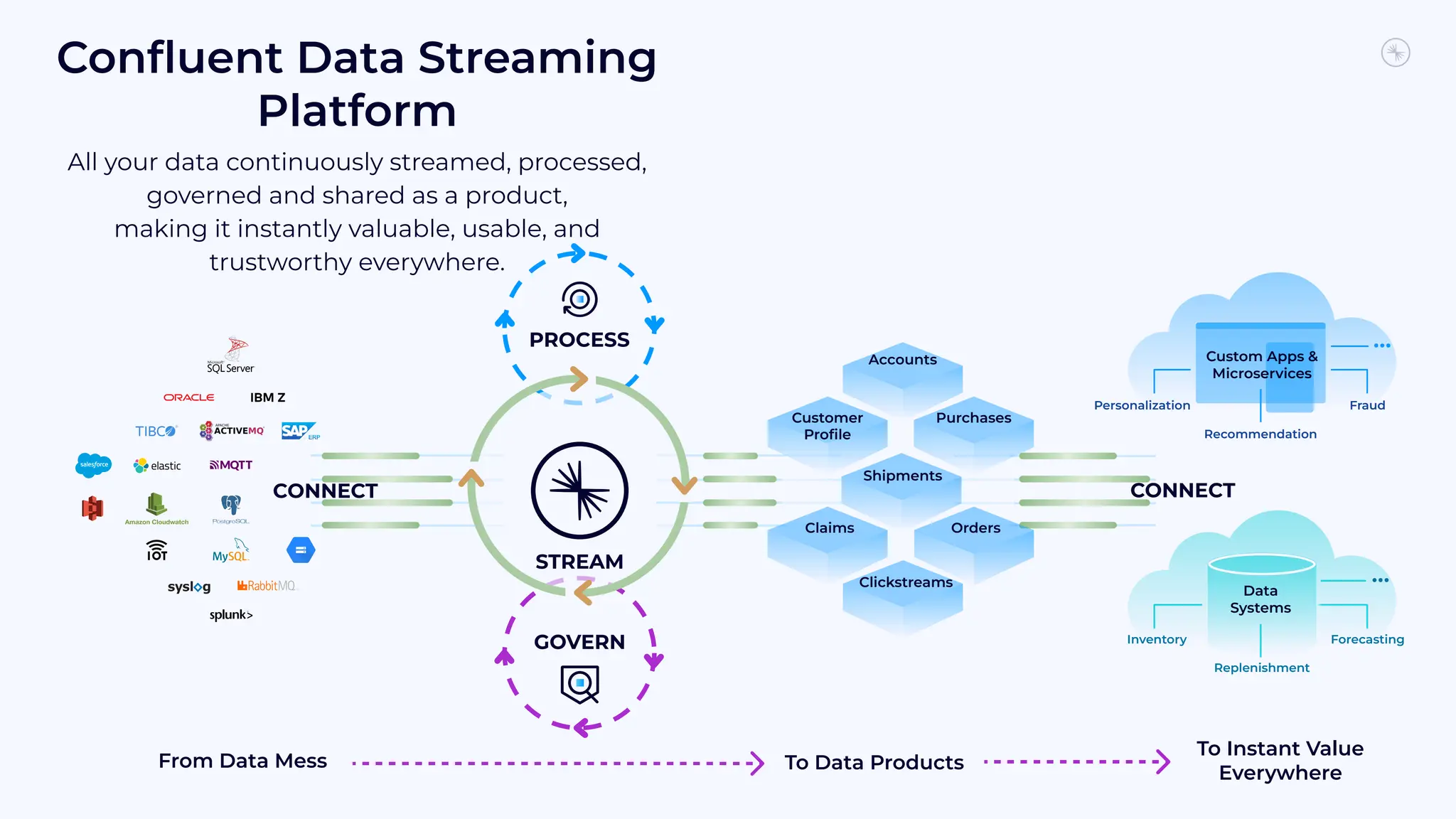

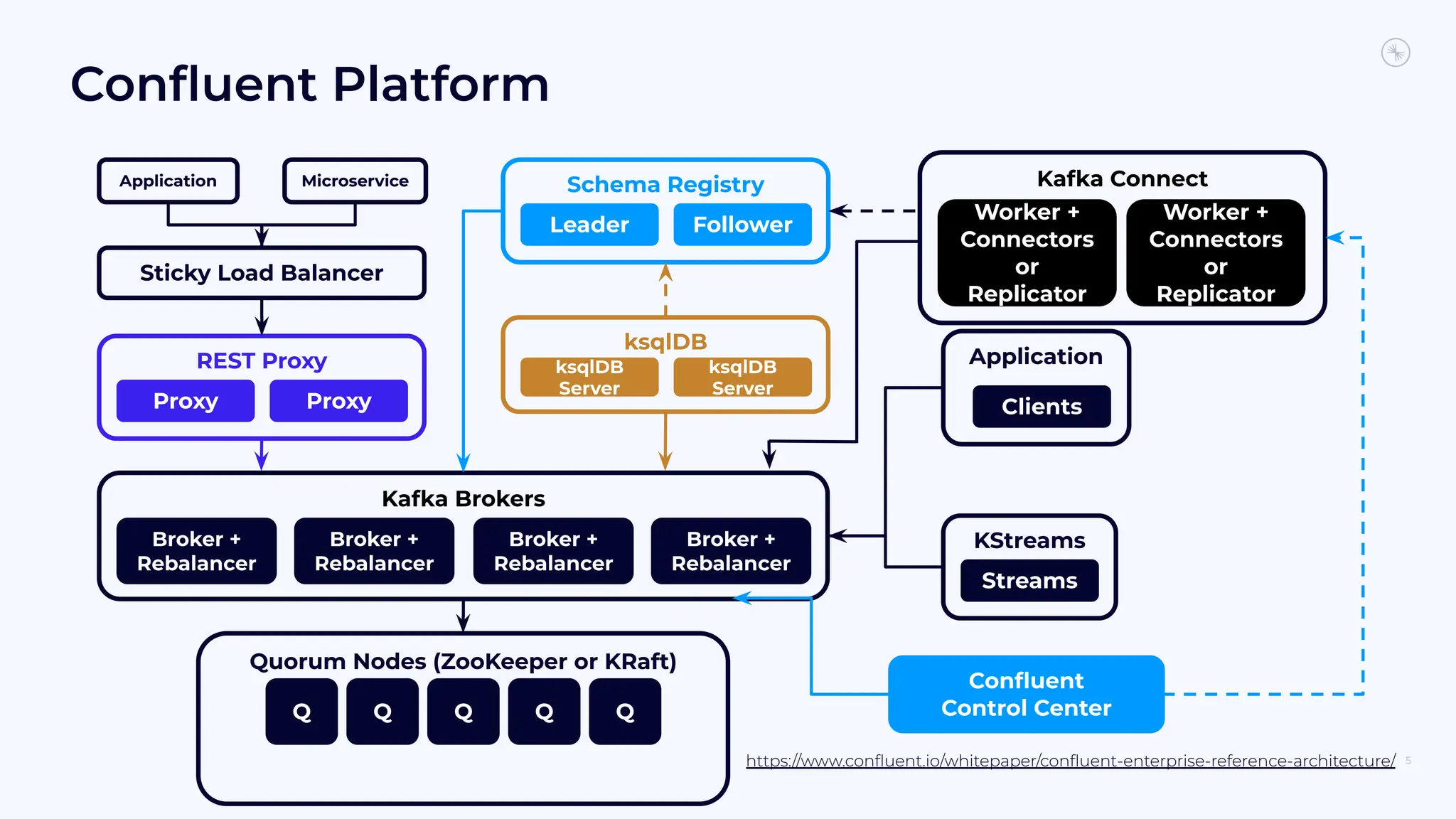

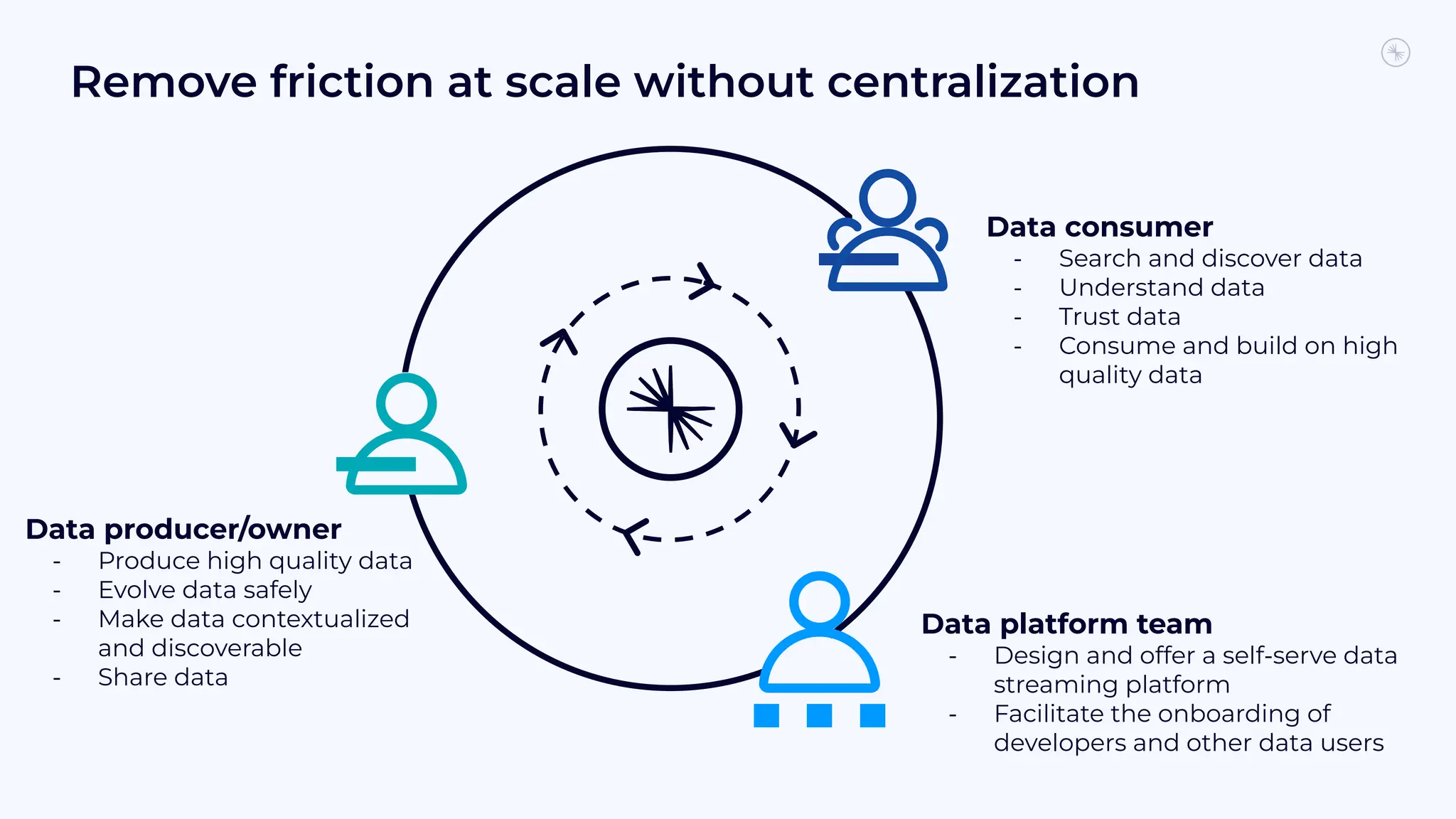

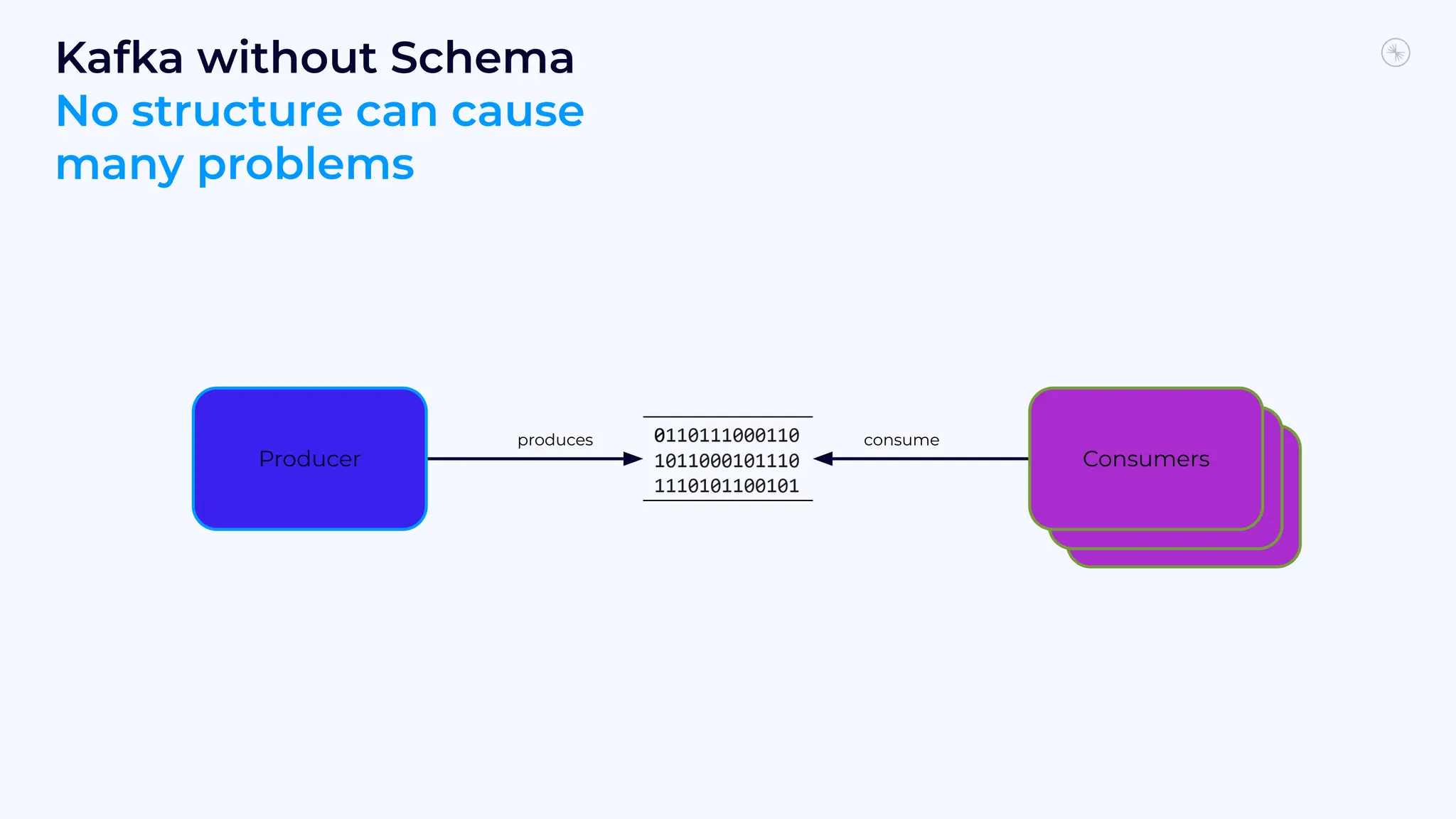

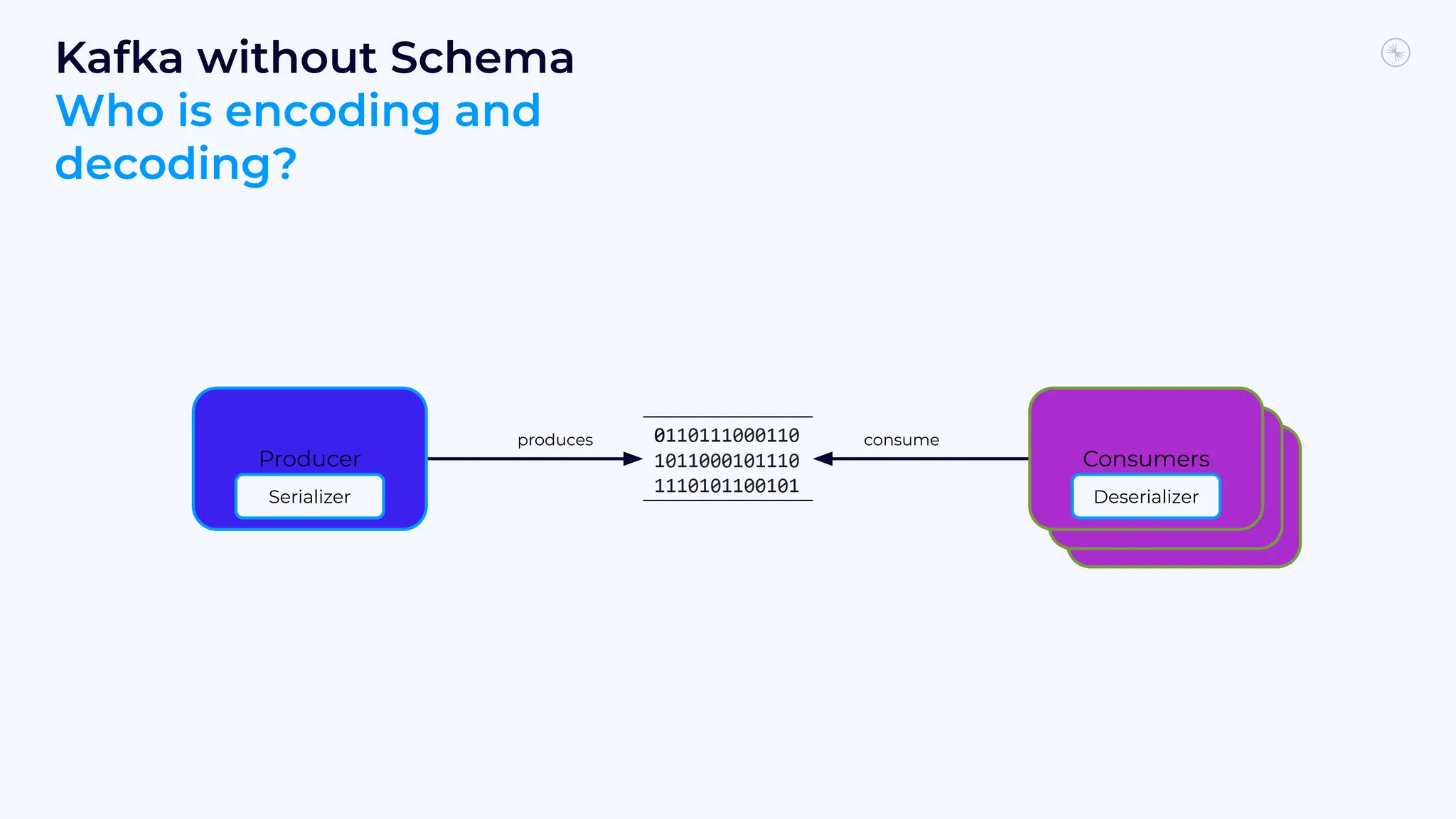

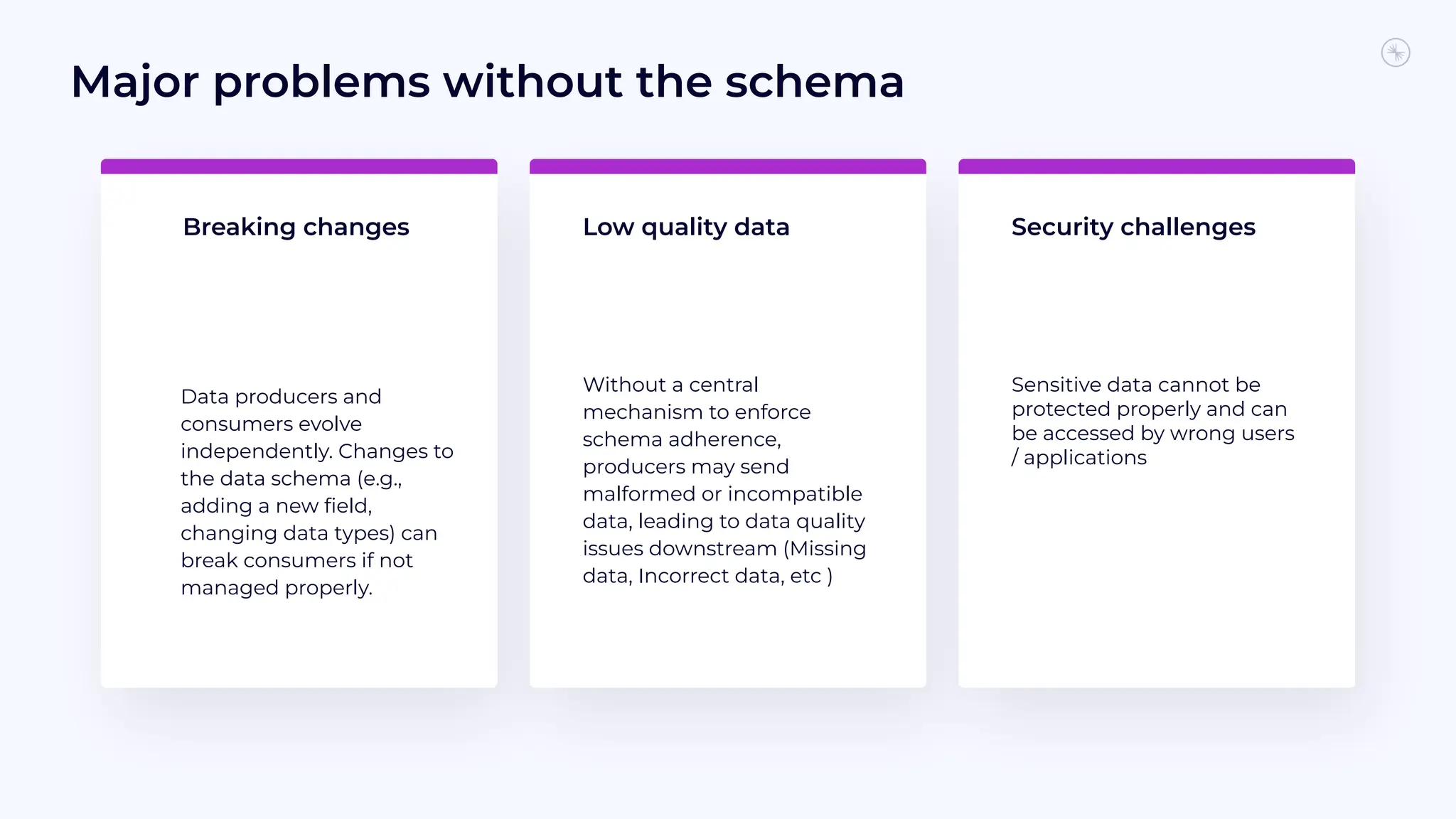

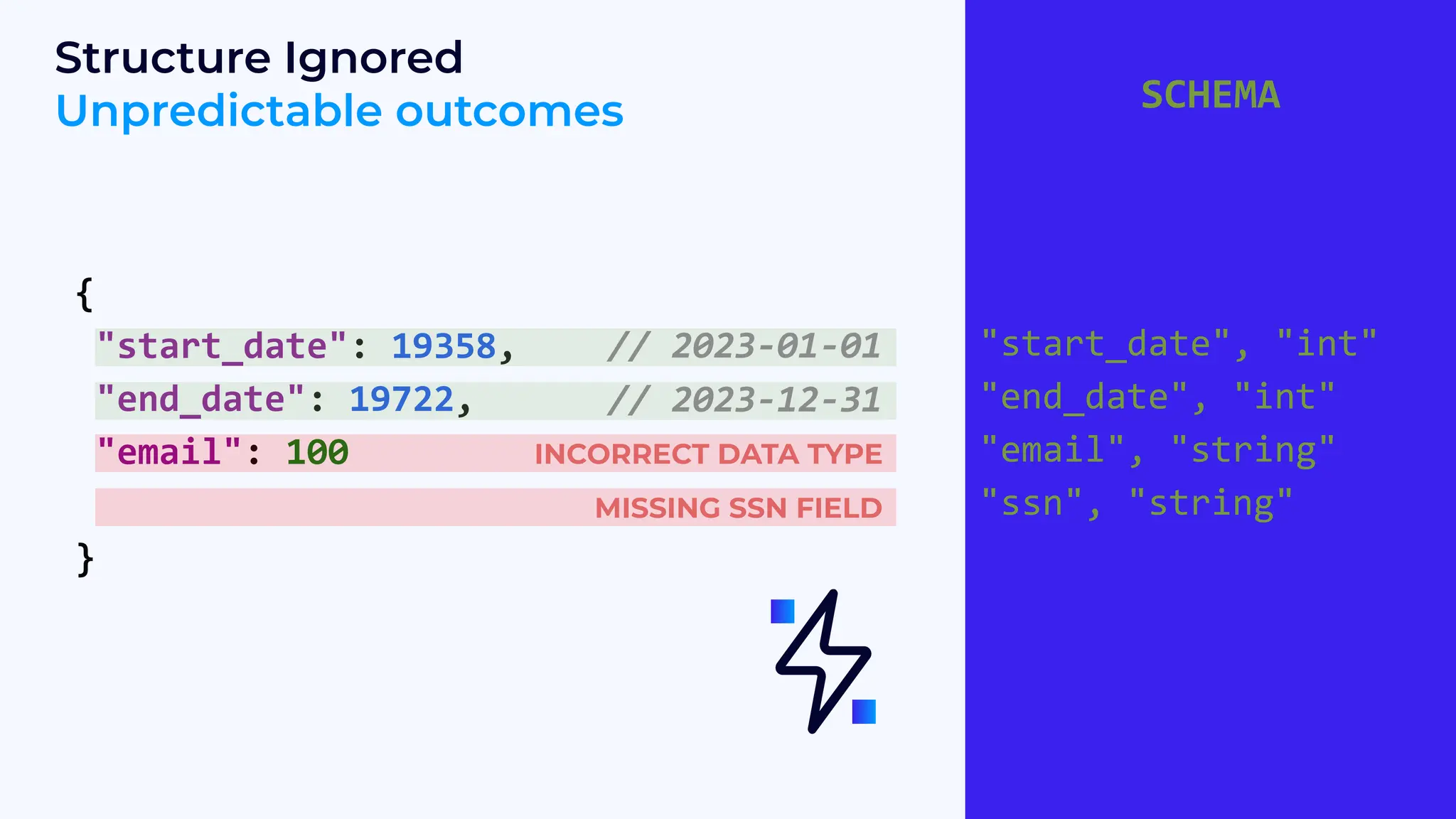

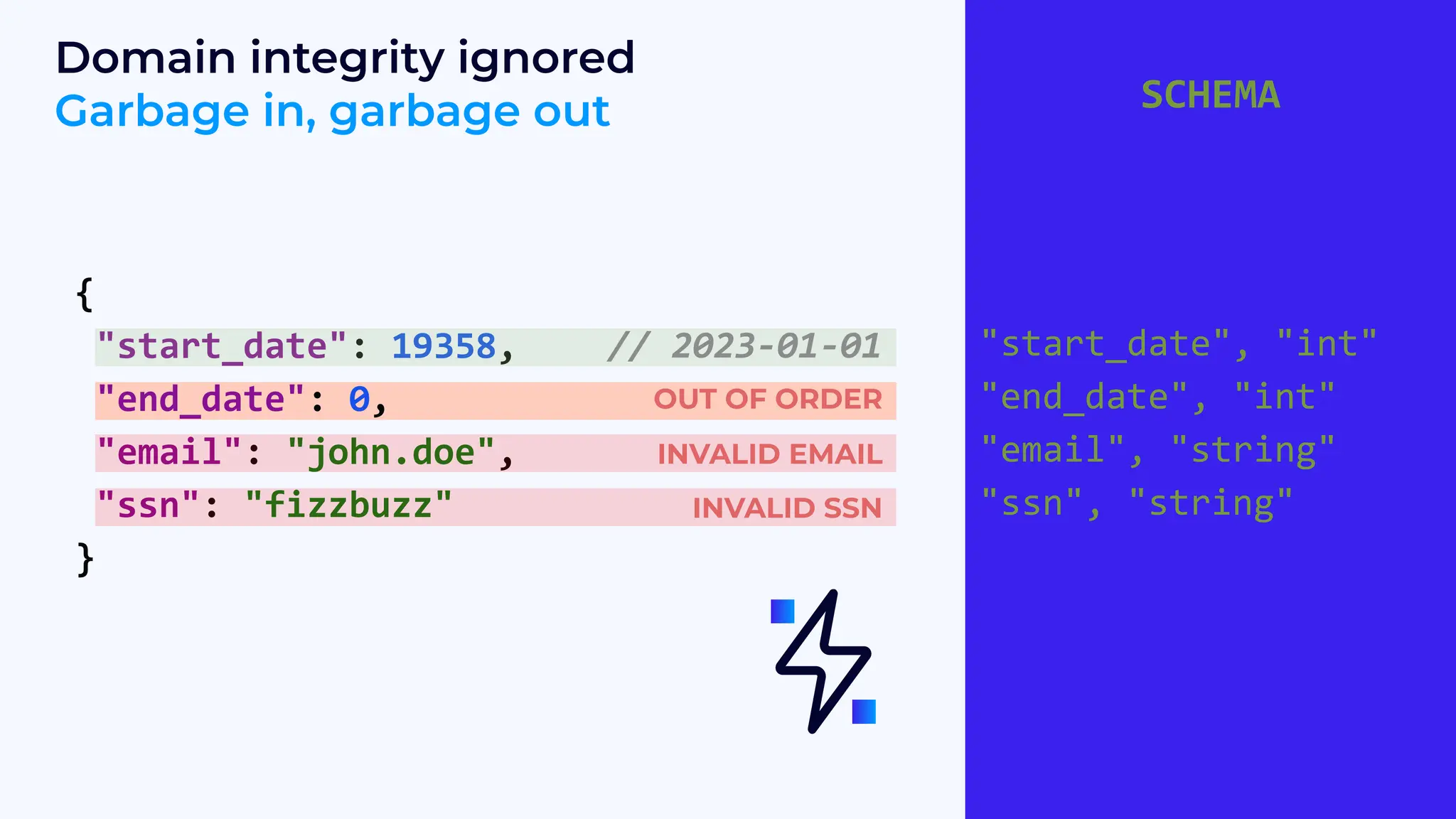

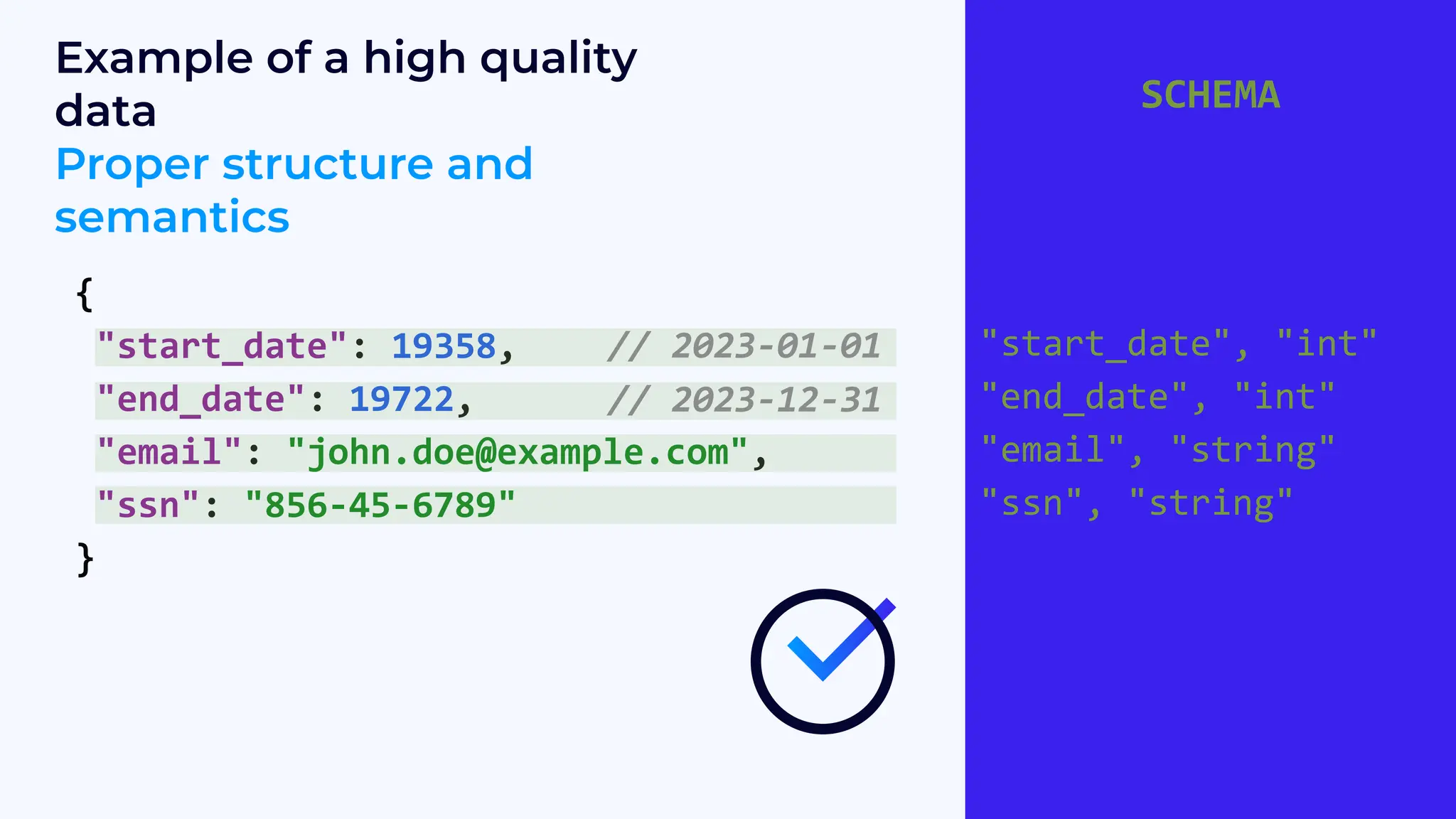

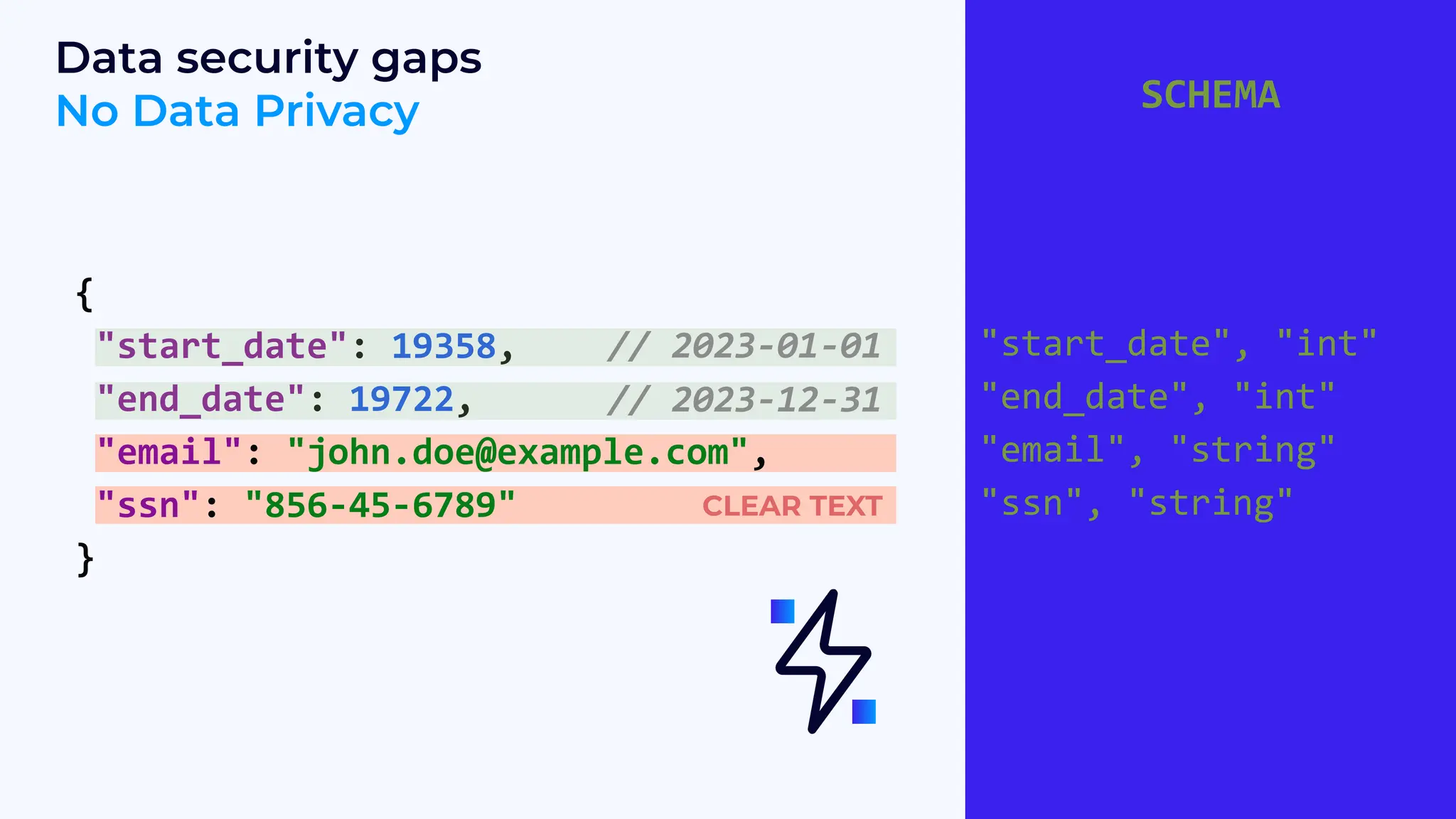

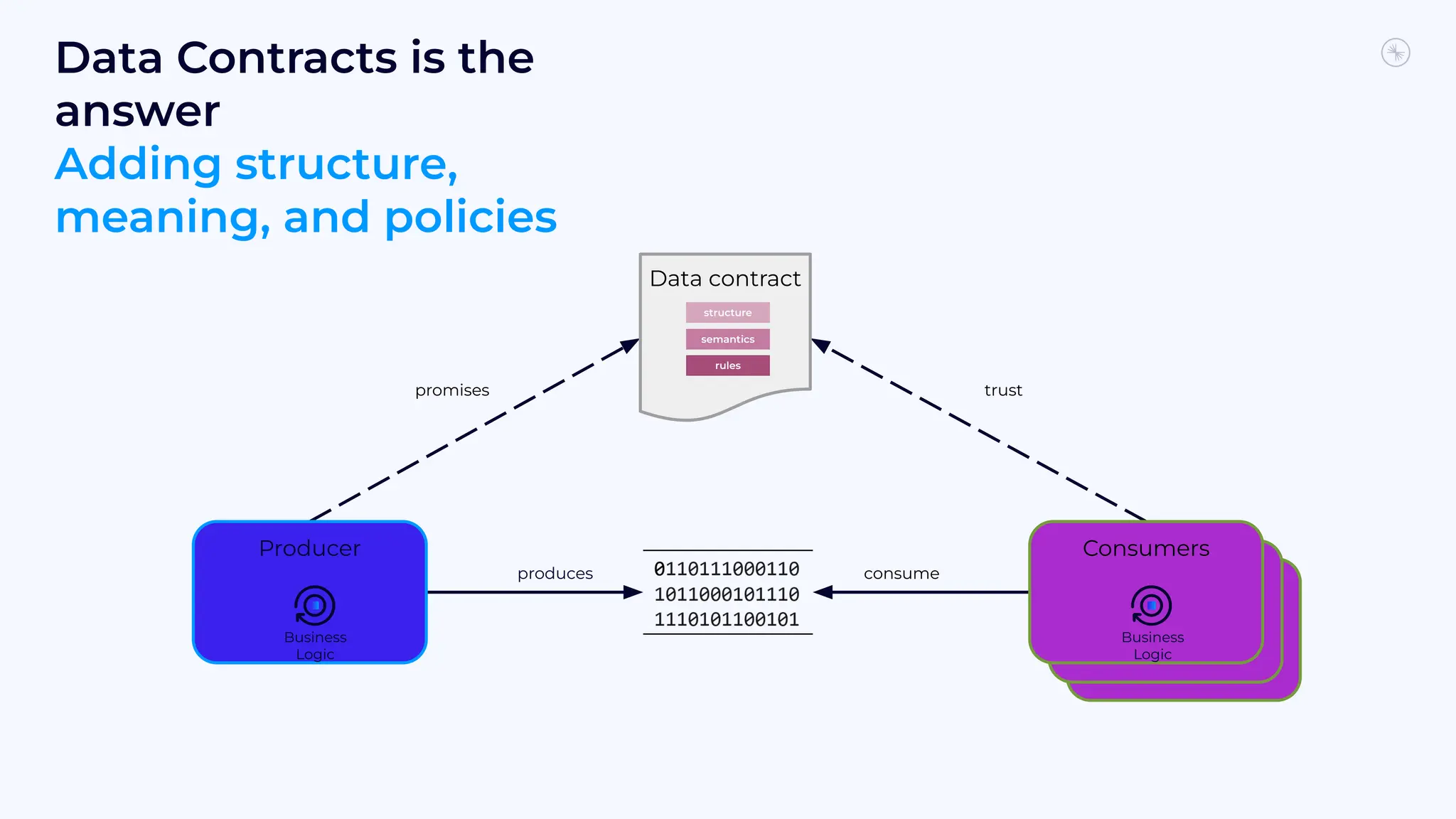

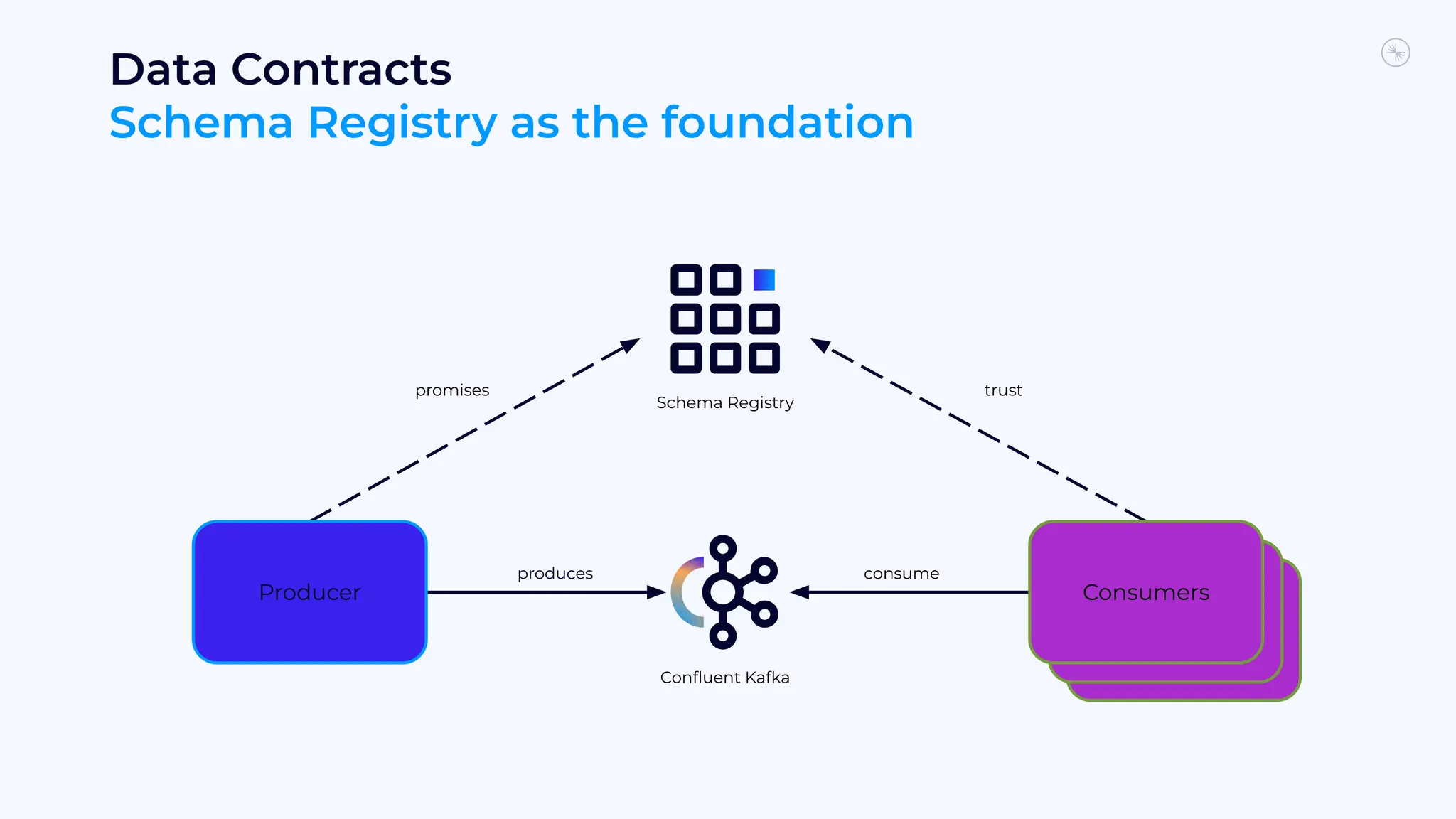

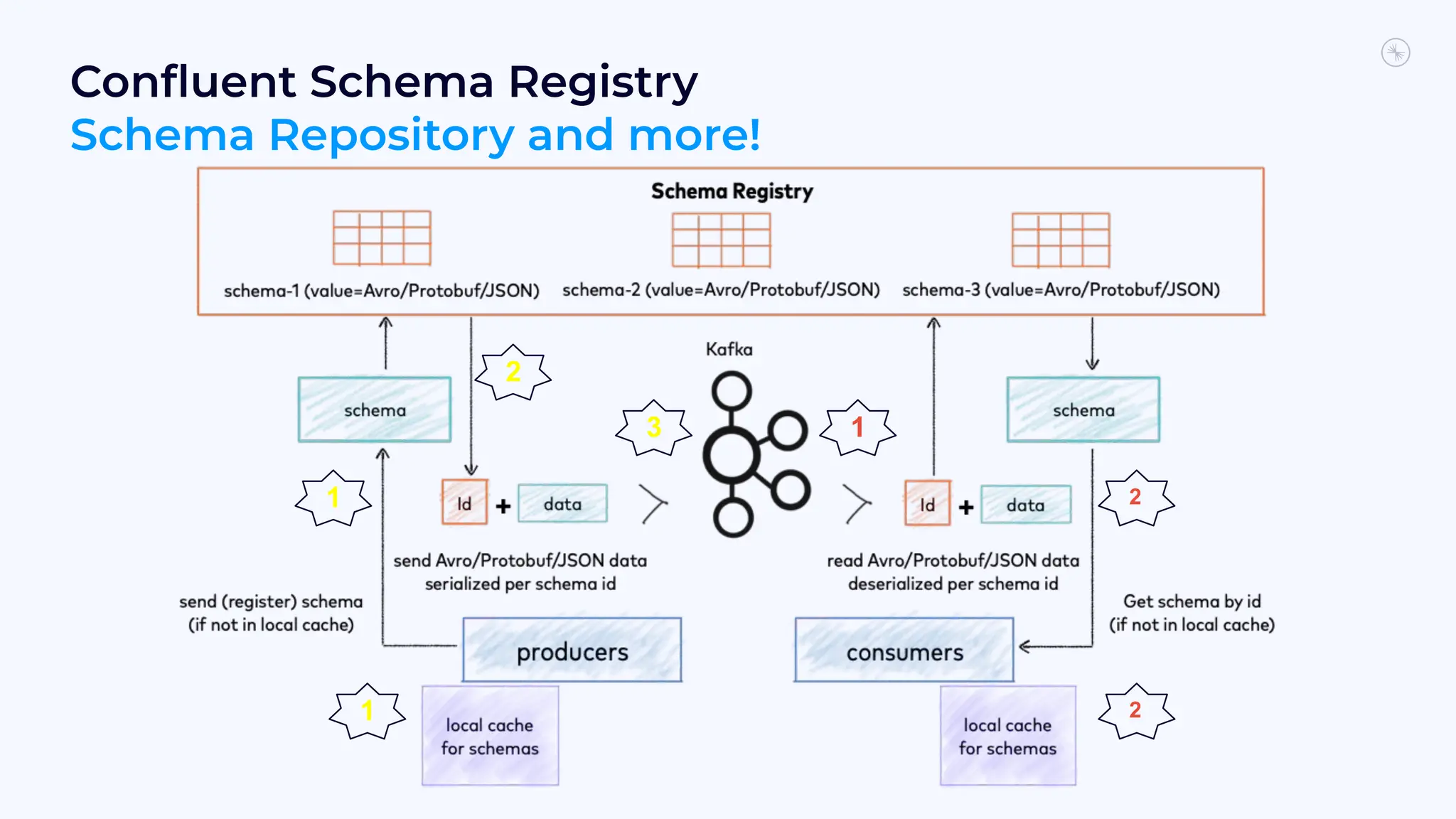

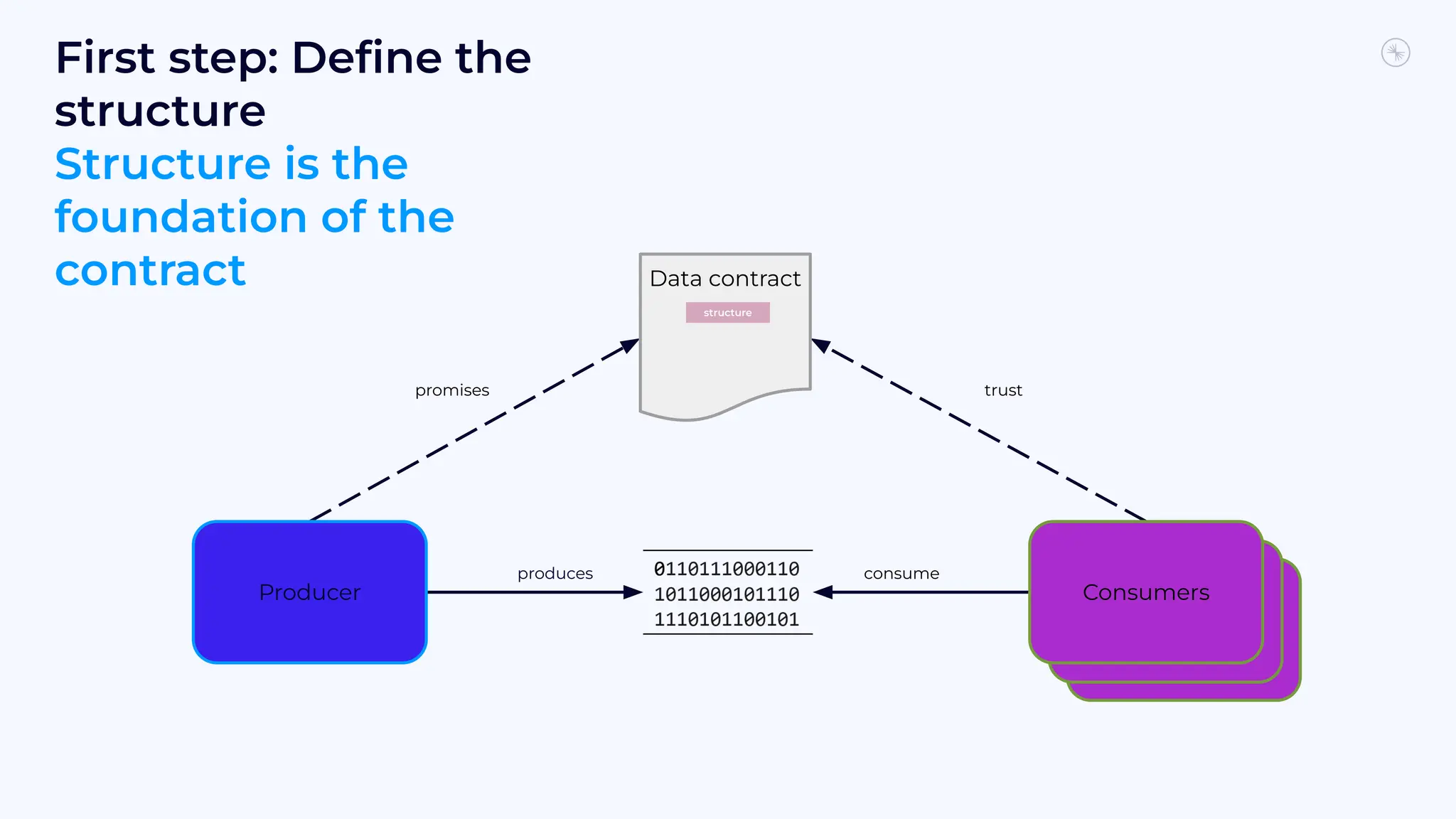

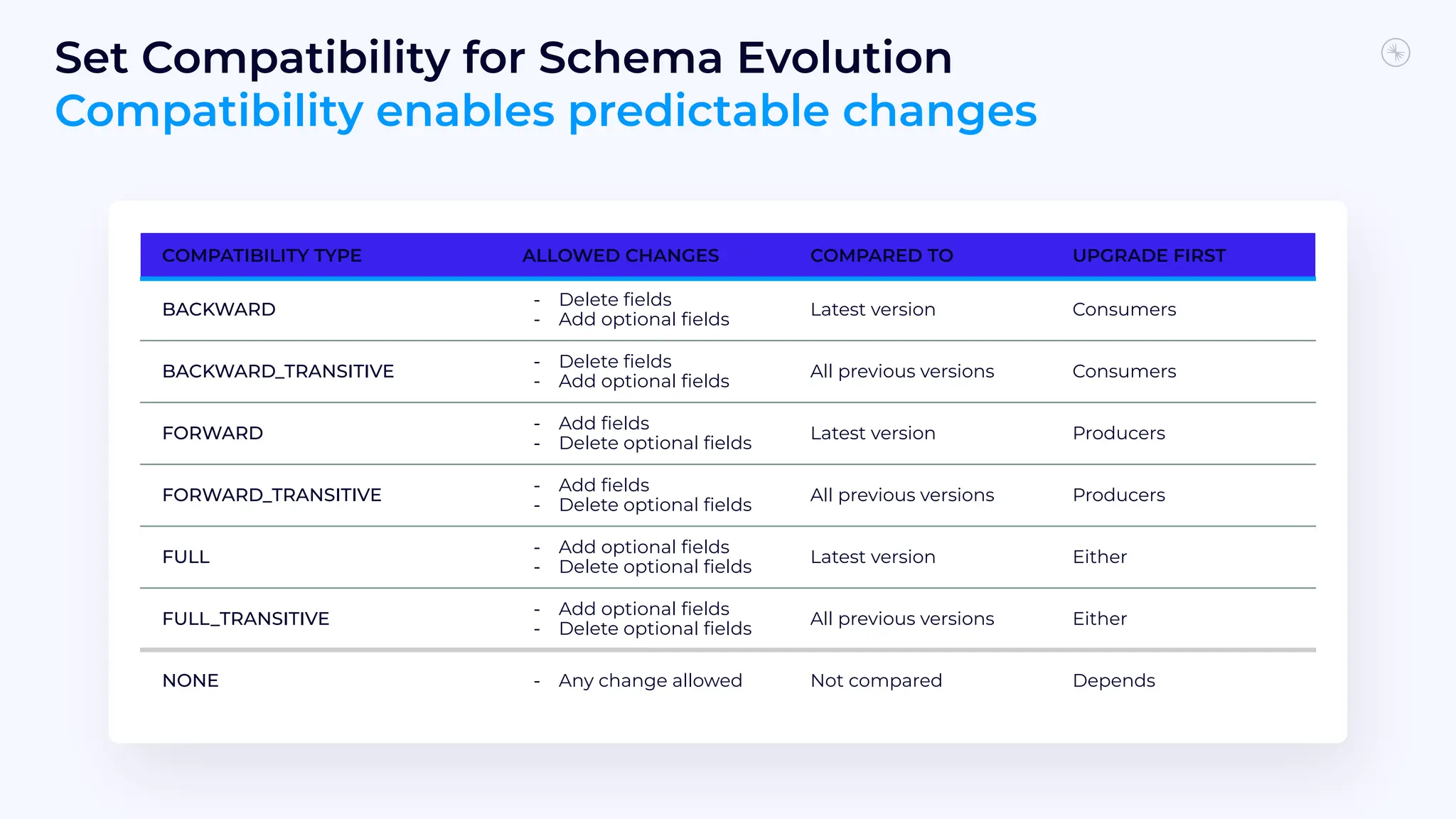

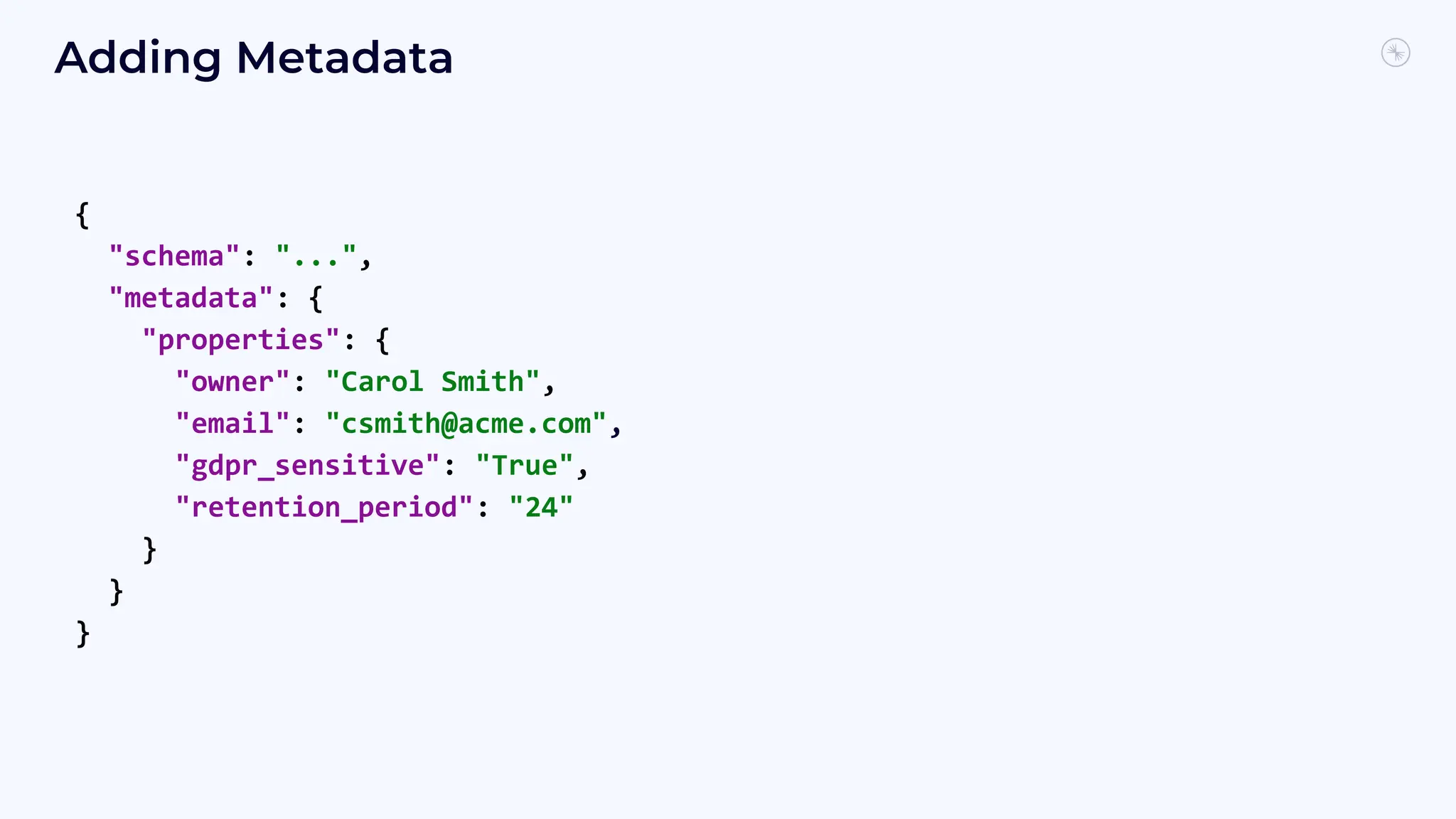

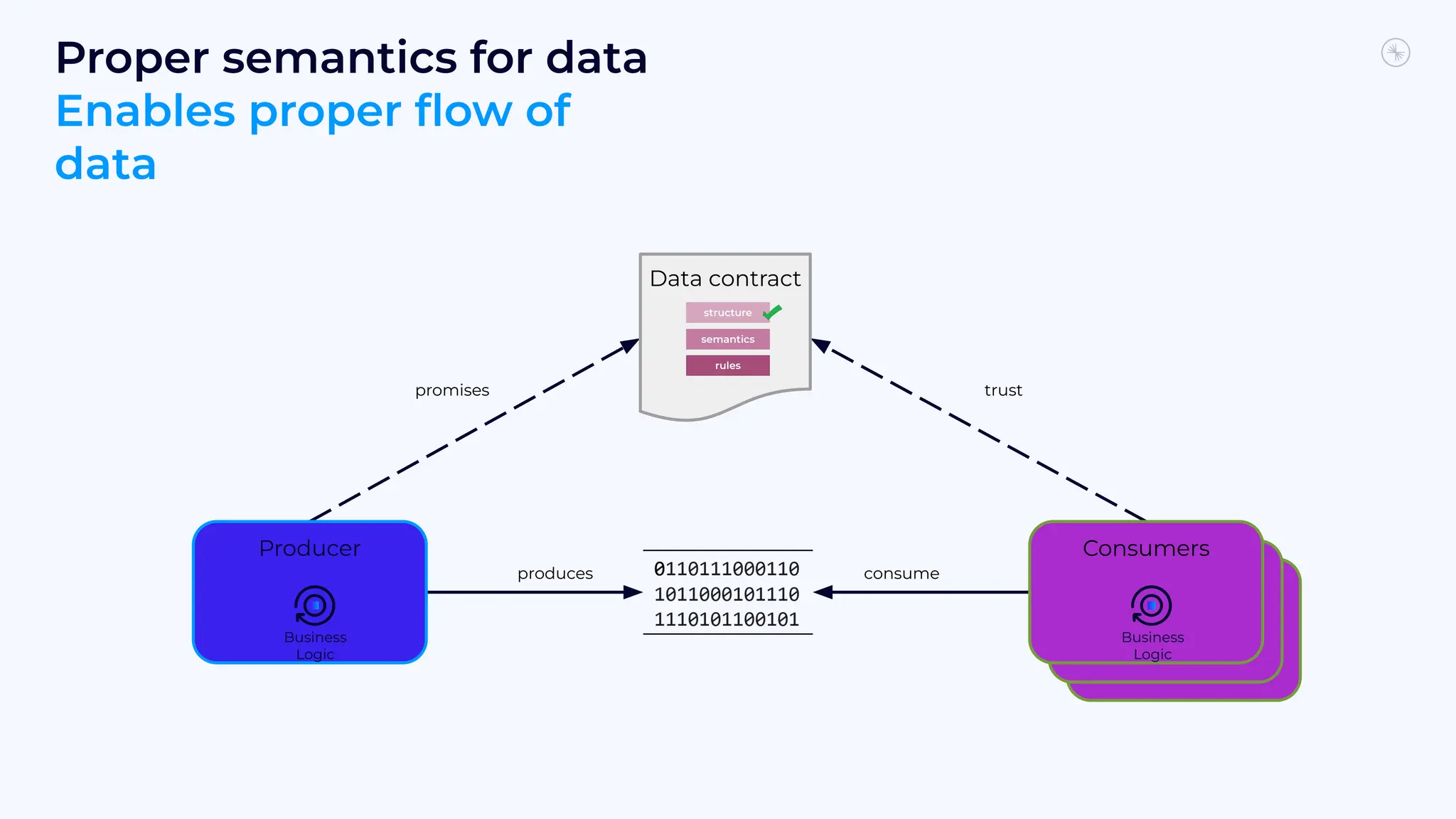

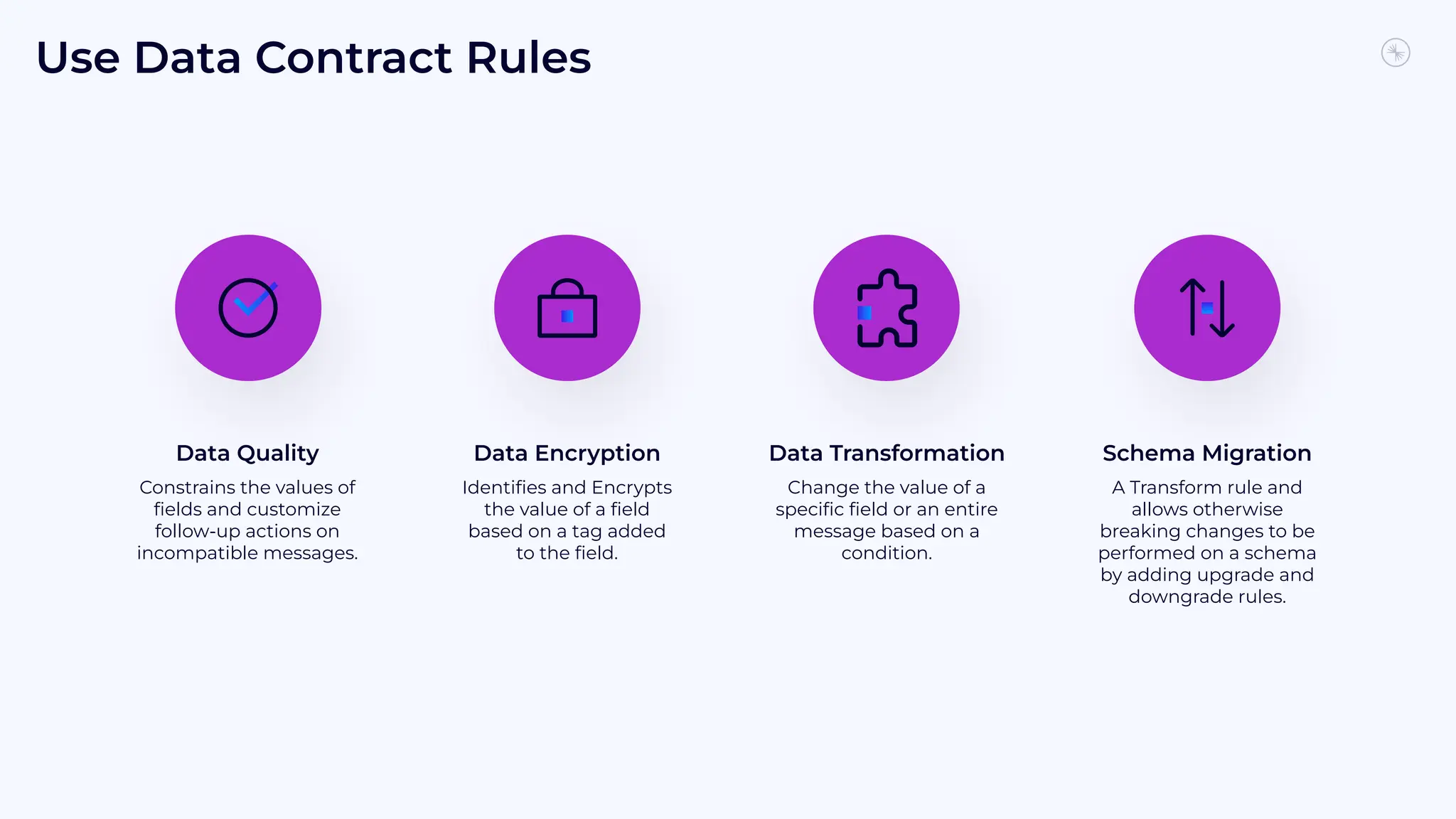

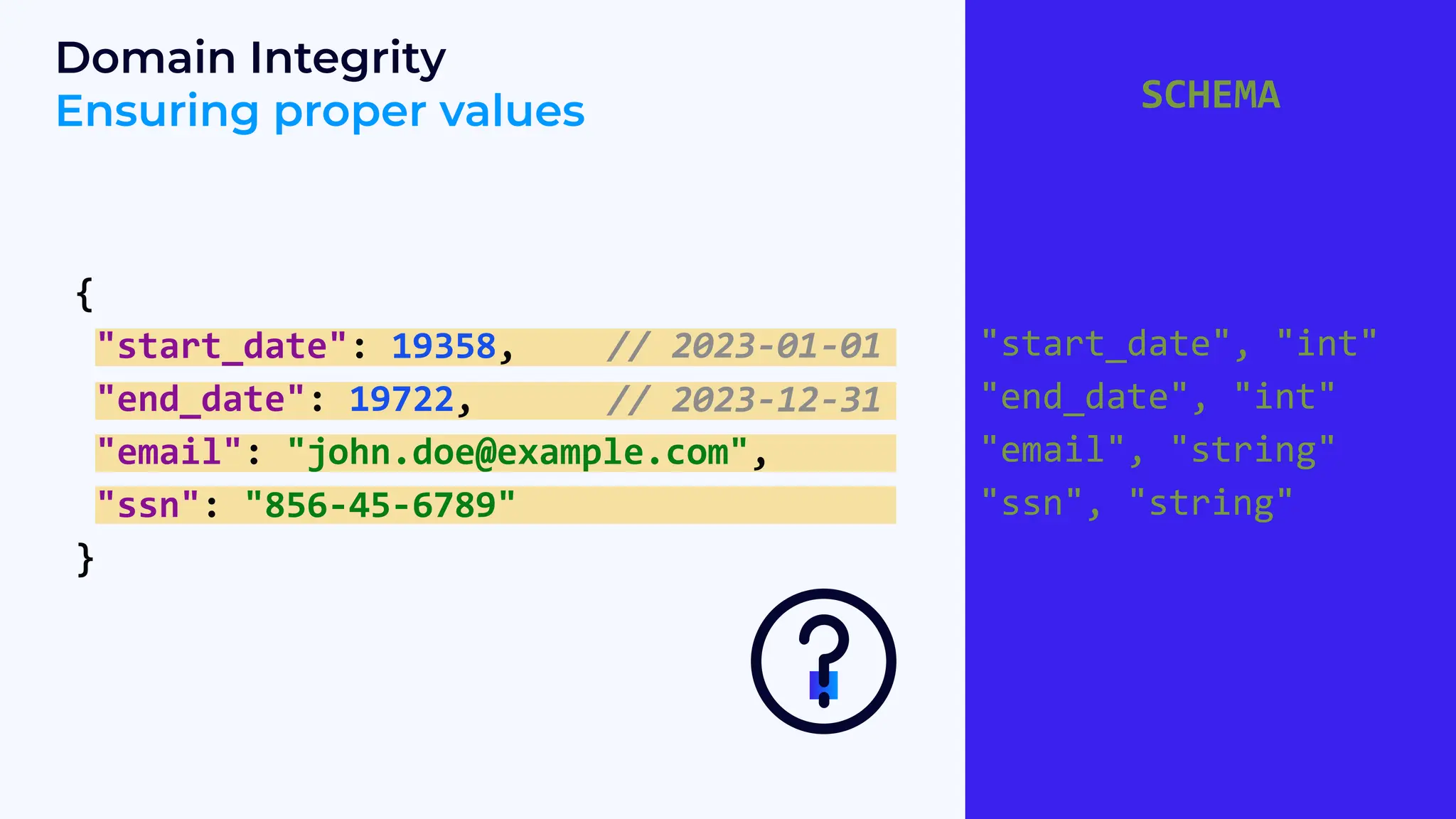

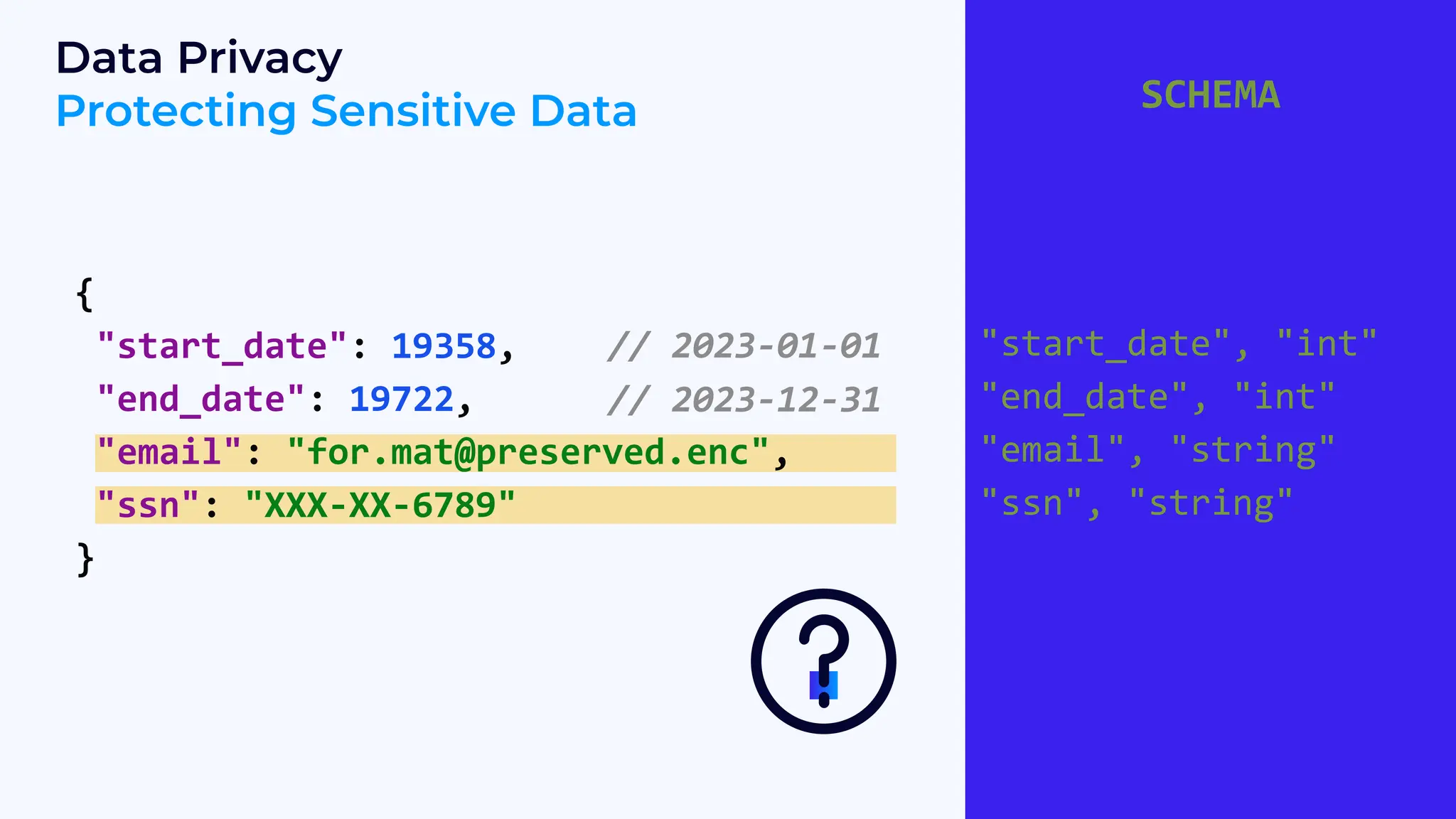

The document discusses stream governance and the related technical features of Confluent Platform, including schema management, data contracts, and the importance of adhering to schemas to prevent data quality issues. It also outlines a roadmap of future developments, such as data cataloging and encryption, aimed at reliable data processing and stronger security. Its central argument is that enforcing data contracts makes data more trustworthy and reduces operational complexity.

Example Avro Schema

```json
{
  "type": "record",
  "name": "Membership",
  "fields": [
    {"name": "start_date", "type": {"type": "int", "logicalType": "date"}},
    {"name": "end_date", "type": {"type": "int", "logicalType": "date"}},
    {"name": "email", "type": "string"},
    {"name": "ssn", "type": "string"}
  ]
}
```

![Example Avro Schema](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-11-2048.jpg)
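The two date fields use Avro's `date` logical type, which annotates an `int` counting days since the Unix epoch (1970-01-01). Below is a minimal Python sketch of building a record shaped like `Membership` with only the standard library. `make_membership` and the sample values are hypothetical; a real producer would hand such a dict to an Avro serializer rather than use it directly.

```python
from datetime import date, timedelta

# Avro's "date" logical type stores an int: days since 1970-01-01.
EPOCH = date(1970, 1, 1)


def to_avro_date(d: date) -> int:
    """Encode a calendar date as days since the Unix epoch."""
    return (d - EPOCH).days


def from_avro_date(days: int) -> date:
    """Decode days since the Unix epoch back into a calendar date."""
    return EPOCH + timedelta(days=days)


def make_membership(start: date, end: date, email: str, ssn: str) -> dict:
    """Hypothetical helper: a dict with the four Membership fields."""
    return {
        "start_date": to_avro_date(start),
        "end_date": to_avro_date(end),
        "email": email,
        "ssn": ssn,
    }


record = make_membership(date(2024, 1, 1), date(2024, 12, 31),
                         "ada@example.com", "000-00-0000")
print(record["start_date"])                # 19723
print(from_avro_date(record["end_date"]))  # 2024-12-31
```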

Change in Structure: can be disruptive.

```json
{
  "type": "record",
  "name": "Membership",
  "fields": [
    {"name": "start_date", "type": {"type": "int", "logicalType": "date"}},
    {"name": "end_date", "type": {"type": "int", "logicalType": "date"}},
    {"name": "email", "type": "string"},
    {"name": "ssn", "type": "string"},
    {"name": "full_name", "type": ["null", "string"]}
  ]
}
```

![Change in Structure: can be disruptive](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-12-2048.jpg)
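Why such a change can be disruptive follows from Avro's schema resolution: a consumer decodes each record with its own (reader) schema against the producer's (writer) schema, copying fields present in both, dropping writer-only fields, and filling reader-only fields from their `default`; a reader-only field without a default is an error. The sketch below is a simplified model of that rule (flat records, field names only, no type promotion or aliases), not the Avro library itself; `resolve` and the sample data are hypothetical. Note that the `full_name` field above declares no `default`.

```python
def resolve(record: dict, writer_fields: list, reader_fields: list) -> dict:
    """Simplified Avro record resolution for flat records (names only)."""
    writer_names = {f["name"] for f in writer_fields}
    result = {}
    for field in reader_fields:
        name = field["name"]
        if name in writer_names:
            result[name] = record[name]        # present in the data: copy it
        elif "default" in field:
            result[name] = field["default"]    # absent from the data: use the default
        else:
            raise ValueError(f"field {name!r} is missing and has no default")
    return result                              # writer-only fields are dropped


V1 = [{"name": n} for n in ("start_date", "end_date", "email", "ssn")]
V2 = V1 + [{"name": "full_name"}]                           # as in the schema above
V2_DEFAULT = V1 + [{"name": "full_name", "default": None}]  # with "default": null

old = {"start_date": 19723, "end_date": 20088,
       "email": "ada@example.com", "ssn": "000-00-0000"}
new = dict(old, full_name="Ada Lovelace")

# Old consumer (V1) reading new data (V2): full_name is ignored.
print(sorted(resolve(new, V2, V1)))
# New consumer (V2) reading old data (V1): fails without a default...
try:
    resolve(old, V1, V2)
except ValueError as err:
    print("error:", err)
# ...but succeeds once full_name declares "default": null.
print(resolve(old, V1, V2_DEFAULT)["full_name"])  # None
```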

![Example Avro Schema](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-21-2048.jpg)

Constant Change: how do we know if this is allowed?

![Constant Change](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-22-2048.jpg)
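Schema Registry answers that question when a new schema version is registered: it checks the candidate under the subject's compatibility mode, which defaults to `BACKWARD` (consumers using the new schema can read data written with the latest registered one). The following is a deliberately simplified, hypothetical approximation of a `BACKWARD` check for flat records, not Schema Registry's actual algorithm: an added field must declare a default, and a field present in both versions must keep its type.

```python
def backward_problems(old_fields: list, new_fields: list) -> list:
    """Simplified BACKWARD check: can new_fields read data written with old_fields?"""
    old_by_name = {f["name"]: f for f in old_fields}
    problems = []
    for field in new_fields:
        previous = old_by_name.get(field["name"])
        if previous is None and "default" not in field:
            problems.append(f"added field {field['name']!r} has no default")
        elif previous is not None and previous["type"] != field["type"]:
            problems.append(f"field {field['name']!r} changed type")
    # Fields that exist only in old_fields were removed; the new reader ignores them.
    return problems


DATE = {"type": "int", "logicalType": "date"}
OLD = [
    {"name": "start_date", "type": DATE},
    {"name": "end_date", "type": DATE},
    {"name": "email", "type": "string"},
    {"name": "ssn", "type": "string"},
]
NEW = OLD + [{"name": "full_name", "type": ["null", "string"]}]
NEW_WITH_DEFAULT = OLD + [{"name": "full_name", "type": ["null", "string"], "default": None}]

print(backward_problems(OLD, NEW))               # ["added field 'full_name' has no default"]
print(backward_problems(OLD, NEW_WITH_DEFAULT))  # []
```

Checking before registration, rather than discovering the problem in a consumer, is what turns the schema into an enforceable contract.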

Data Quality Rule

```json
{
  "schema": "...",
  "schemaType": "AVRO",
  "ruleSet": {
    "domainRules": [{
      "name": "validateEmail",
      "kind": "CONDITION",
      "mode": "WRITE",
      "type": "CEL",
      "doc": "Rule checks email is well formatted and sends record to a DLQ if not.",
      "expr": "Membership.email.matches(r\".+@.+\\..+\")",
      "onFailure": "DLQ",
      "params": {
        "dlq.topic": "bad_members"
      }
    }]
  }
}
```

![Data Quality Rule](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-28-2048.jpg)
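With `mode` set to `WRITE`, the rule's CEL condition is evaluated as each record is serialized, and `onFailure: DLQ` diverts failing records to the `bad_members` dead-letter topic named in `params`. The Python sketch below models only that routing decision: it assumes a hypothetical main topic called `members` and uses `re.search` for the regex test. In practice the Confluent serializer applies the rule, not application code.

```python
import re

# Regex from the rule's CEL expression: something@something.something
EMAIL_PATTERN = re.compile(r".+@.+\..+")
DLQ_TOPIC = "bad_members"   # from the rule's "dlq.topic" param
MAIN_TOPIC = "members"      # hypothetical main topic name


def route_on_write(records: list) -> dict:
    """Split records between the main topic and the DLQ, mimicking onFailure=DLQ."""
    routed = {MAIN_TOPIC: [], DLQ_TOPIC: []}
    for record in records:
        if EMAIL_PATTERN.search(record["email"]):
            routed[MAIN_TOPIC].append(record)
        else:
            routed[DLQ_TOPIC].append(record)
    return routed


batch = [{"email": "ada@example.com"}, {"email": "not-an-email"}, {"email": "bob@localhost"}]
result = route_on_write(batch)
print([r["email"] for r in result[DLQ_TOPIC]])  # ['not-an-email', 'bob@localhost']
```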

Data Encryption Rule (on roadmap)

```json
{
  "schema": "...",
  "schemaType": "AVRO",
  "metadata": {
    "tags": {
      "Membership.email": ["PII"]
    }
  },
  "ruleSet": {
    "domainRules": [{
      "name": "encryptPII",
      "type": "ENCRYPT",
      "doc": "Rule encrypts every field tagged as PII.",
      "tags": ["PII"],
      "params": {
        "encrypt.kek.name": "ce581594-3115-486e-b391-5ea874371e73",
        "encrypt.kms.type": "aws-kms",
        "encrypt.kms.key.id": "arn:aws:kms:us-east-1:586051073099:key/ce58..."
      }
    }]
  }
}
```

![Data Encryption Rule: on roadmap](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-30-2048.jpg)
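This rule selects fields by tag rather than by name: the schema's `metadata.tags` block marks `Membership.email` as `PII`, and the `ENCRYPT` rule targets every field carrying that tag, with keys managed through the KMS identified by `encrypt.kms.type` and `encrypt.kms.key.id`. The sketch below models only the tag-based field selection. The `encrypt` callable is a hypothetical stand-in for real client-side encryption, and `placeholder_encrypt` used in the example is not encryption at all.

```python
from typing import Callable

# Field tags as declared in the schema's "metadata" block.
TAGS = {"Membership.email": ["PII"]}


def fields_with_tag(record_name: str, tag: str, tags: dict) -> set:
    """Return the field names of record_name that carry the given tag."""
    prefix = record_name + "."
    return {path[len(prefix):] for path, labels in tags.items()
            if path.startswith(prefix) and tag in labels}


def encrypt_tagged(record: dict, record_name: str, tag: str, tags: dict,
                   encrypt: Callable[[str], str]) -> dict:
    """Apply encrypt() to every tagged field and leave the others untouched."""
    targets = fields_with_tag(record_name, tag, tags)
    return {name: encrypt(value) if name in targets else value
            for name, value in record.items()}


def placeholder_encrypt(value: str) -> str:
    # NOT encryption: a hypothetical stand-in that only marks the field.
    return "<encrypted>"


member = {"email": "ada@example.com", "ssn": "000-00-0000"}
print(encrypt_tagged(member, "Membership", "PII", TAGS, placeholder_encrypt))
# {'email': '<encrypted>', 'ssn': '000-00-0000'}
```

Because selection is driven by tags, tagging another field as `PII` would bring it under the same rule without editing the rule itself.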

Data Transformation Rule

```json
{
  "schema": "...",
  "schemaType": "AVRO",
  "ruleSet": {
    "domainRules": [{
      "name": "populateDefaultSSN",
      "kind": "TRANSFORM",
      "type": "CEL_FIELD",
      "doc": "Rule checks if ssn is empty and replaces it with 'unspecified' if it is.",
      "mode": "WRITE",
      "expr": "name == 'ssn' ; value == '' ? 'unspecified' : value"
    }]
  }
}
```

![Data Transformation Rule](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-31-2048.jpg)
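A `CEL_FIELD` rule is evaluated per field: the part of `expr` before the `;` is a guard that selects fields (here by `name`), and the part after it computes the field's new `value`. The following is a minimal Python analogue of that evaluation, with `ssn_guard` and `ssn_default` as hypothetical functions mirroring the two halves of the CEL expression. It also shows why the guard matters: an empty `email` is left untouched.

```python
from typing import Any, Callable

Guard = Callable[[str, Any], bool]
Transform = Callable[[str, Any], Any]


def apply_field_rule(record: dict, guard: Guard, transform: Transform) -> dict:
    """Run transform(name, value) on every field for which guard(name, value) is true."""
    return {name: transform(name, value) if guard(name, value) else value
            for name, value in record.items()}


# Mirrors the guard half: name == 'ssn'
def ssn_guard(name: str, value: Any) -> bool:
    return name == "ssn"


# Mirrors the transform half: value == '' ? 'unspecified' : value
def ssn_default(name: str, value: Any) -> Any:
    return "unspecified" if value == "" else value


print(apply_field_rule({"email": "", "ssn": ""}, ssn_guard, ssn_default))
# {'email': '', 'ssn': 'unspecified'}
```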

Schema Migration Rule

```json
{
  "schema": "...",
  "schemaType": "AVRO",
  "ruleSet": {
    "migrationRules": [{
      "name": "changeSsnToSocialSecurityNumber",
      "kind": "TRANSFORM",
      "type": "JSONATA",
      "doc": "Consumer is on new major version and gets socialSecurityNumber while producer sends ssn.",
      "mode": "UPGRADE",
      "expr": "$merge([$sift($, function($v, $k) {$k != 'ssn'}), {'socialSecurityNumber': $.'ssn'}])"
    }, {
      "name": "changeSocialSecurityNumberToSsn",
      "kind": "TRANSFORM",
      "type": "JSONATA",
      "doc": "Consumer is on old major version and gets ssn while producer sends socialSecurityNumber.",
      "mode": "DOWNGRADE",
      "expr": "$merge([$sift($, function($v, $k) {$k != 'socialSecurityNumber'}), {'ssn': $.'socialSecurityNumber'}])"
    }]
  }
}
```

![Schema Migration Rule](https://image.slidesharecdn.com/confluentdatacontractsfeatureswebinar-17thseptember2024-240920103424-d2d0dff3/75/Strumenti-e-Strategie-di-Stream-Governance-con-Confluent-Platform-32-2048.jpg)
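The two JSONata expressions perform the same rename in opposite directions: `$sift` keeps every key except the old name, and `$merge` adds that value back under the new name. Per the rules' `doc` strings, `UPGRADE` serves a consumer on a newer major version than the producer and `DOWNGRADE` one on an older version. A Python sketch of the equivalent dictionary transformation follows, assuming the renamed field is always present; `rename_field`, `upgrade`, and `downgrade` are hypothetical names.

```python
def rename_field(record: dict, old: str, new: str) -> dict:
    """Equivalent of $merge([$sift($, function($v, $k) {$k != old}), {new: $.old}])."""
    renamed = {key: value for key, value in record.items() if key != old}
    renamed[new] = record[old]  # assumes the old field is present
    return renamed


def upgrade(record: dict) -> dict:
    """Consumer on the new major version: ssn becomes socialSecurityNumber."""
    return rename_field(record, "ssn", "socialSecurityNumber")


def downgrade(record: dict) -> dict:
    """Consumer on the old major version: socialSecurityNumber becomes ssn."""
    return rename_field(record, "socialSecurityNumber", "ssn")


v1_record = {"email": "ada@example.com", "ssn": "000-00-0000"}
v2_record = upgrade(v1_record)
print(v2_record)  # {'email': 'ada@example.com', 'socialSecurityNumber': '000-00-0000'}
print(downgrade(v2_record) == v1_record)  # True
```

Pairing each migration with its inverse is what lets producers and consumers on different major versions keep exchanging data during a rename.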