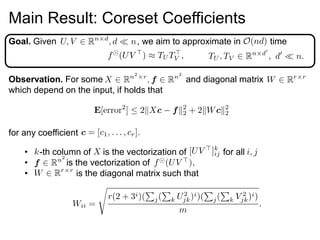

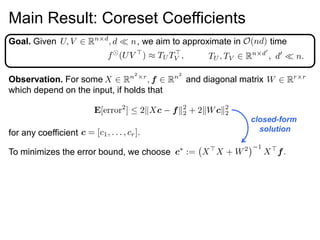

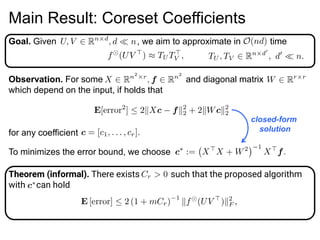

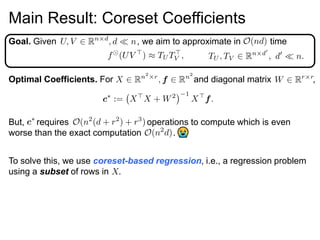

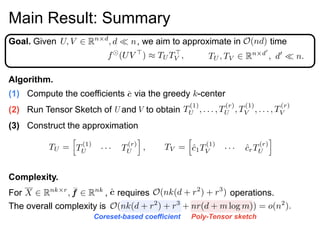

1) The document proposes a polynomial tensor sketch method to approximate element-wise matrix functions in linear time. It combines tensor sketching, which can approximate matrix monomials fast, with polynomial approximation of the target function.

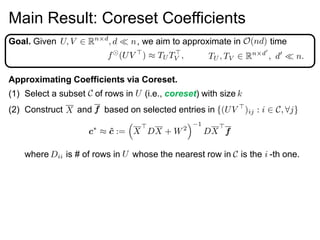

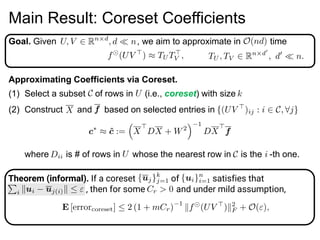

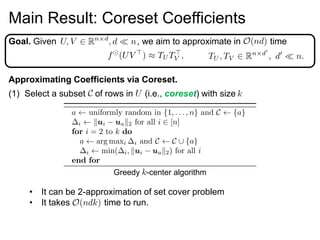

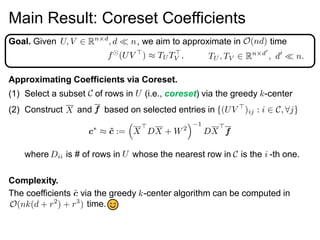

2) Coreset-based regression is used to efficiently compute optimal polynomial coefficients by selecting a small subset of rows.

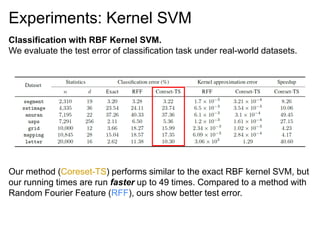

3) Experiments show the method outperforms alternatives like random Fourier features for applications like kernel approximation, kernel SVM, and Sinkhorn algorithm, providing speedups of up to 49x.

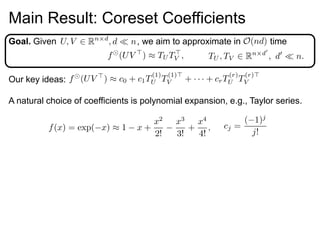

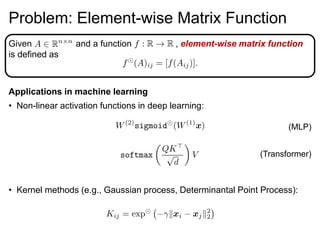

![Main Result: Polynomial Tensor Sketch

Our key ideas:

1. Tensor sketch [PP13] can approximate element-wise matrix monomial

function in time, i.e.,

Goal. Given , we aim to approximate in time](https://image.slidesharecdn.com/polytensorpresentation-200706075707/85/Polynomial-Tensor-Sketch-for-Element-wise-Matrix-Function-ICML-2020-7-320.jpg)

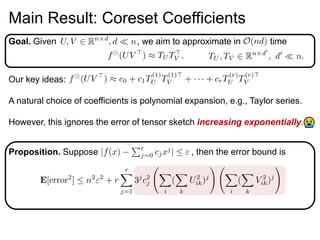

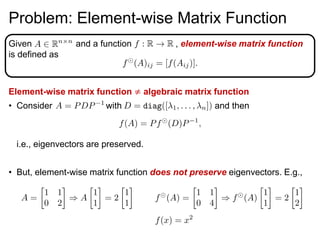

![Main Result: Polynomial Tensor Sketch

Our key ideas:

1. Tensor sketch [PP13] can approximate element-wise matrix monomial

function in time, i.e.,

2. Polynomial approximation on

Goal. Given , we aim to approximate in time](https://image.slidesharecdn.com/polytensorpresentation-200706075707/85/Polynomial-Tensor-Sketch-for-Element-wise-Matrix-Function-ICML-2020-8-320.jpg)

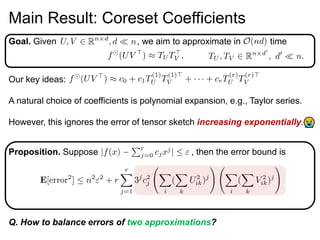

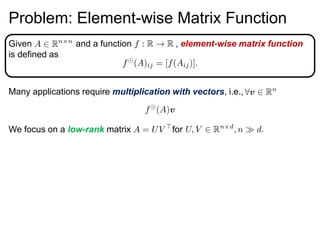

![Main Result: Polynomial Tensor Sketch

Our key ideas:

1. Tensor sketch [PP13] can approximate element-wise matrix monomial

function in time.

2. Polynomial approximation on

Applying Tensor sketch with , the low-rank approximation on

can be done in time.

target dimension for Tensor sketch

polynomial degree

Goal. Given , we aim to approximate in time](https://image.slidesharecdn.com/polytensorpresentation-200706075707/85/Polynomial-Tensor-Sketch-for-Element-wise-Matrix-Function-ICML-2020-9-320.jpg)

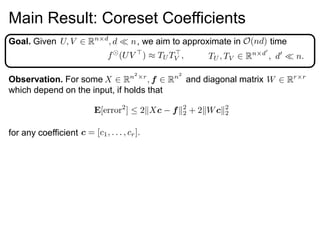

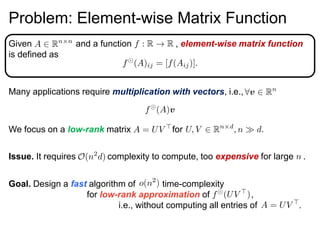

![Main Result: Polynomial Tensor Sketch

Our key ideas:

1. Tensor sketch [PP13] can approximate element-wise matrix monomial

function in time.

2. Polynomial approximation on

Applying Tensor sketch with , the low-rank approximation on

can be done in time.

Q. How can find good coefficients?

target dimension for Tensor sketch

polynomial degree

Goal. Given , we aim to approximate in time](https://image.slidesharecdn.com/polytensorpresentation-200706075707/85/Polynomial-Tensor-Sketch-for-Element-wise-Matrix-Function-ICML-2020-10-320.jpg)