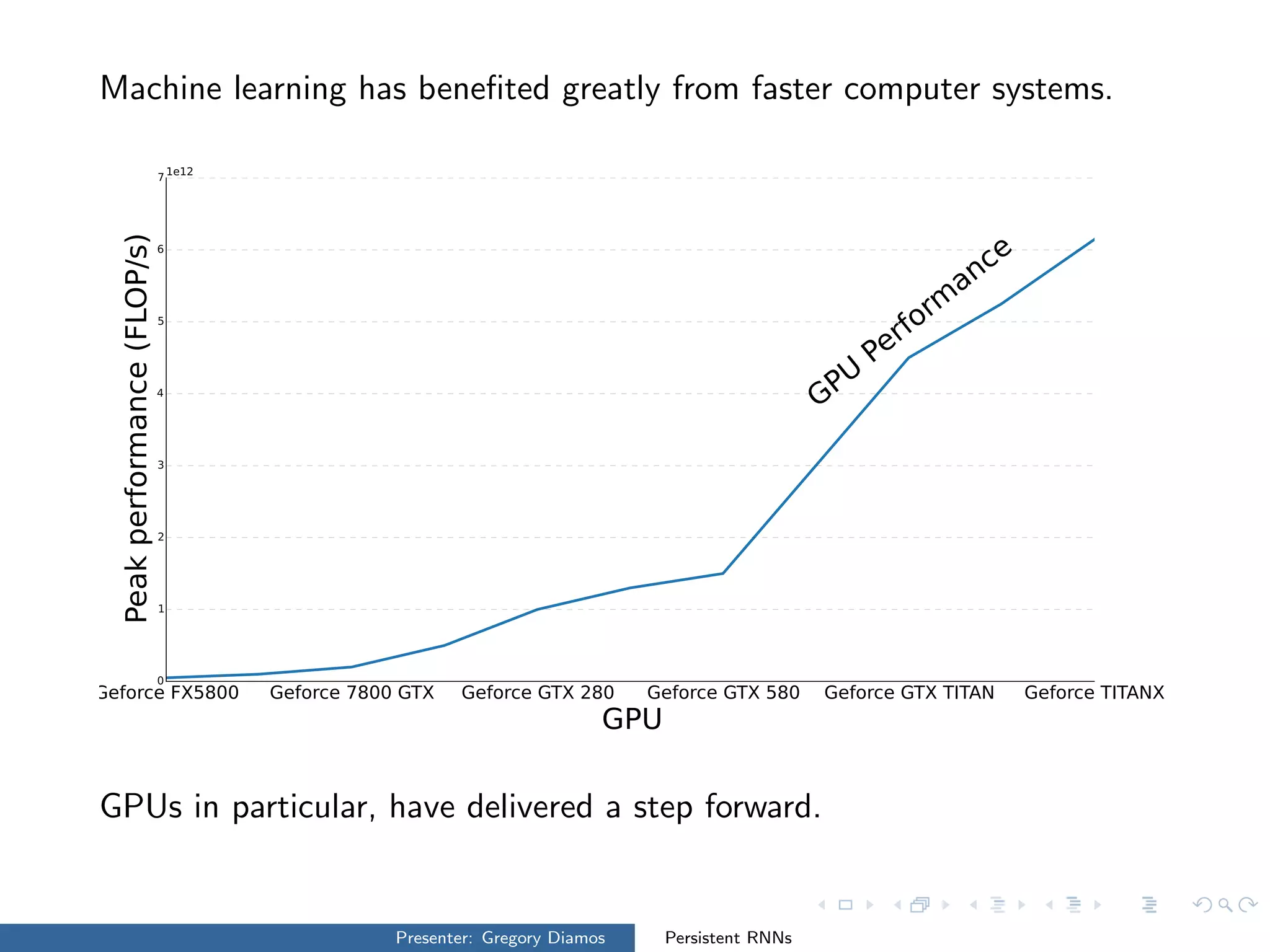

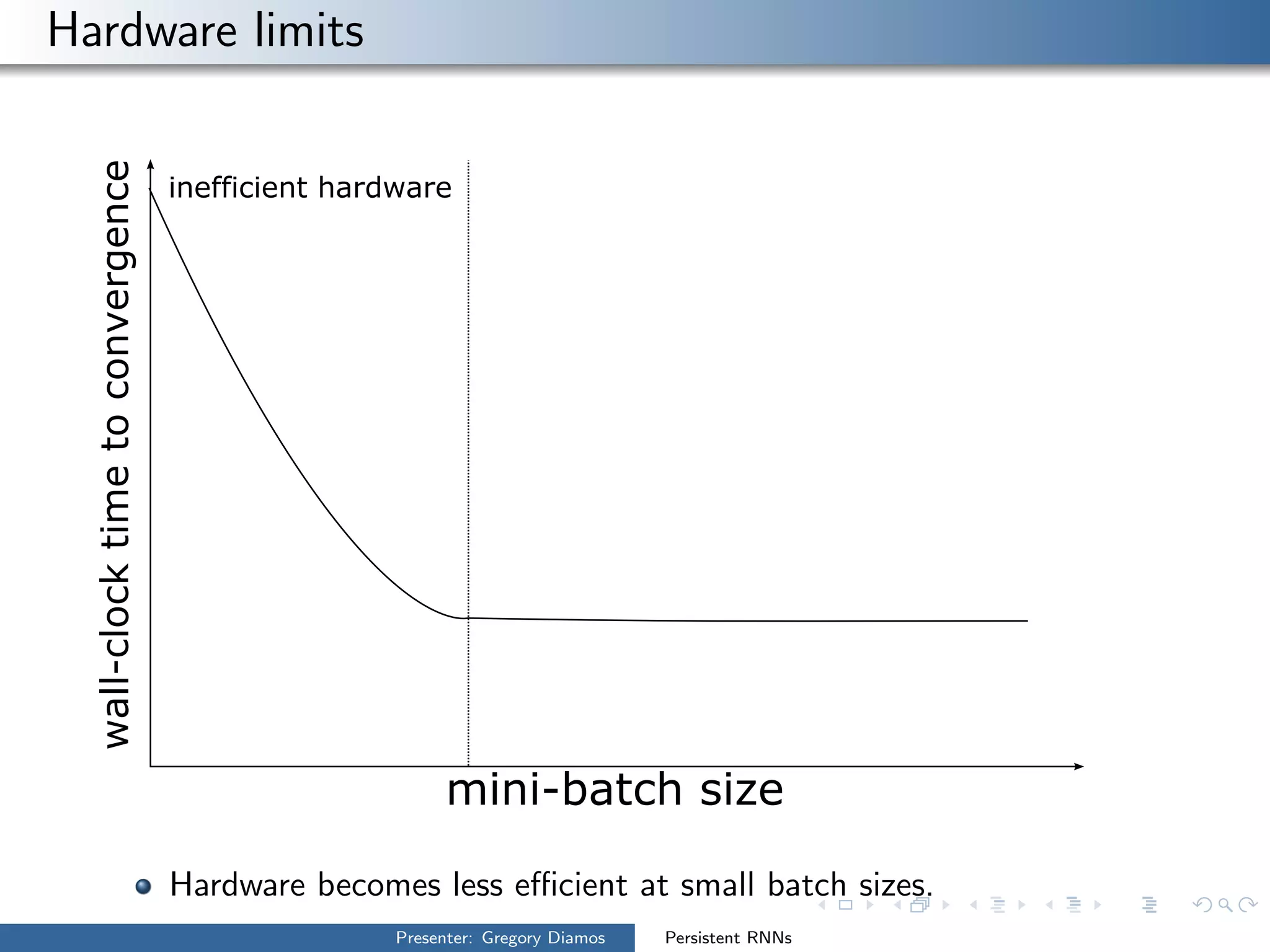

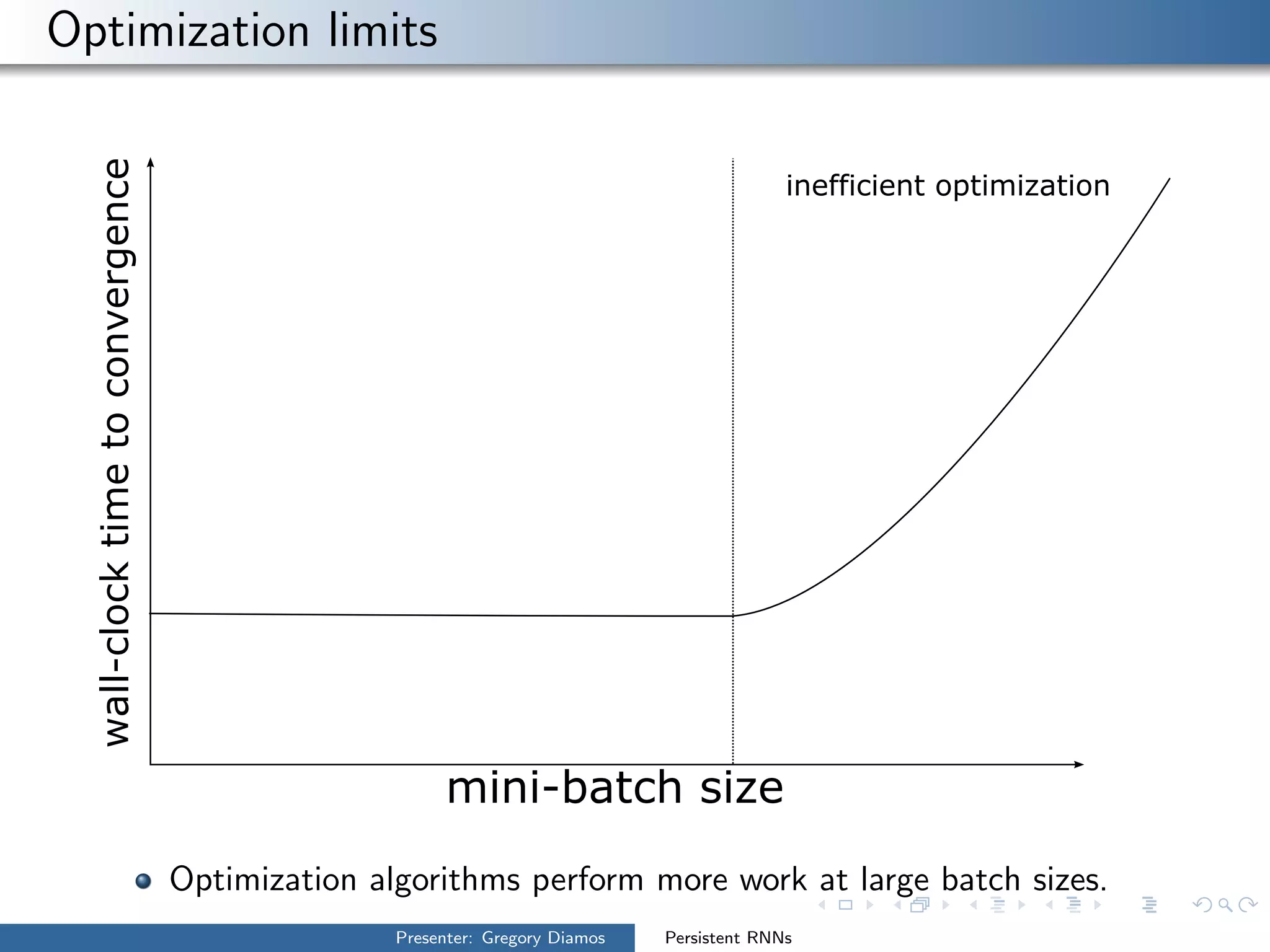

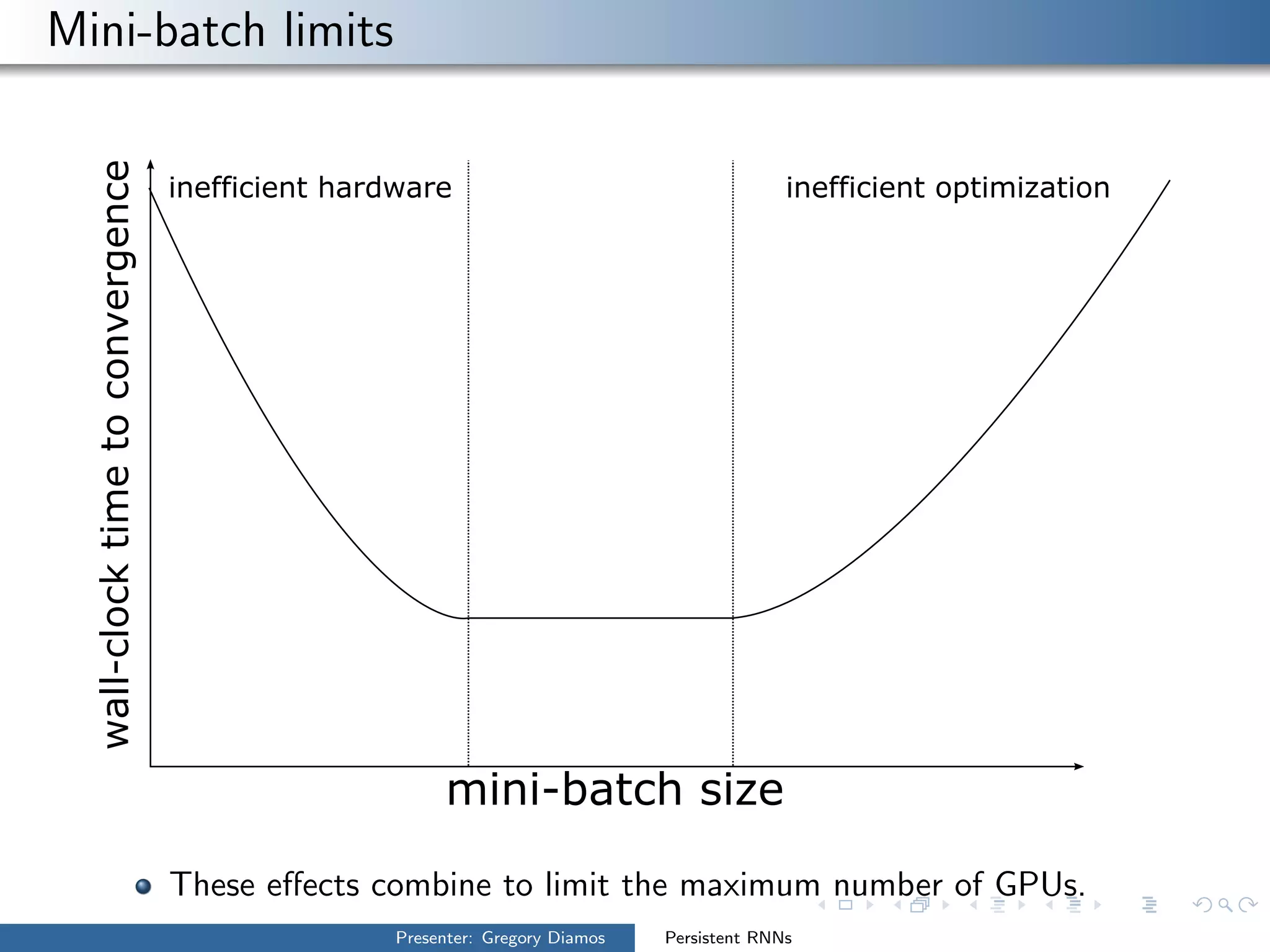

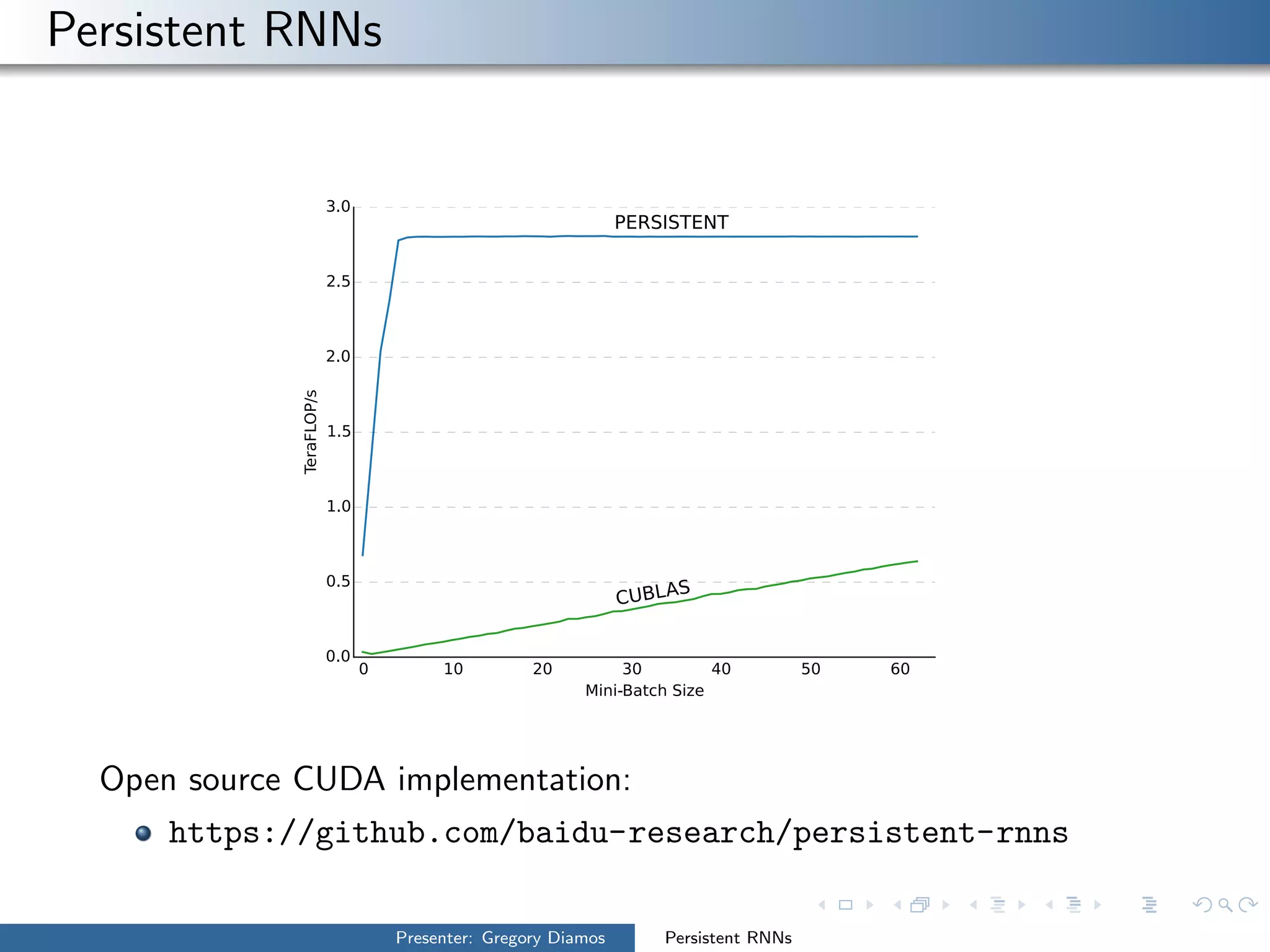

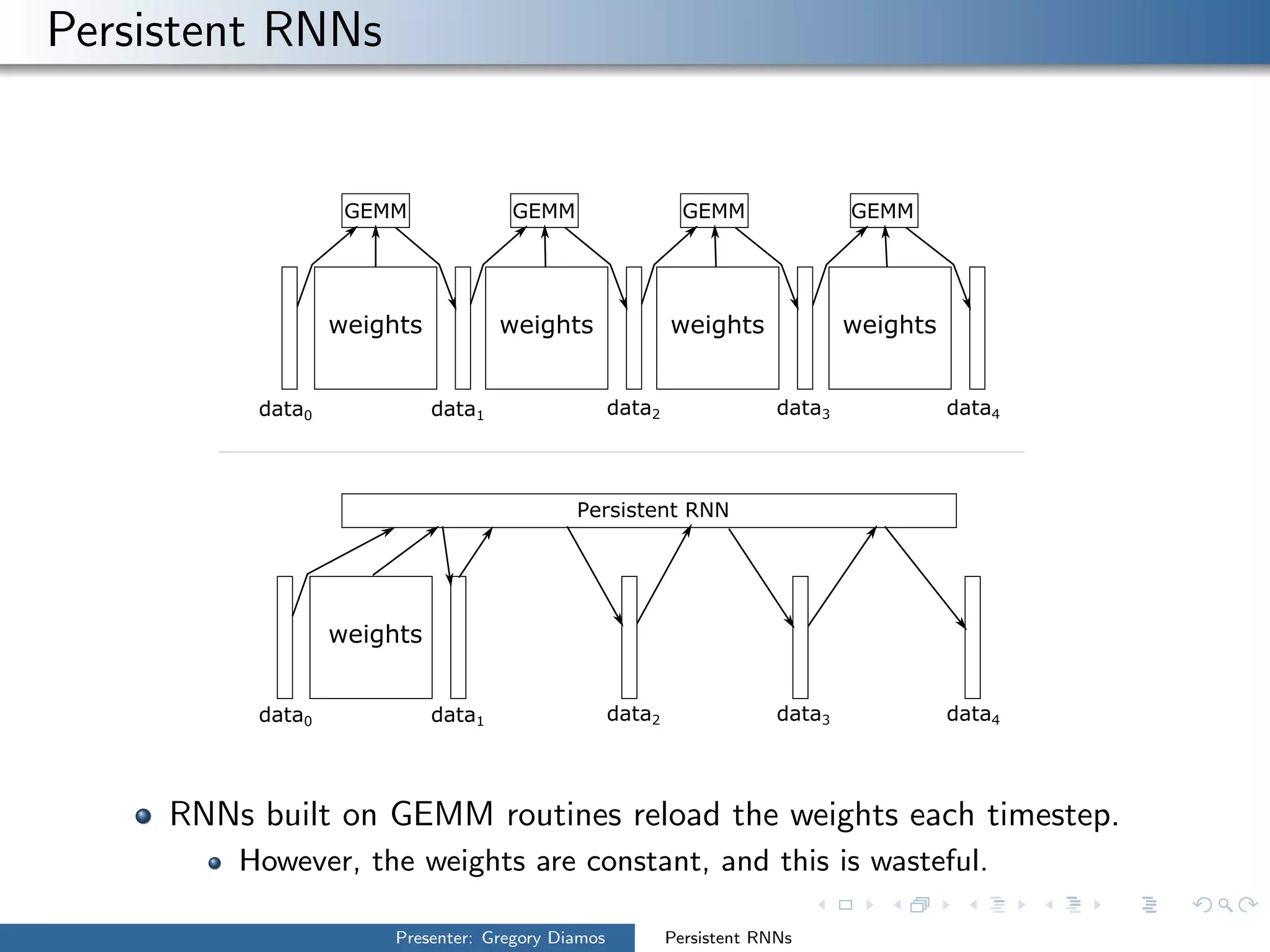

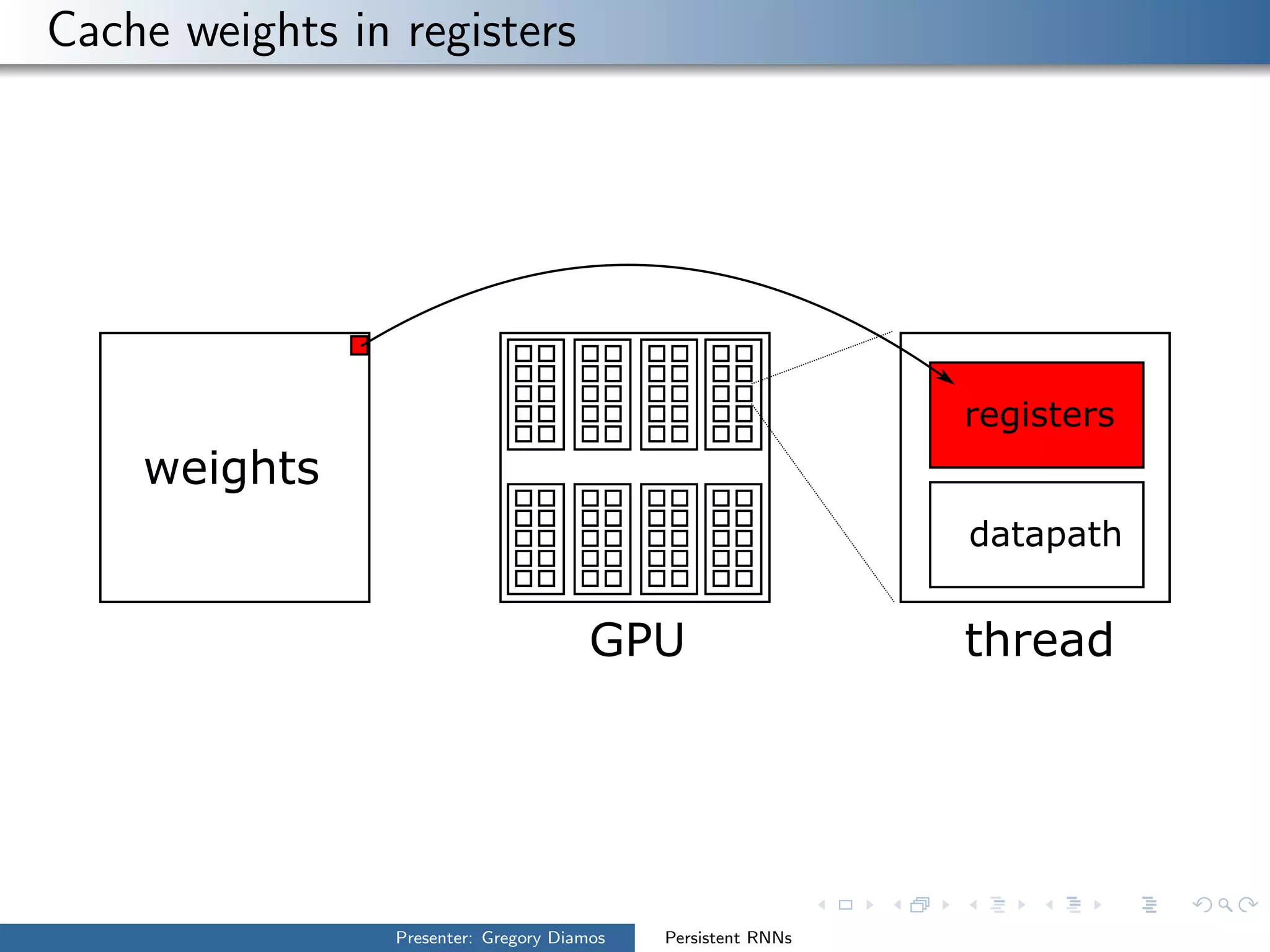

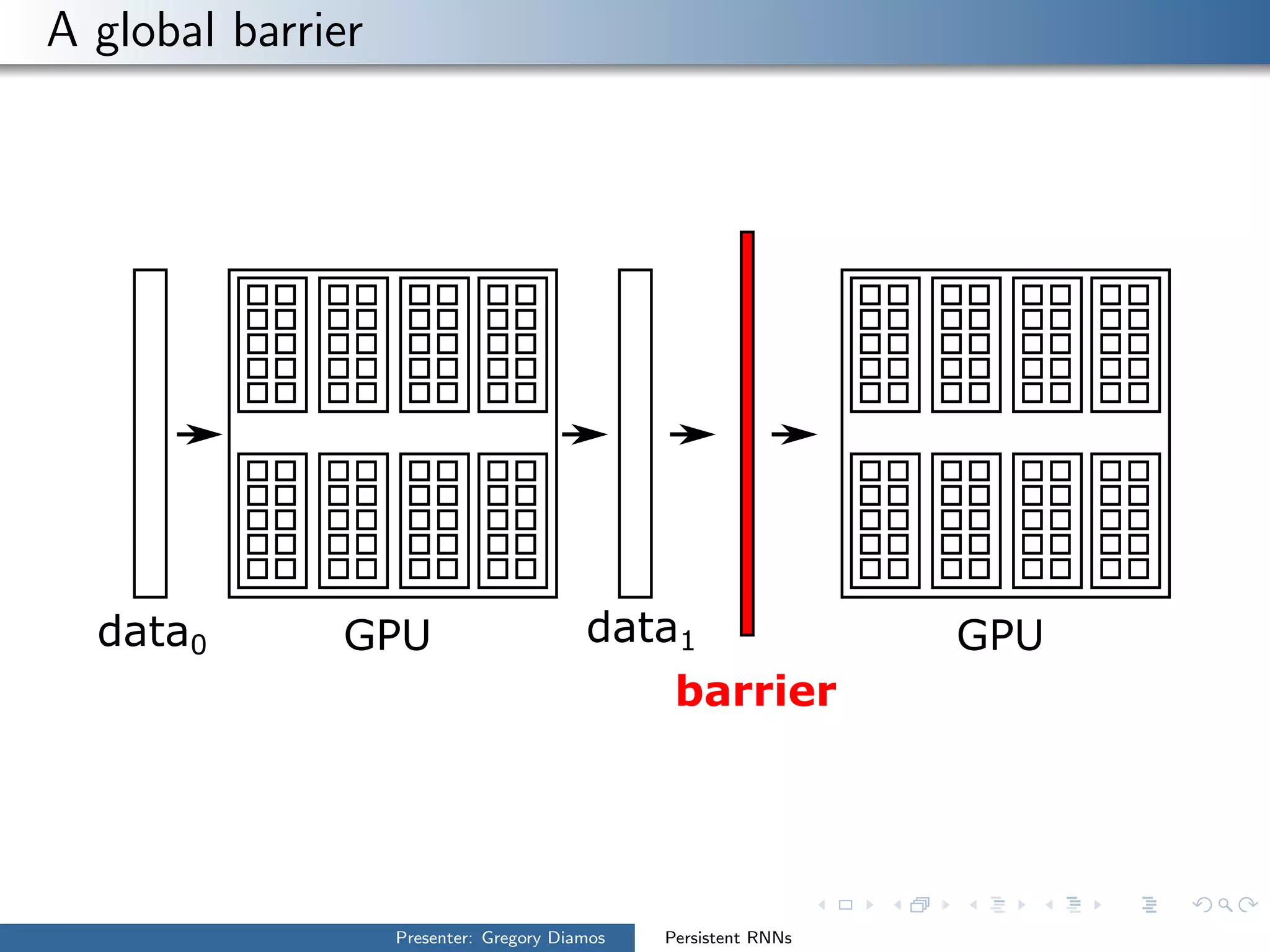

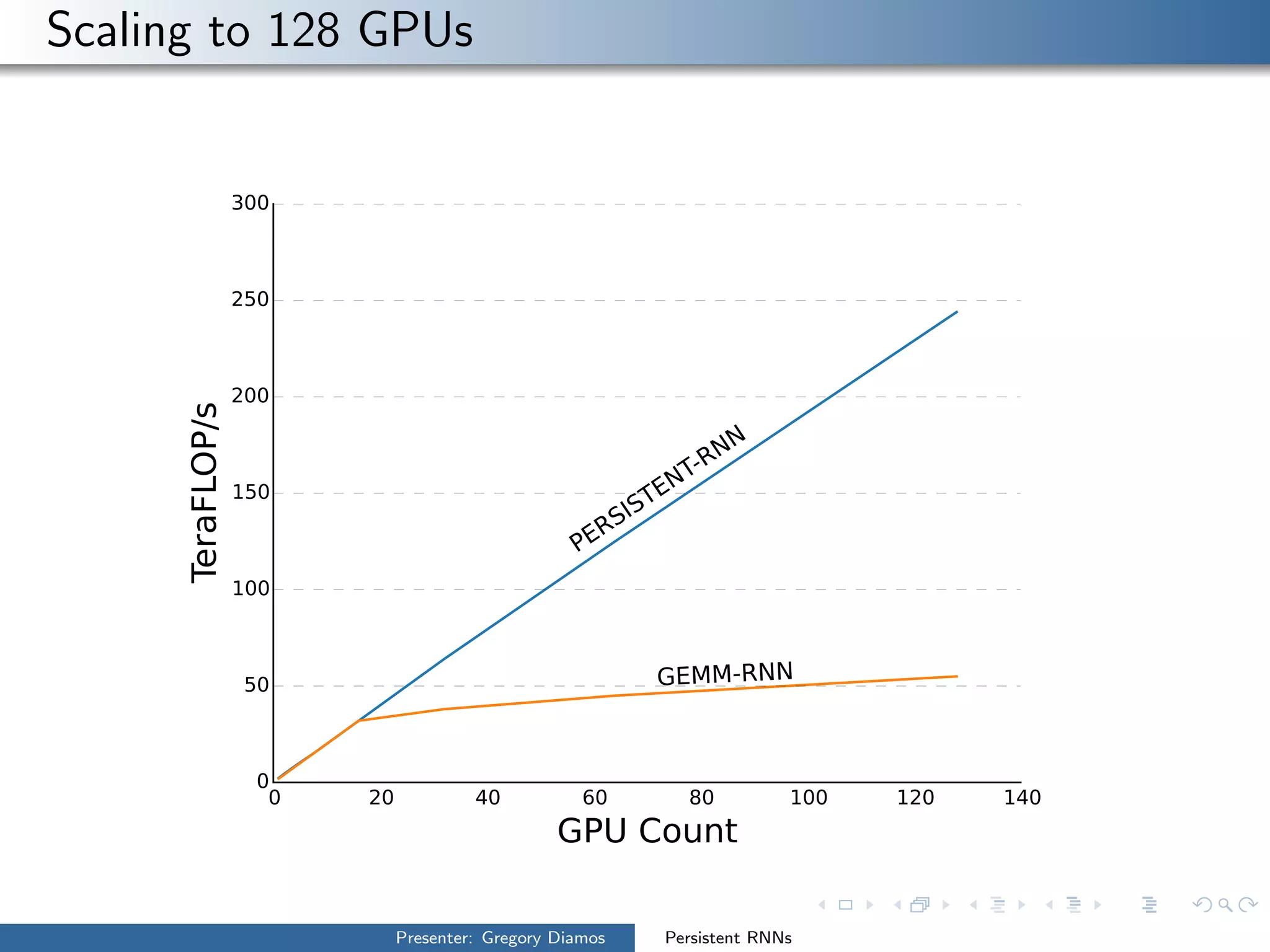

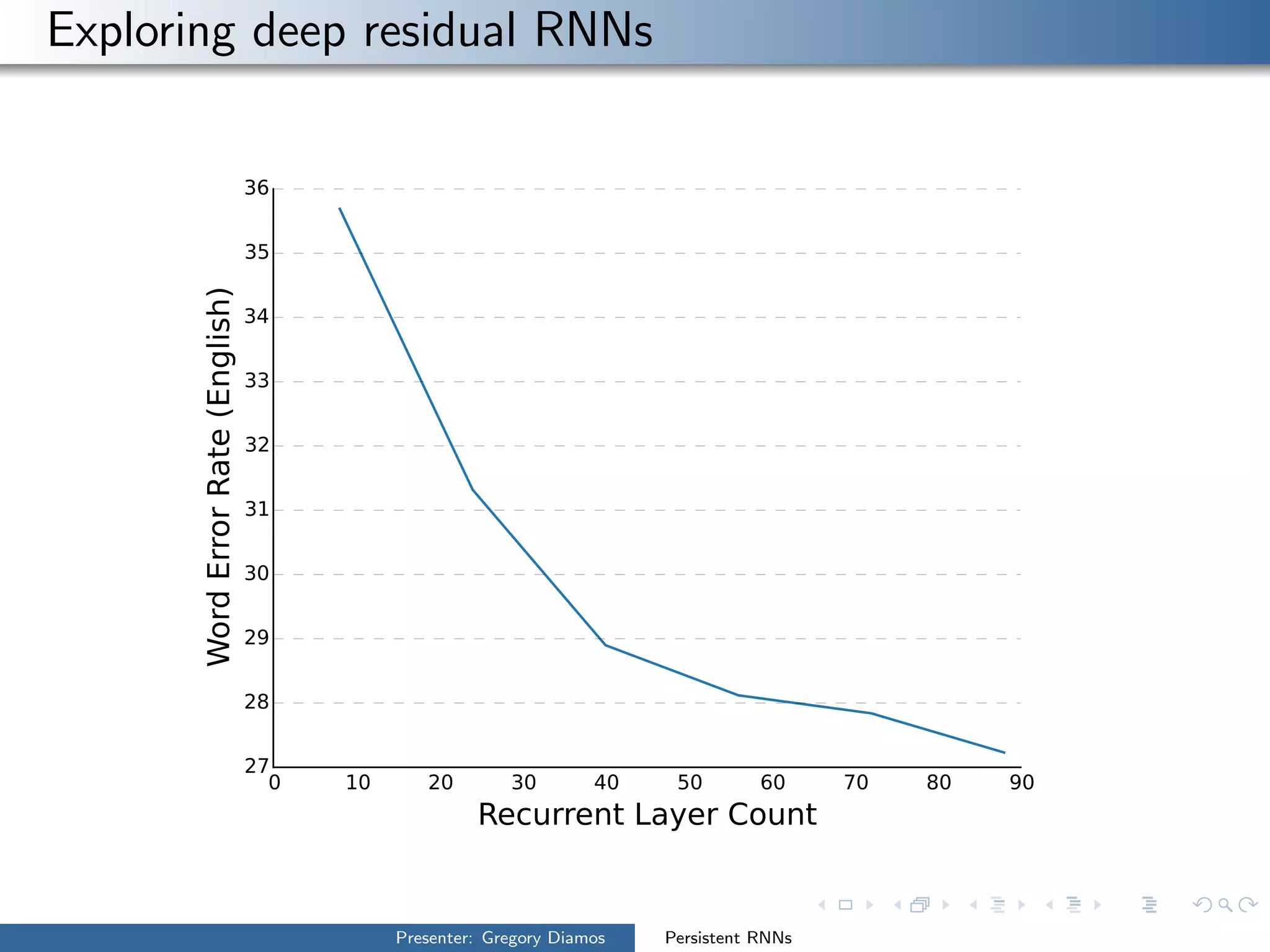

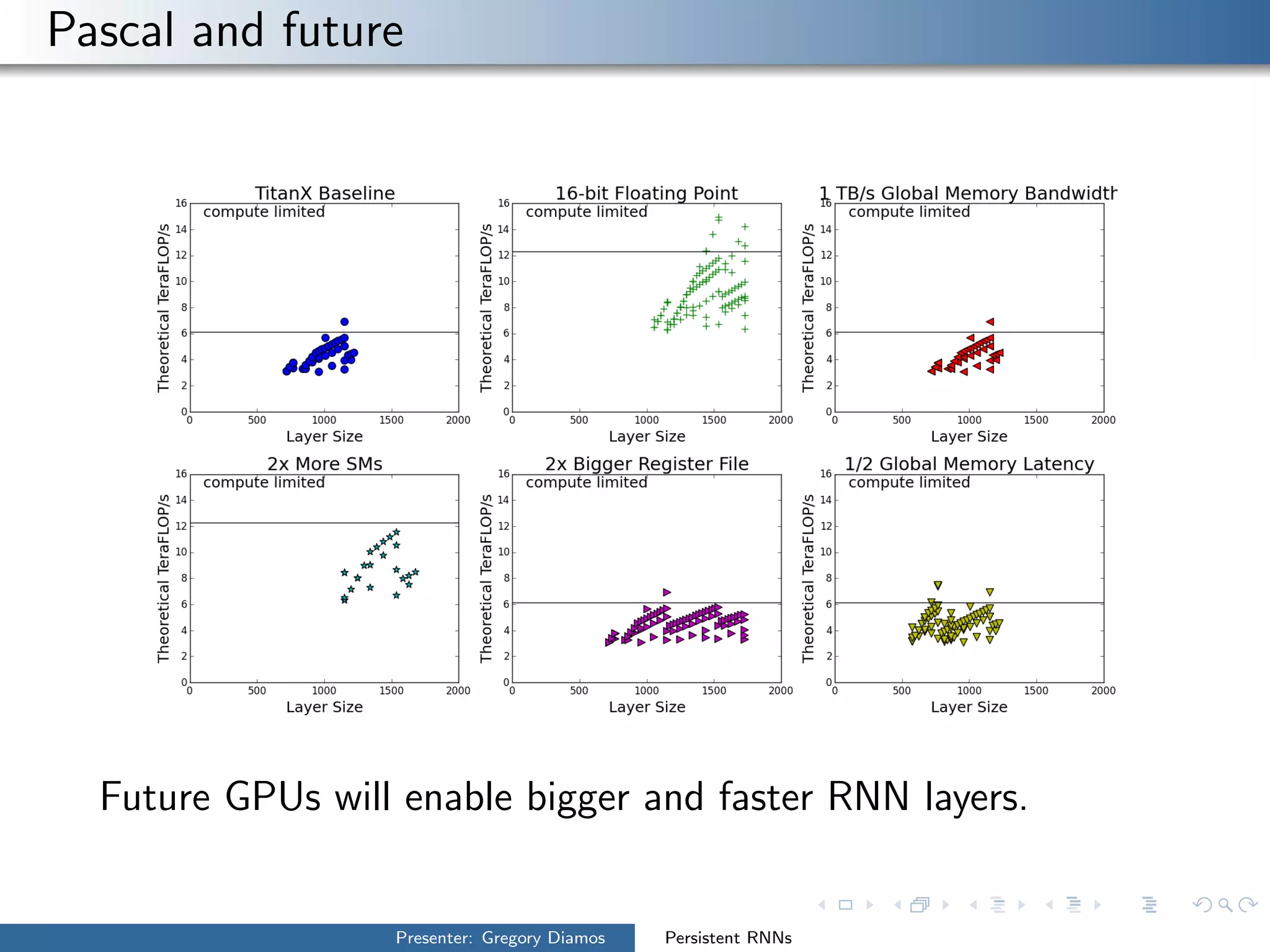

In this presentation, Gregory Diamos discusses how persistent RNNs can improve machine learning performance by using GPU resources more efficiently, in particular by addressing the limits of data parallelism and of existing optimizations. He highlights the potential for scaling RNNs on upcoming GPU hardware and encourages the development of more efficient algorithms. He also advocates pushing performance boundaries toward much higher computational throughput at minimal energy consumption.