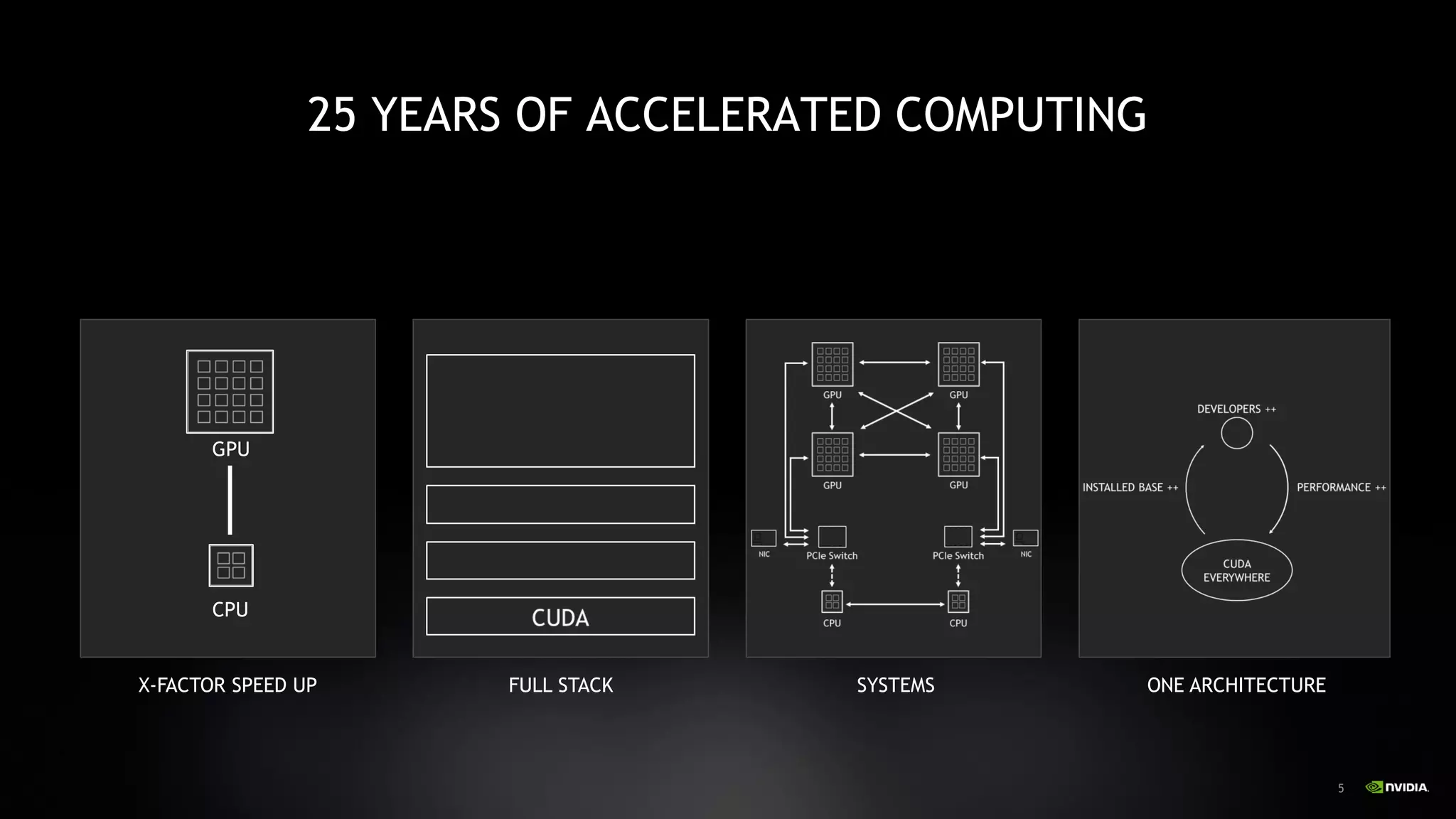

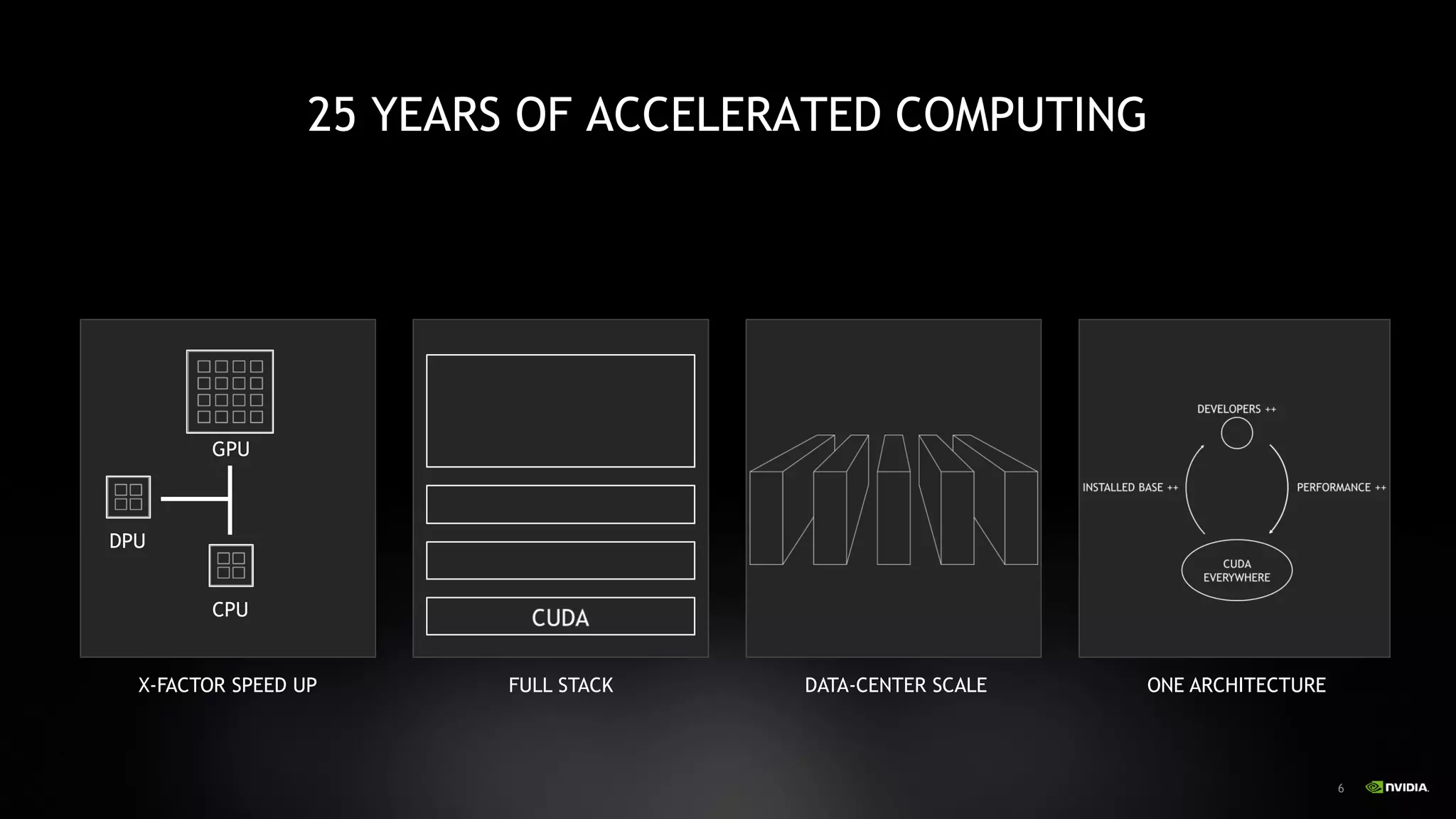

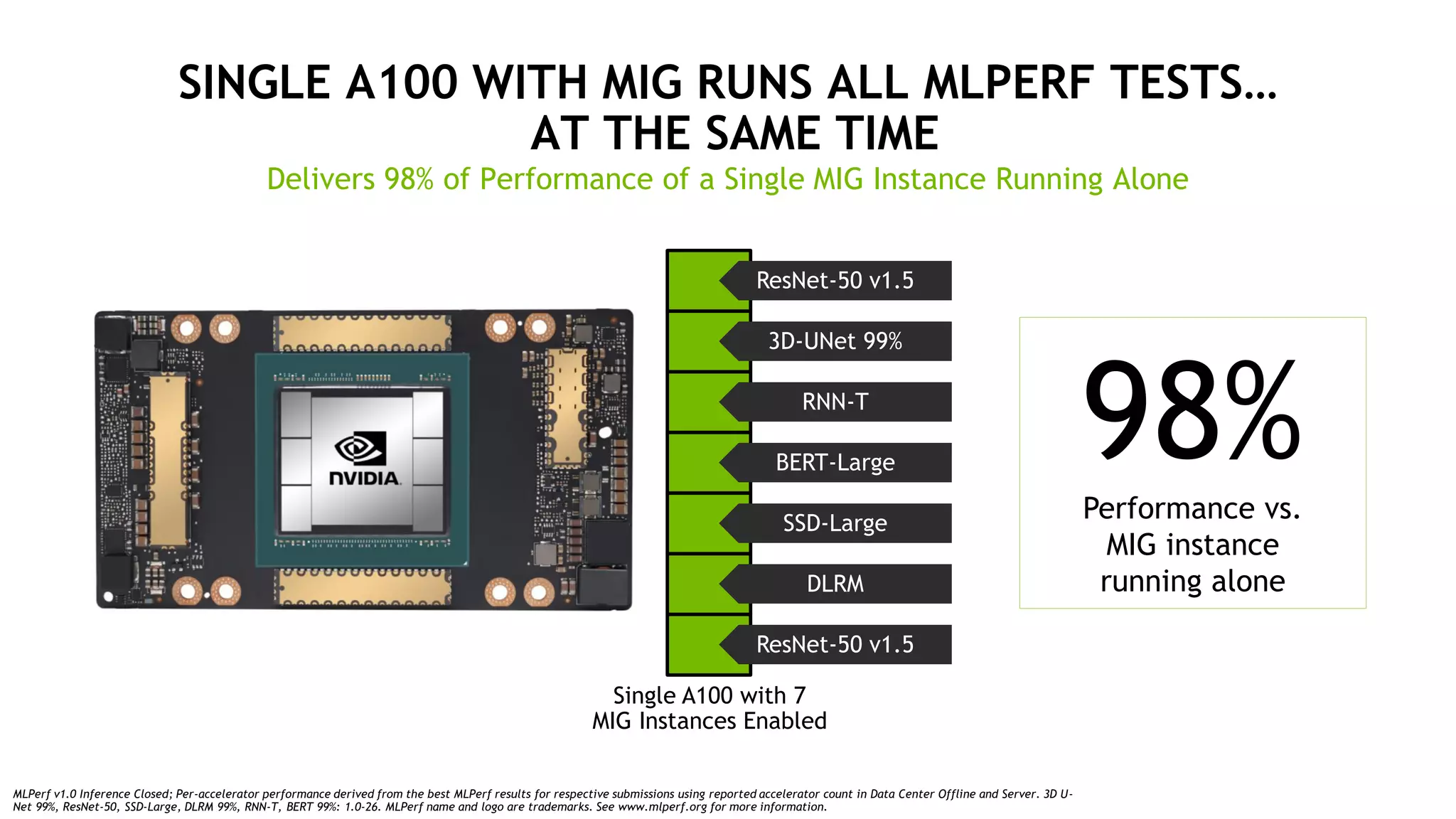

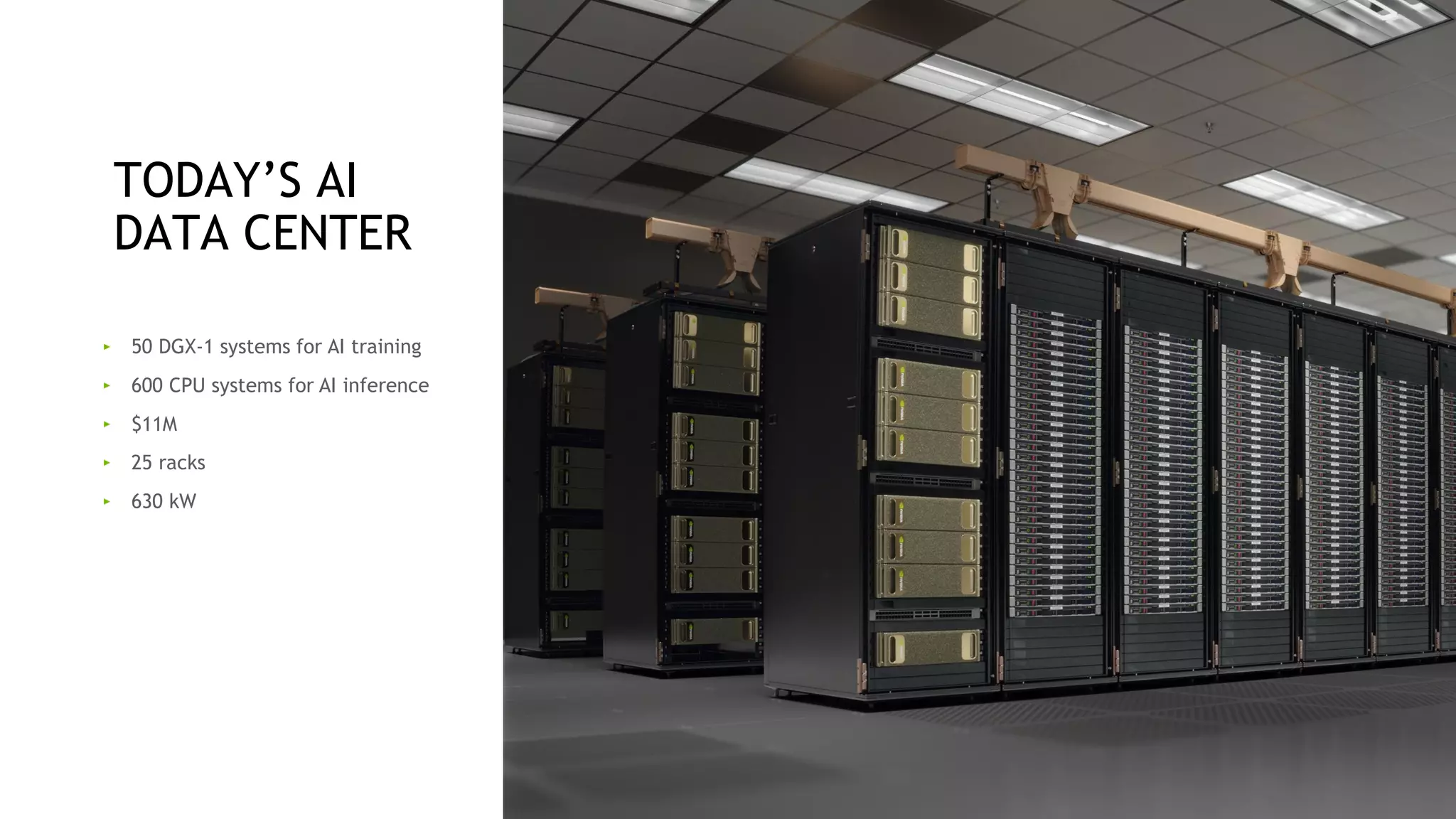

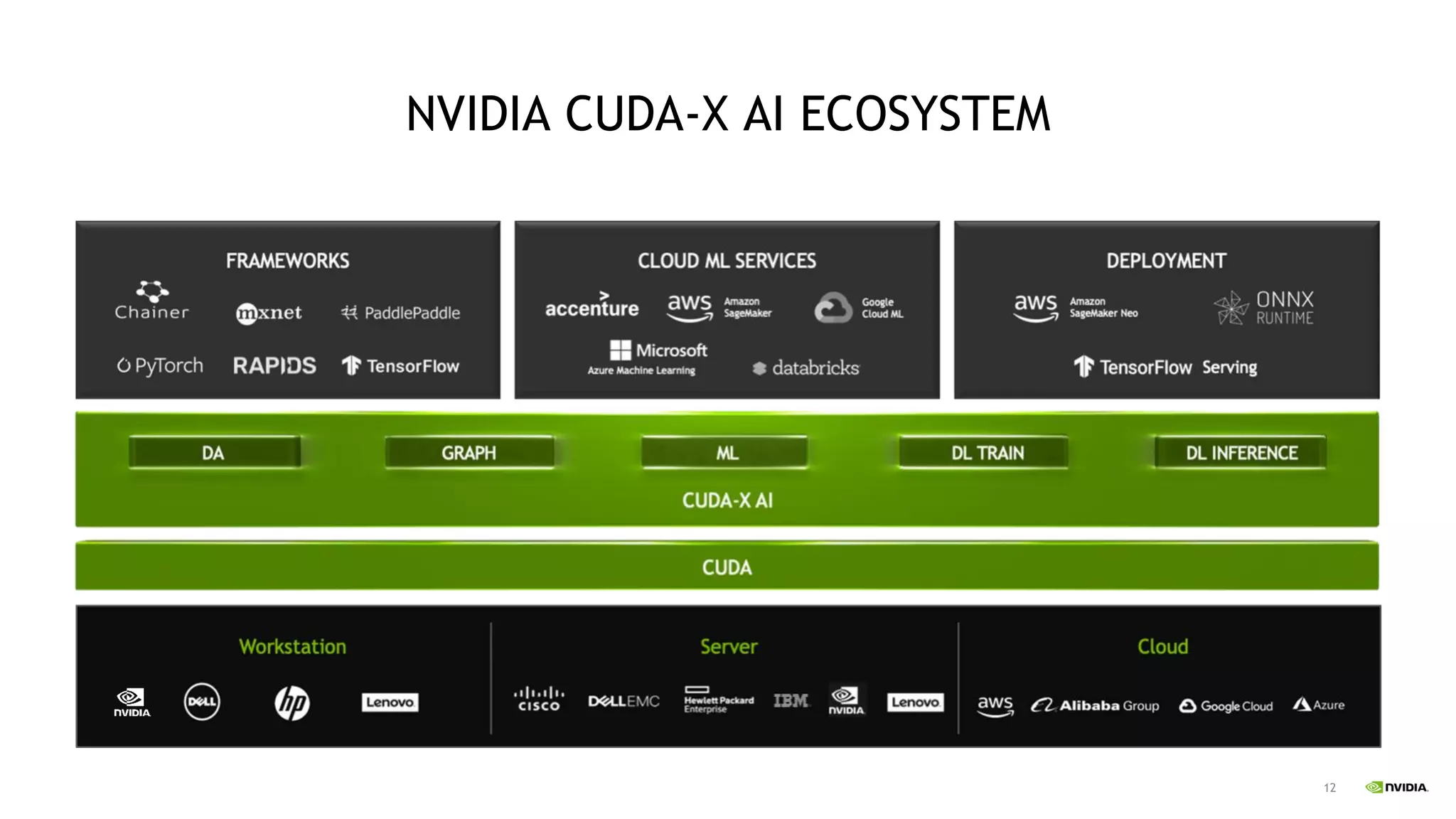

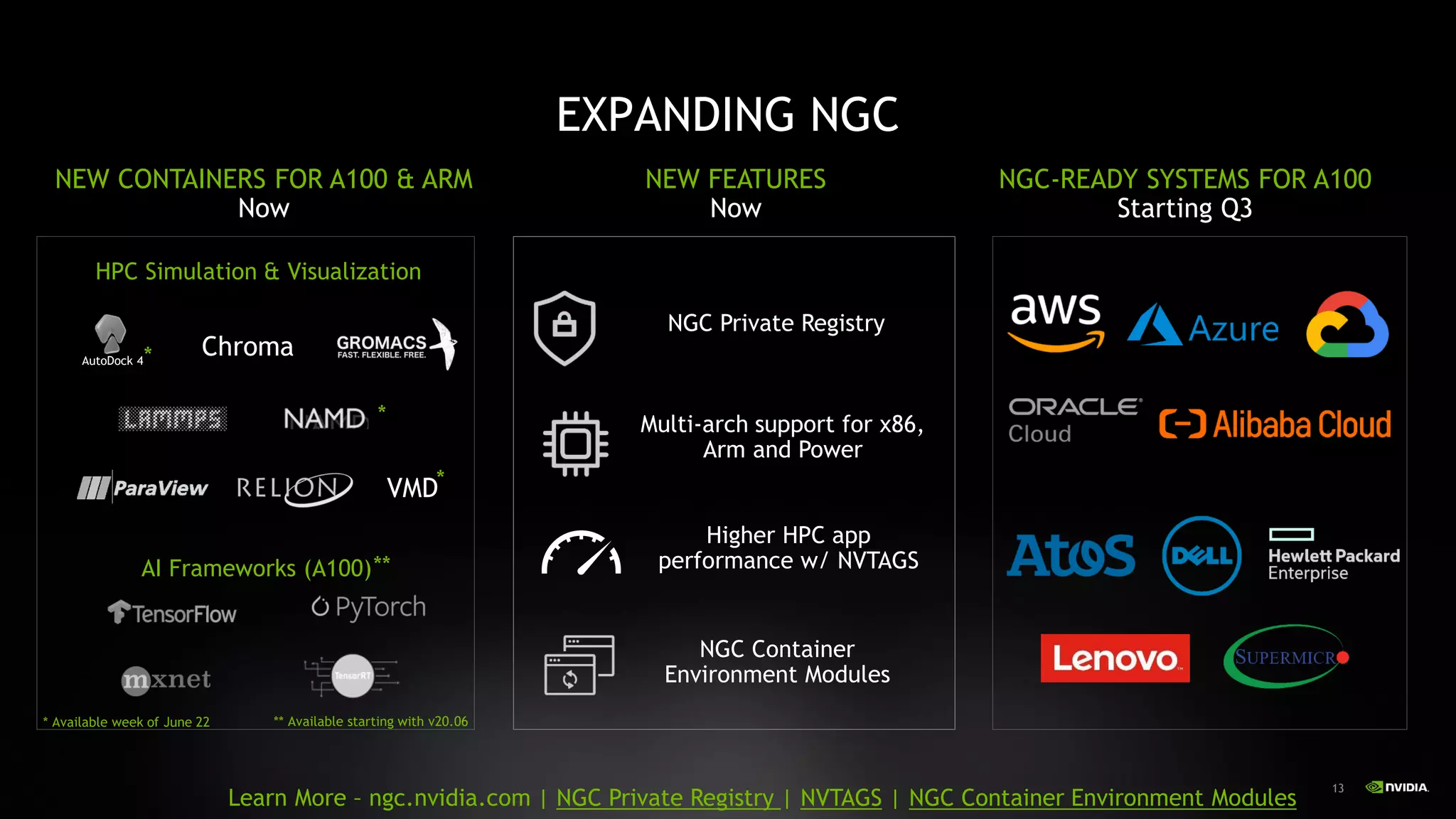

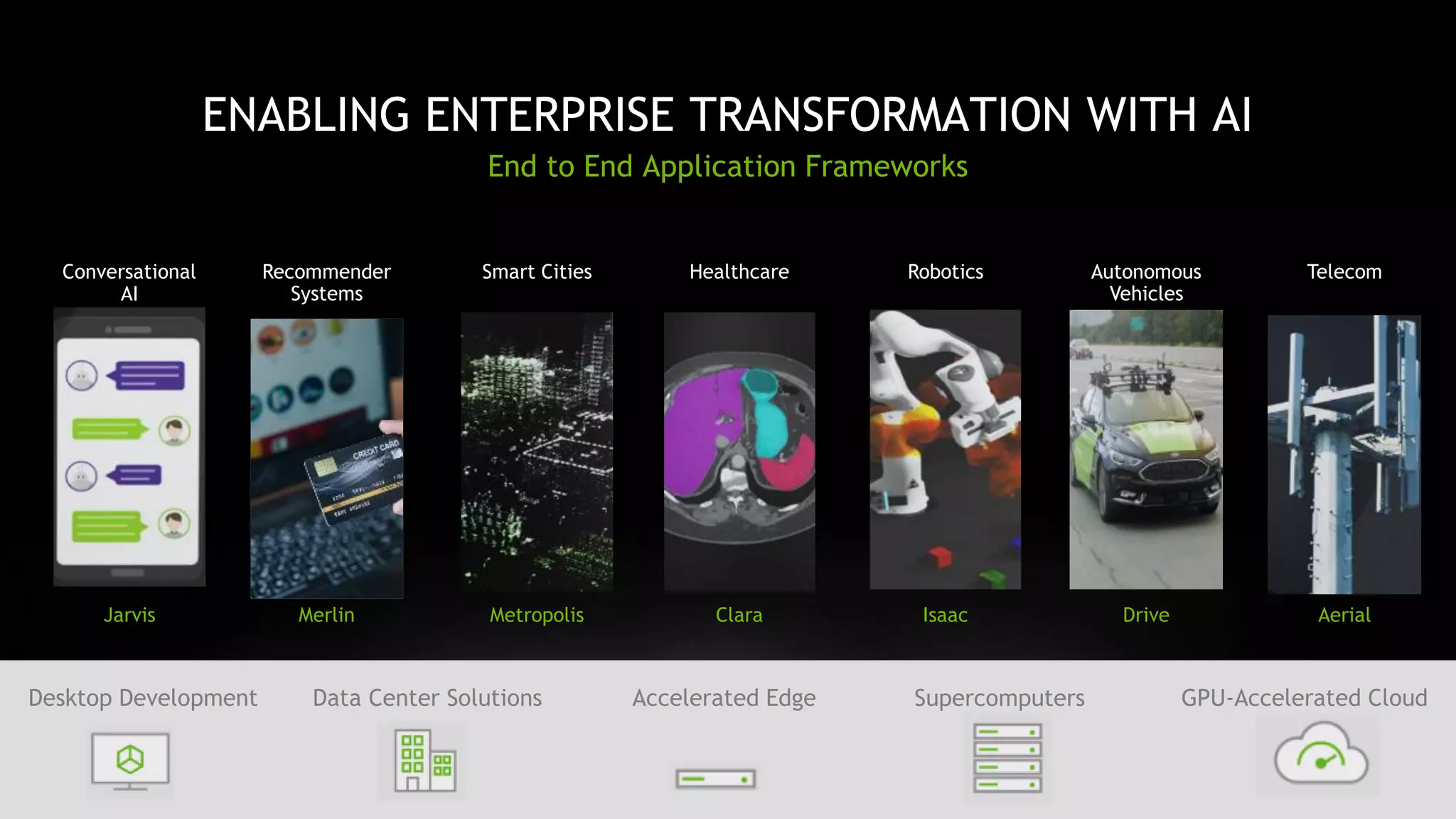

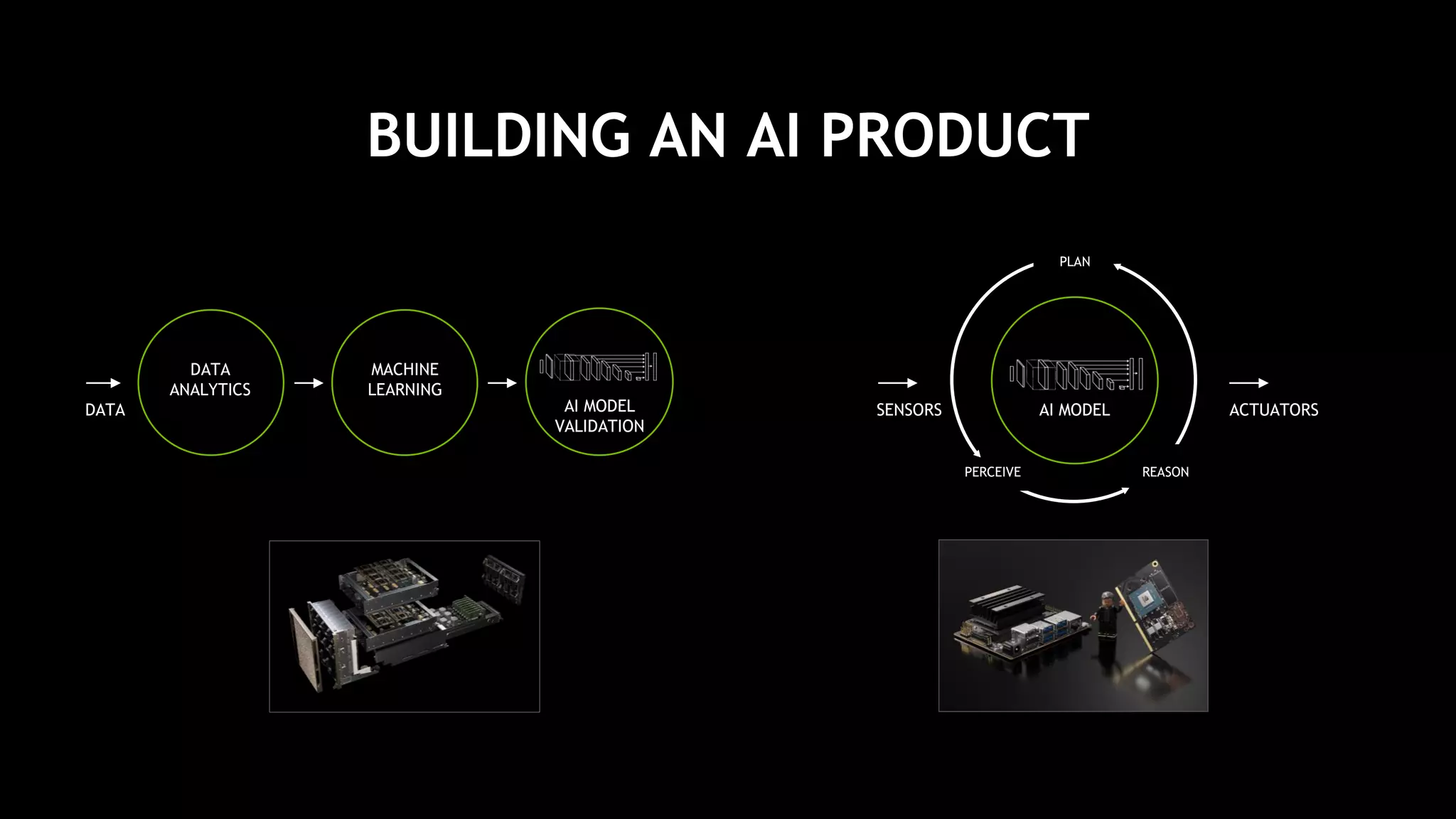

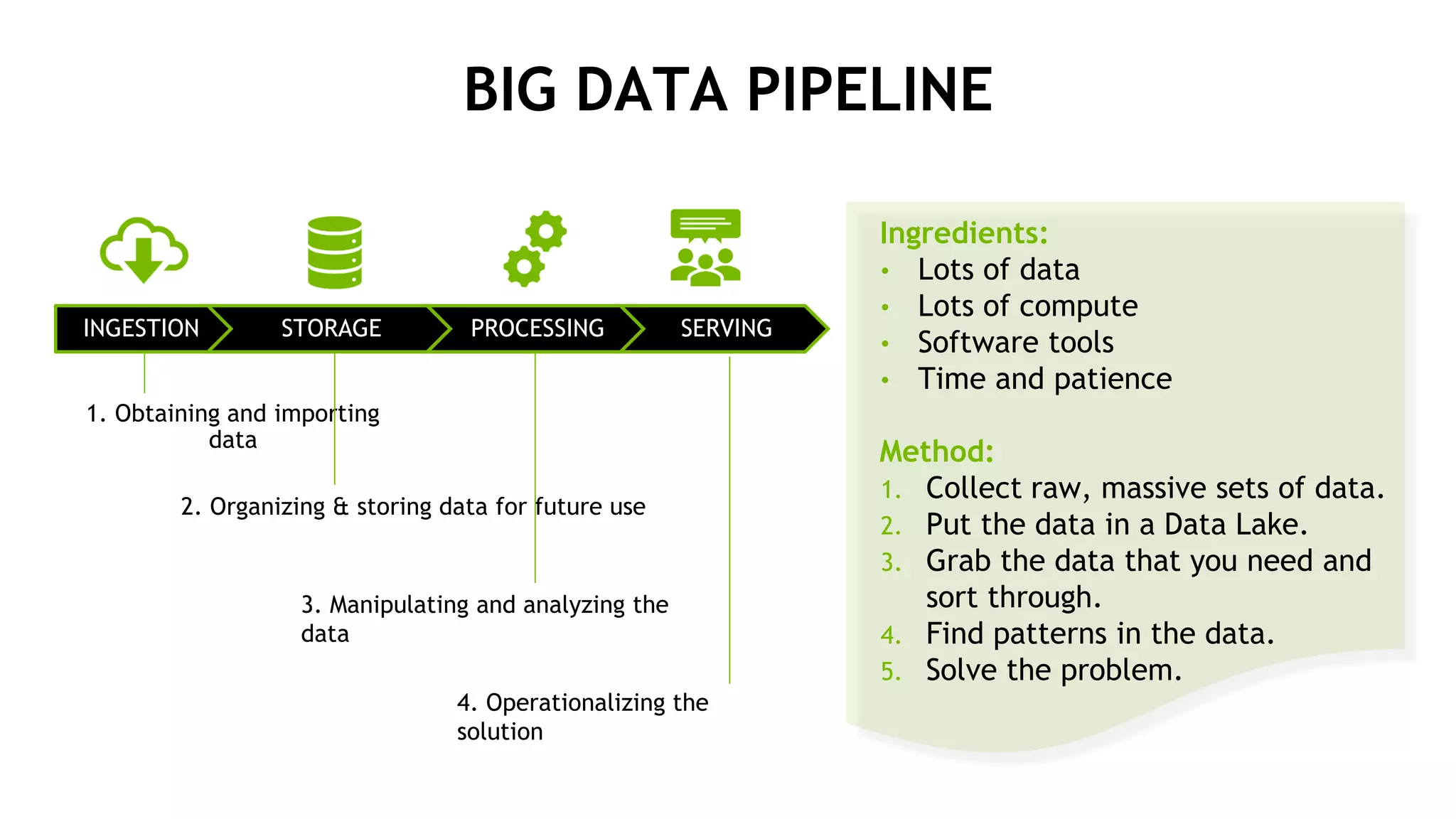

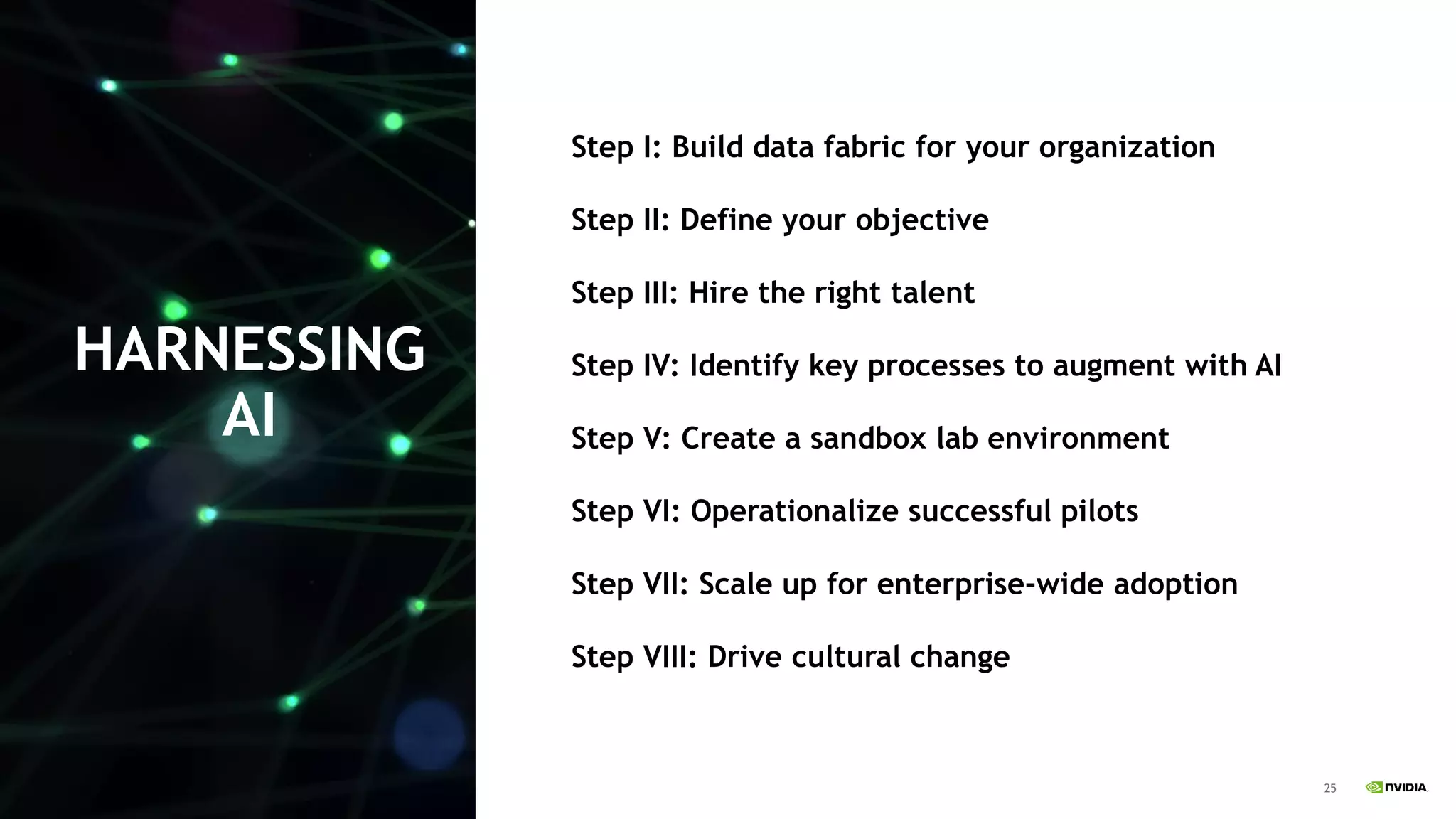

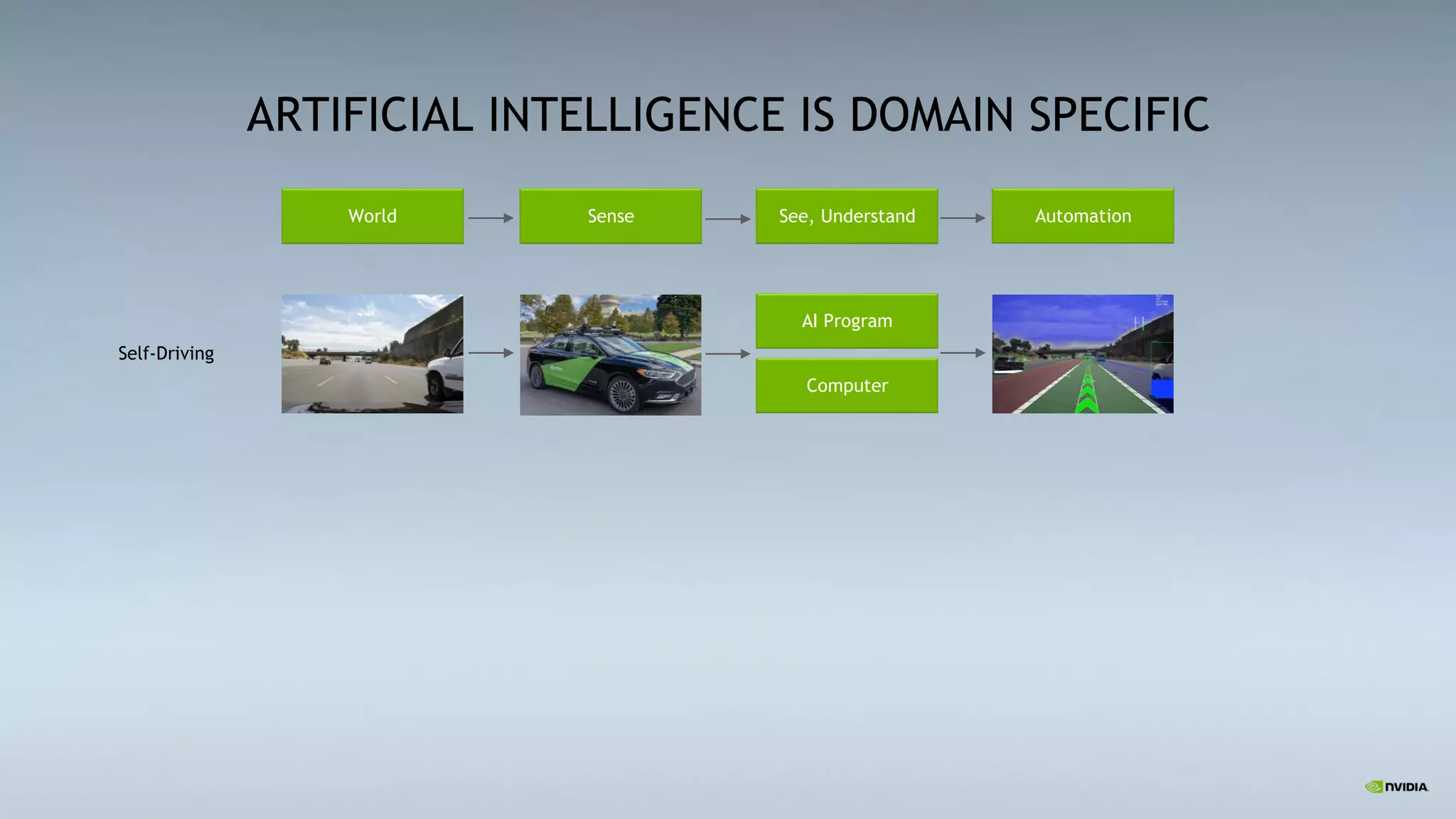

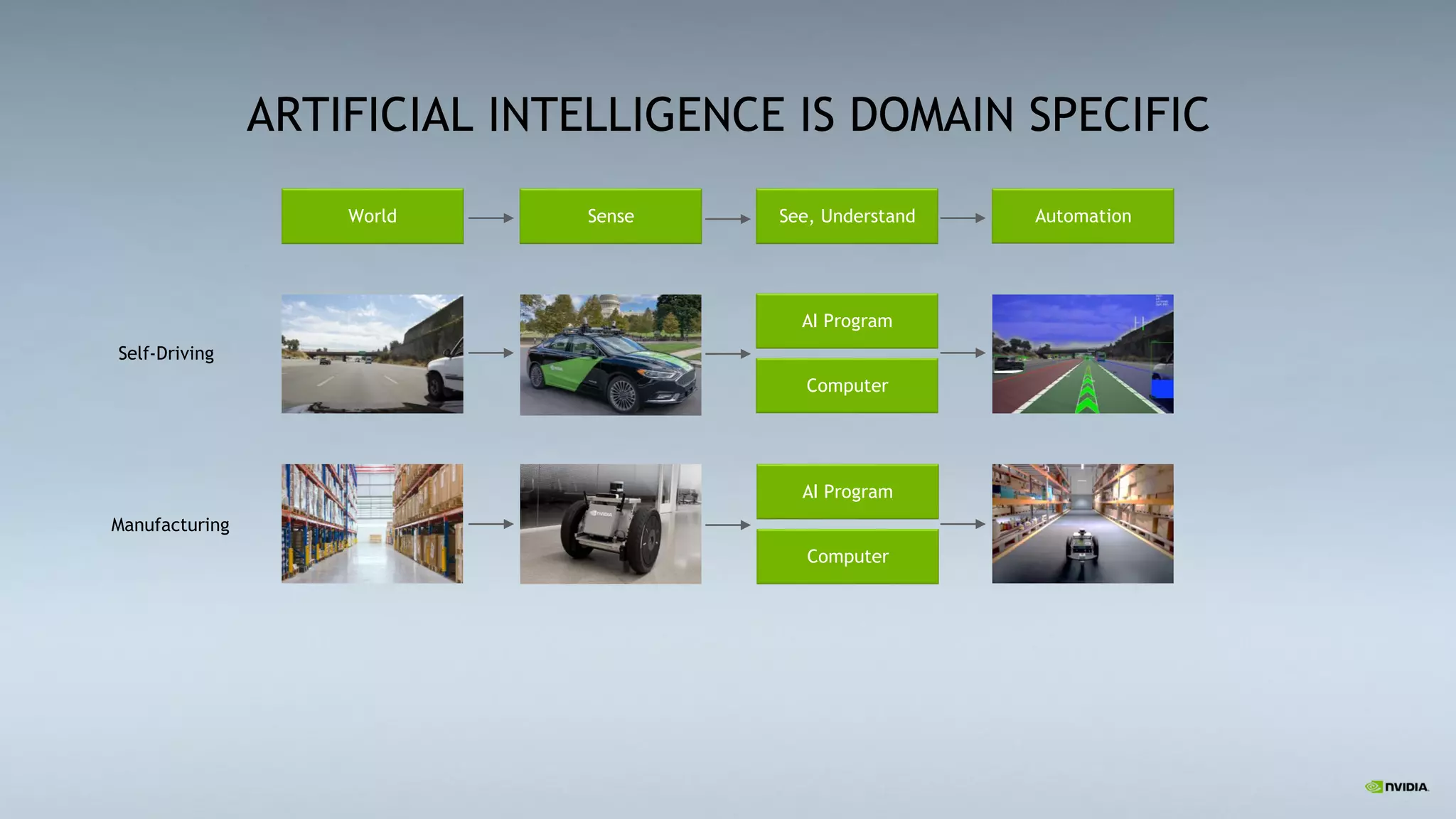

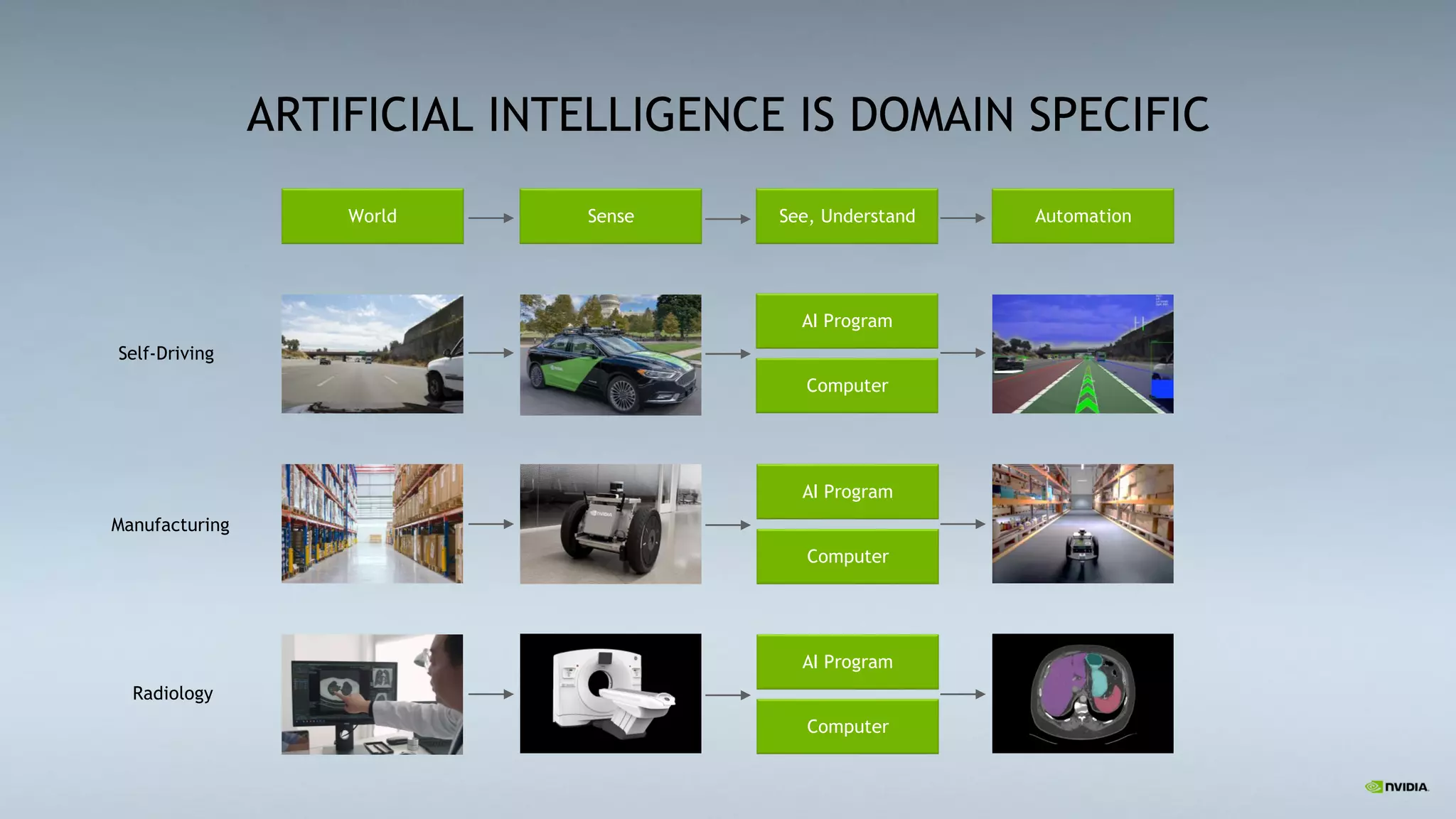

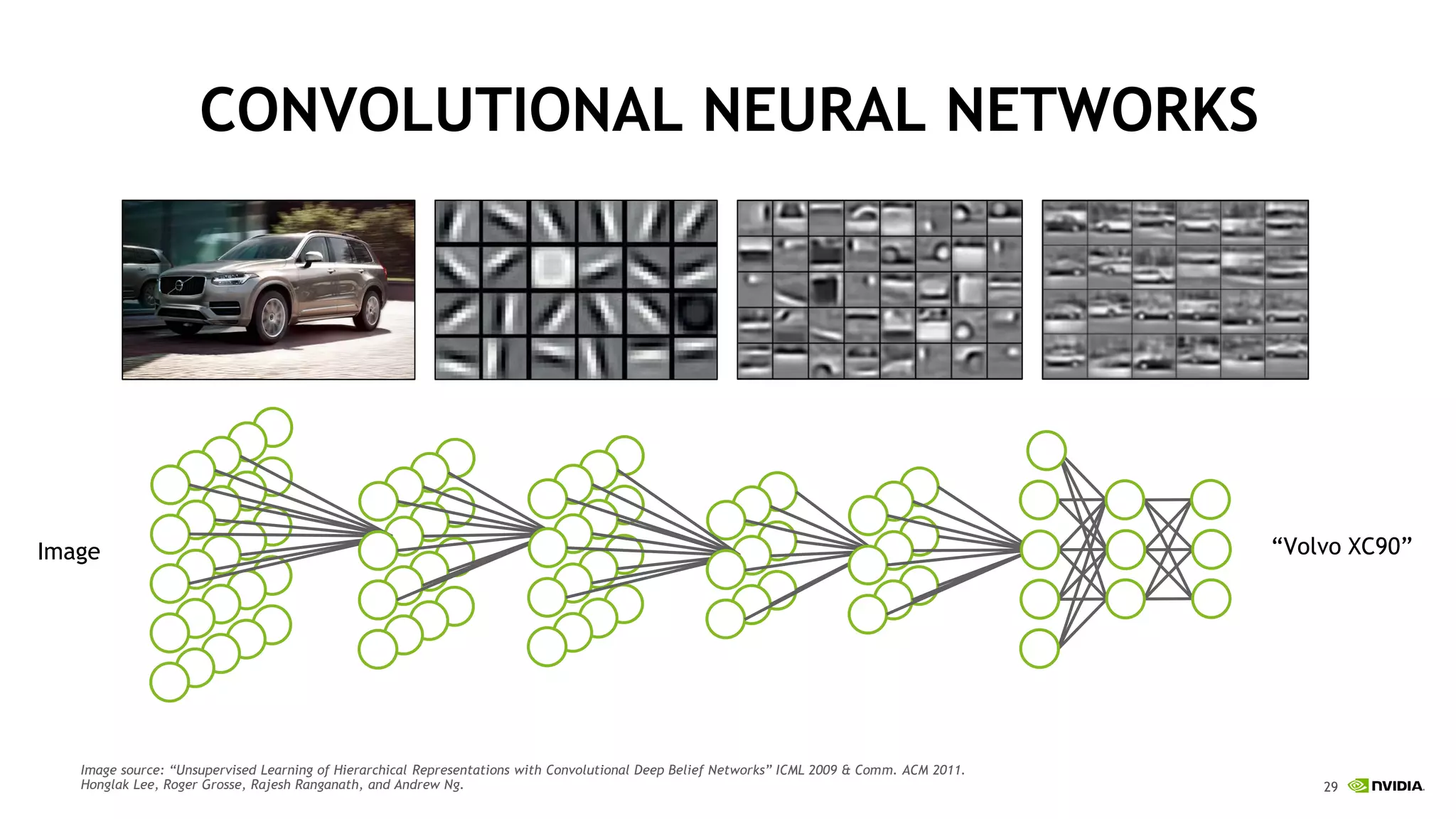

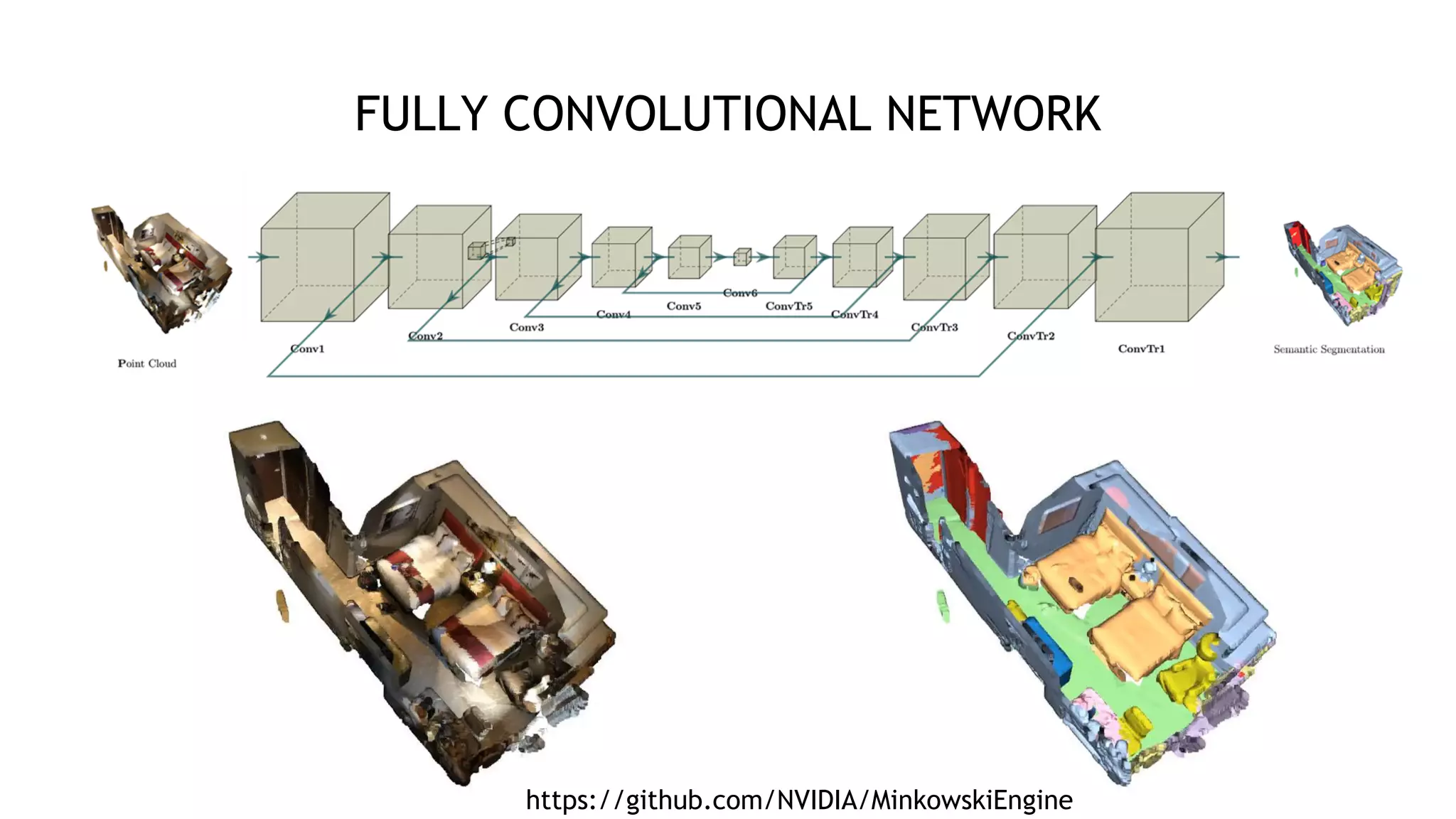

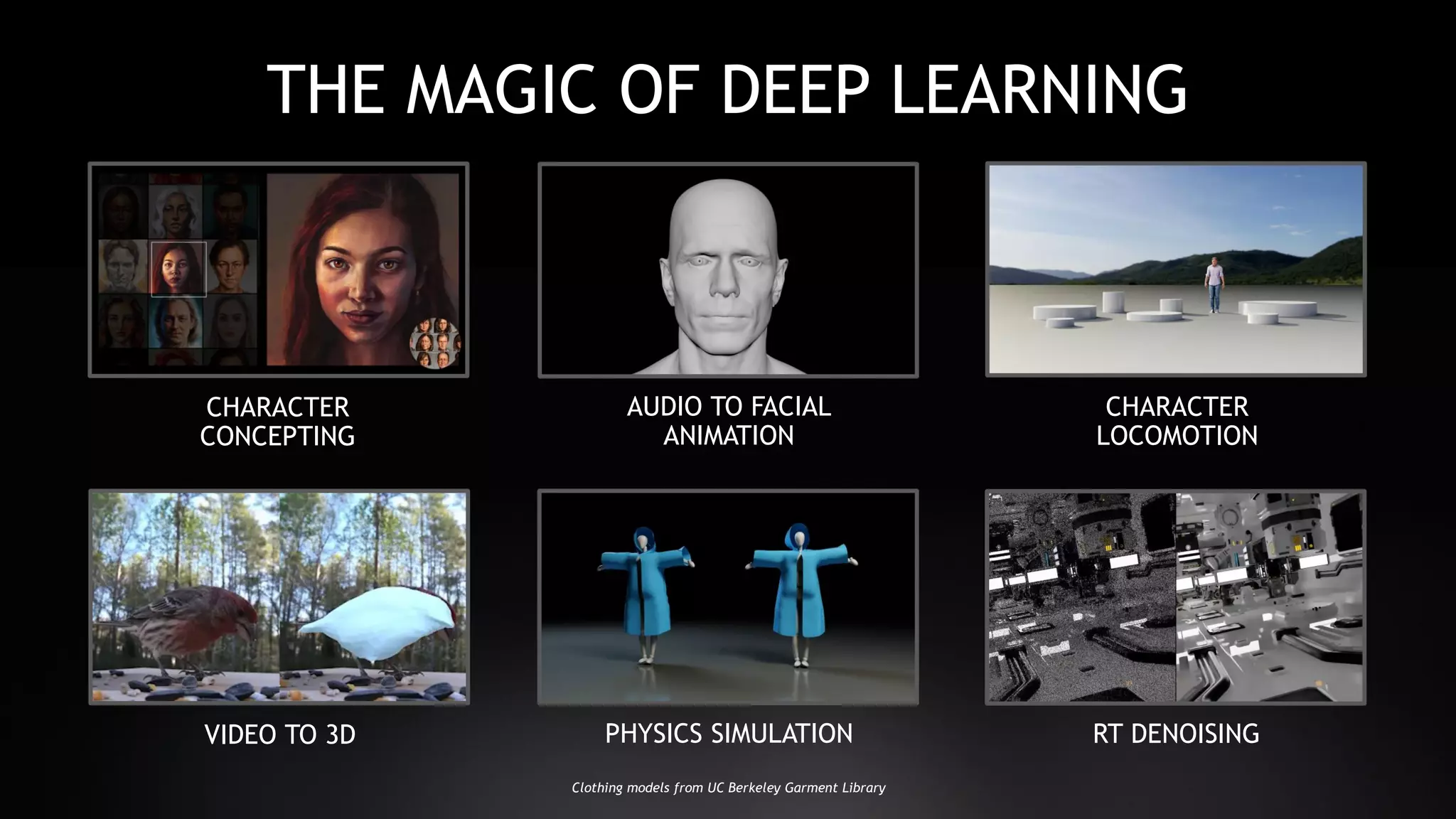

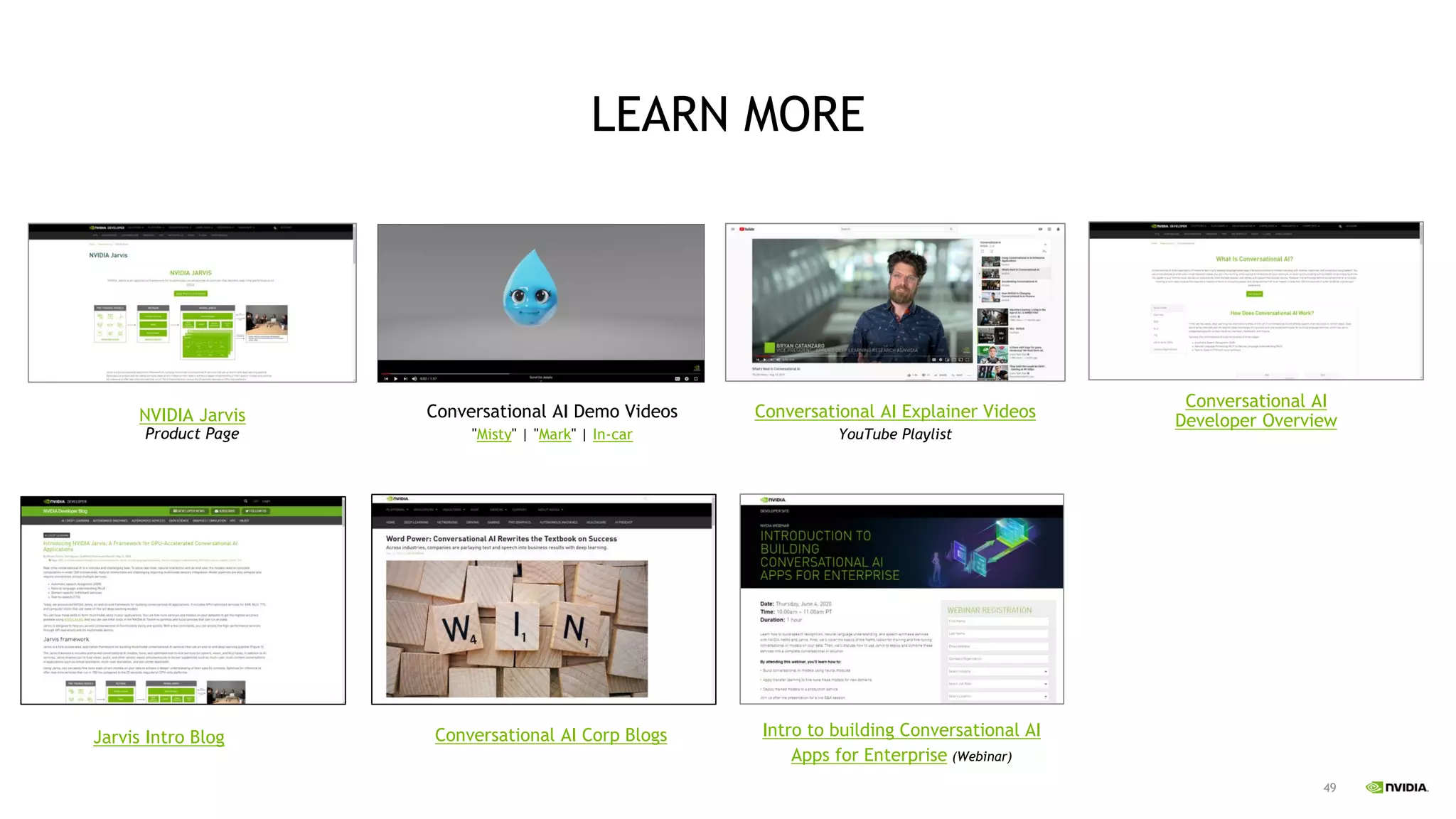

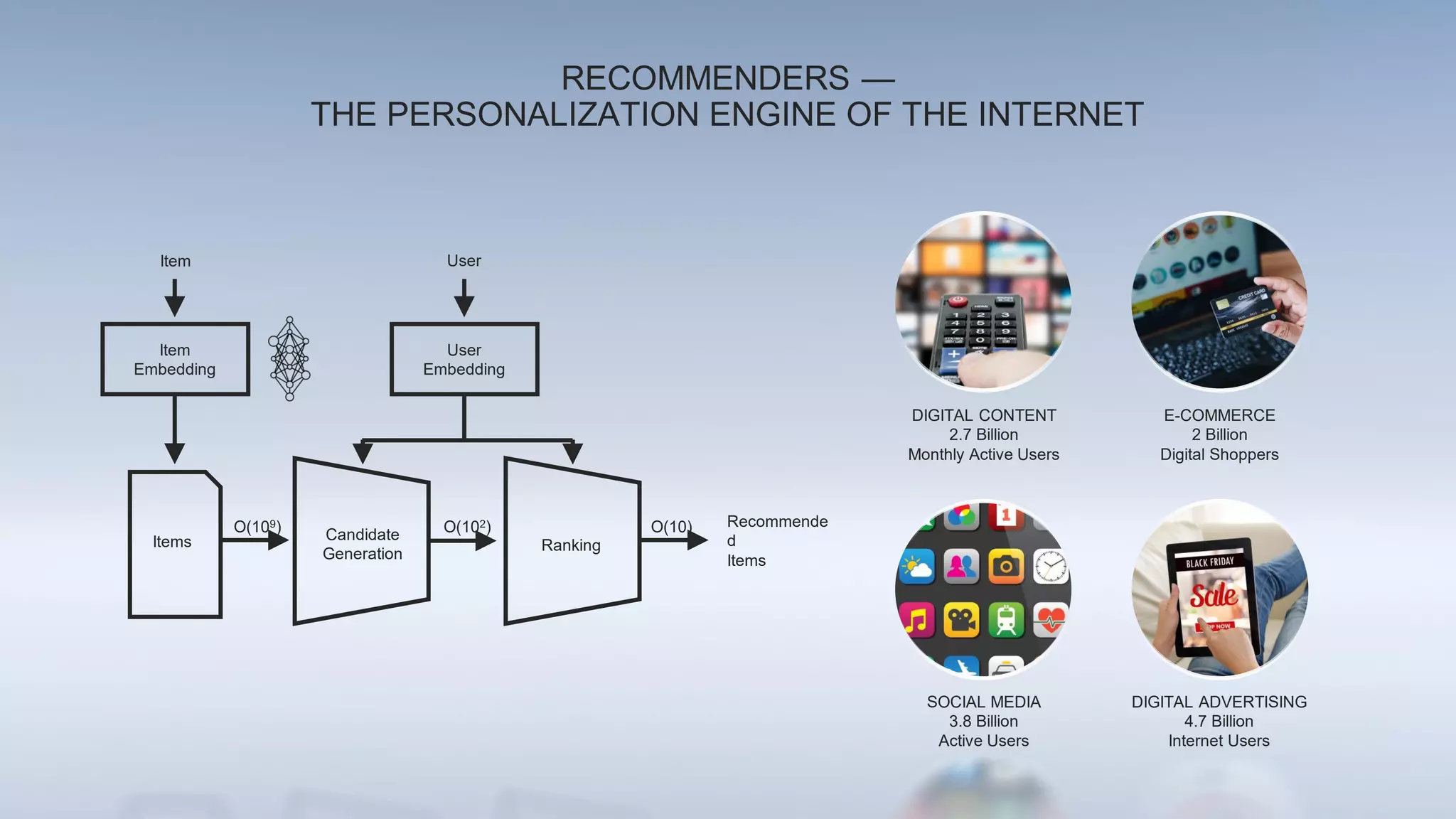

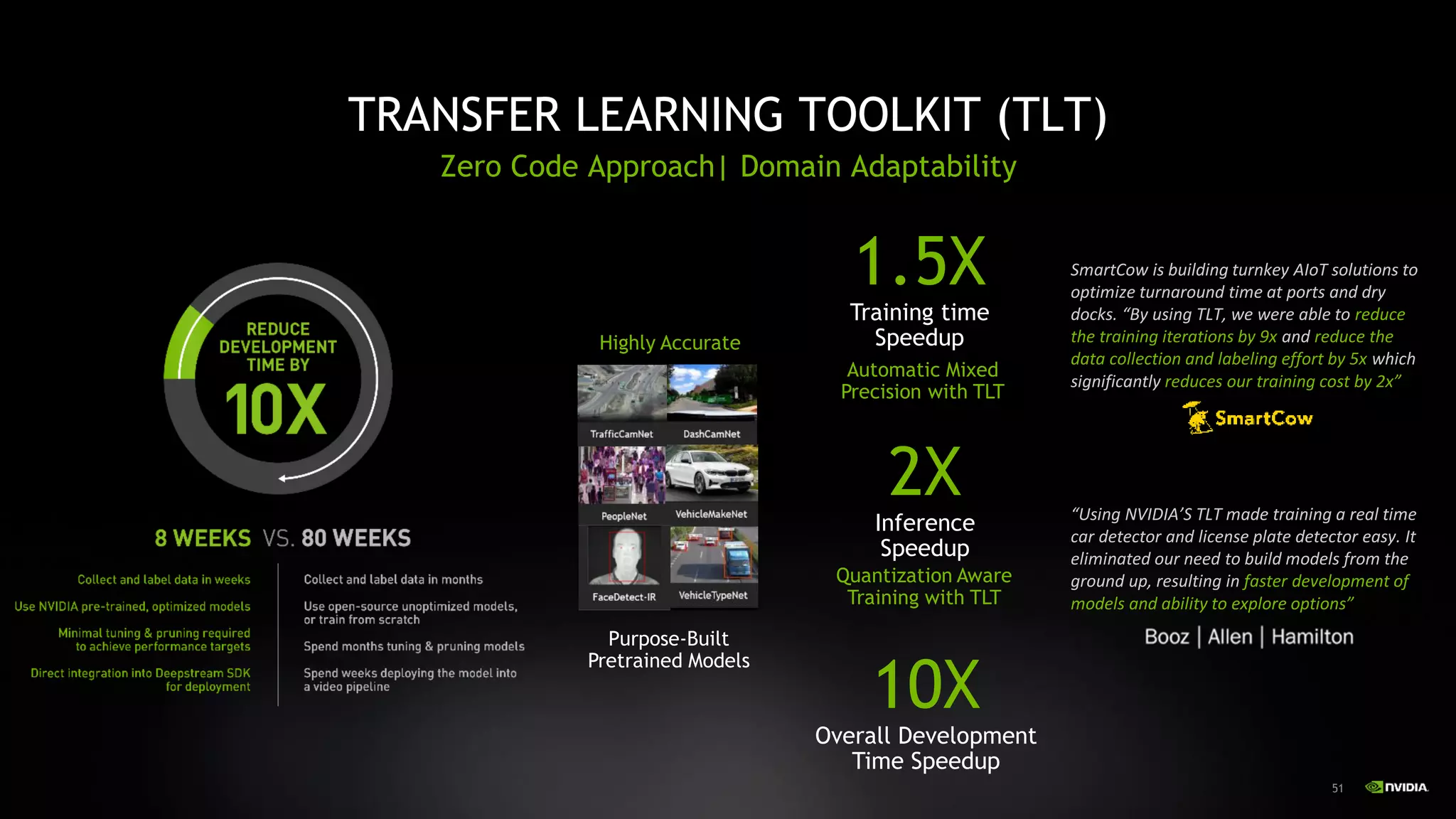

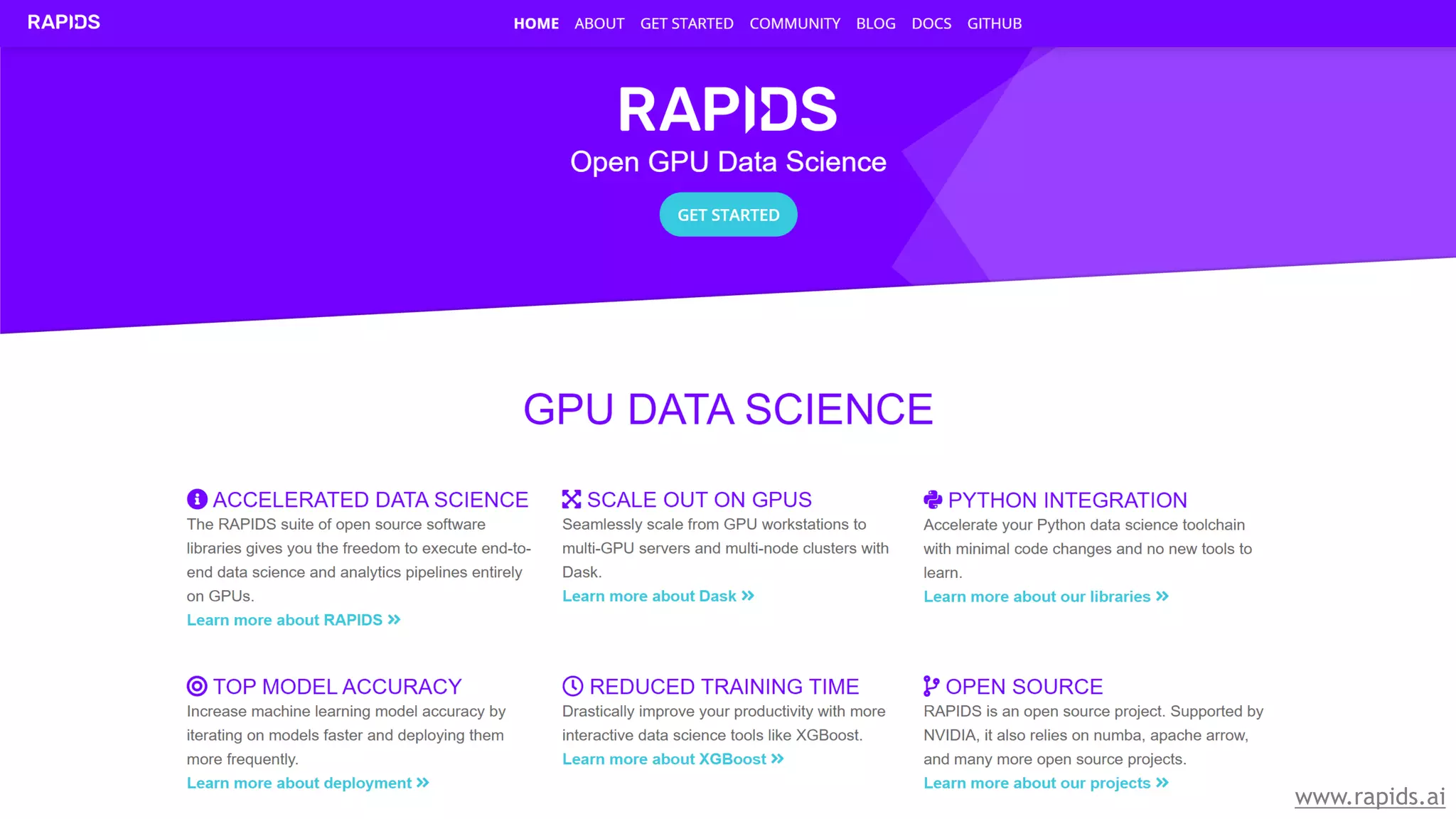

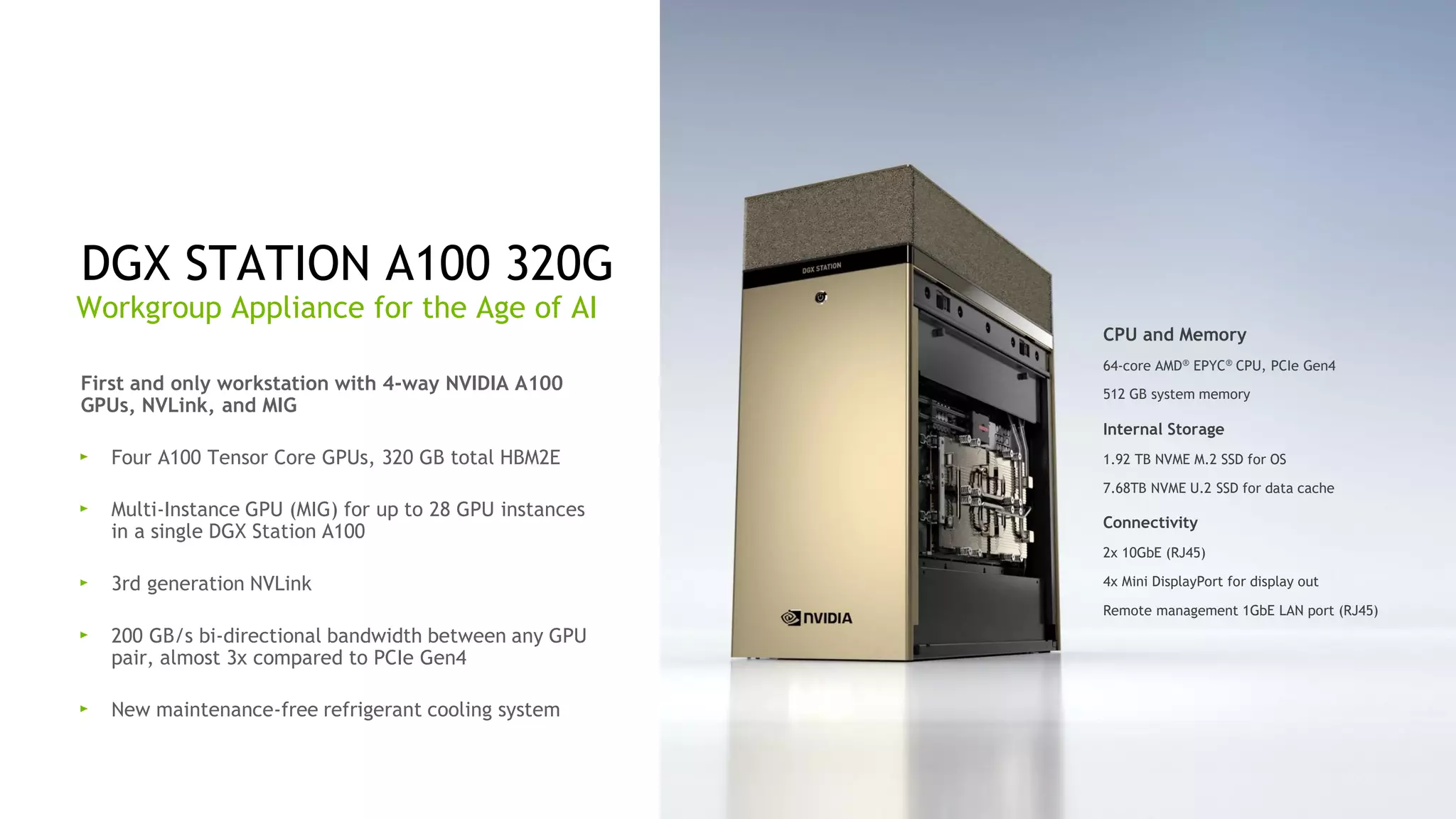

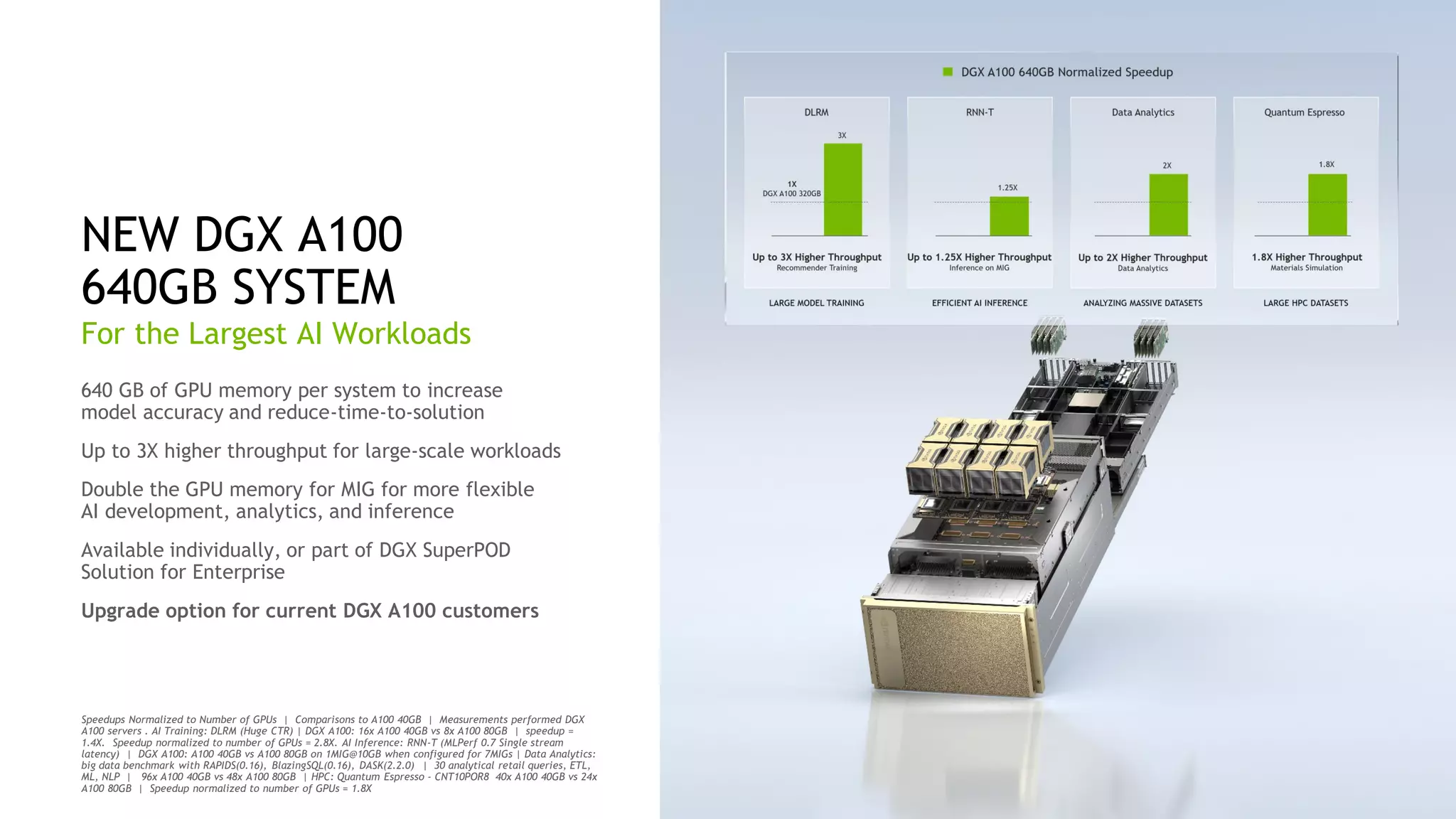

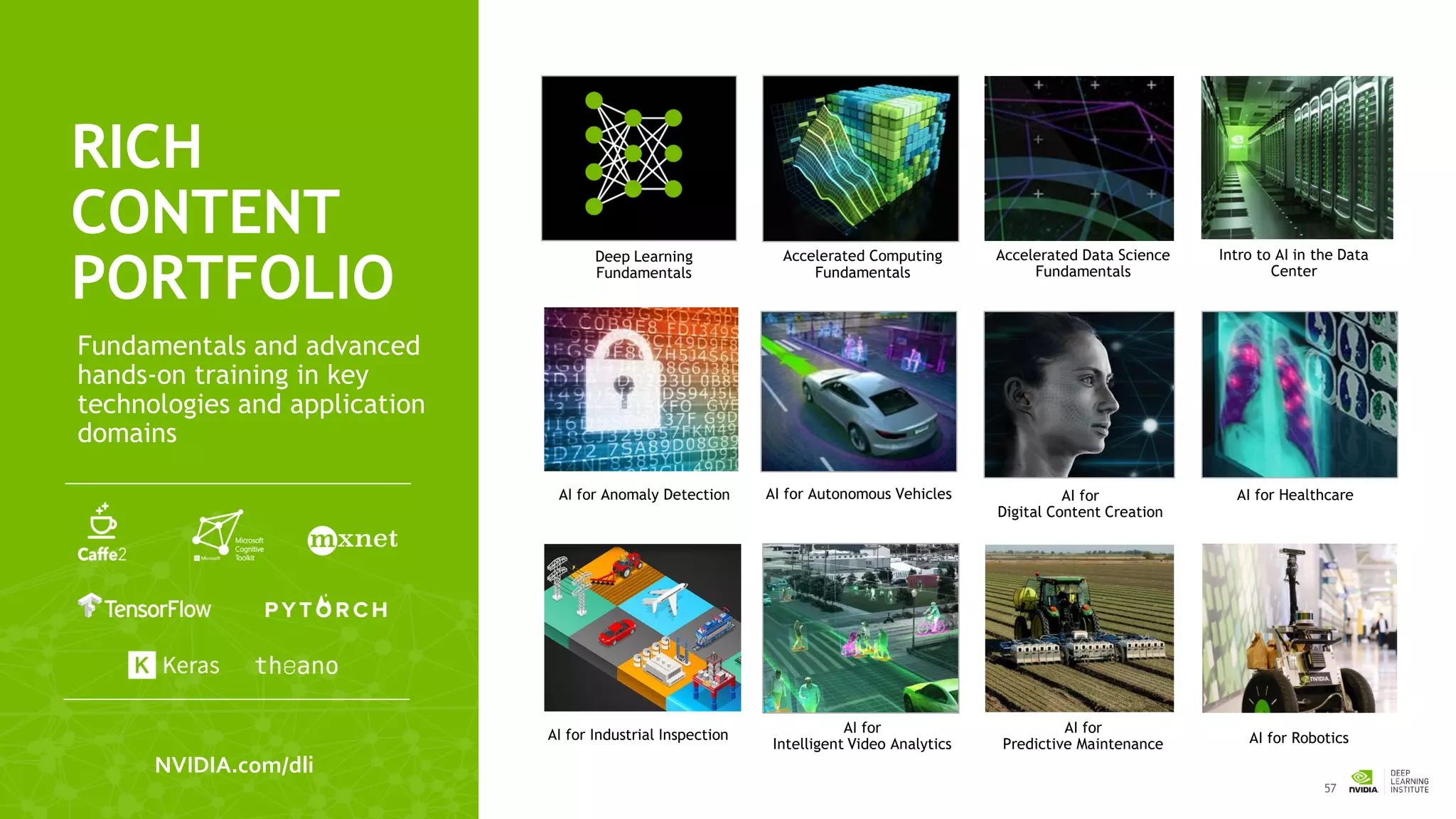

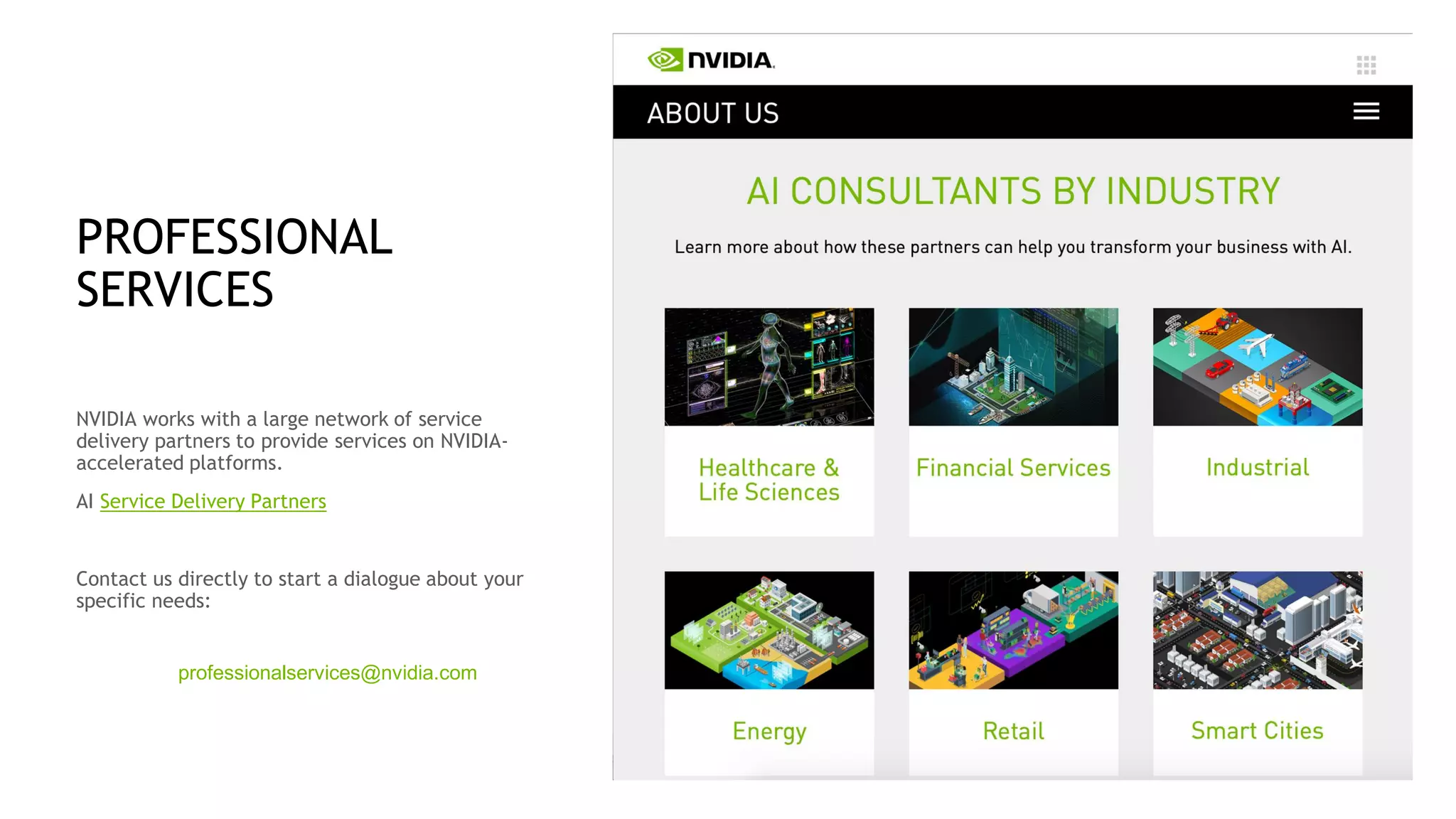

The document outlines NVIDIA's advancements in artificial intelligence, including the launch of NVIDIA Maxine and the capabilities of its GPUs and systems such as the DGX A100, which deliver high performance for AI training and inference. It details strategies for enterprise AI transformation, emphasizing the importance of data management, model training, and operationalization. The document also highlights NVIDIA's contributions to sectors such as healthcare, autonomous vehicles, and digital content creation, and showcases its ecosystem of tools and solutions for AI development.