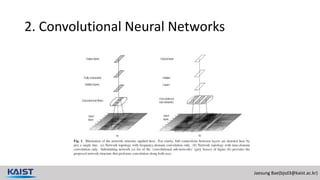

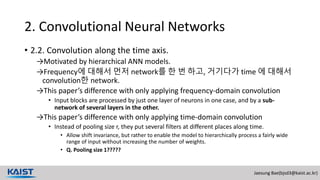

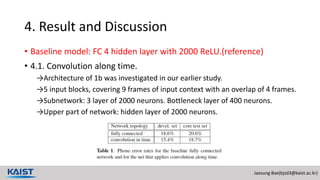

The document discusses the development of a combined time-and frequency-domain convolutional neural network for phone recognition, achieving a new record error rate of 16.7% on the TIMIT dataset. It highlights the differences in performance between convolution along frequency and time, as well as the hierarchical processing approach used to enhance the model's ability to handle longer inputs. Experimental settings and results are detailed, emphasizing the effectiveness of the architecture and parameters used in the study.