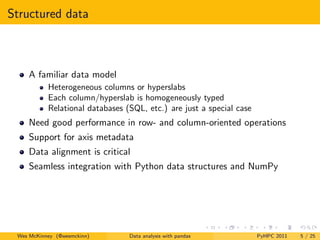

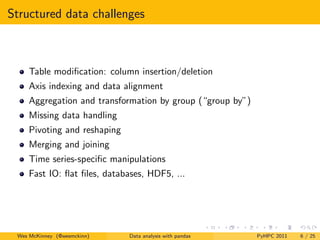

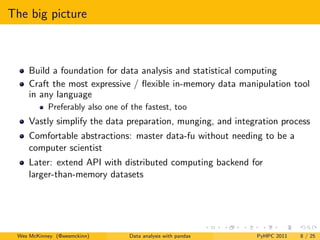

Pandas is a Python library for data analysis and manipulation. It provides high-performance tools for structured data, including the DataFrame, a tabular structure with labeled row and column indexes. Pandas aims for a clean, consistent API that is both fast and easy to use for tasks such as data cleaning, aggregation, reshaping, and merging.
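As a rough illustration of the four tasks named above, here is a minimal sketch. The `sales` and `regions` tables, their column names, and all values are hypothetical and not taken from the slides; only standard pandas calls (`fillna`, `groupby`, `pivot`, `merge`) are used.

```python
import pandas as pd

# Hypothetical sample data; None marks a missing measurement.
sales = pd.DataFrame(
    {
        "region": ["north", "north", "south", "south"],
        "year": [2010, 2011, 2010, 2011],
        "revenue": [10.0, None, 7.5, 9.0],
    }
)
regions = pd.DataFrame(
    {"region": ["north", "south"], "manager": ["Ann", "Bob"]}
)

# Data cleaning: replace the missing revenue with 0.0.
cleaned = sales.fillna({"revenue": 0.0})

# Aggregation: total revenue per region.
totals = cleaned.groupby("region")["revenue"].sum()

# Reshaping: one row per region, one column per year.
wide = cleaned.pivot(index="region", columns="year", values="revenue")

# Merging: attach the manager name to each sales row.
merged = cleaned.merge(regions, on="region", how="left")

print(totals)
print(wide)
print(merged)
```

Filling missing values with zero is only one possible cleaning choice; dropping the rows with `dropna` or interpolating may suit other data better.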

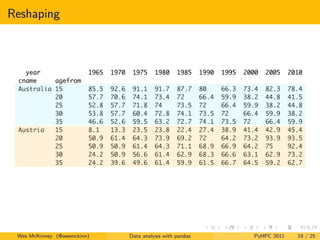

![Reshaping](https://image.slidesharecdn.com/slides-111118222930-phpapp01/85/pandas-a-Foundational-Python-Library-for-Data-Analysis-and-Statistics-20-320.jpg)

Reshaping: `In [5]: df.unstack('agefrom').stack('year')`

Wes McKinney (@wesmckinn), Data analysis with pandas, PyHPC 2011, slide 20 / 25
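The slide's `df` is not shown, so the reshaping call can only be sketched under assumptions. In the example below, the level names `agefrom` and `year` come from the slide; the `country` level, the placement of `year` on the columns, and all values are made up for illustration.

```python
import pandas as pd

# Hypothetical frame: rows are indexed by (country, agefrom),
# columns are indexed by year. Only the names 'agefrom' and 'year'
# appear on the slide; everything else is assumed.
index = pd.MultiIndex.from_product(
    [["A", "B"], [0, 5]], names=["country", "agefrom"]
)
columns = pd.Index([2000, 2001], name="year")
df = pd.DataFrame(
    [[1, 2], [3, 4], [5, 6], [7, 8]], index=index, columns=columns
)

# unstack moves the 'agefrom' row level into the columns;
# stack then moves the 'year' column level into the rows.
reshaped = df.unstack("agefrom").stack("year")

print(reshaped)
```

With this layout the two calls swap the roles of `agefrom` and `year`: the result is indexed by `(country, year)` and has one column per `agefrom` value.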