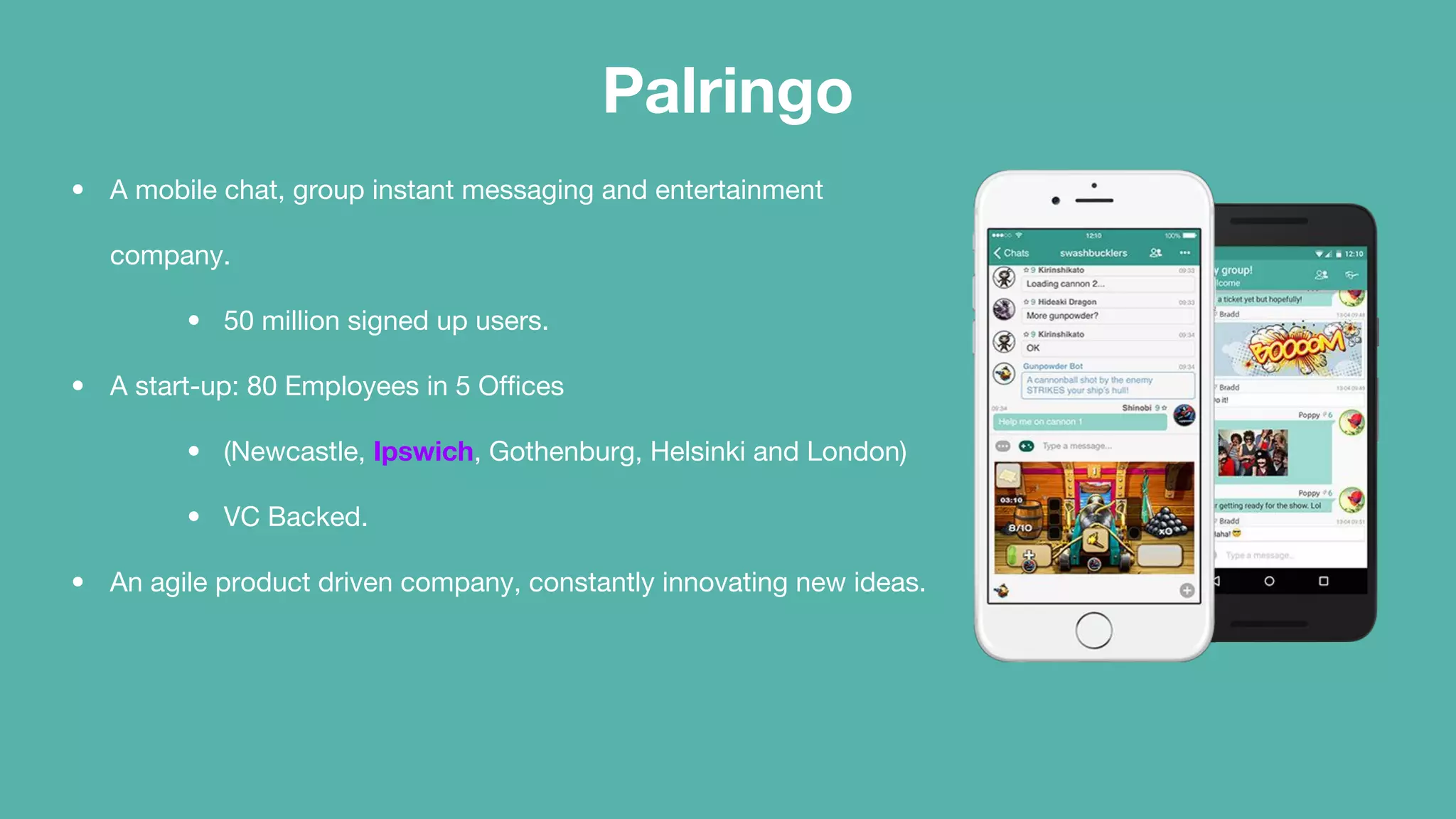

Palringo, a mobile chat and messaging startup with 50 million users, moved from a problematic monolithic architecture in a London datacentre to cloud-based infrastructure to improve scalability and reliability. The team identified numerous problems with the previous setup, including major outages and performance limits, which prompted a shift to AWS-managed services such as DynamoDB and Lambda. Future plans include breaking up the remaining monolithic services and improving API integrations to make development more agile.