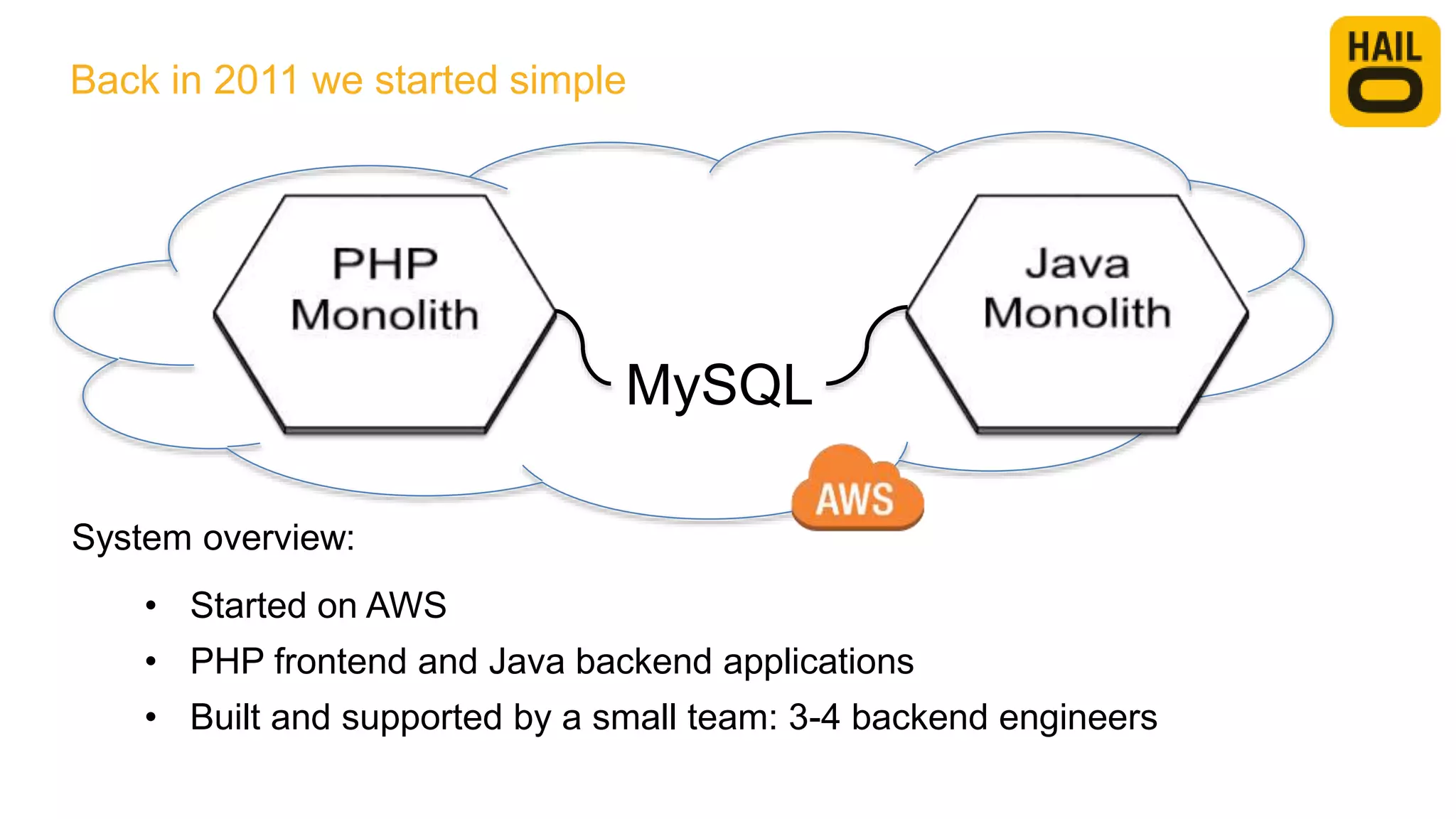

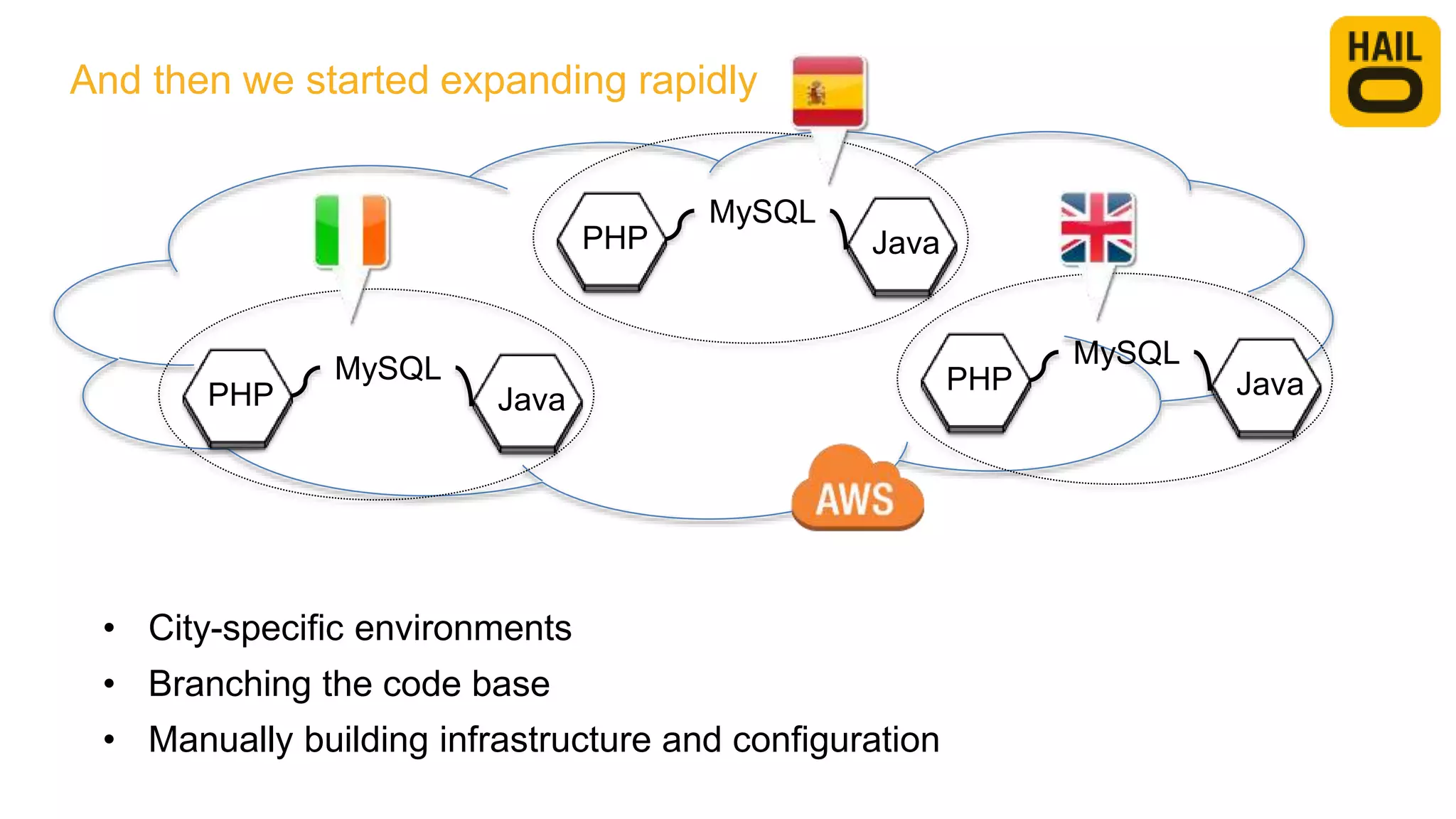

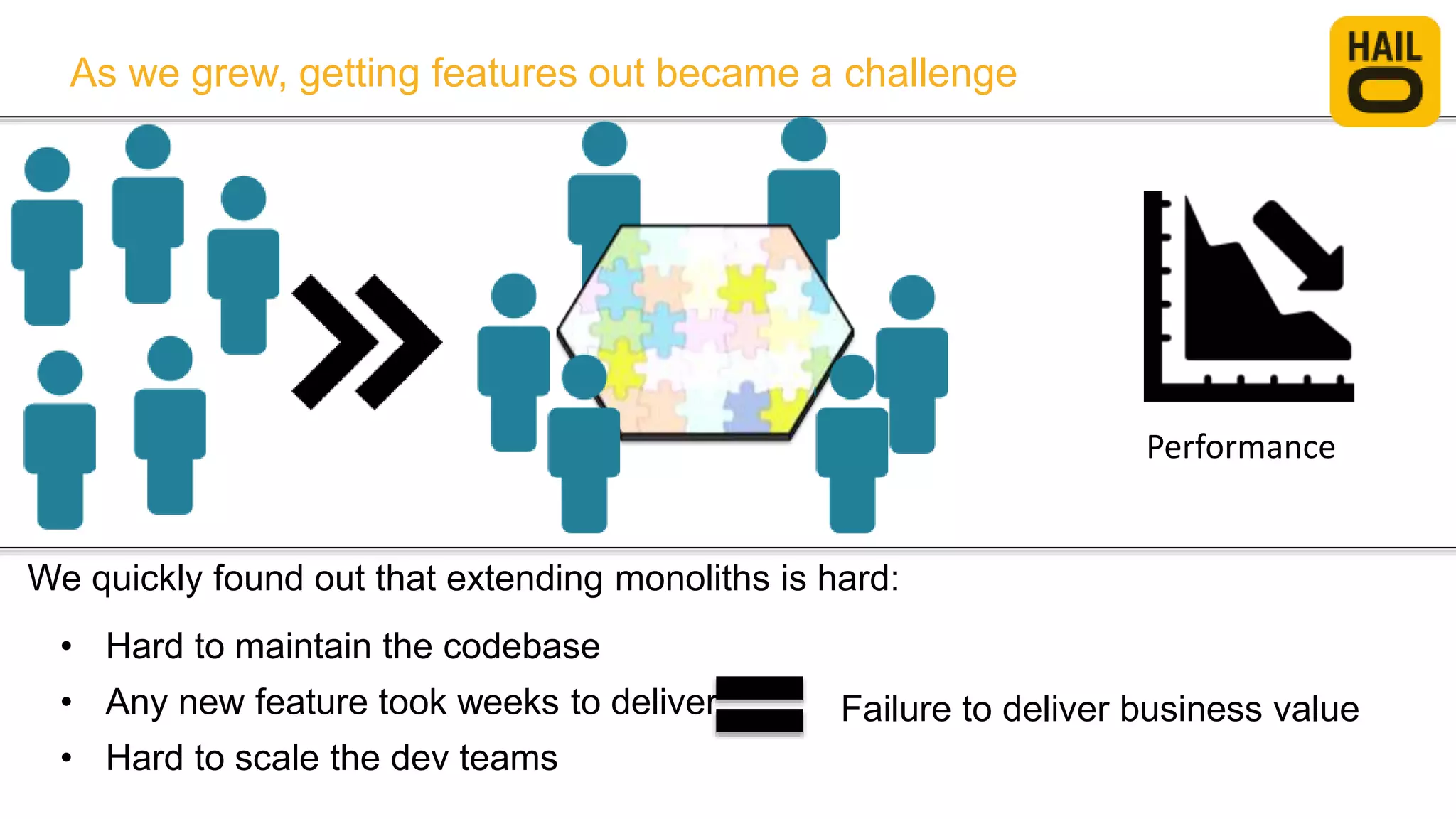

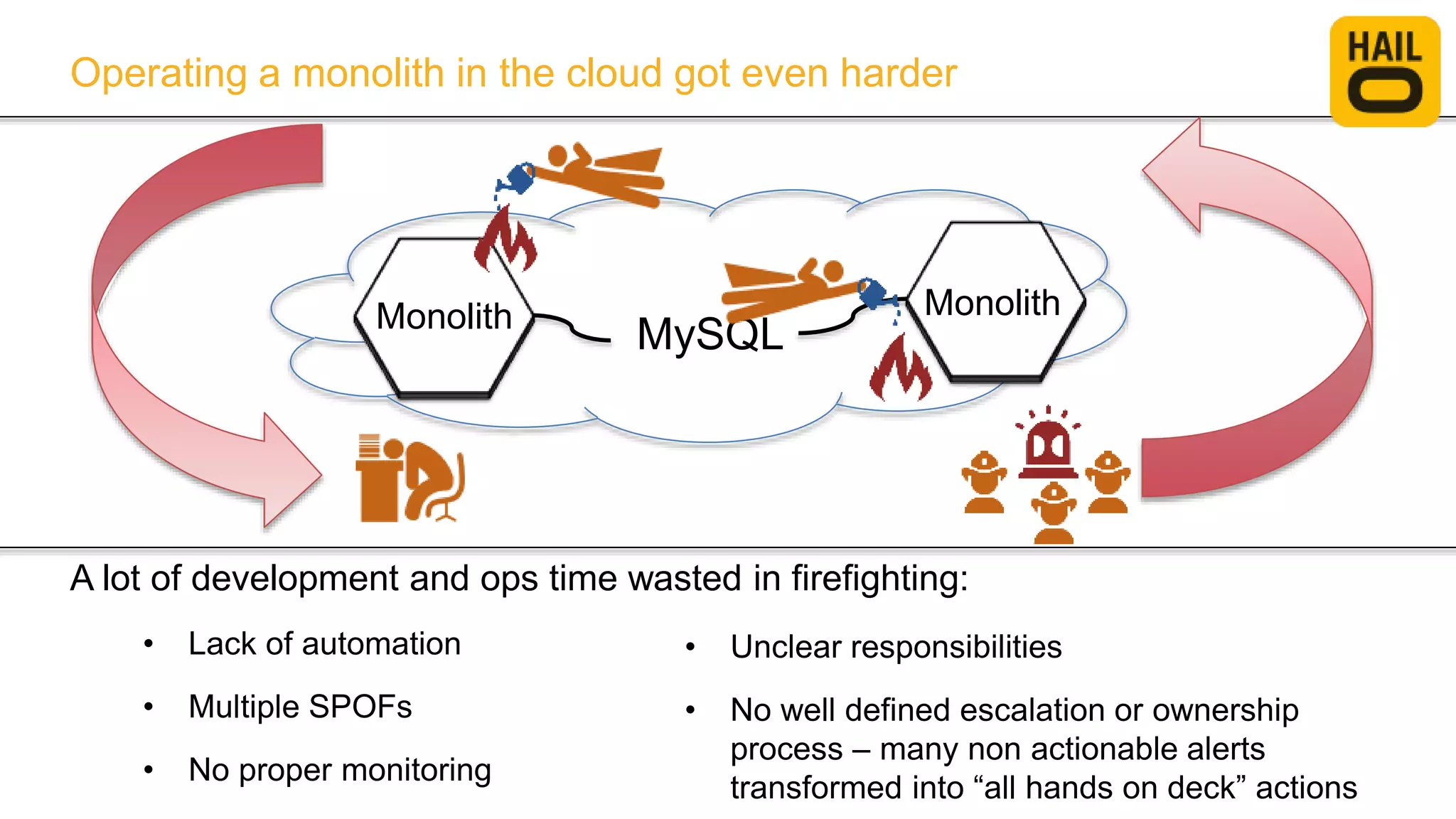

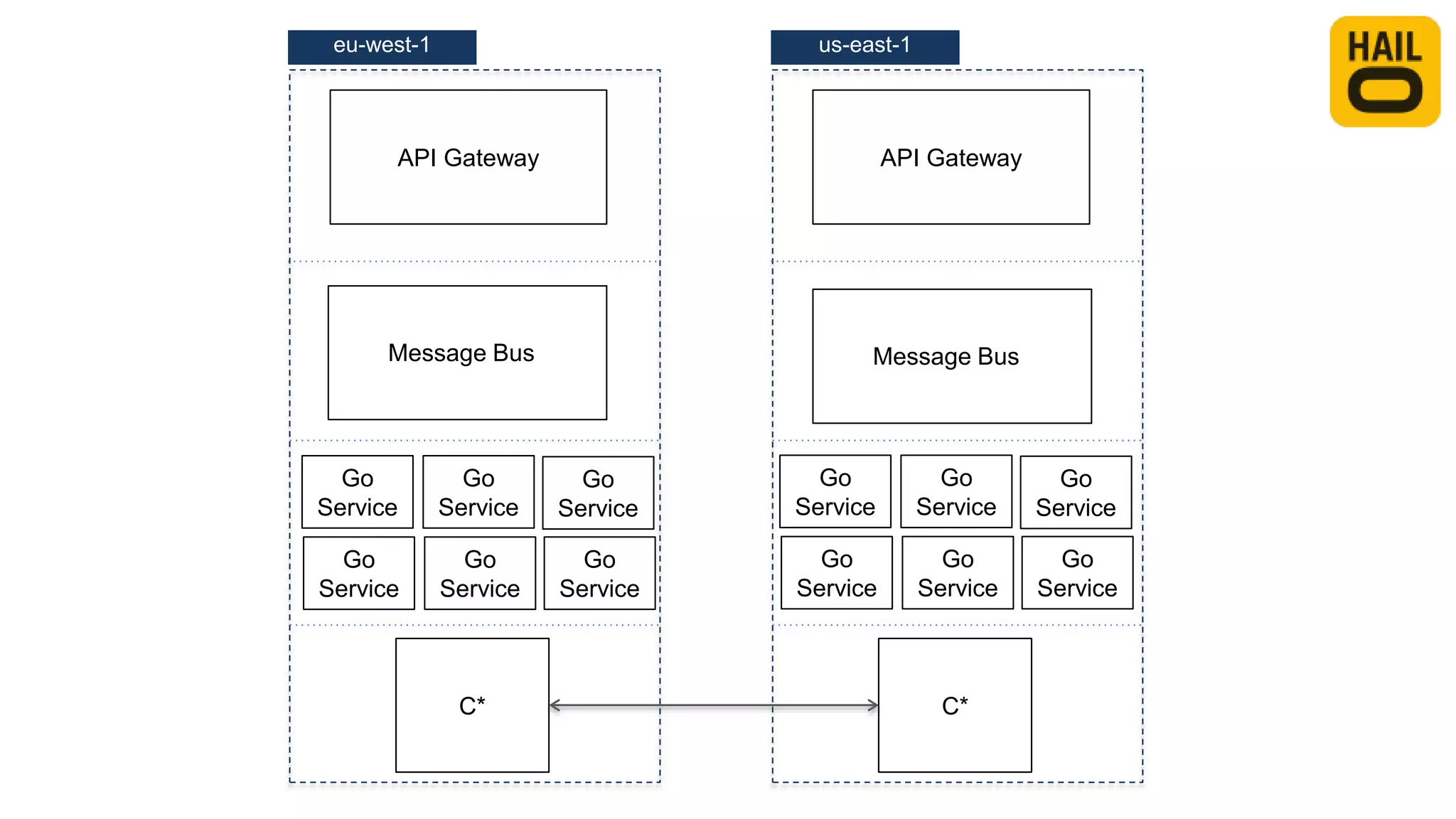

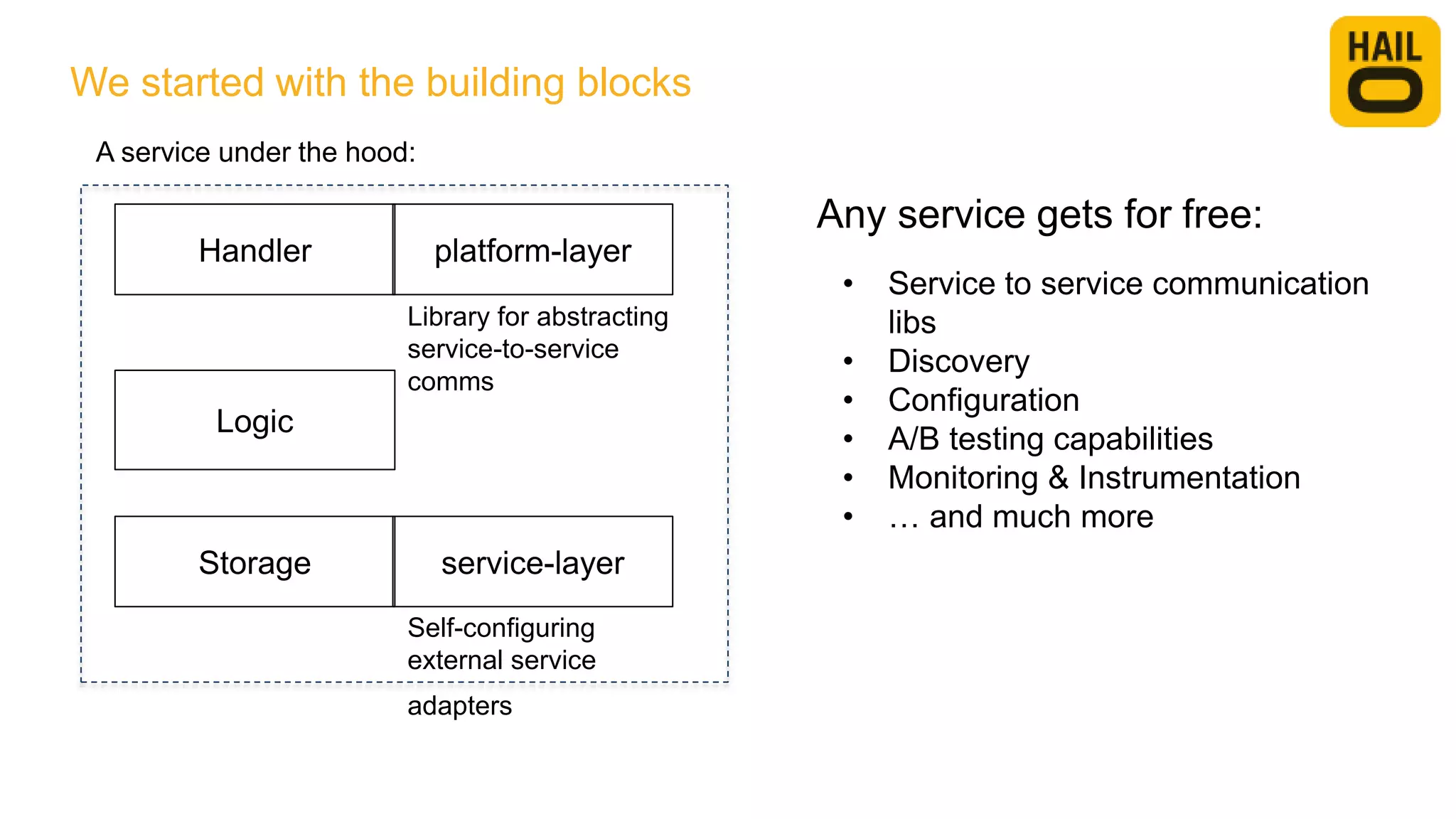

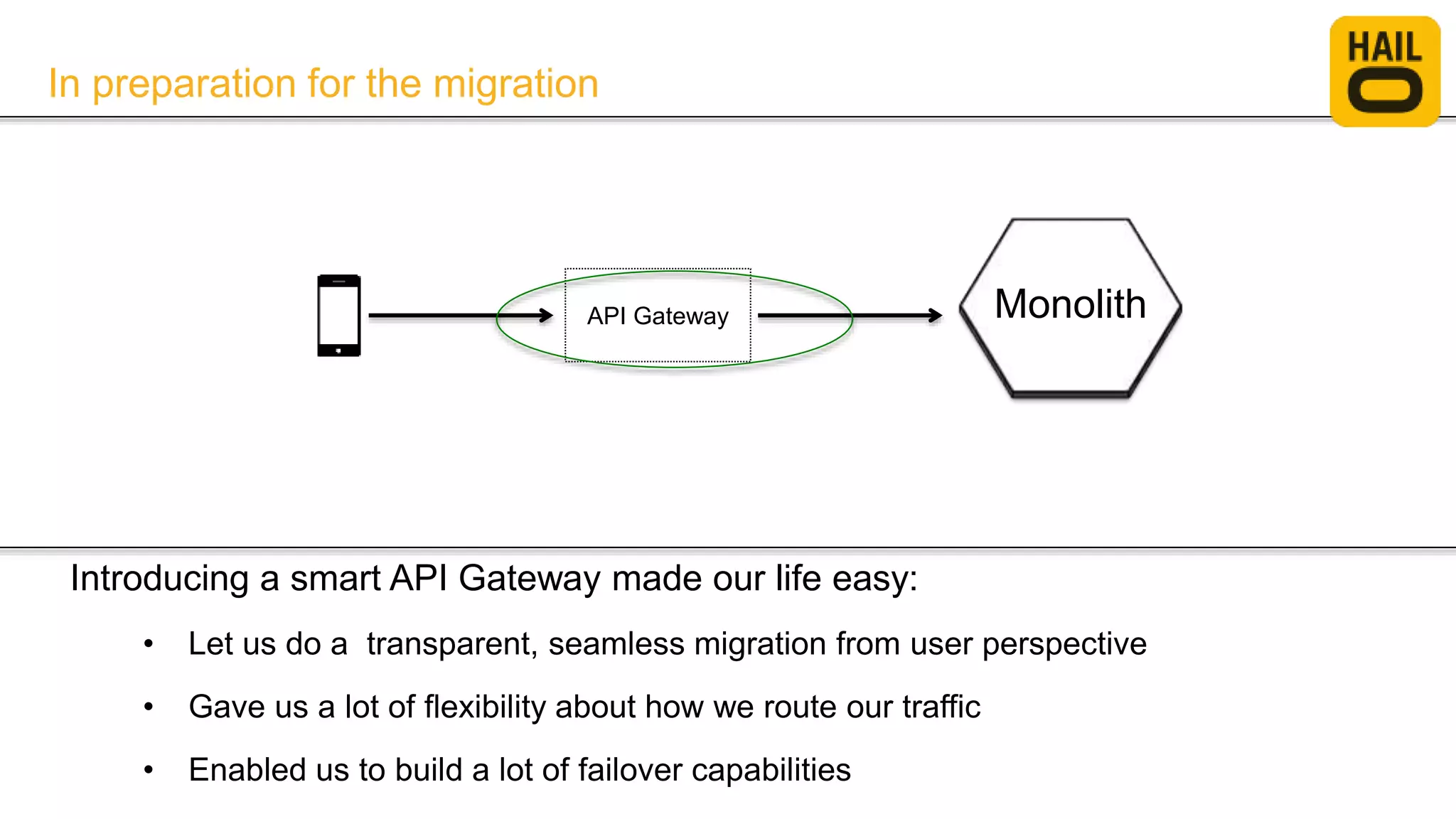

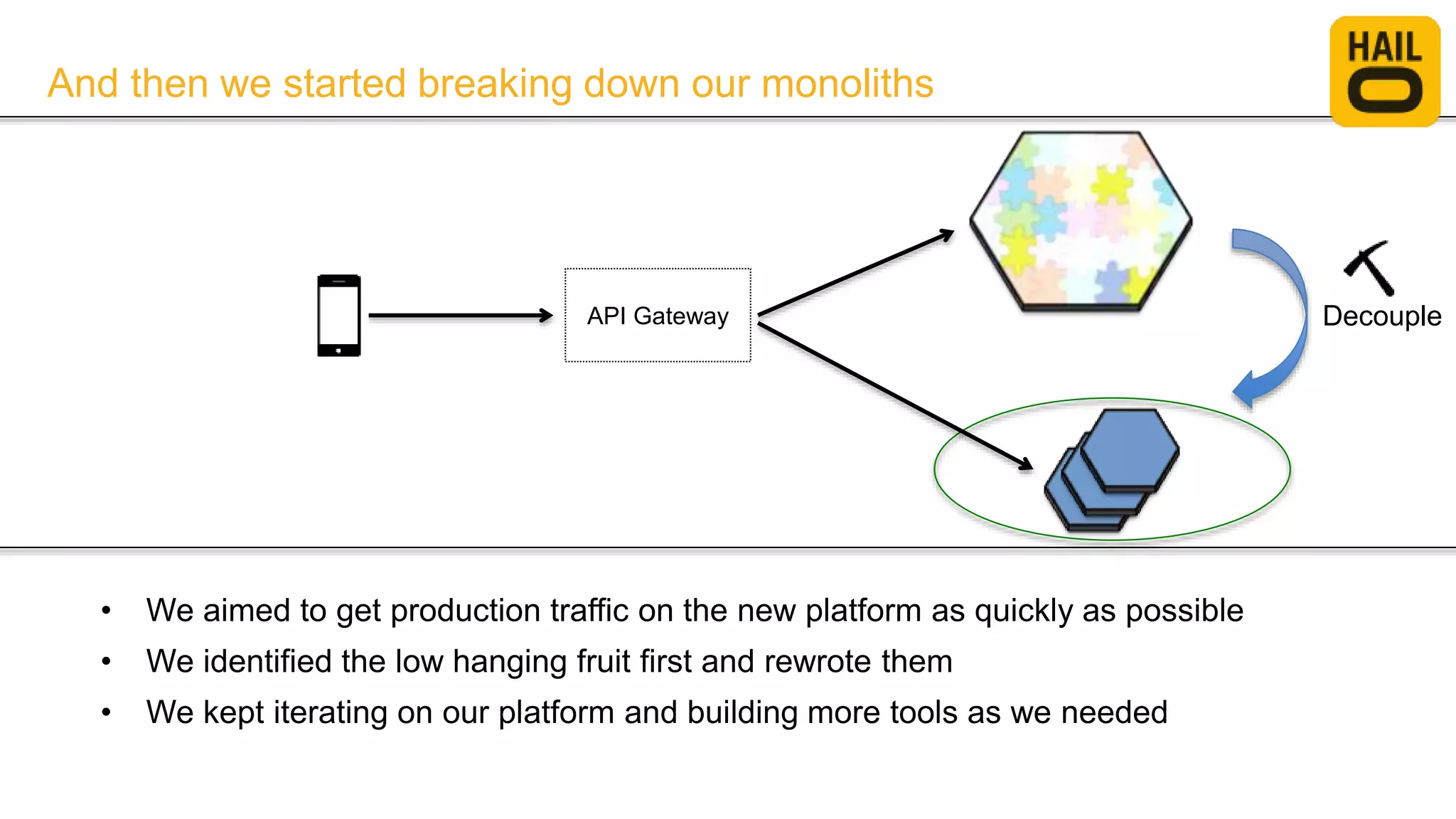

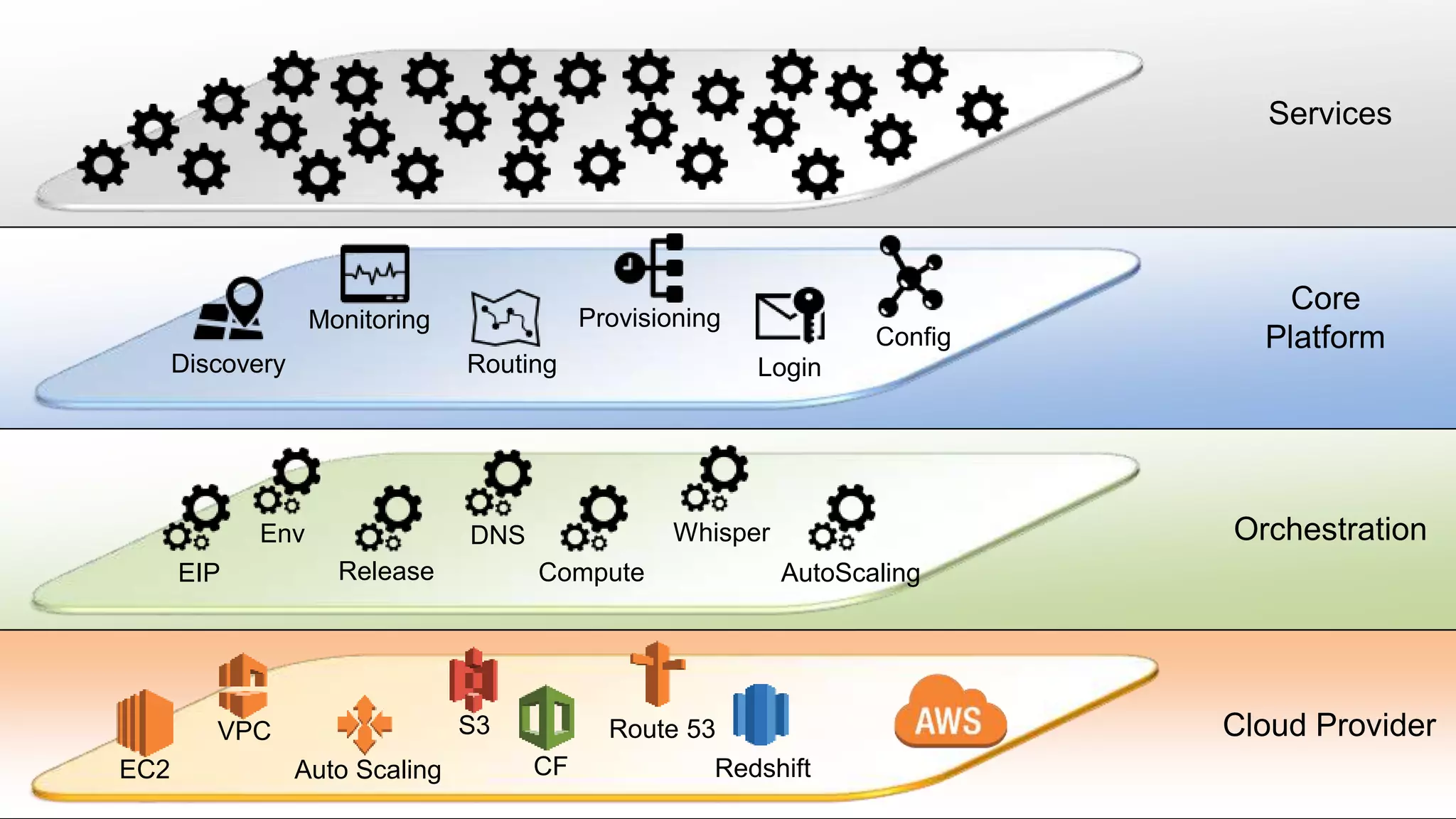

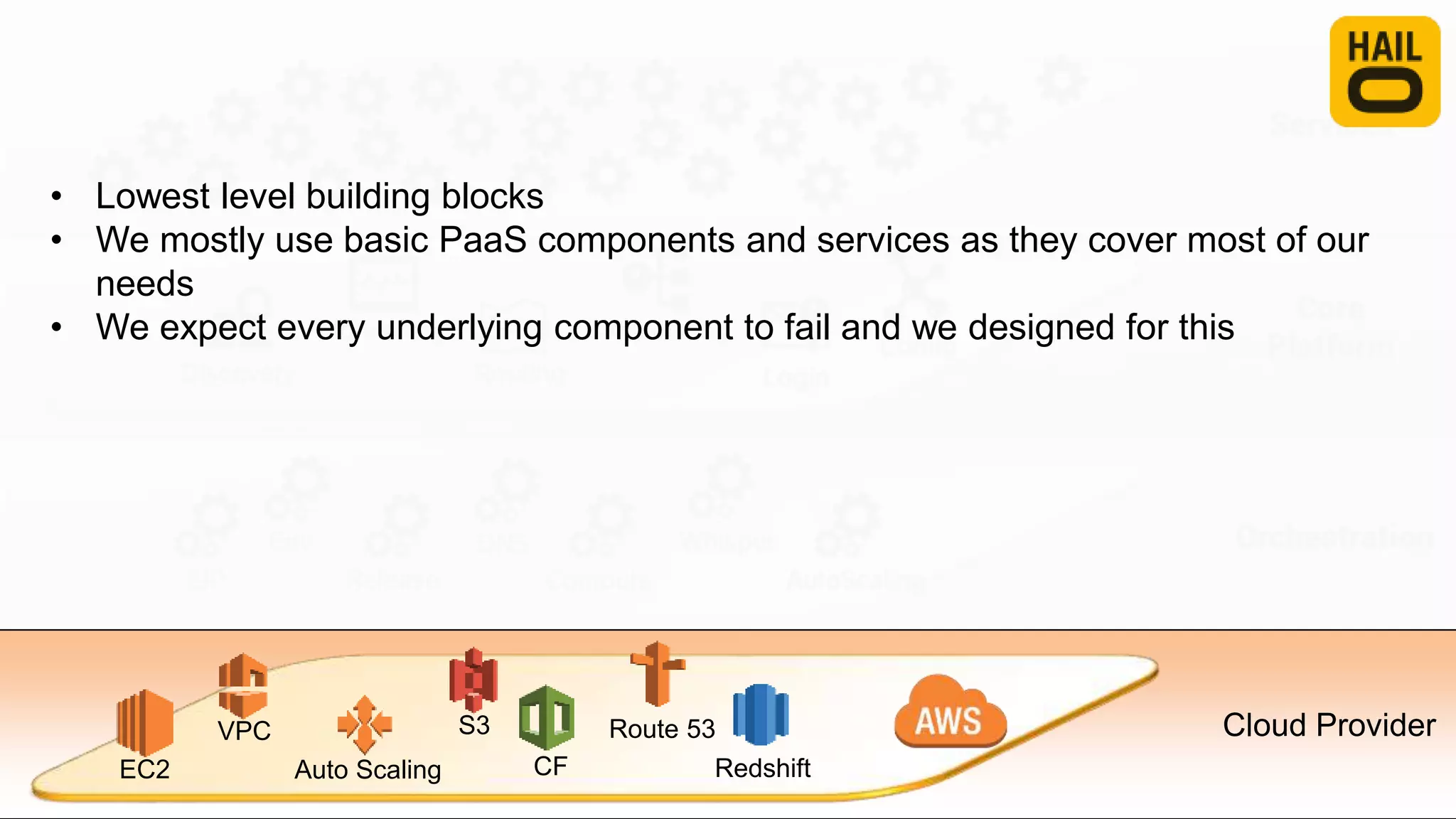

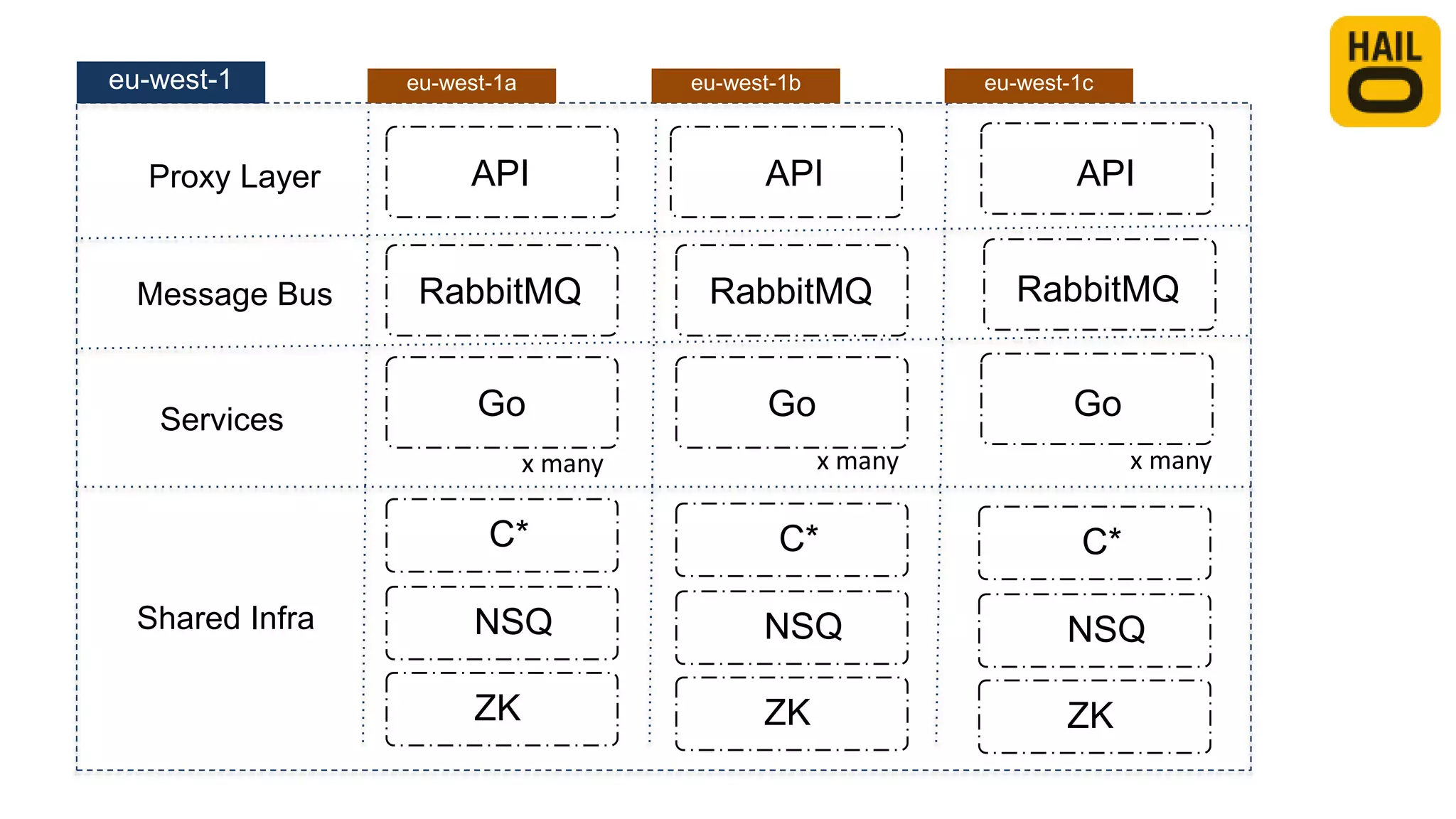

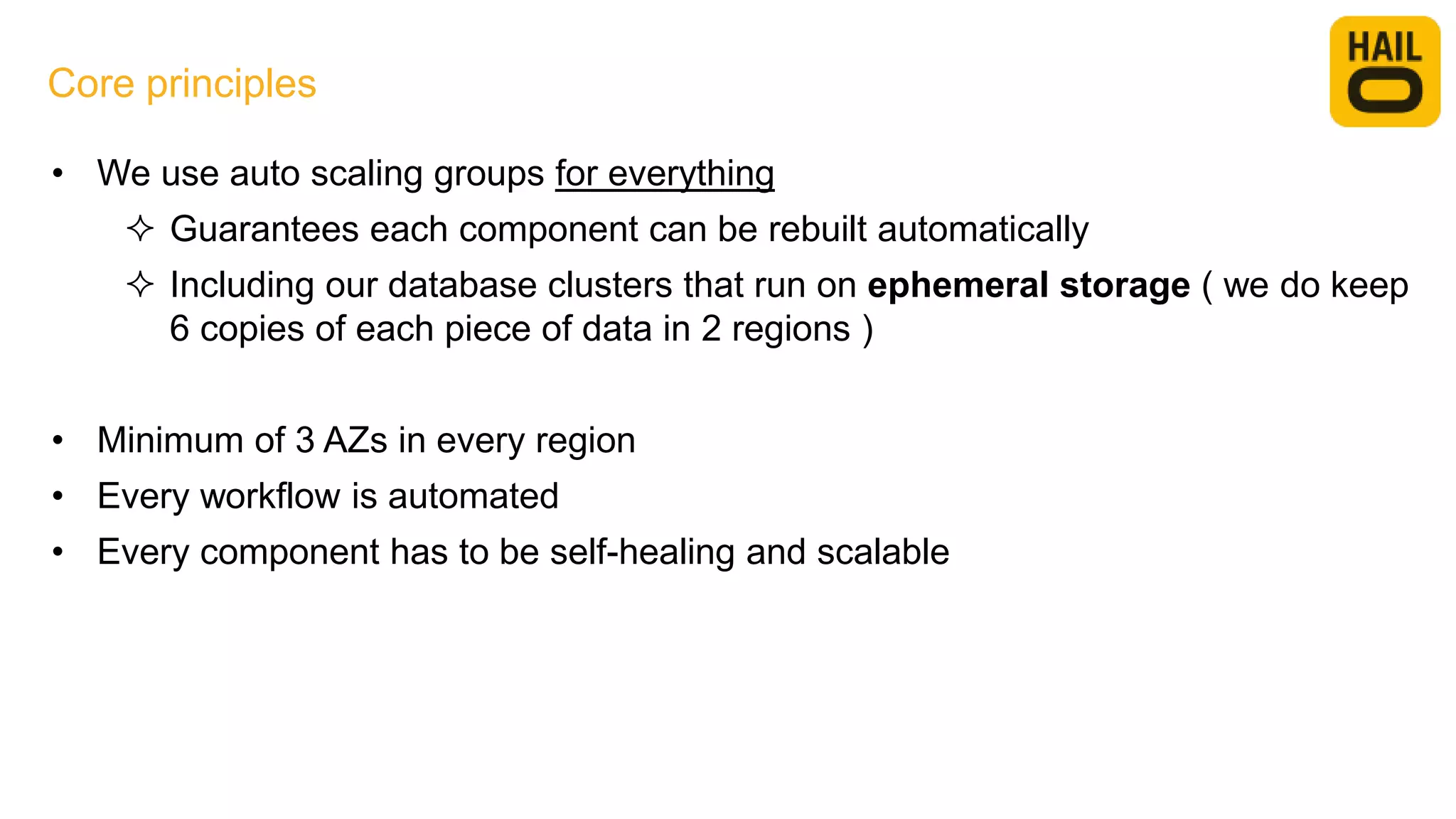

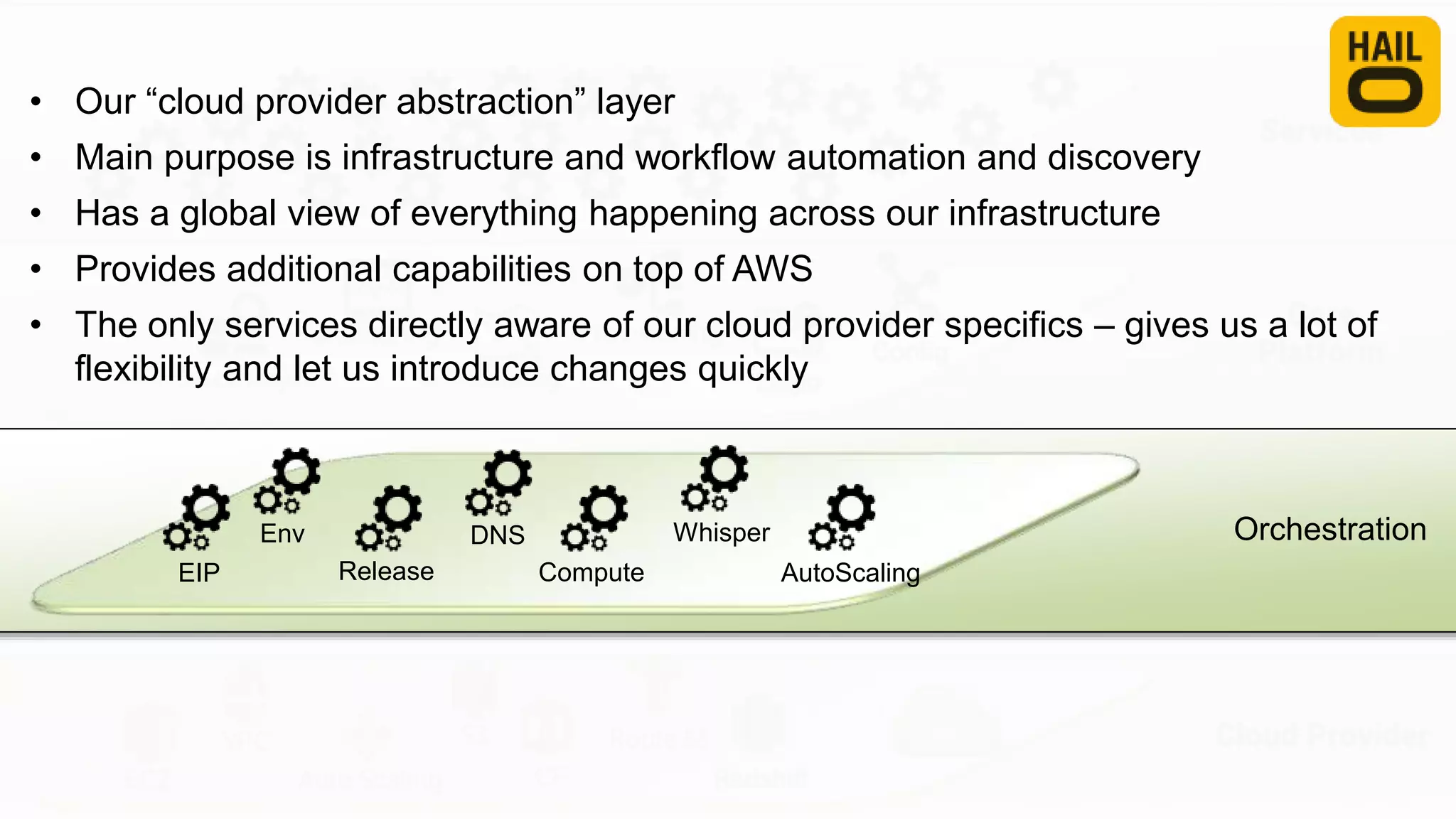

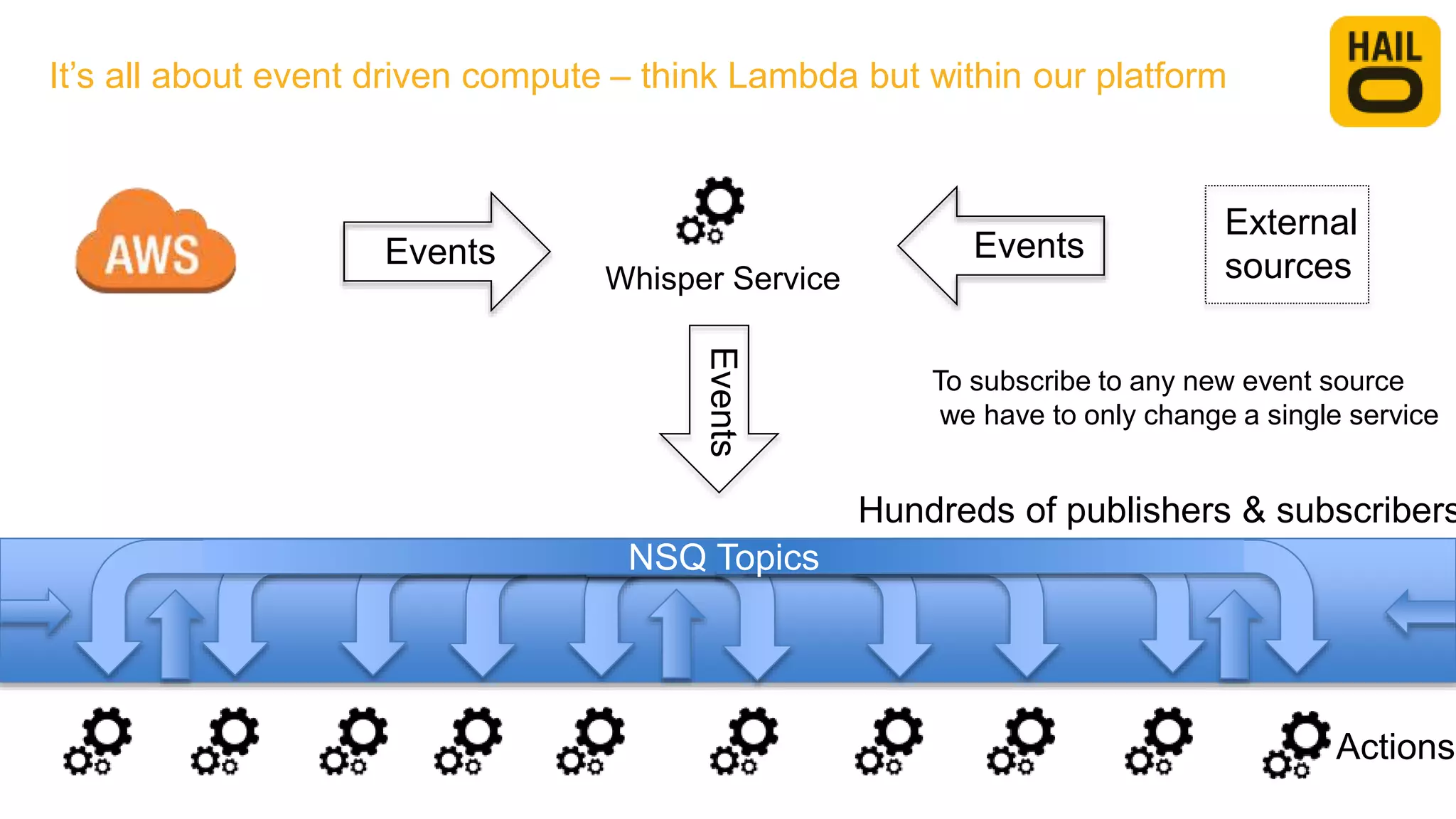

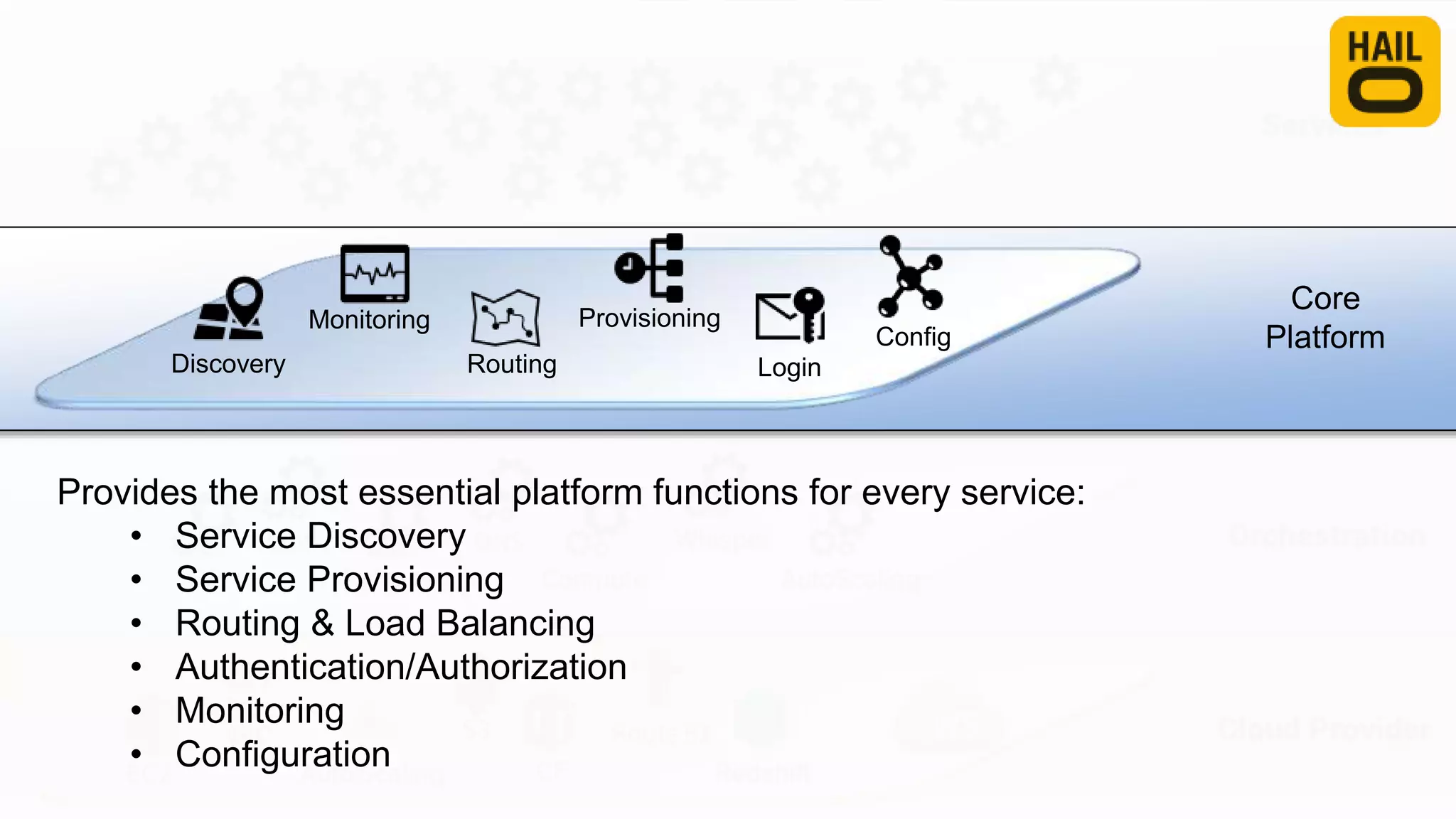

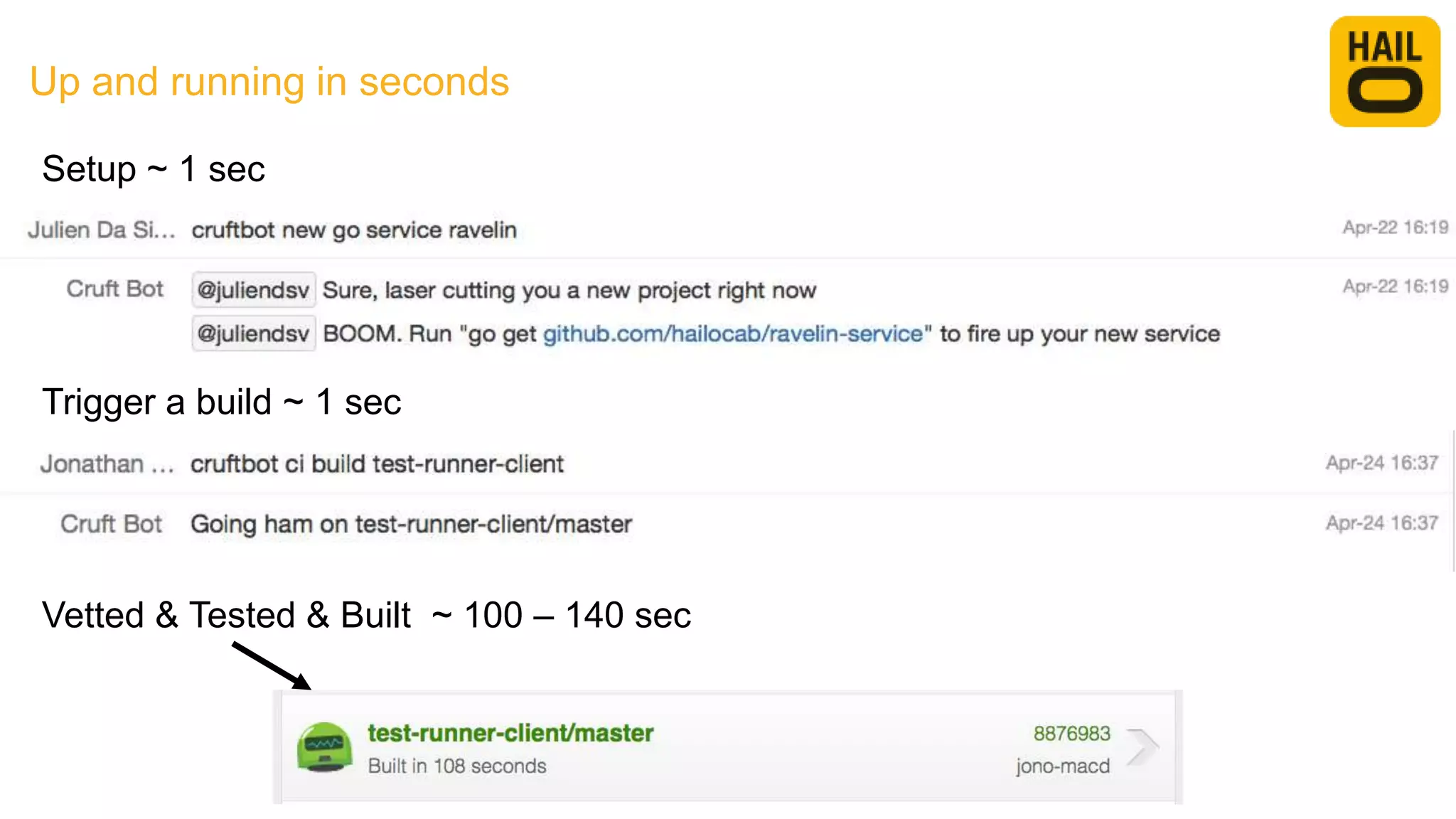

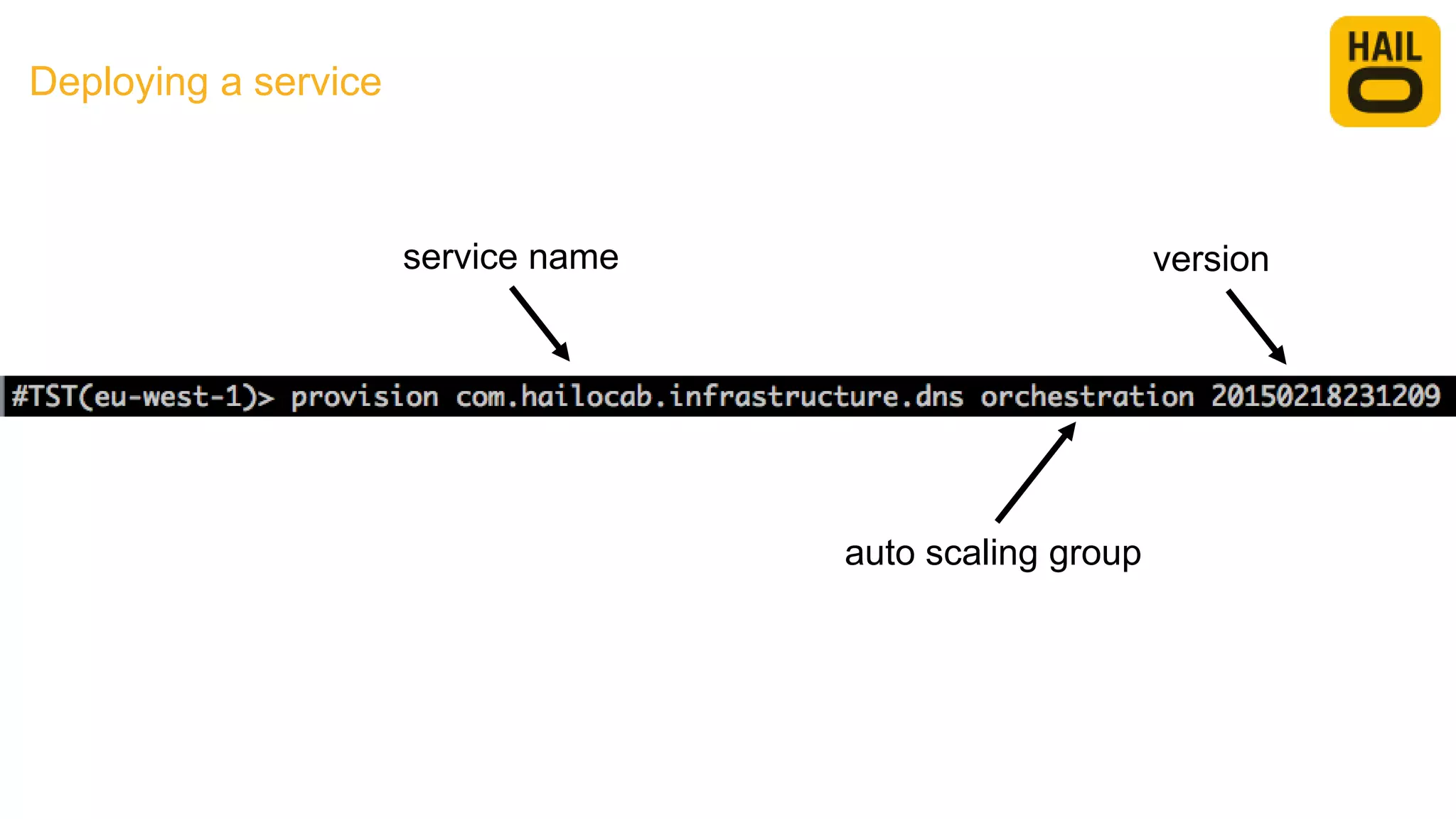

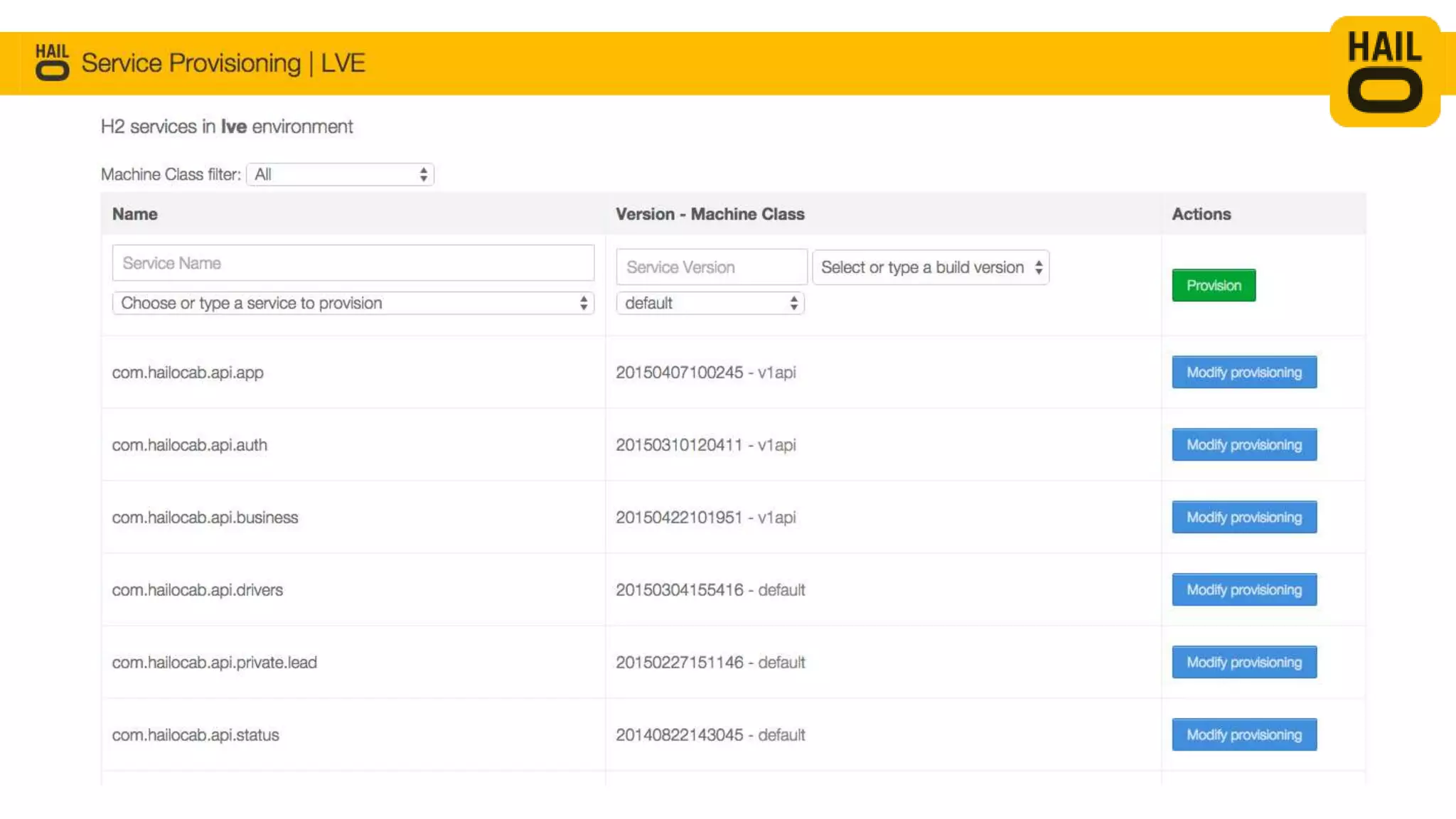

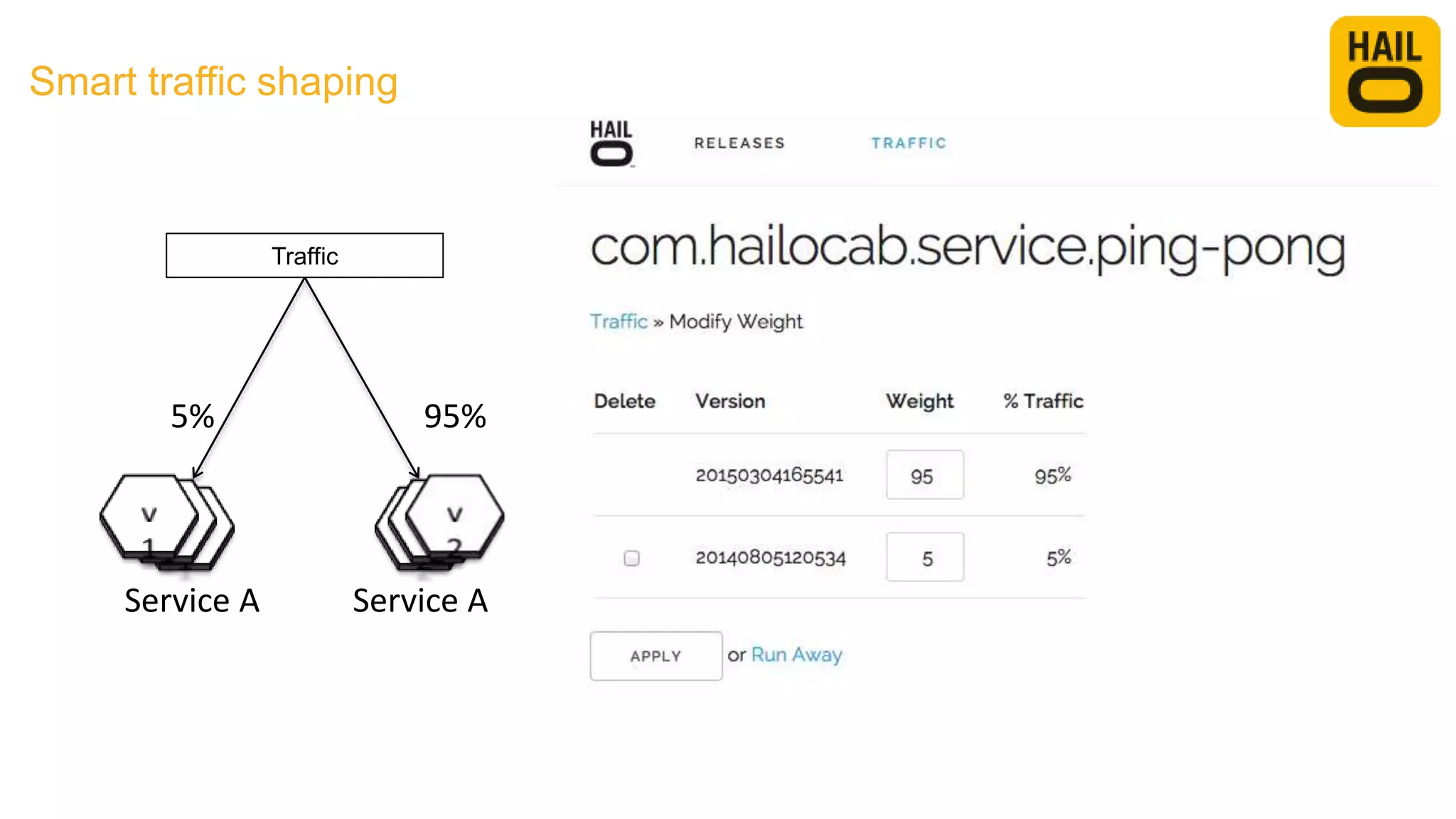

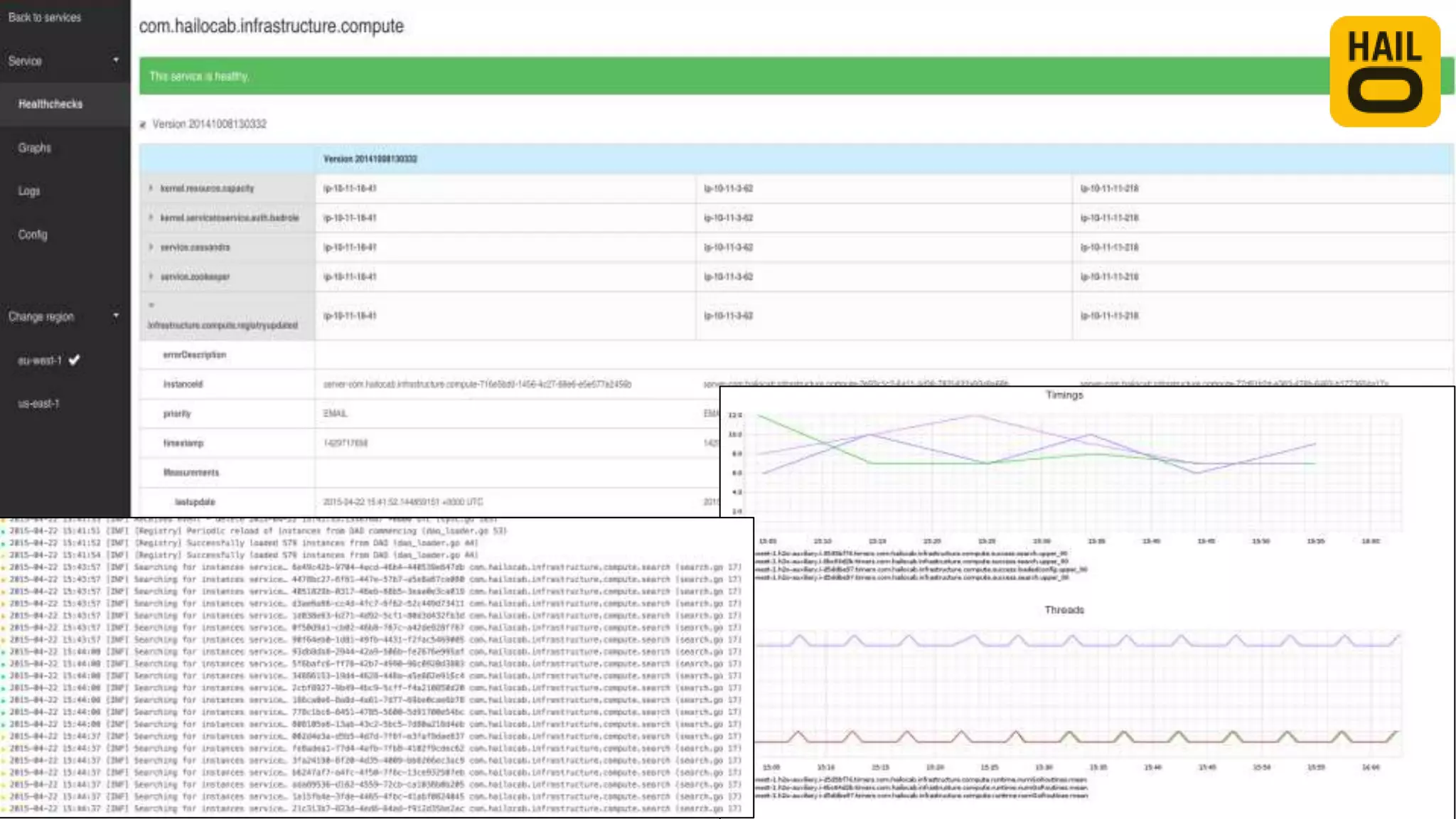

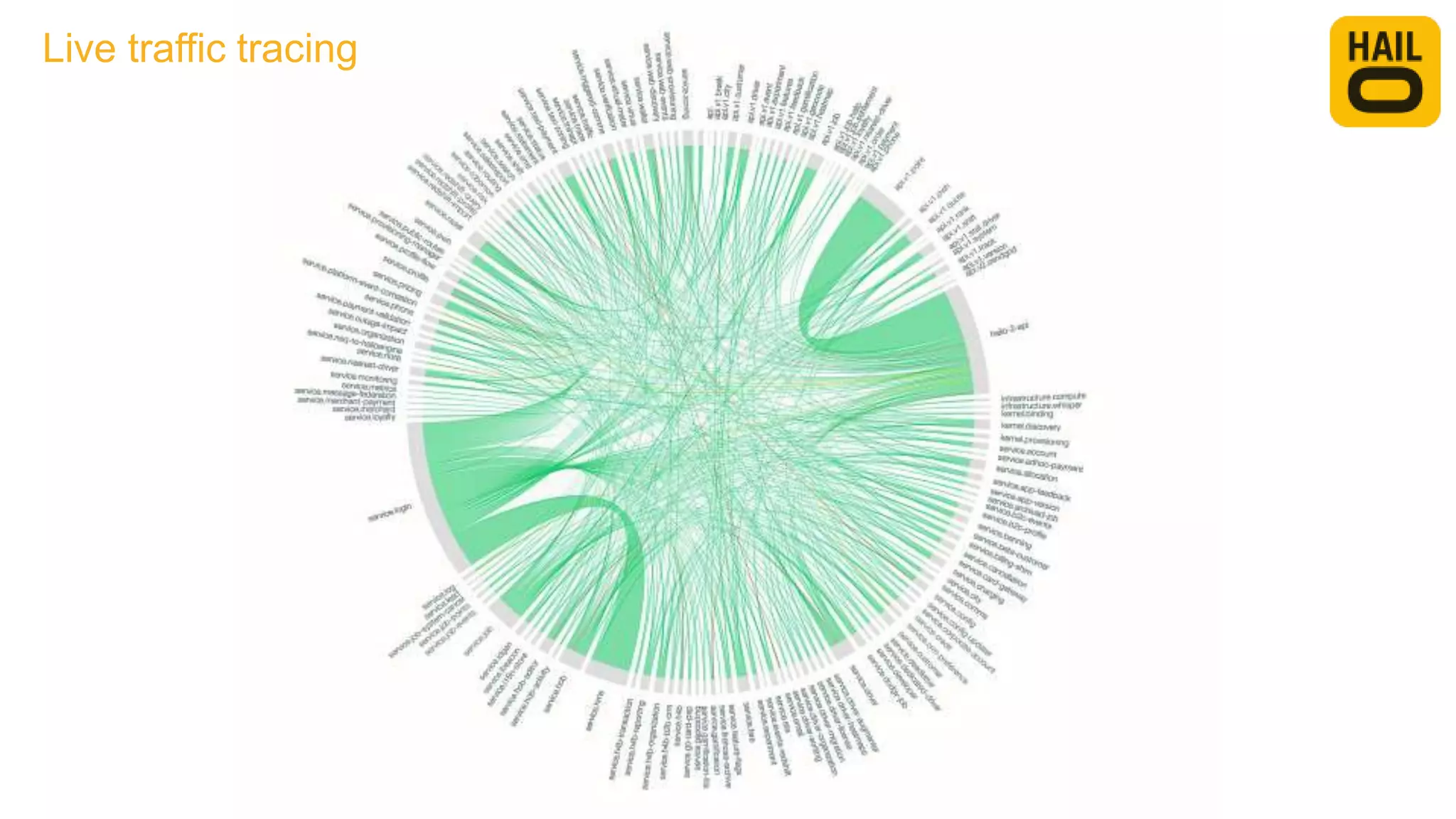

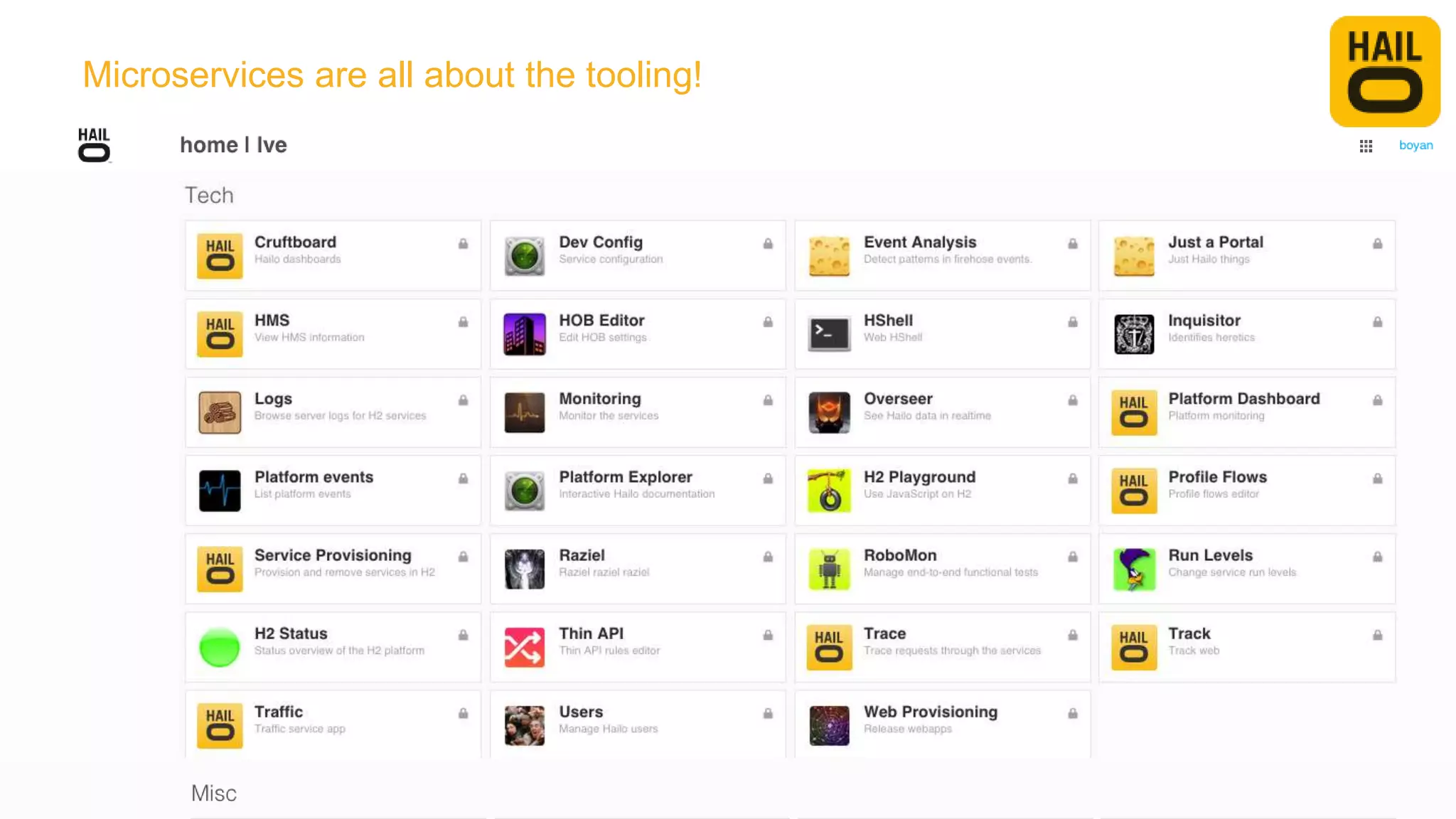

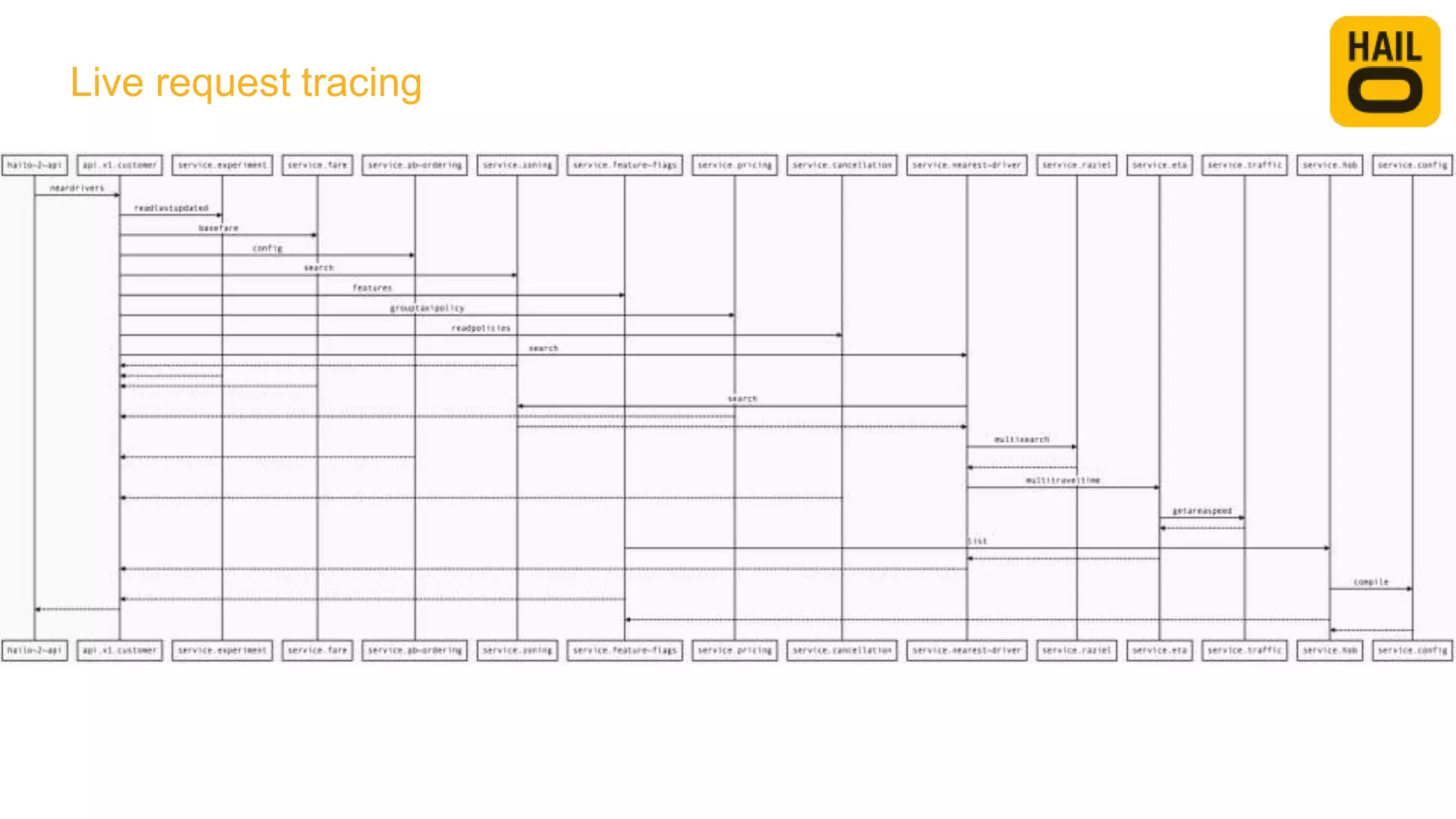

Moving to microservices was a transformational journey for the company, driven by the rapid growth of its system. It started with a monolithic architecture that became increasingly difficult to maintain and scale. This led it to redesign the system as microservices written in Go and running on Amazon Web Services (AWS). To support them, it built core platform capabilities for automated provisioning, routing, service discovery, monitoring, and more. These capabilities let it deploy new services rapidly and operate its distributed system more efficiently. The transition required changes to both its technology and its organizational culture.