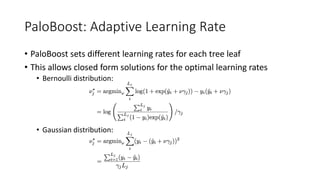

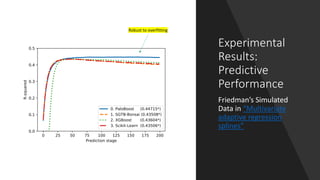

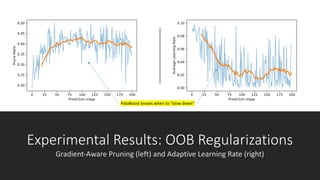

PaloBoost is a TreeBoost (gradient-boosted tree) algorithm designed to be more robust to overfitting. It uses out-of-bag (OOB) samples, the training samples left out of each boosting stage's subsample, to drive two regularization techniques: gradient-aware pruning and adaptive learning rates. The aim is to reduce sensitivity to hyperparameter choices while improving predictive performance and feature importance estimates. The implementation is available in the bonsai-dt framework, where potential improvements are under discussion.
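To make the role of the OOB samples concrete, here is a minimal sketch of the idea for squared loss with depth-1 trees (stumps). It is not the bonsai-dt implementation: the function names `fit_stump`, `palo_style_boost`, and `predict`, the parameters `subsample` and `max_lr`, and the handling of leaves with no OOB samples are all assumptions made for illustration. Each leaf gets its own learning rate, chosen to minimize the OOB loss and clipped to `[0, max_lr]`. For a stump, a rate of zero plays the role of pruning, since that leaf then contributes nothing.

```python
import numpy as np

def fit_stump(X, r):
    """Return (feature, threshold) of the split minimizing squared error on r."""
    best_j, best_t, best_sse = None, None, np.inf
    for j in range(X.shape[1]):
        # Dropping the largest value keeps the right-hand side non-empty.
        for t in np.unique(X[:, j])[:-1]:
            left = X[:, j] <= t
            sse = ((r[left] - r[left].mean()) ** 2).sum() \
                + ((r[~left] - r[~left].mean()) ** 2).sum()
            if sse < best_sse:
                best_j, best_t, best_sse = j, t, sse
    return best_j, best_t

def palo_style_boost(X, y, n_stages=50, subsample=0.7, max_lr=1.0, seed=0):
    """Stochastic boosting with OOB-driven per-leaf learning rates (sketch)."""
    rng = np.random.default_rng(seed)
    n = len(y)
    f0 = float(np.mean(y))
    pred = np.full(n, f0)
    stages = []
    for _ in range(n_stages):
        in_bag = rng.random(n) < subsample
        oob = ~in_bag
        r = y - pred  # negative gradient of squared loss
        j, t = fit_stump(X[in_bag], r[in_bag])
        if j is None:  # no valid split in this subsample
            continue
        left = X[:, j] <= t
        steps = []
        for leaf in (left, ~left):
            gamma = r[leaf & in_bag].mean()  # leaf estimate from in-bag data
            r_oob = r[leaf & oob]
            if r_oob.size == 0 or gamma == 0.0:
                nu = 0.0  # assumption: no OOB evidence means no update
            else:
                # The rate minimizing sum((r_oob - nu * gamma)**2) is
                # mean(r_oob) / gamma; clip it to [0, max_lr].
                nu = float(np.clip(r_oob.mean() / gamma, 0.0, max_lr))
            steps.append(nu * gamma)
            pred[leaf] += nu * gamma
        stages.append((j, t, steps[0], steps[1]))
    return f0, stages

def predict(model, X):
    """Sum the stage contributions on top of the initial constant."""
    f0, stages = model
    out = np.full(X.shape[0], f0)
    for j, t, step_left, step_right in stages:
        out += np.where(X[:, j] <= t, step_left, step_right)
    return out
```

In this sketch, a leaf whose in-bag estimate points the wrong way on the OOB samples receives a rate of zero, so later stages tend to contribute less once the model starts fitting noise. That is the intuition behind the reduced sensitivity to the number of stages and the maximum learning rate.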