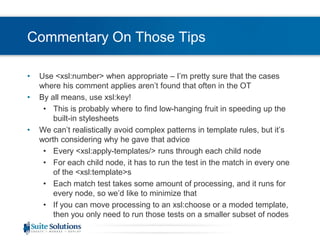

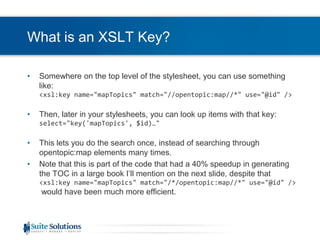

This document discusses techniques for improving the performance of DITA-OT processing. It identifies some common pain points with DITA-OT performance related to hardware, memory, and inefficient XSLT code. It provides tips for optimizing hardware configuration, Java memory settings, and writing efficient XSLT using techniques like caching parsed files, using keys instead of repeated searches, and avoiding inefficient patterns. Measurement of performance in different environments and with profiling tools is emphasized to identify the most impactful optimizations.

![XSLT PerformanceStylesheet developers don’t necessarily think about what needs to happen behind the scenesExample:<xsl:variable name=“example” select=“//*[@id=$refid]”/>This searches the whole document – fine if that’s what you want, but not if you mean:<xsl:variable name=“example” select=“..//*[@id=$refid]”/>In the context of a document where @id is unique, both would behave the same, but one would be slower than the otherExcept:this could theoretically be optimized if the @id attribute was an ID type, and you have a DTD, and the stylesheet processor has that optimization built in, which leads us back to …Measurement is also useful for stylesheetsSaxon comes in a free version and commercial versionsNot that expensive, with more optimizations, which might matter for your workload – or might not](https://image.slidesharecdn.com/otperformancewebinar-100819010933-phpapp01/85/Ot-performance-webinar-11-320.jpg)

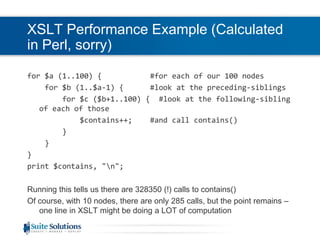

![XSLT Performance (2)XPath tends to have one line requests, but that one line can hide a lot of computationWhat needs to happen to process this?preceding-sibling::*[following-sibling::*[contains(@class, ‘ topic/ul ‘)]]Preceding-sibling has to check each previous siblingFor each one, following-sibling has to check every following-siblingAnd contains() itself can’t be that efficient because it needs to hunt within @class for ‘ topic/ul ‘Some numbers: Let’s look at 100 nodes, and let’s pretend that there is no topic/ul, so the test never succeeds. Let’s run this test on all 100 nodes in sequenceWe could do the math, but it’s easier to write a program](https://image.slidesharecdn.com/otperformancewebinar-100819010933-phpapp01/85/Ot-performance-webinar-13-320.jpg)

![Tips From Mike KayEight tips for how to write efficient XSLT:Avoid repeated use of "//item".Don't evaluate the same node-set more than once; save it in a variable.Avoid <xsl:number> if you can. For example, by using position().Use <xsl:key>, for example to solve grouping problems.Avoid complex patterns in template rules. Instead, use <xsl:choose> within the rule.Be careful when using the preceding[-sibling] or following[-sibling] axes. This often indicates an algorithm with n-squared performance.Don't sort the same node-set more than once. If necessary, save it as a result tree fragment and access it using the node-set() extension function.To output the text value of a simple #PCDATA element, use <xsl:value-of> in preference to <xsl:apply-templates>.](https://image.slidesharecdn.com/otperformancewebinar-100819010933-phpapp01/85/Ot-performance-webinar-15-320.jpg)

![More On Slow XSLTConsider what’s inside a loopExample:If you have a template, and the template defines a variable:<xsl:variable name=“topicrefs” select=“//*[contains(@class, ‘ map/topicref ‘)]”/>(This isn’t a good idea to start with because of //)This variable will have the same value every timeSo why not only construct it once?Move it out of the template and make it a global variableOne customer speeded up TOC generation by around 40% on a huge book](https://image.slidesharecdn.com/otperformancewebinar-100819010933-phpapp01/85/Ot-performance-webinar-18-320.jpg)