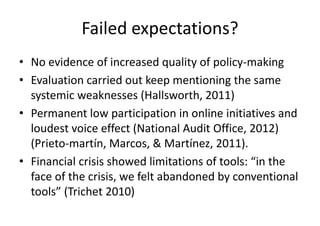

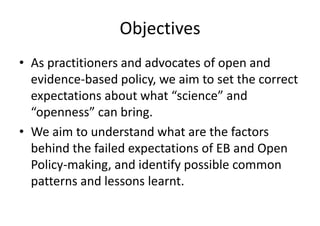

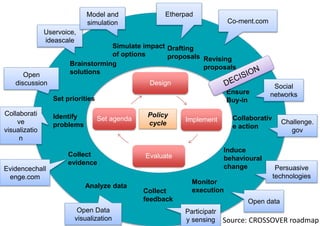

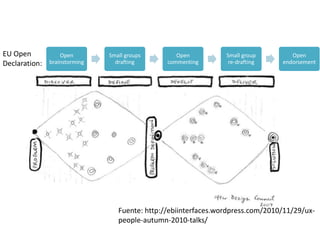

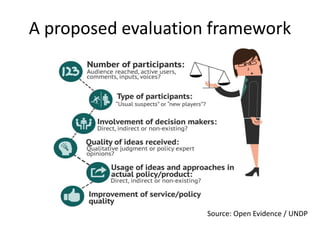

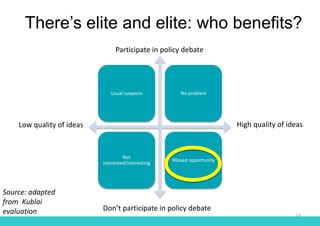

Evidence-based and open policy-making approaches have fallen short of expectations because of unrealistic assumptions that they could substitute for political decision-making and an overemphasis on data-driven solutions. Both approaches work best when they are integrated and support, rather than replace, the policy process and the roles of policymakers. A more realistic perspective is needed, one that accounts for the complexity of decision-making and considers the full policy cycle rather than decisions alone. Evaluation frameworks should also assess how open and evidence-based initiatives affect different stakeholders and whether they actually serve the public interest.