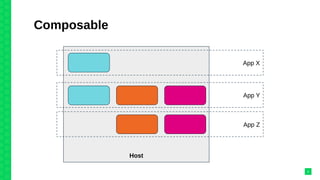

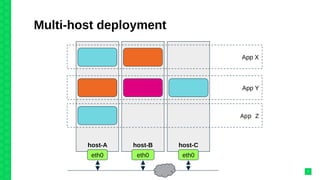

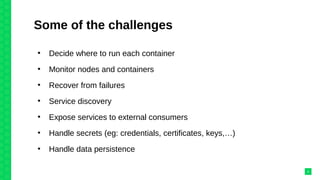

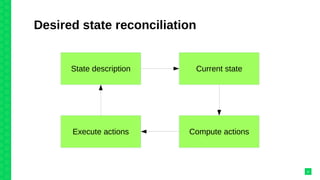

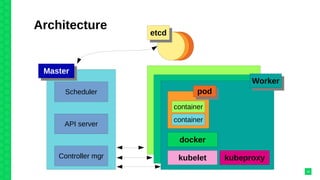

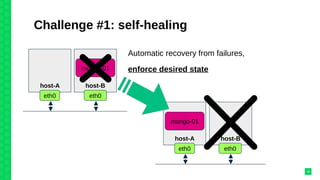

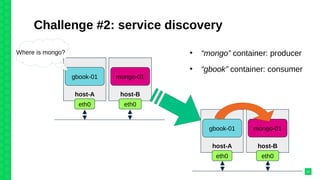

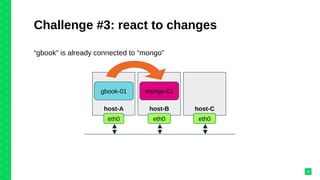

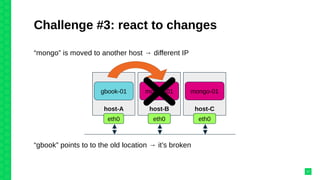

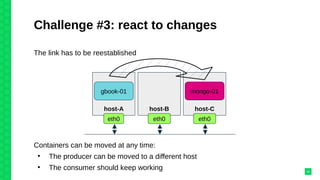

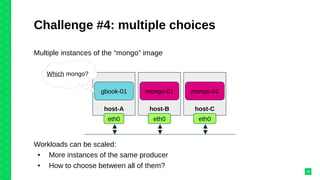

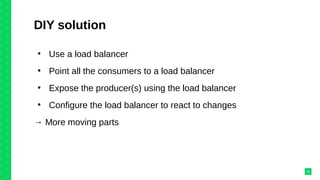

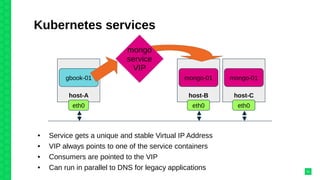

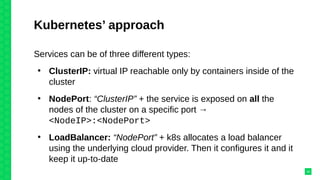

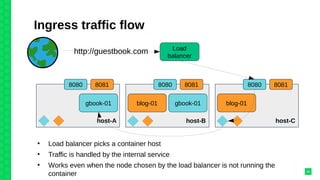

This document covers orchestrating Linux containers, with a focus on building scalable microservice architectures across hybrid cloud environments. It outlines the main challenges of container deployment, including service discovery, self-healing, and data persistence, and presents Kubernetes as an orchestration engine that manages application state and addresses these challenges. Practical examples include managing a guestbook application with MongoDB, showing how to handle changes and allocate resources for containers.
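As a rough illustration of what "managing application state" and self-healing can mean in practice, the toy sketch below compares a declared desired state with the containers actually running and lists the actions needed to close the gap. It is not Kubernetes code and uses no Kubernetes API. The function name `reconcile`, the service names, and the replica counts are all hypothetical, chosen only to echo the guestbook example.

```python
from collections import Counter


def reconcile(desired, running):
    """Return the actions that move `running` toward `desired`.

    desired: dict mapping a service name to its wanted replica count
    running: list of service names, one entry per live container
    (Both shapes are assumptions made for this sketch.)
    """
    actual = Counter(running)
    actions = []
    # Visit every service that is either wanted or currently running.
    for name in sorted(set(desired) | set(actual)):
        diff = desired.get(name, 0) - actual.get(name, 0)
        if diff > 0:
            # Too few replicas: start the missing ones (self-healing).
            actions += [("start", name)] * diff
        elif diff < 0:
            # Too many replicas, or a service no longer wanted: stop the extras.
            actions += [("stop", name)] * (-diff)
    return actions


if __name__ == "__main__":
    # Hypothetical desired state: three guestbook replicas and one MongoDB.
    desired = {"guestbook": 3, "mongodb": 1}
    # Two guestbook containers have died, so only one is still running.
    running = ["guestbook", "mongodb"]
    print(reconcile(desired, running))
```

An orchestrator would run a comparison like this repeatedly, so that a crashed container simply shows up as a gap between desired and actual state on the next pass.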