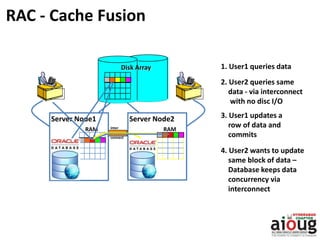

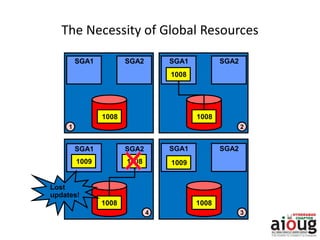

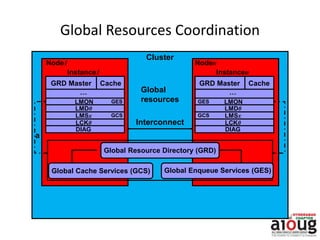

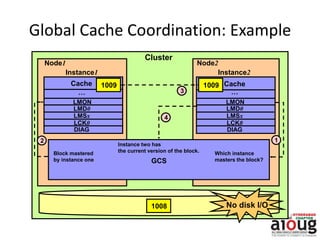

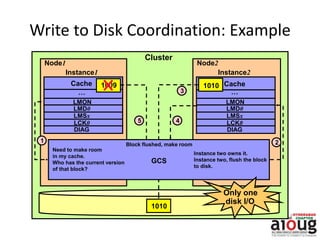

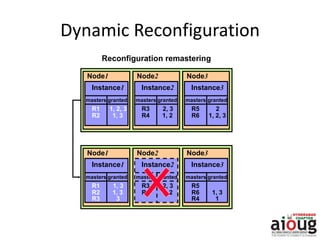

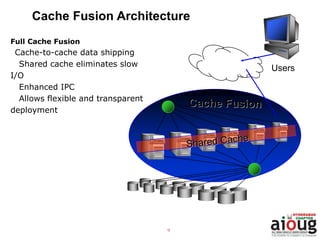

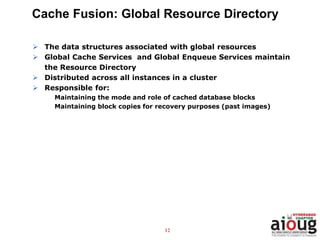

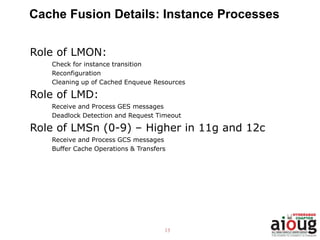

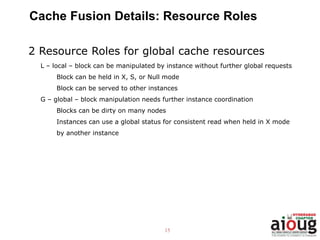

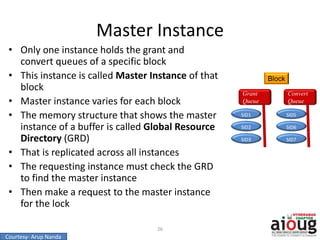

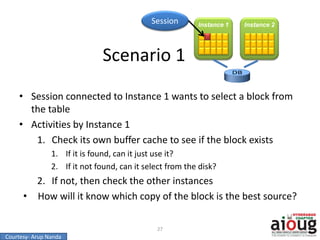

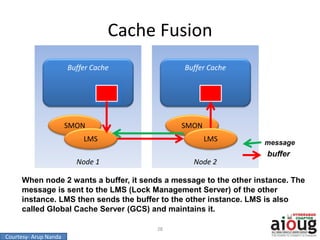

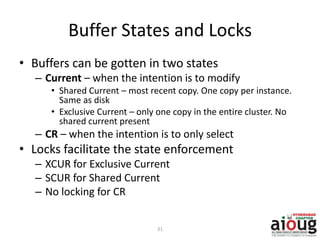

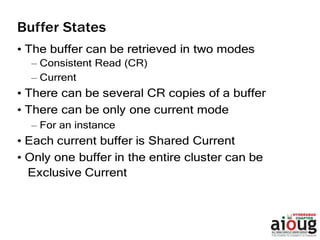

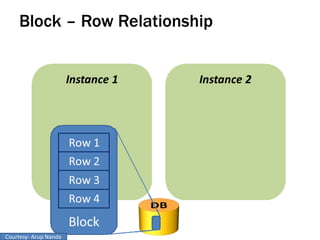

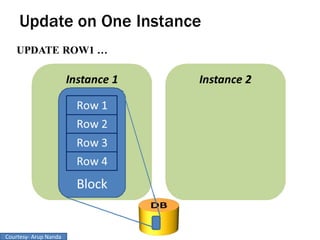

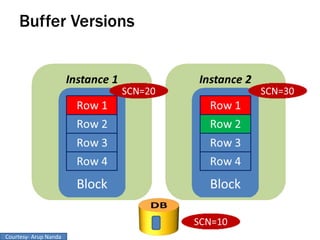

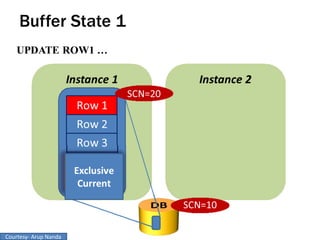

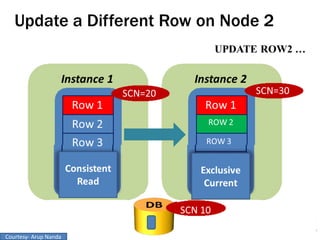

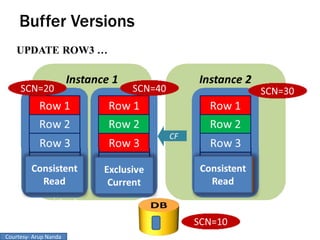

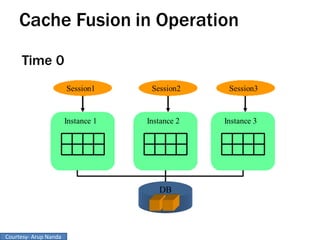

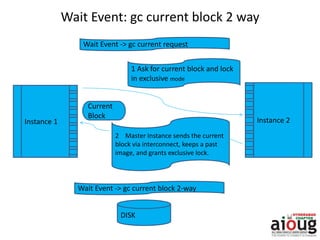

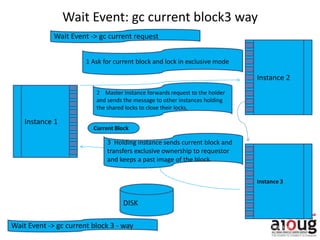

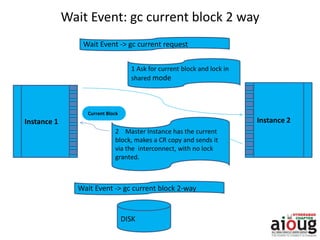

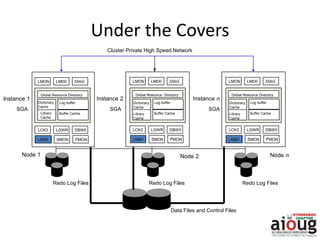

Cache Fusion lets the instances of an Oracle Real Application Clusters (RAC) database share cached data blocks in memory, which avoids disk I/O and improves performance. Three aspects are central: the Global Cache Service (GCS) coordinates cached data across instances; each cached block carries a mode and a role that together maintain data consistency; and past images of dirty blocks are kept for recovery purposes. Depending on its assigned role and mode, a cached block can be accessed locally or globally.
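The interplay of mode, role, and past image can be sketched with a small toy model. This is only an illustration, not Oracle's implementation: the class and method names (`ToyCluster`, `load_exclusive`, `transfer_for_write`) are hypothetical, a single block is tracked, and the three-character labels (mode, role, past-image count) follow the notation commonly used in Cache Fusion walkthroughs, assuming null/shared/exclusive modes and local/global roles.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    NULL = "N"       # no access rights on the block
    SHARED = "S"     # read access
    EXCLUSIVE = "X"  # write access


class Role(Enum):
    LOCAL = "L"      # block is dirty in at most this one instance
    GLOBAL = "G"     # block may be dirty in more than one instance


@dataclass
class BlockState:
    mode: Mode = Mode.NULL
    role: Role = Role.LOCAL
    dirty: bool = False
    past_images: int = 0

    def label(self) -> str:
        # e.g. "XL0" = exclusive mode, local role, no past image
        return f"{self.mode.value}{self.role.value}{self.past_images}"


class ToyCluster:
    """Tracks the state of ONE block across instances (hypothetical model)."""

    def __init__(self, instance_names):
        self.state = {name: BlockState() for name in instance_names}

    def load_exclusive(self, inst):
        # Instance reads the block from disk for update: exclusive, local.
        self.state[inst] = BlockState(Mode.EXCLUSIVE, Role.LOCAL)

    def modify(self, inst):
        s = self.state[inst]
        if s.mode is not Mode.EXCLUSIVE:
            raise ValueError(f"{inst} does not hold the block exclusively")
        s.dirty = True

    def transfer_for_write(self, holder, requester):
        # Ship the current block from holder to requester, memory to memory.
        src, dst = self.state[holder], self.state[requester]
        if src.mode is not Mode.EXCLUSIVE:
            raise ValueError(f"{holder} does not hold the block exclusively")
        was_dirty = src.dirty
        # A dirty block now exists in two caches, so the role becomes global
        # and the sender keeps a past image instead of discarding its copy.
        role = Role.GLOBAL if was_dirty else Role.LOCAL
        src.mode, src.role, src.dirty = Mode.NULL, role, False
        if was_dirty:
            src.past_images += 1
        dst.mode, dst.role, dst.dirty = Mode.EXCLUSIVE, role, was_dirty

    def labels(self):
        return {name: s.label() for name, s in self.state.items()}


cluster = ToyCluster(["A", "B"])
cluster.load_exclusive("A")
cluster.modify("A")
cluster.transfer_for_write("A", "B")
print(cluster.labels())
```

In this sketch, instance A ends up holding only a past image in null mode while B holds the current block exclusively, and both carry the global role because the dirty block was never written to disk in between. Disk writes, shared-mode reads, and the discarding of past images after a write are deliberately left out.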