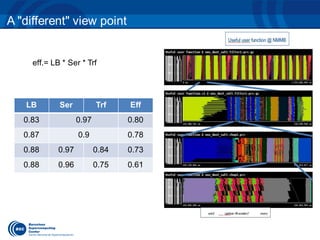

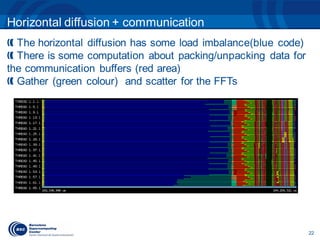

The document discusses the optimization of an Earth science atmospheric application using the OmpSs programming model, with an emphasis on improving the performance and scalability of the NMMB/BSC-CTM model. It presents performance metrics, parallelization strategies, and an incremental approach to parallel programming that achieves significant speedups. Future work includes investigating multithreaded MPI and porting more of the code to OmpSs for data assimilation simulations.