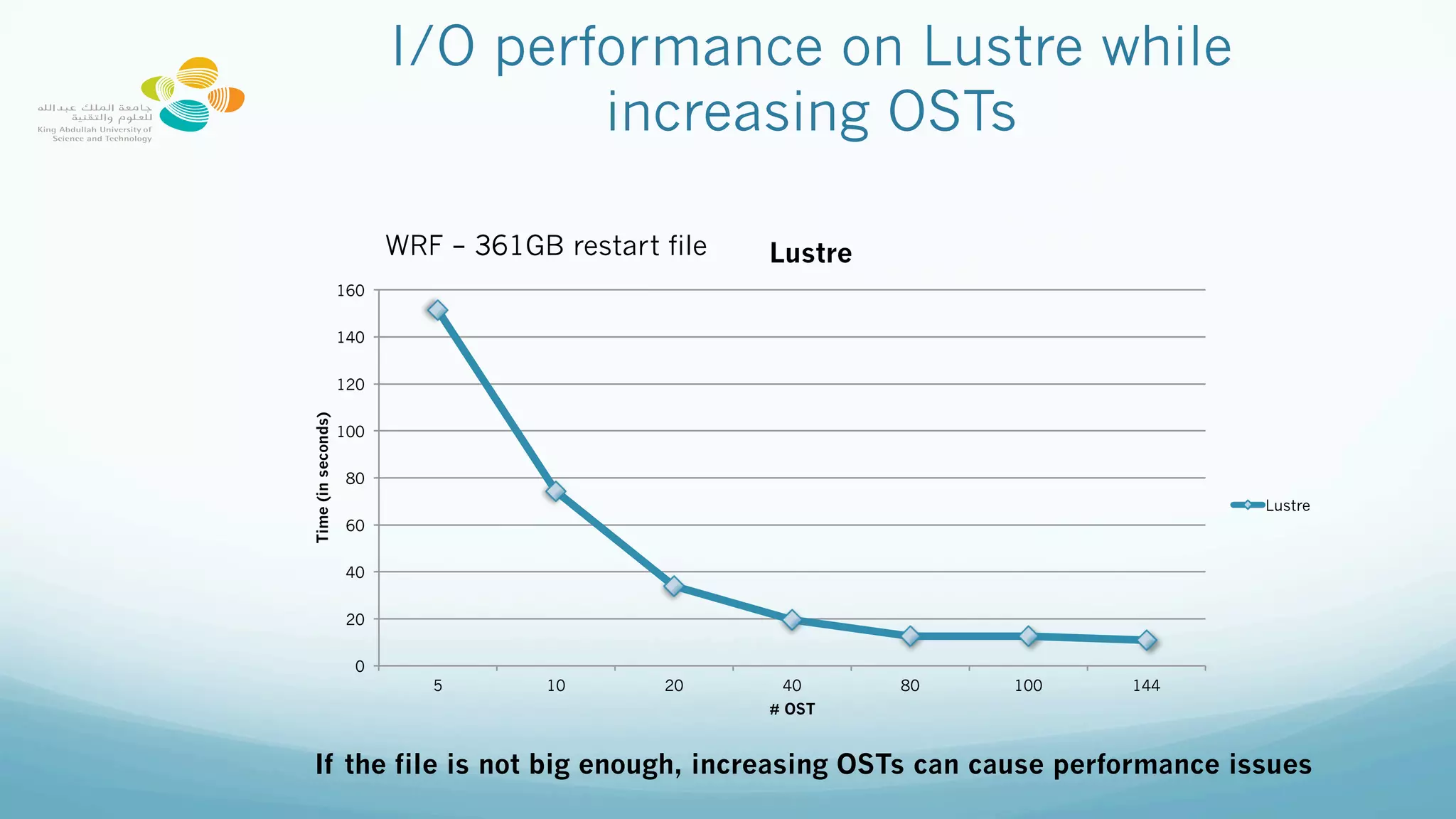

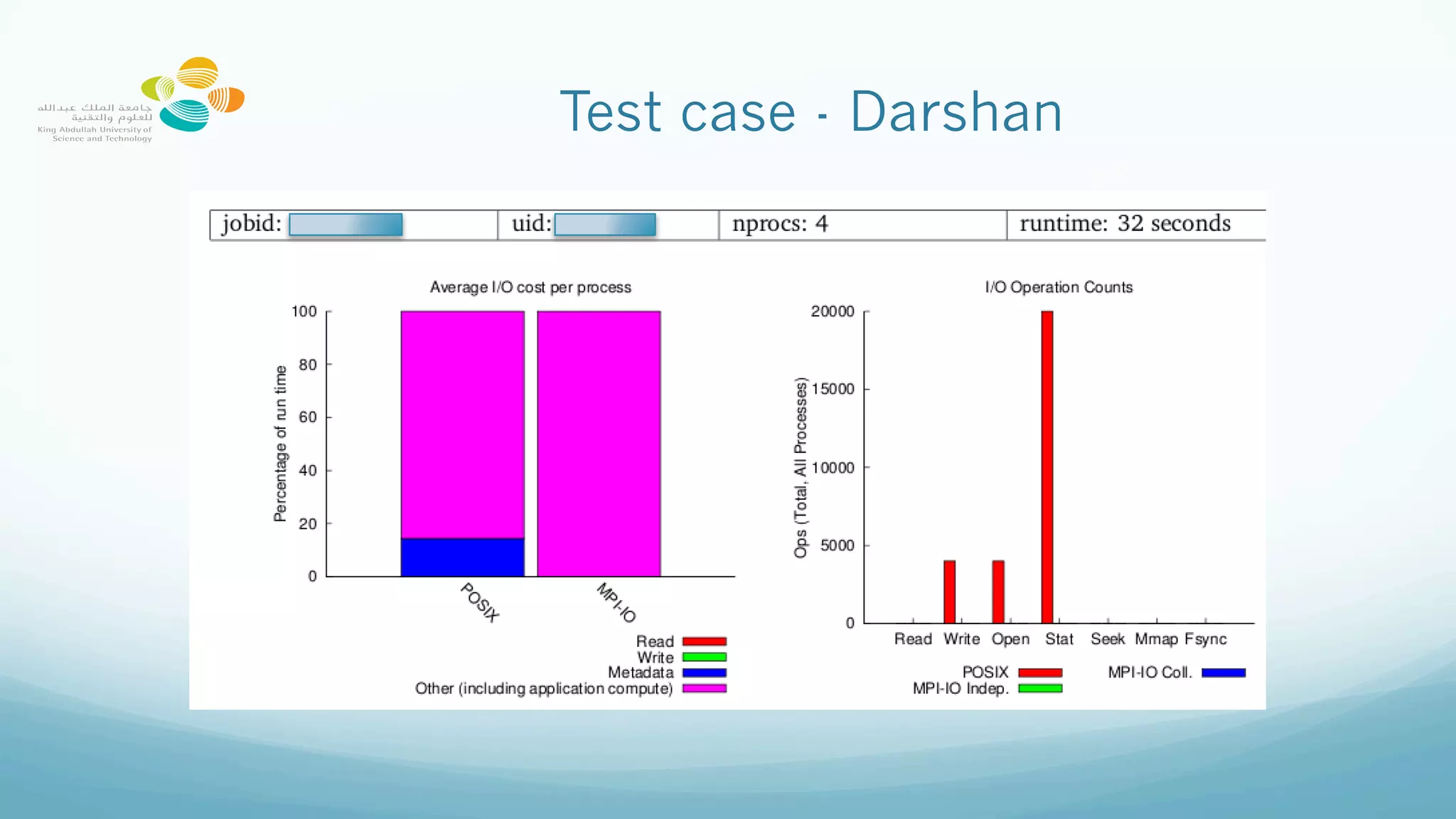

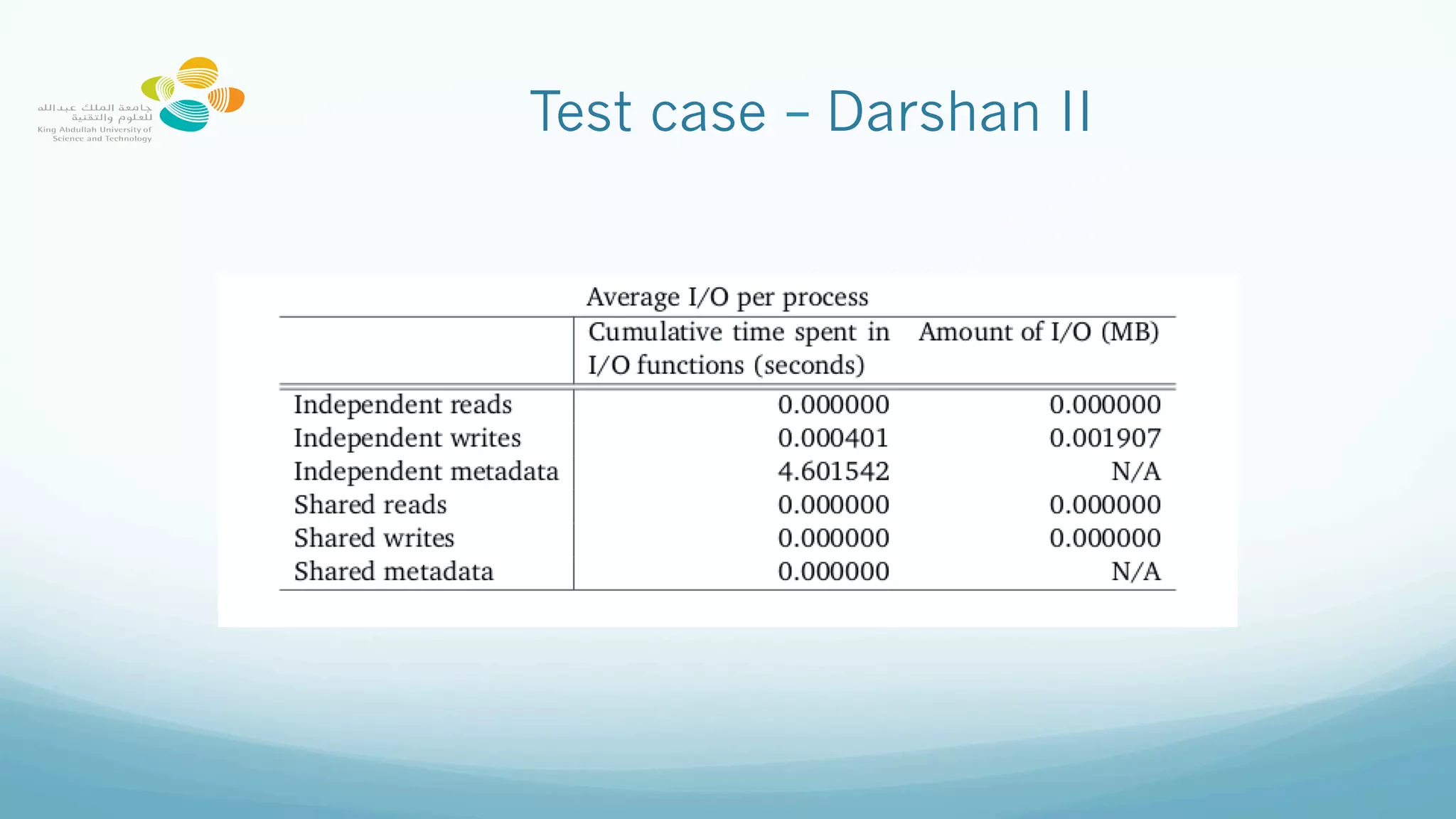

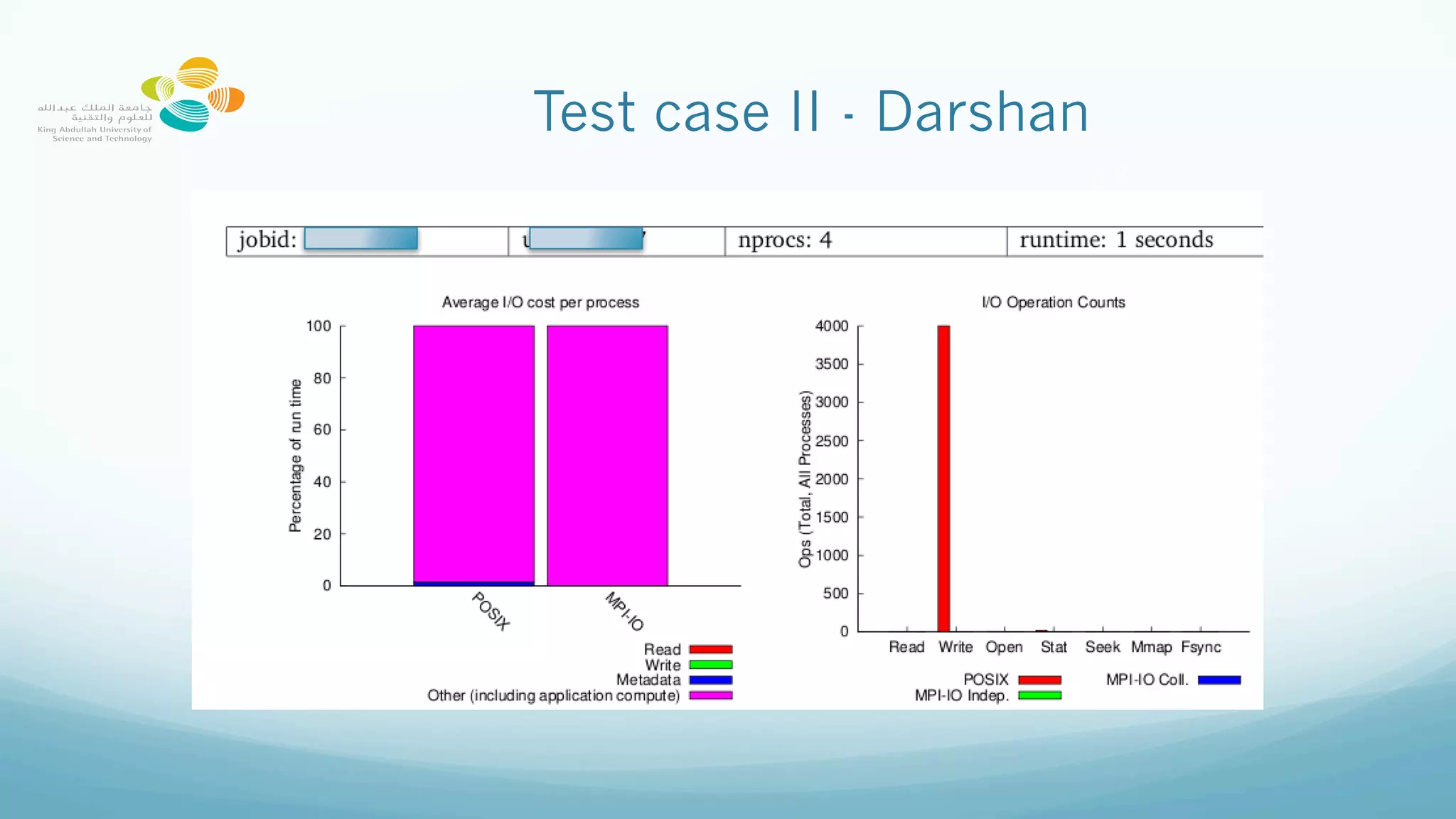

This document presents best practices for optimizing I/O performance on the Lustre parallel filesystem, focusing on file organization, striping, and appropriate use of MPI processes. Key recommendations include avoiding frequent access to many small files, using collective buffering for parallel I/O, and choosing stripe settings deliberately to improve data throughput. It concludes that maximizing performance is complex and that experimentation is needed to find the best configuration.
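To make the striping recommendation concrete, here is a minimal sketch of how a striped file's byte offsets map onto storage targets, assuming the plain round-robin (RAID-0 style) layout. The function names `stripe_target` and `targets_touched` are hypothetical helpers written for this illustration and are not part of any Lustre tool or API. The indices they return are positions within the file's own layout, not real OST numbers.

```python
MIB = 1024 * 1024


def stripe_target(offset: int, stripe_size: int, stripe_count: int) -> int:
    """Return the layout-relative index of the target holding byte `offset`.

    Assumes a simple round-robin layout: consecutive chunks of
    `stripe_size` bytes are placed on targets 0, 1, ..., stripe_count - 1,
    then wrap around to 0 again.
    """
    if offset < 0 or stripe_size <= 0 or stripe_count <= 0:
        raise ValueError("offset must be >= 0; stripe_size and stripe_count must be > 0")
    return (offset // stripe_size) % stripe_count


def targets_touched(offset: int, length: int, stripe_size: int, stripe_count: int) -> set:
    """Return the set of layout-relative targets touched by one contiguous access."""
    if offset < 0 or stripe_size <= 0 or stripe_count <= 0:
        raise ValueError("offset must be >= 0; stripe_size and stripe_count must be > 0")
    if length <= 0:
        return set()
    first_chunk = offset // stripe_size
    last_chunk = (offset + length - 1) // stripe_size
    n_chunks = last_chunk - first_chunk + 1
    if n_chunks >= stripe_count:
        # The access spans at least one full round, so every target is hit.
        return set(range(stripe_count))
    return {(first_chunk + i) % stripe_count for i in range(n_chunks)}


if __name__ == "__main__":
    # A 1 MiB write aligned to a 1 MiB stripe boundary stays on one target.
    print(targets_touched(0, MIB, MIB, 4))
    # The same write shifted by half a stripe straddles two targets.
    print(targets_touched(MIB // 2, MIB, MIB, 4))
```

Under these assumptions, an access that is aligned to the stripe size and no larger than one stripe stays on a single target, while the same access shifted off the boundary is split across two. This is one reason experimenting with stripe size and access alignment can pay off.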