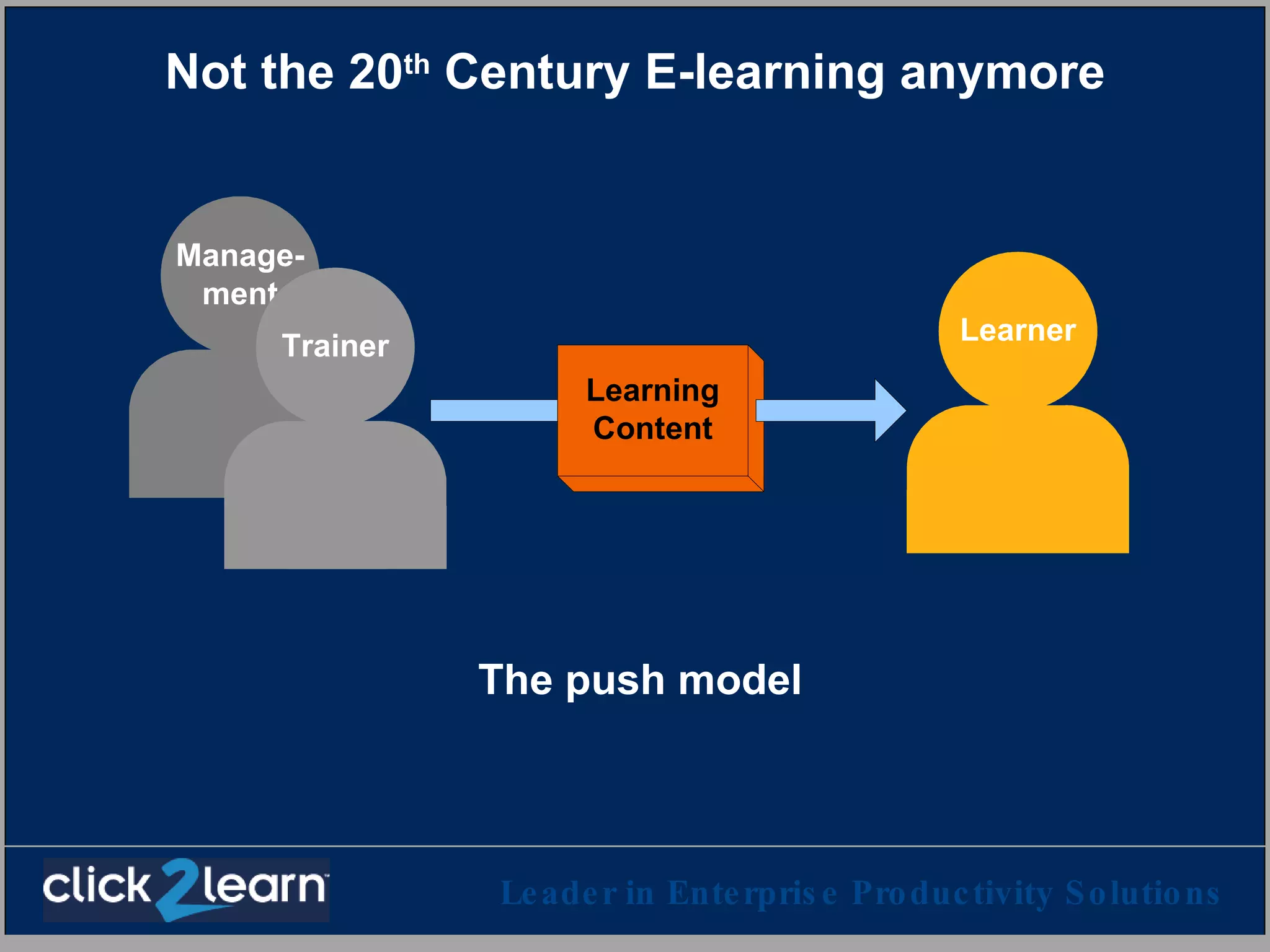

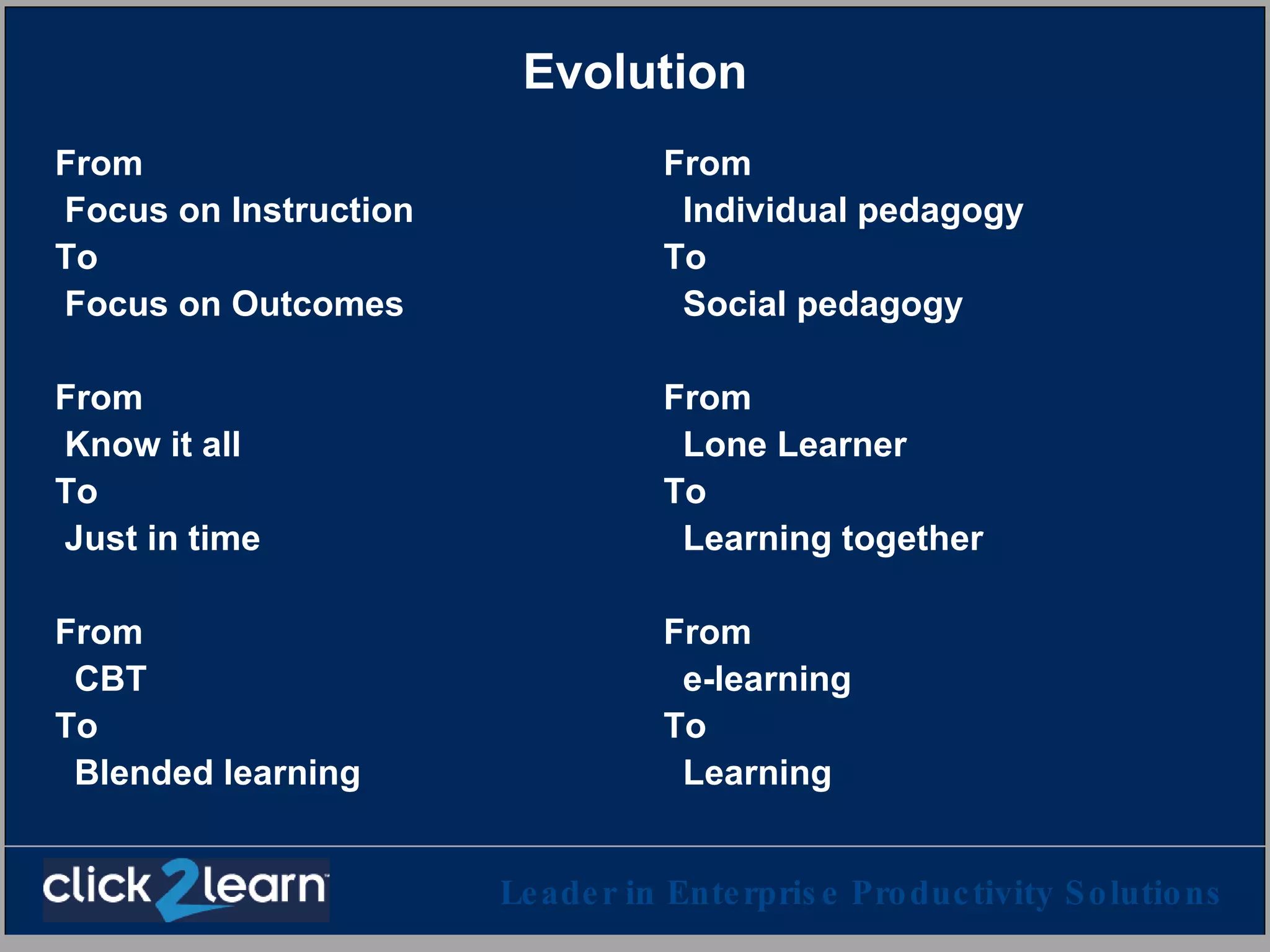

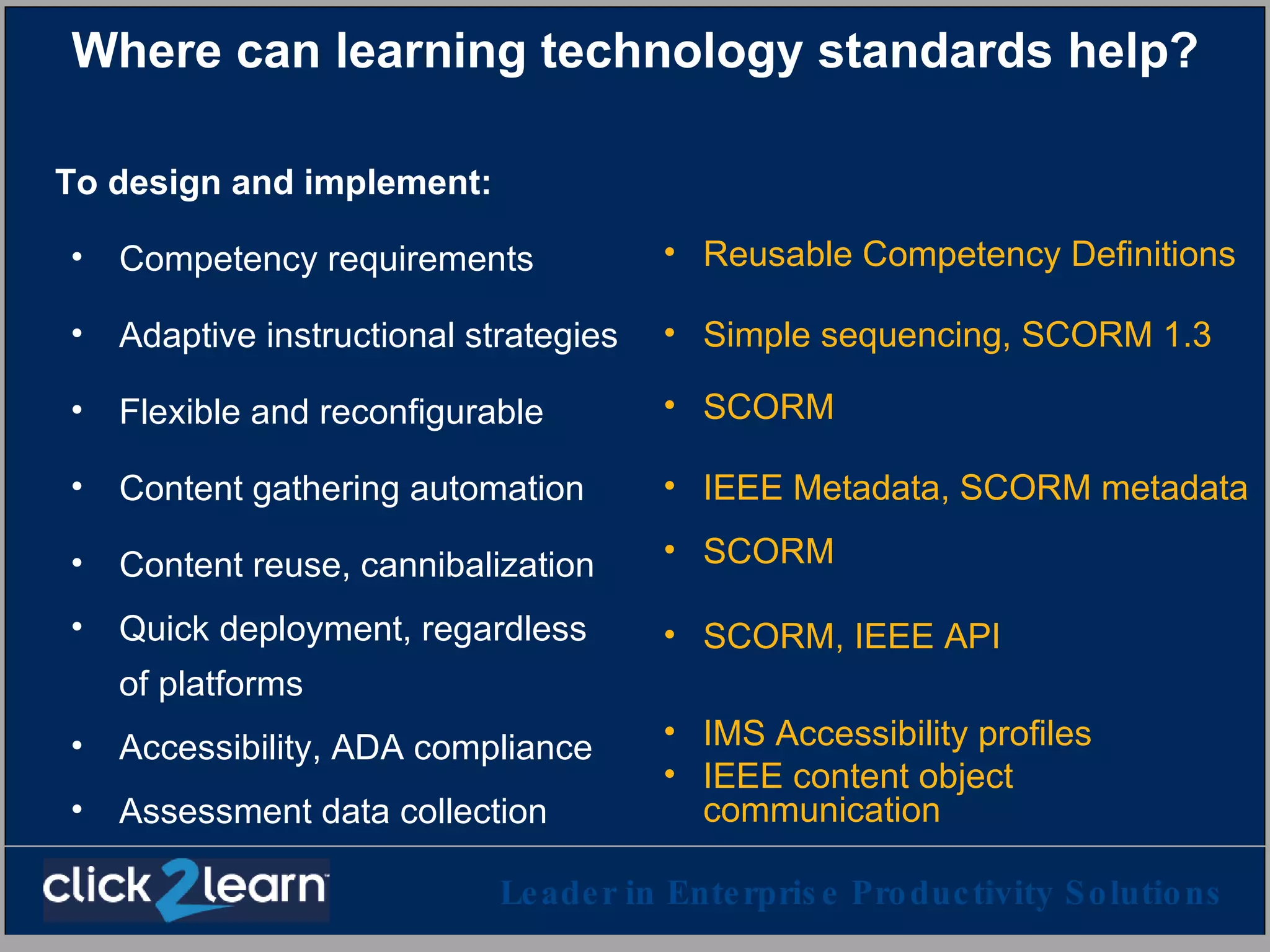

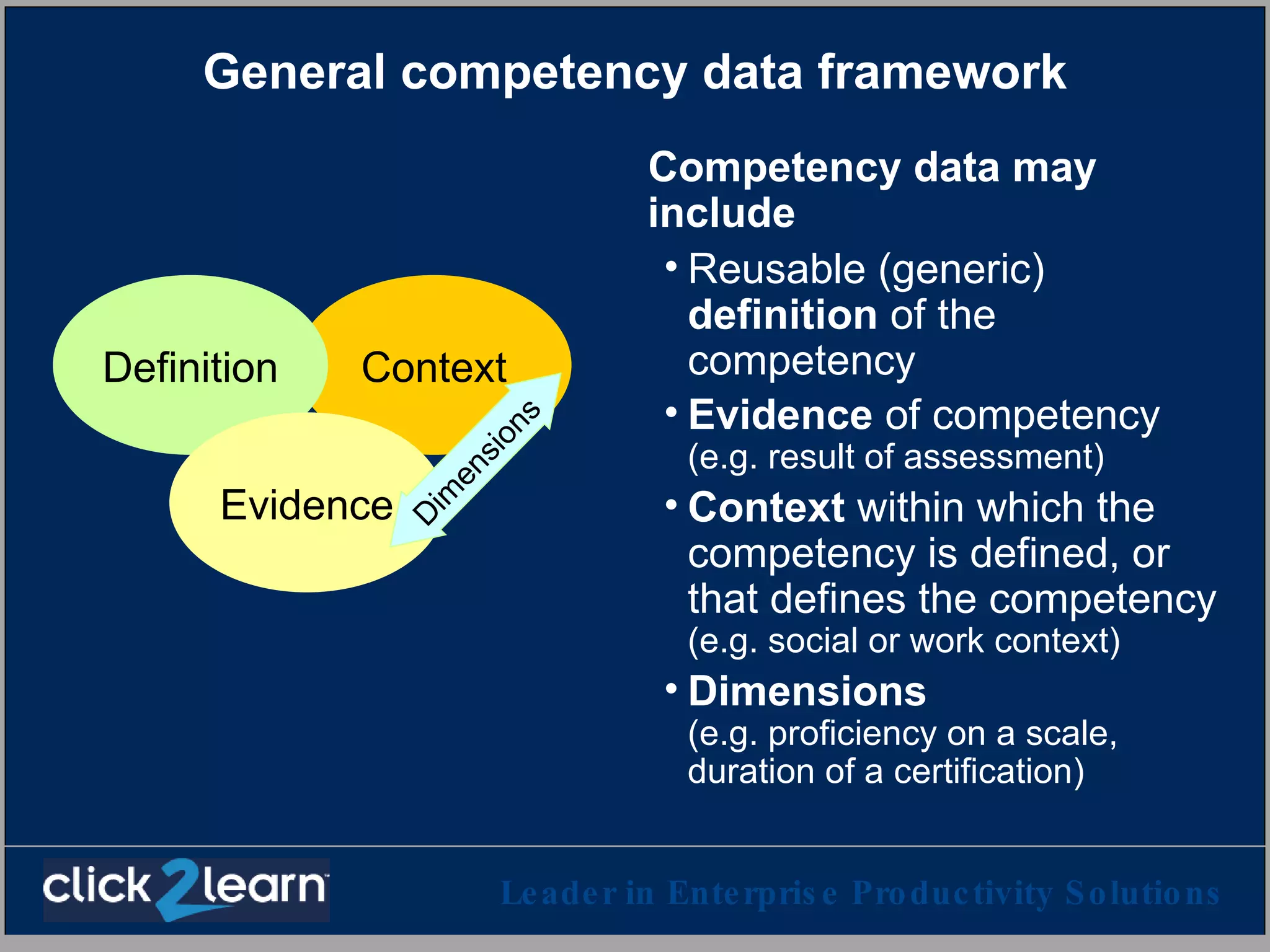

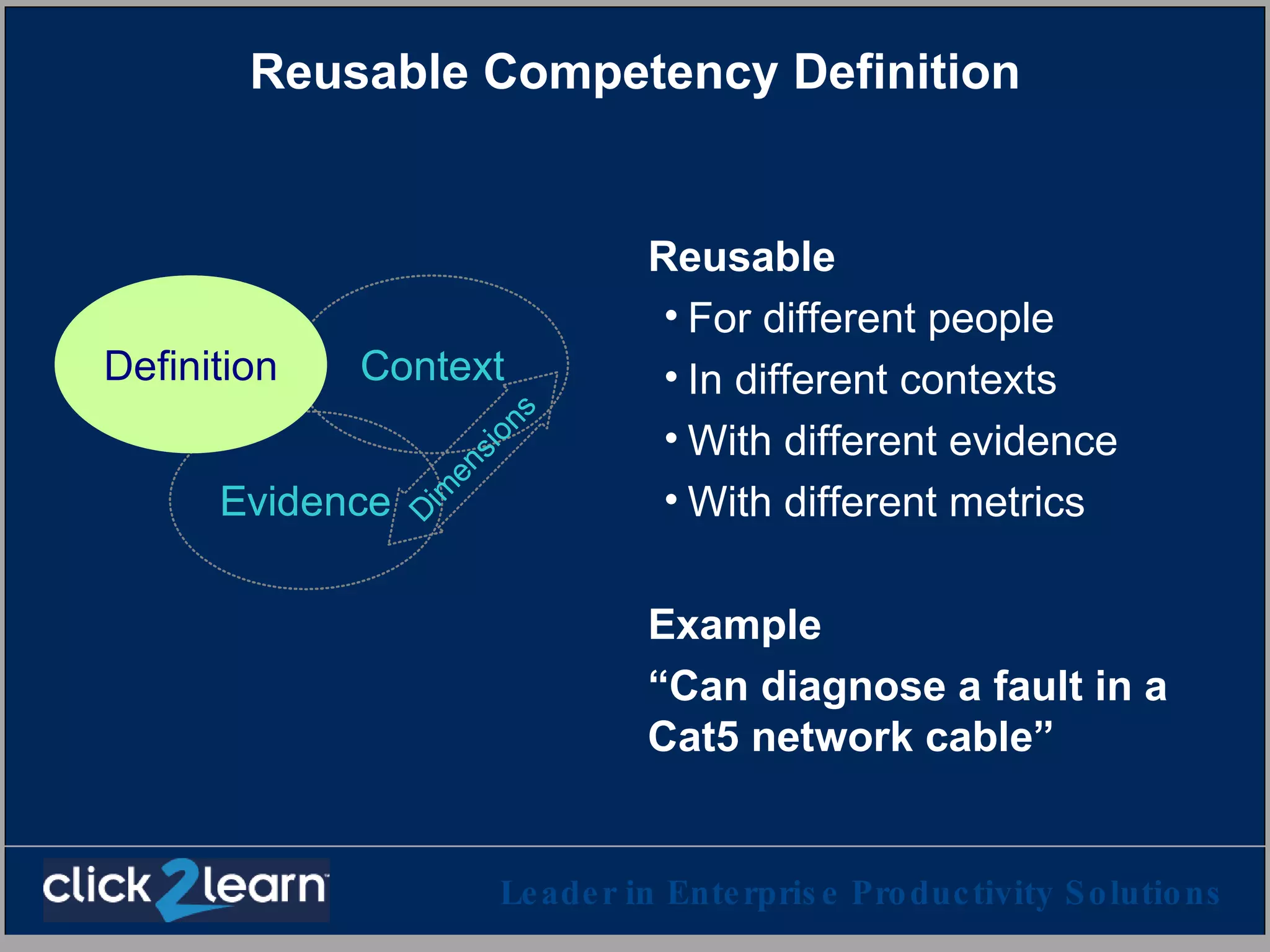

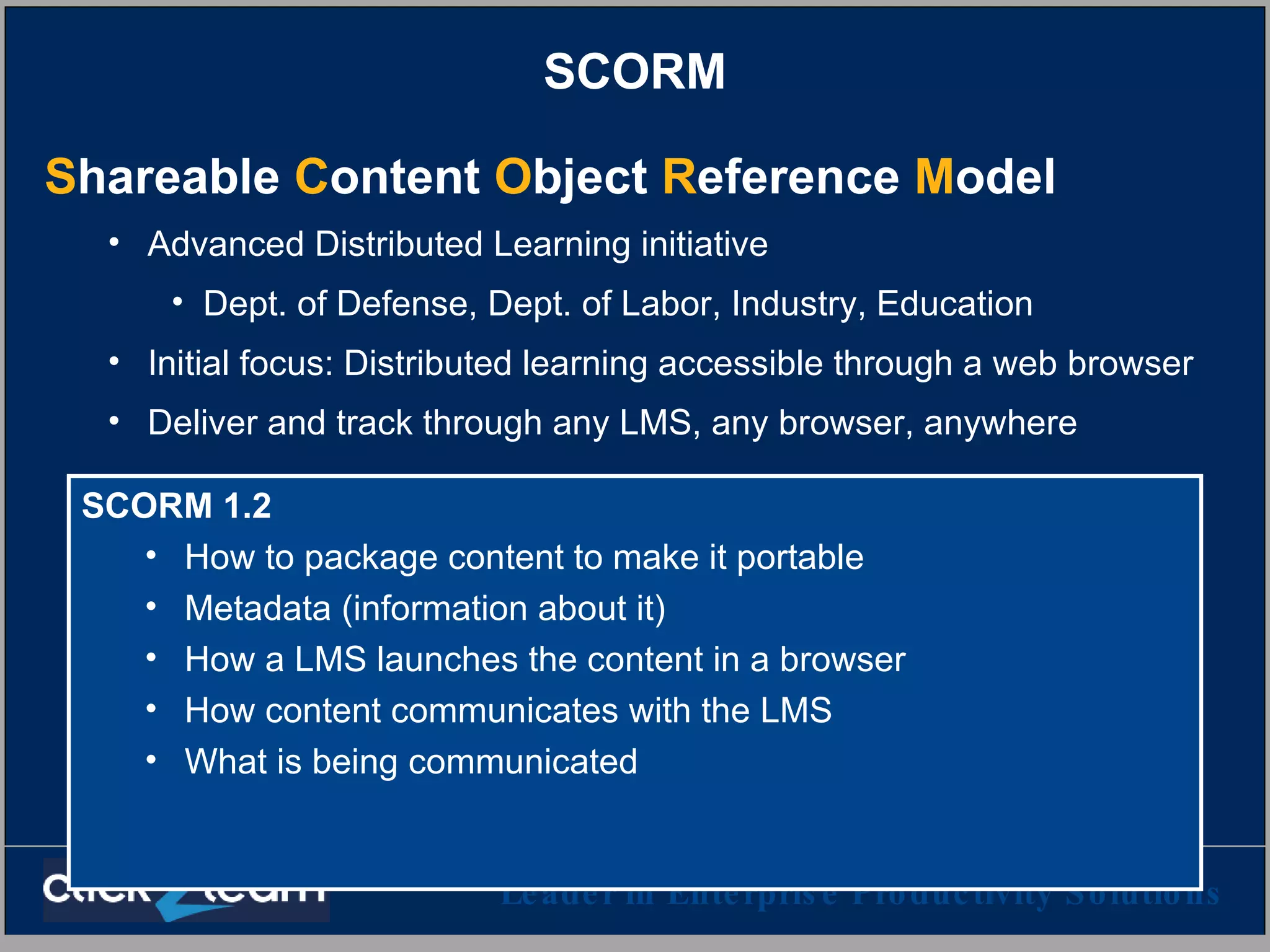

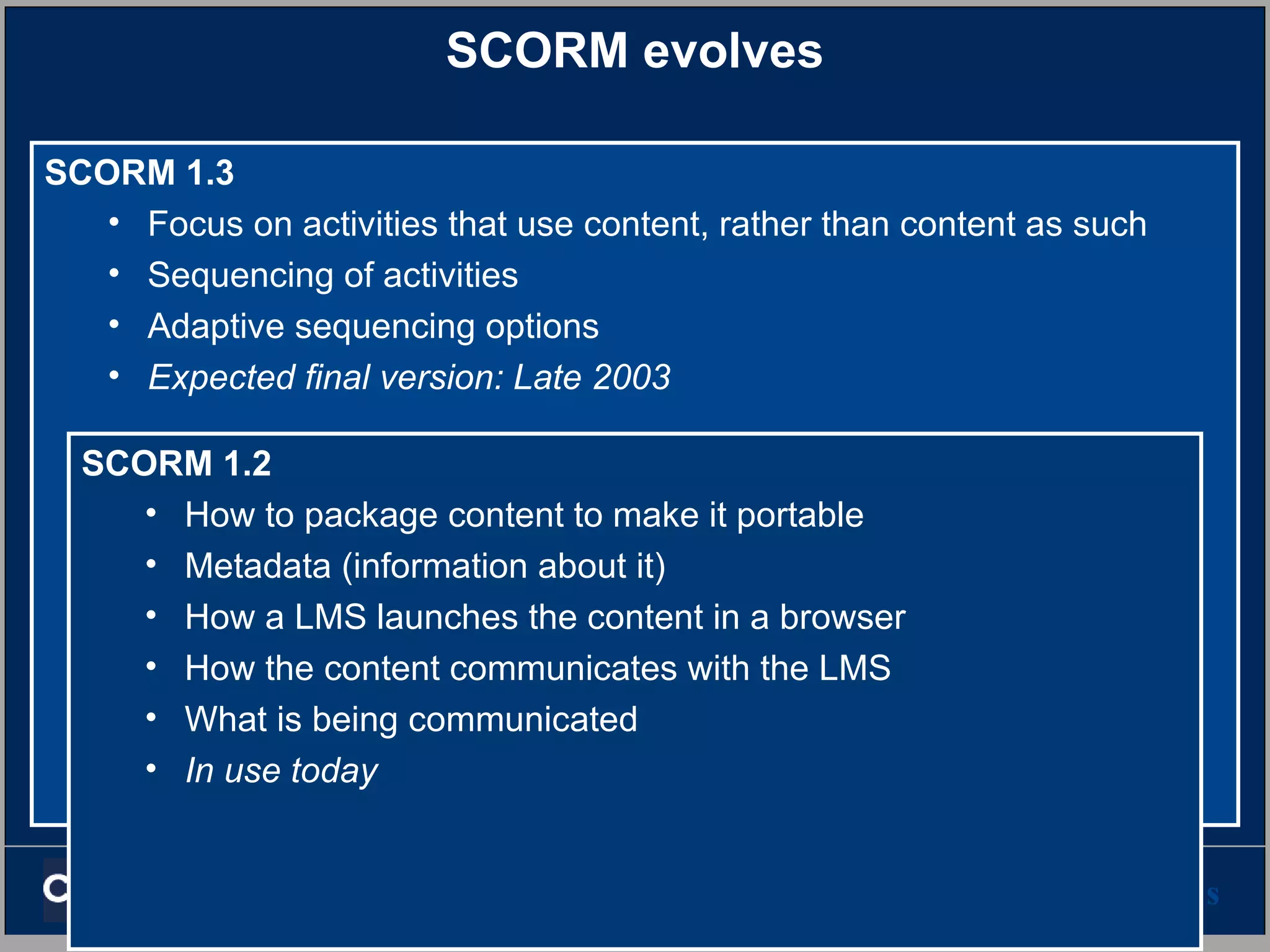

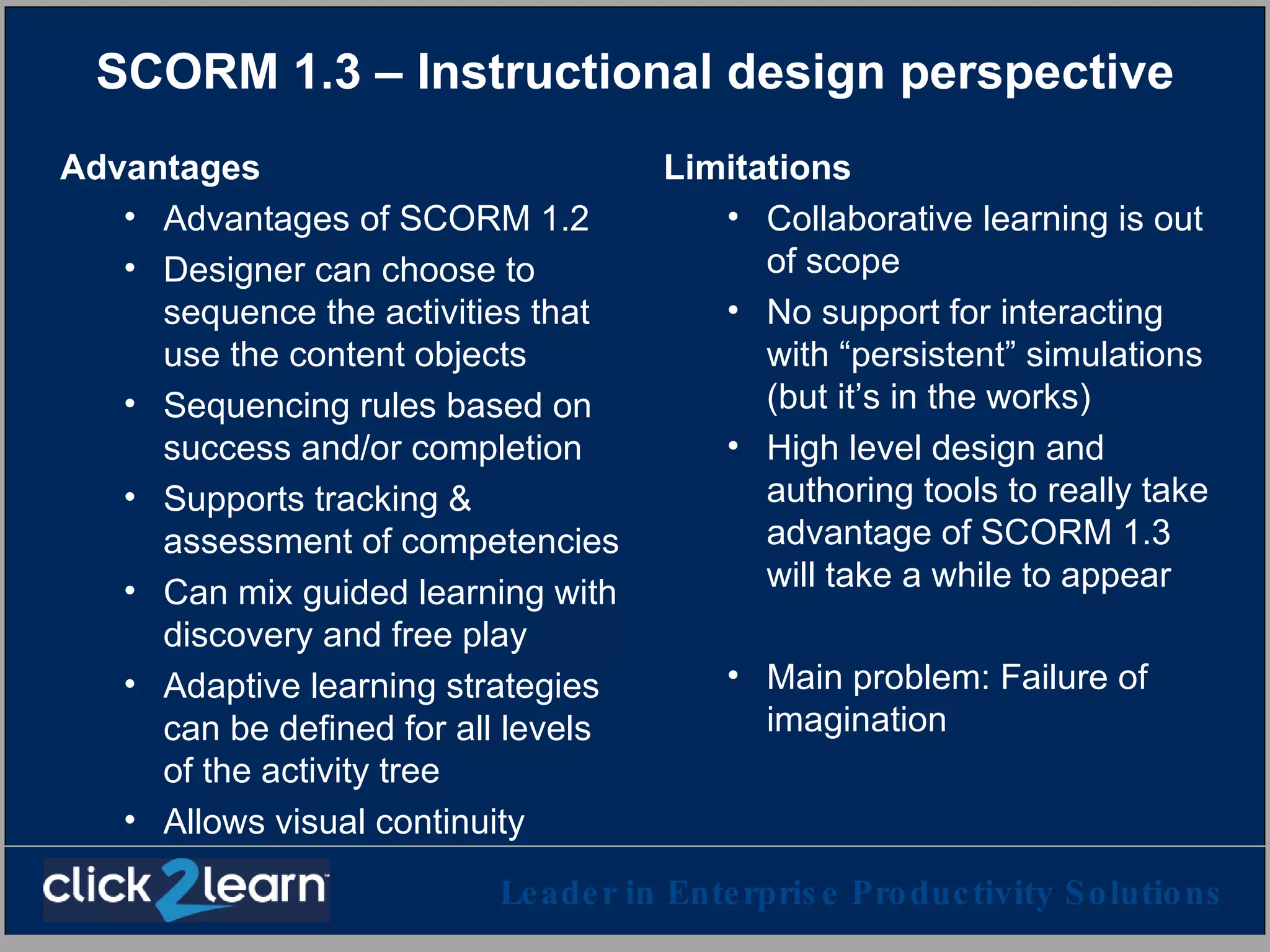

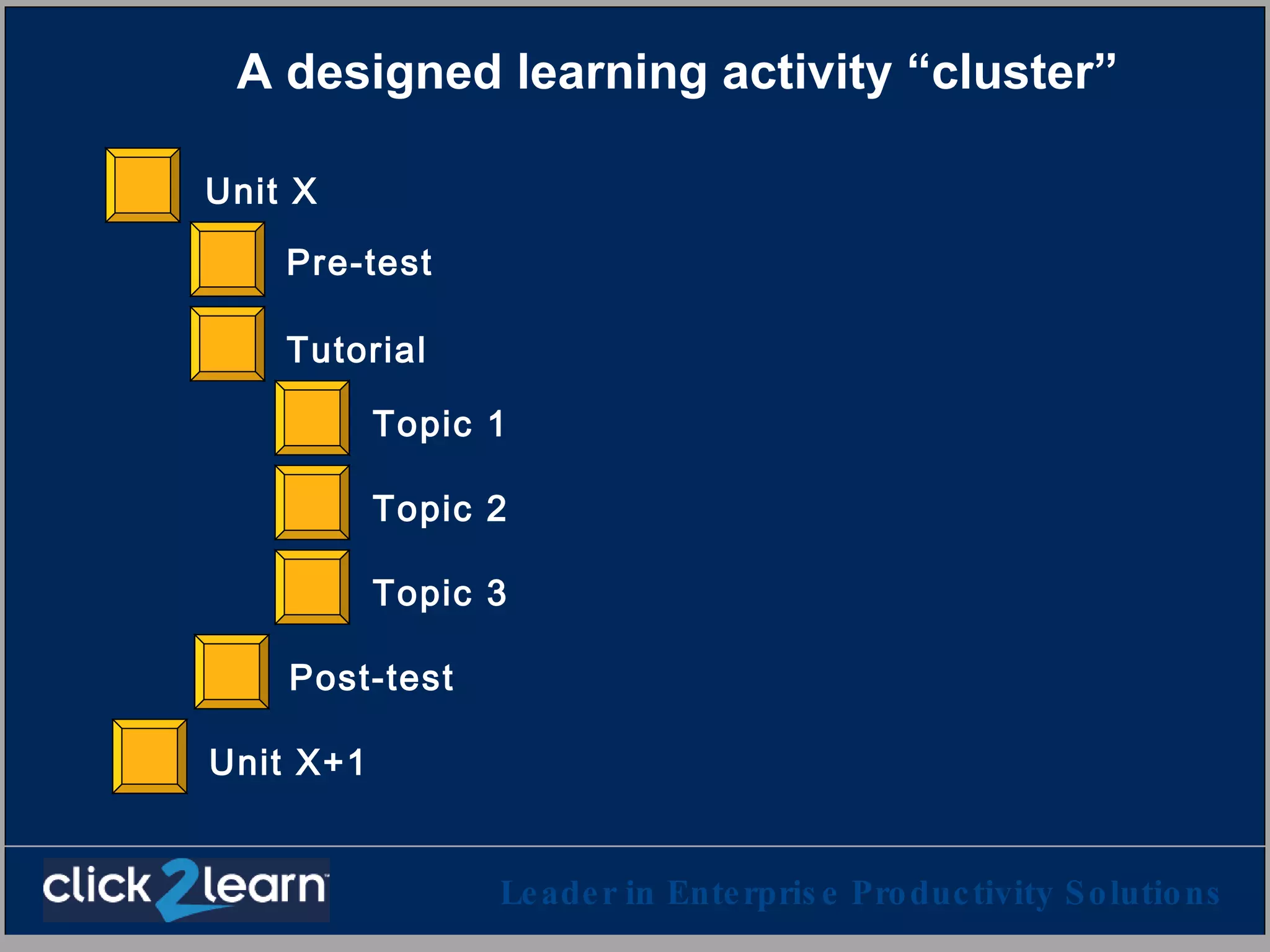

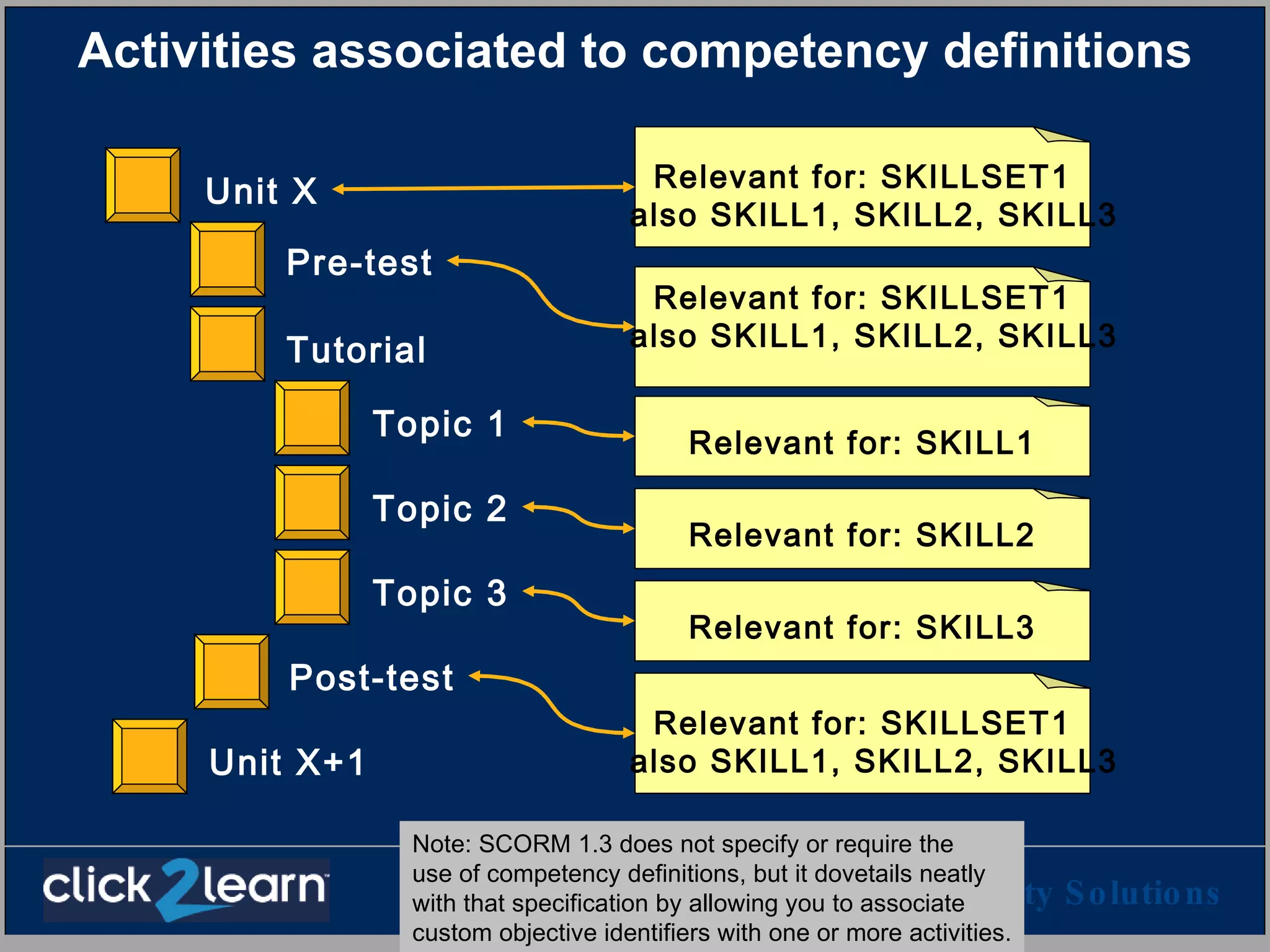

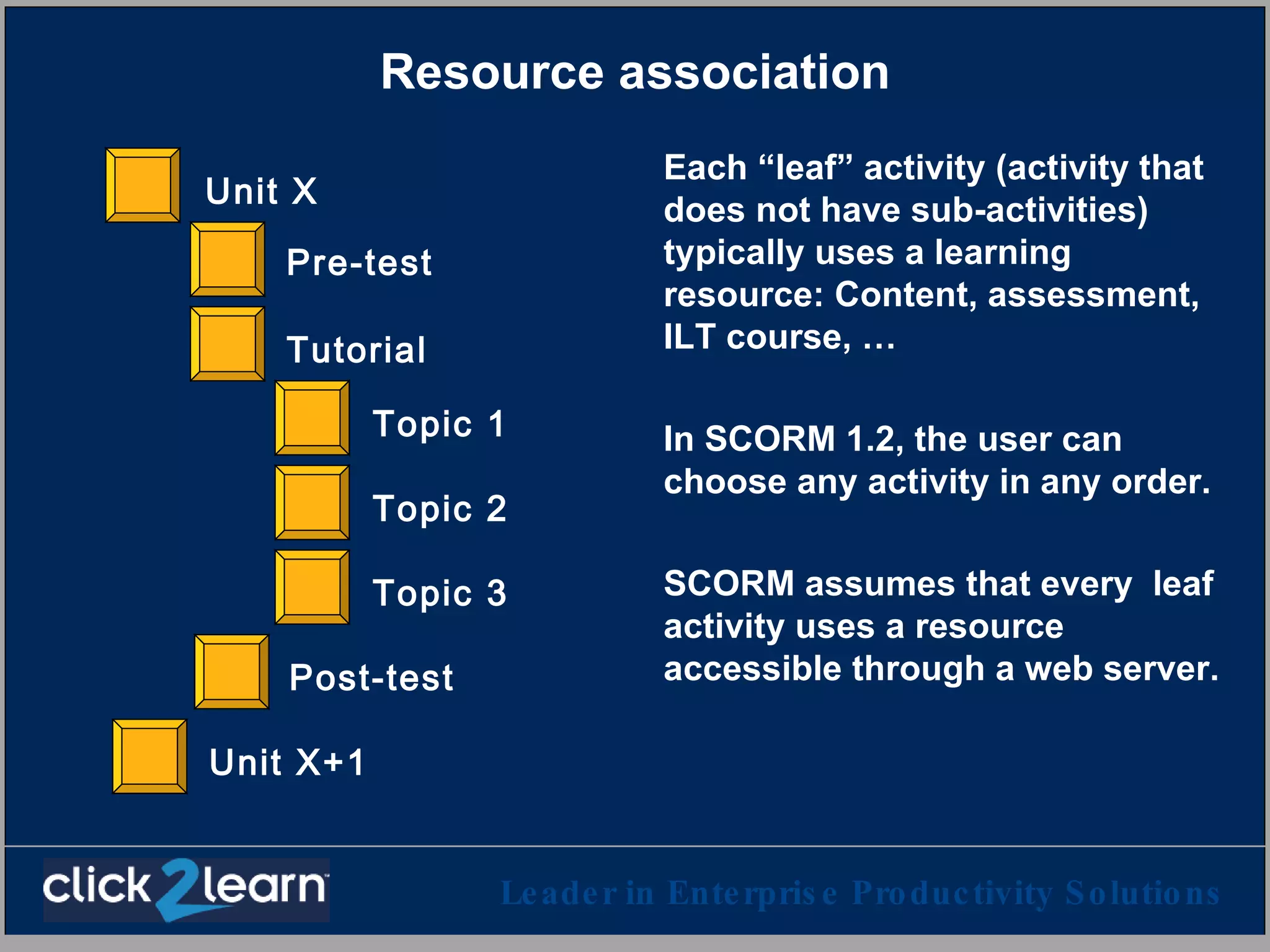

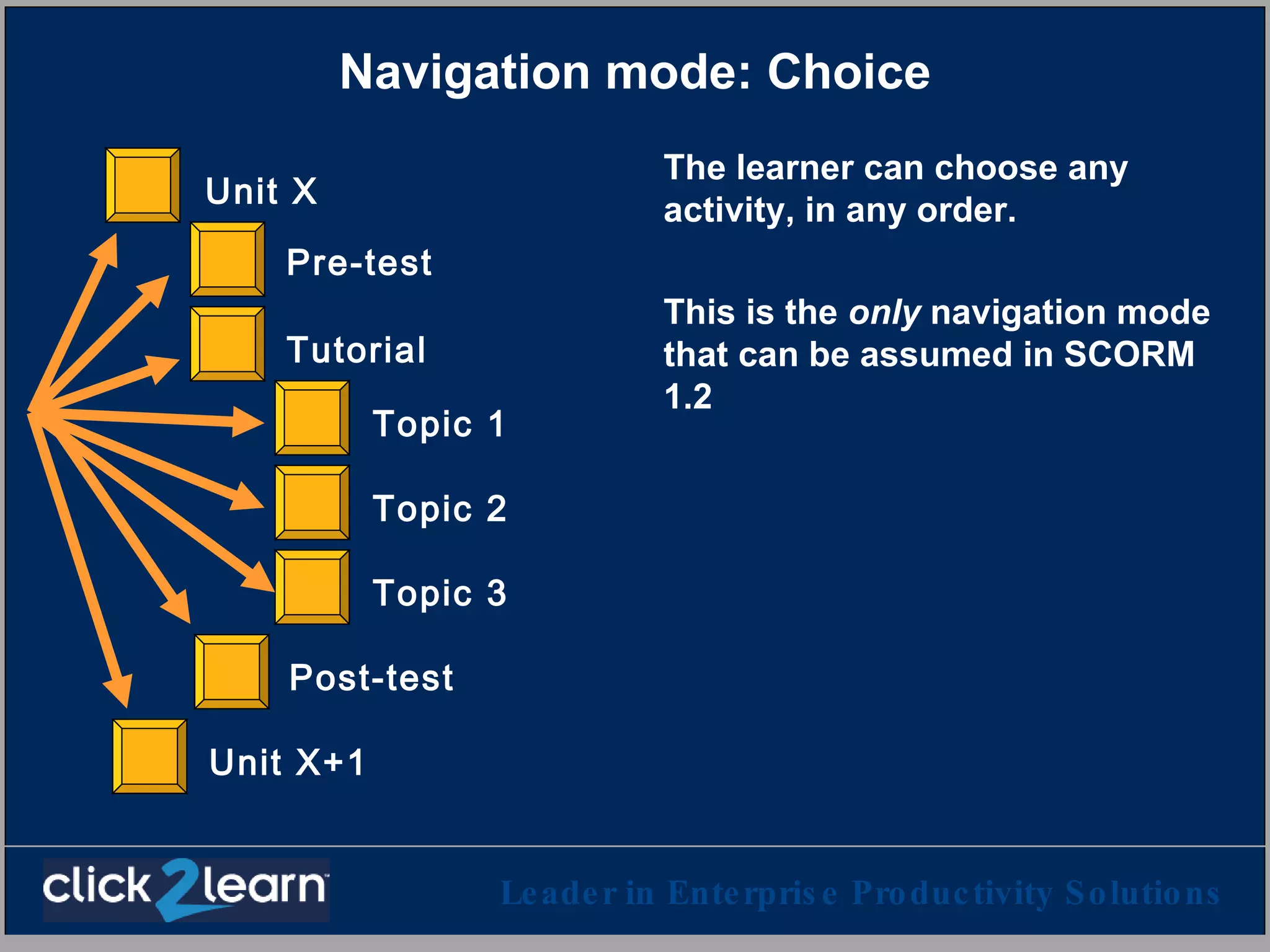

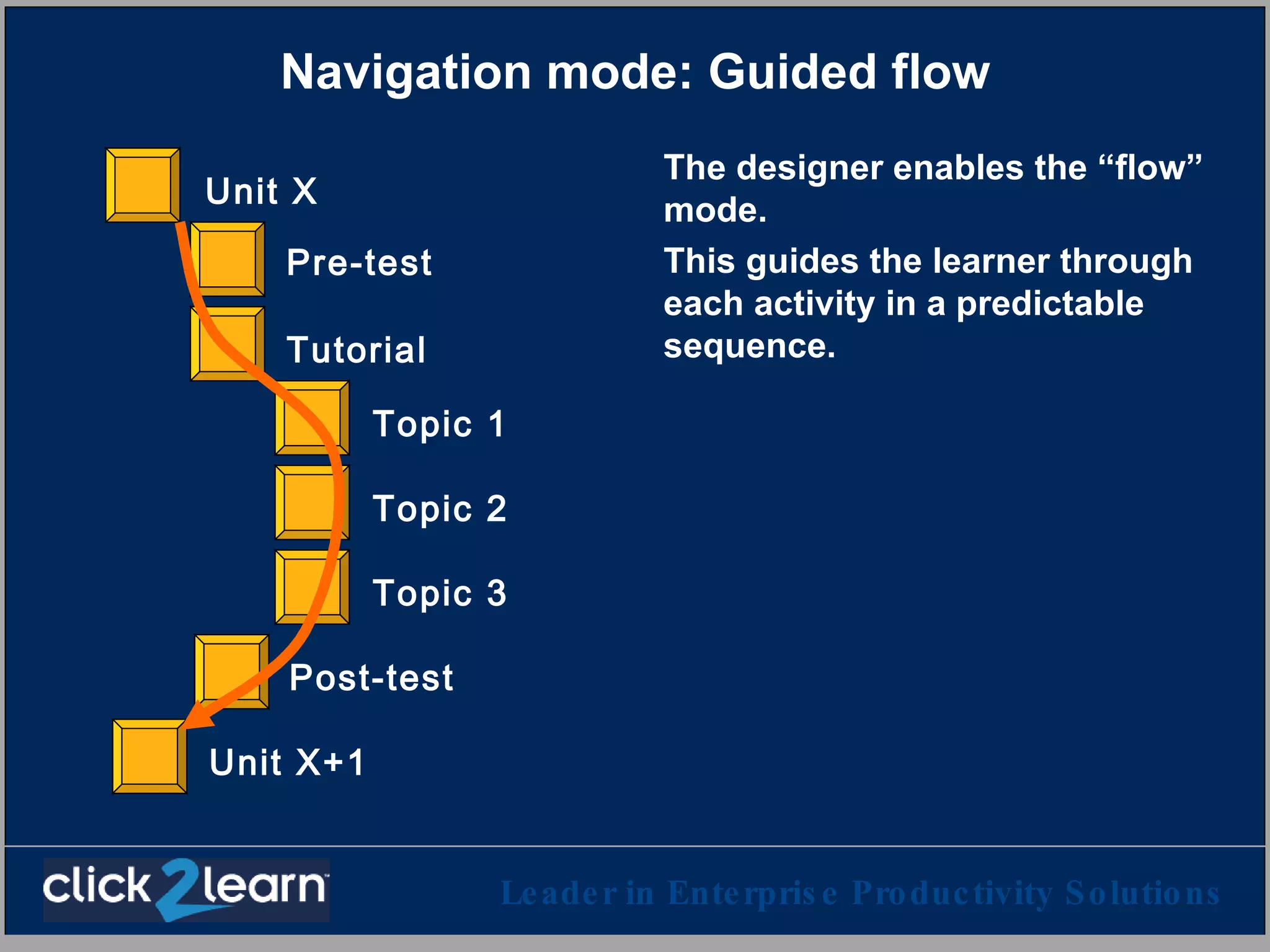

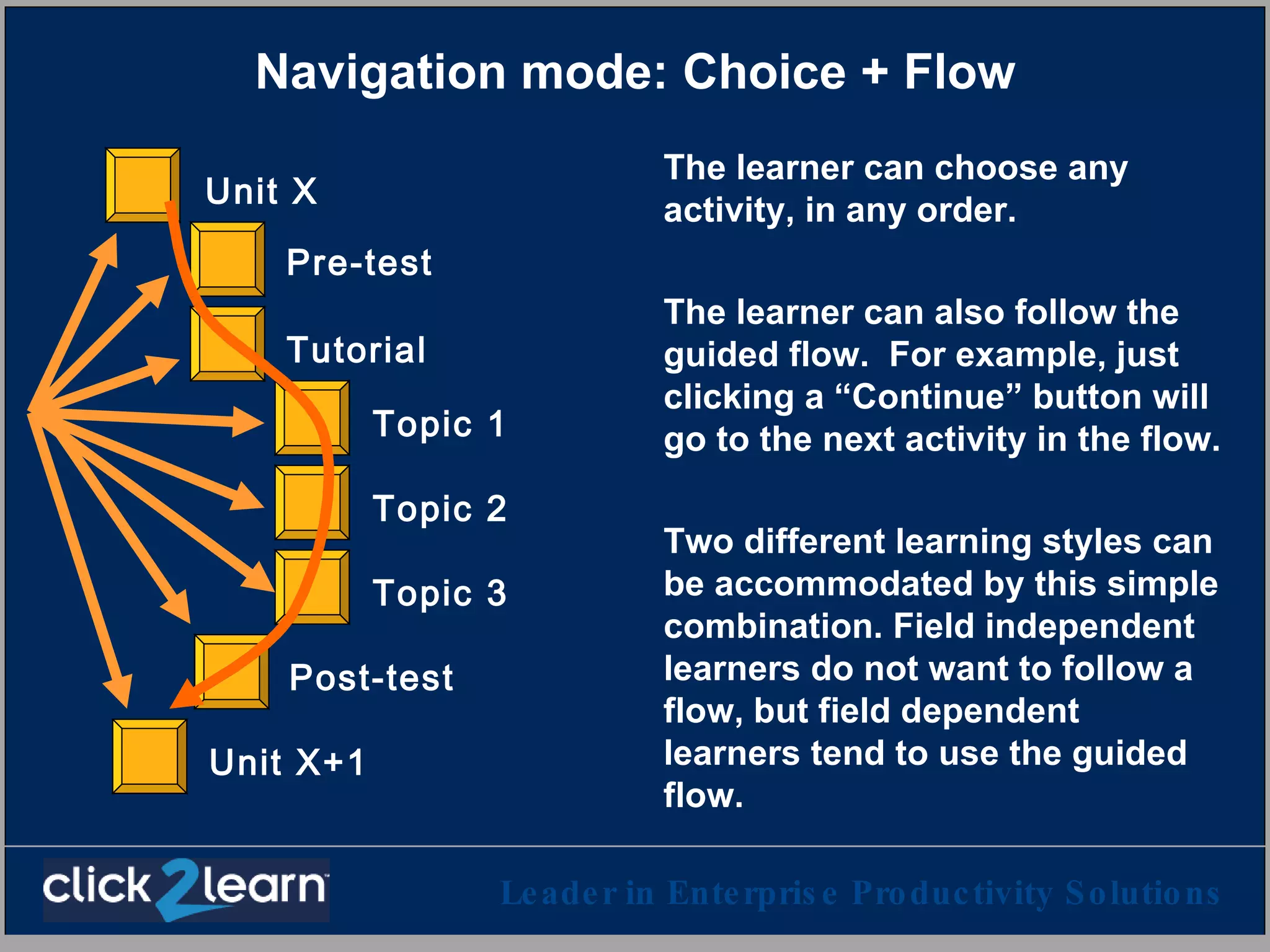

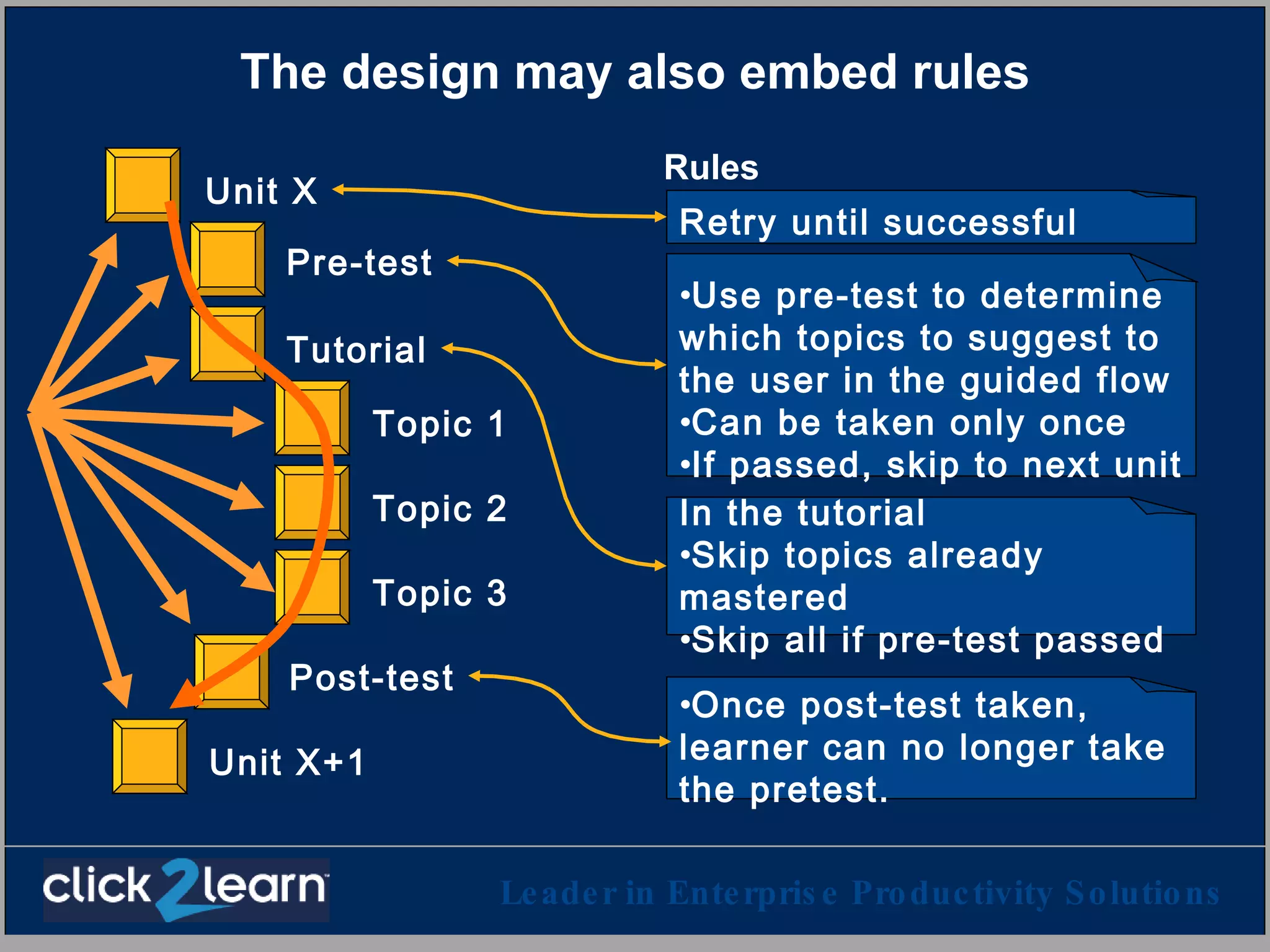

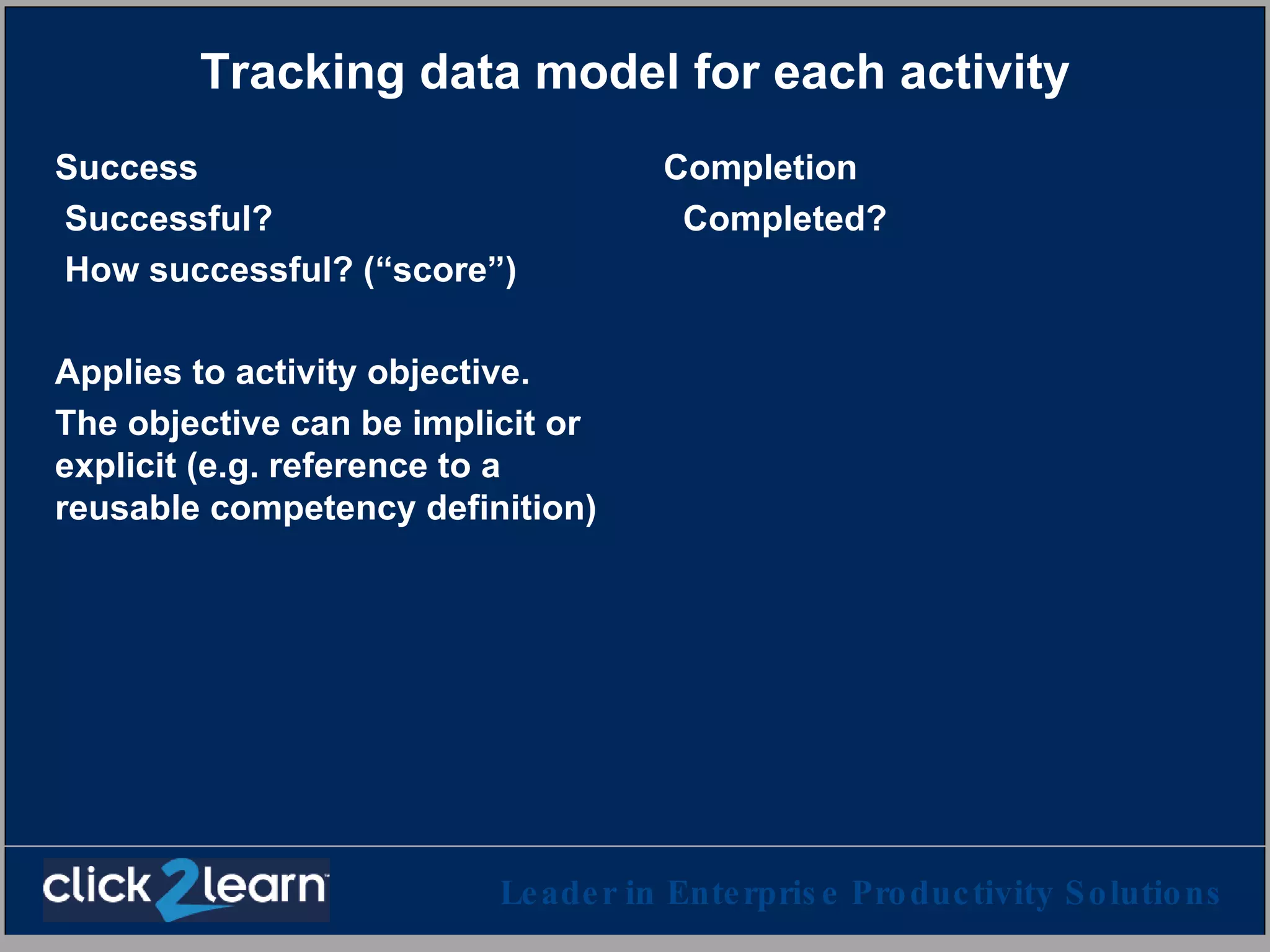

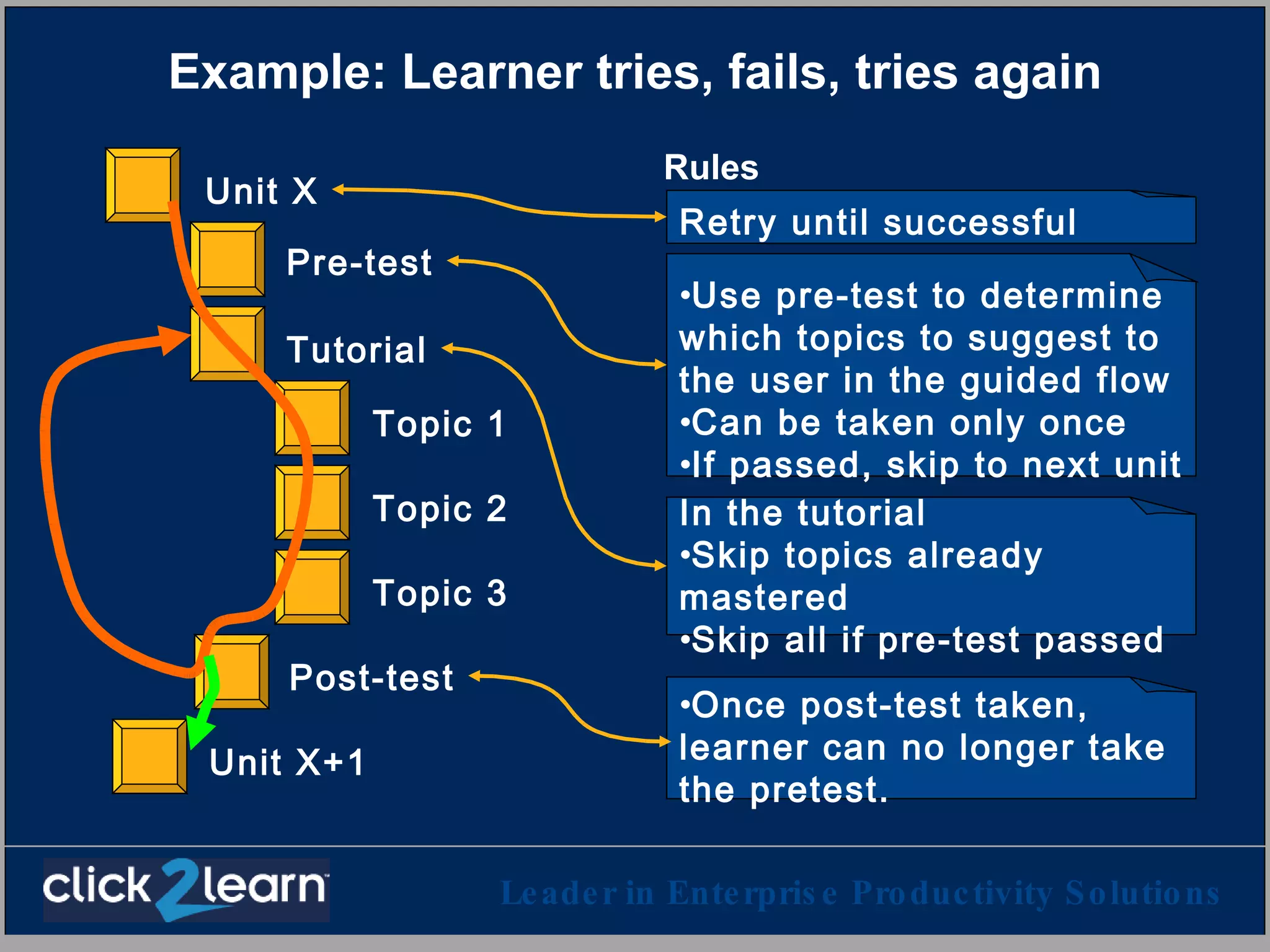

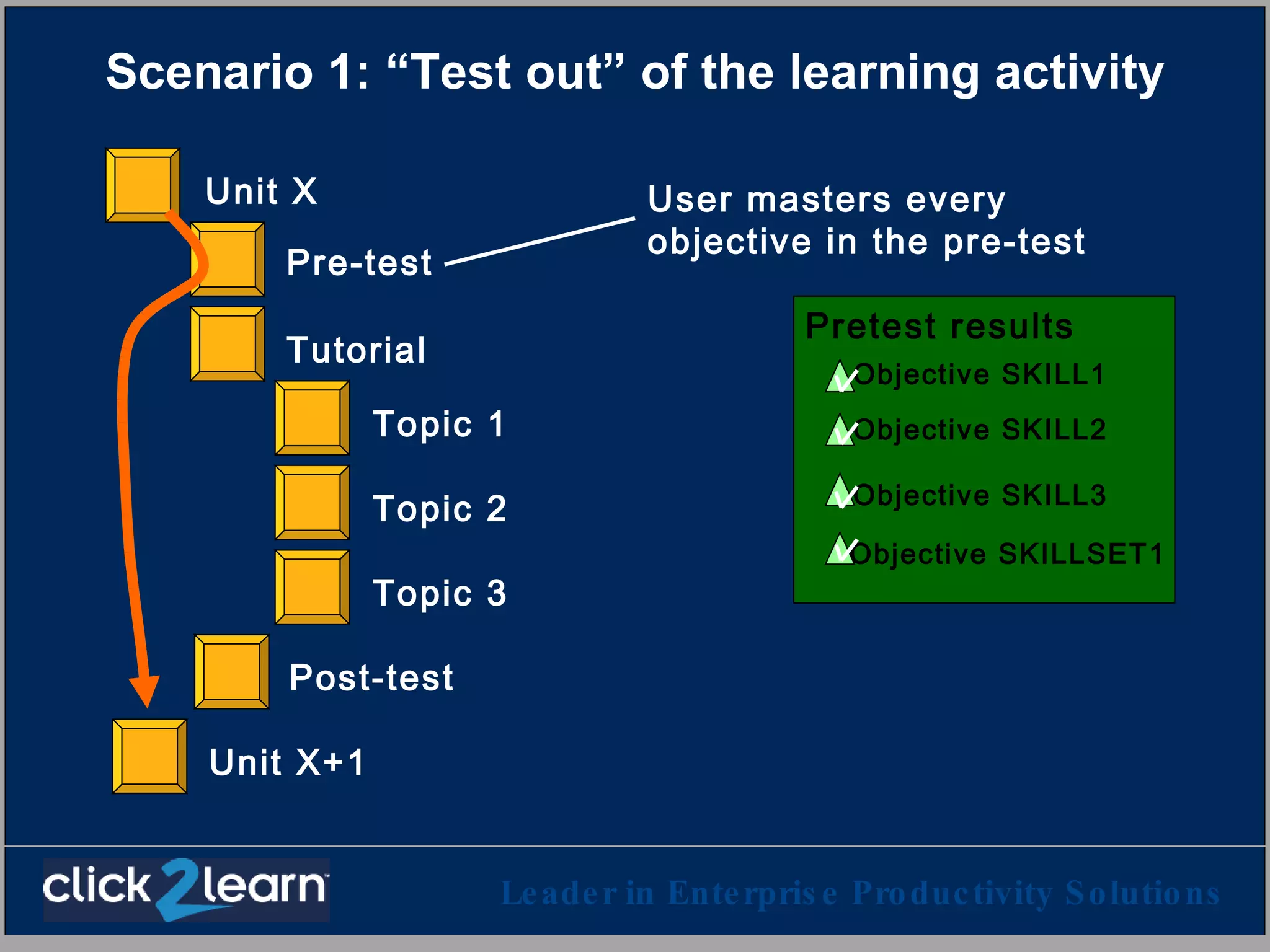

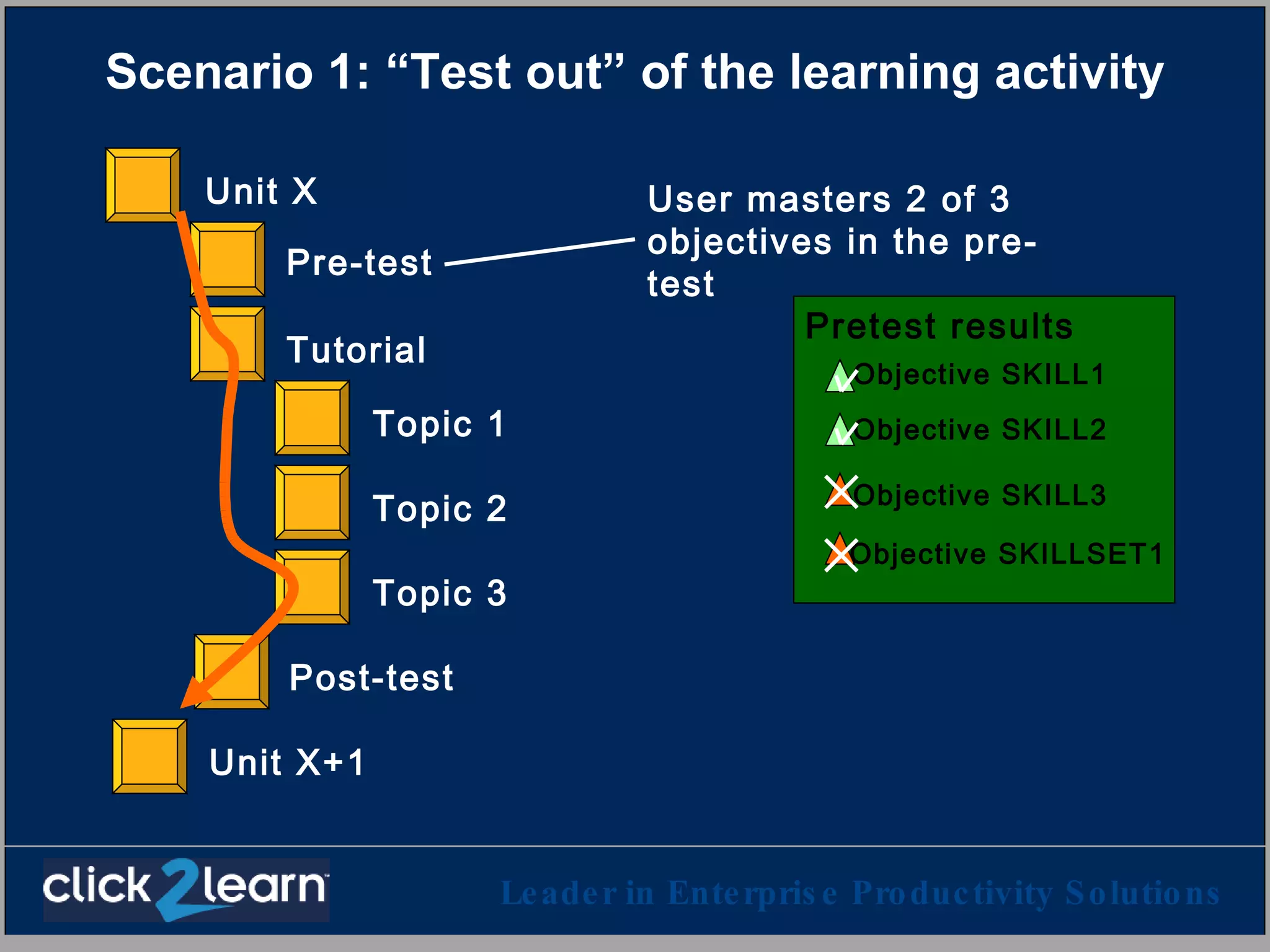

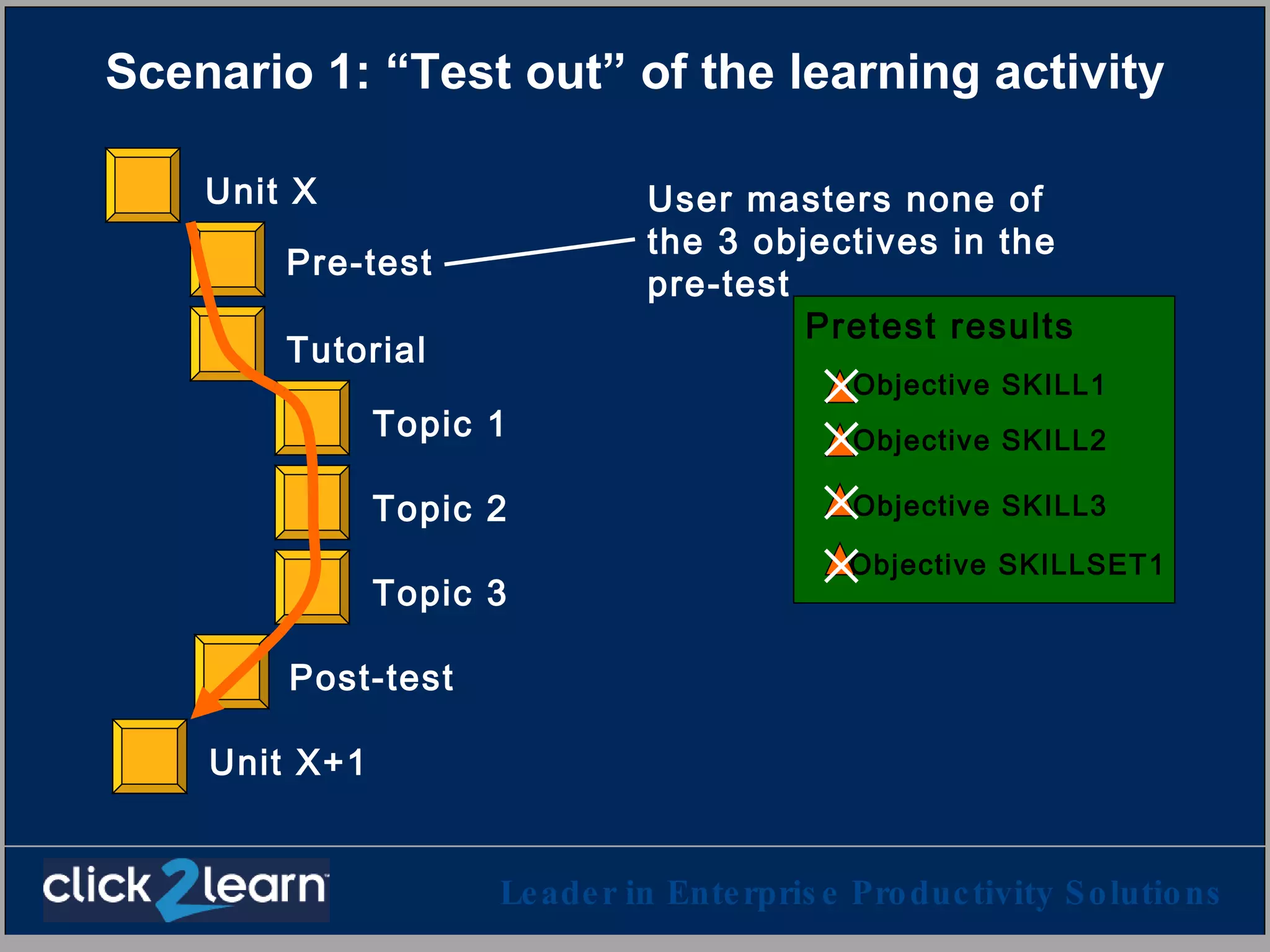

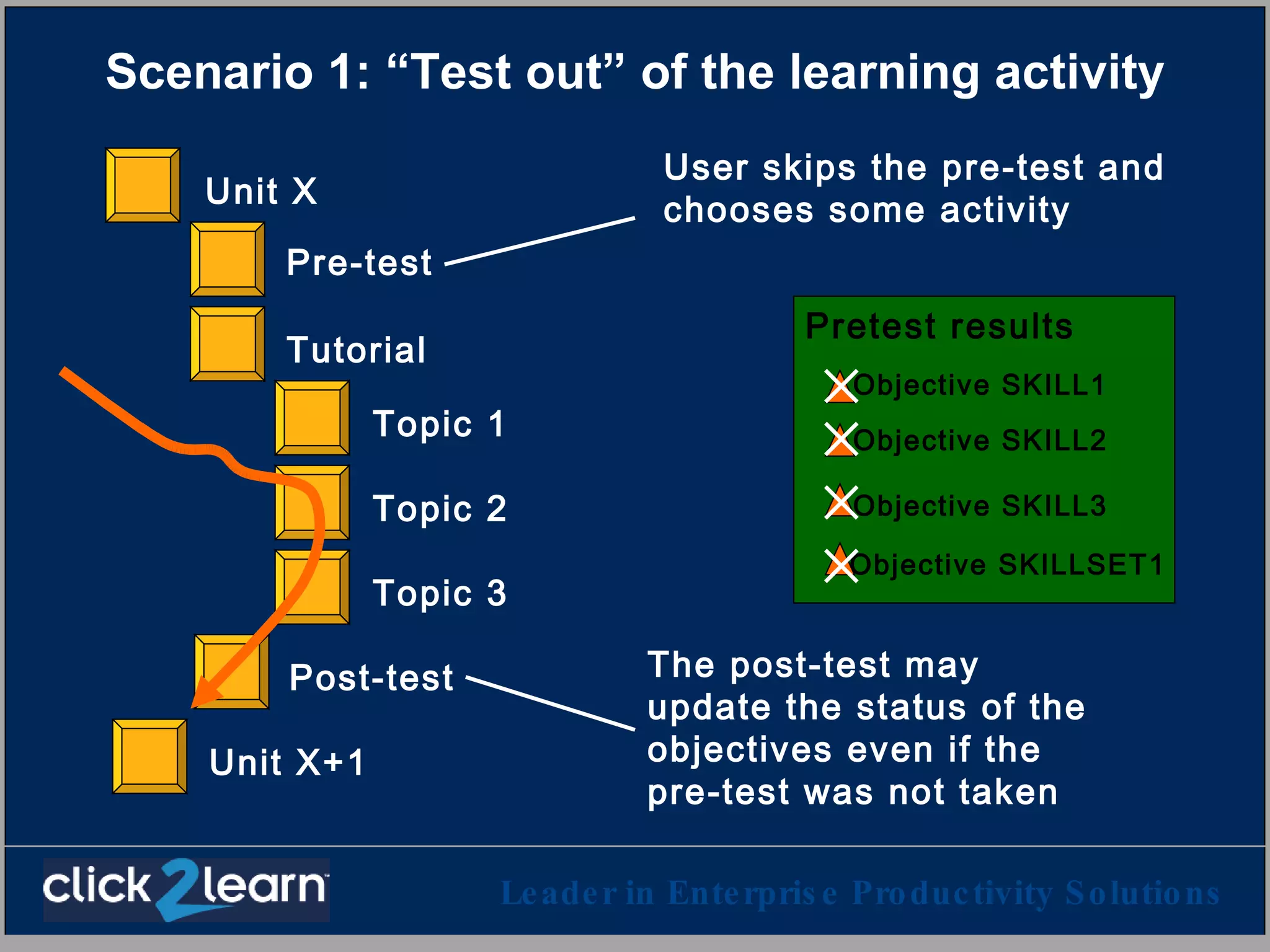

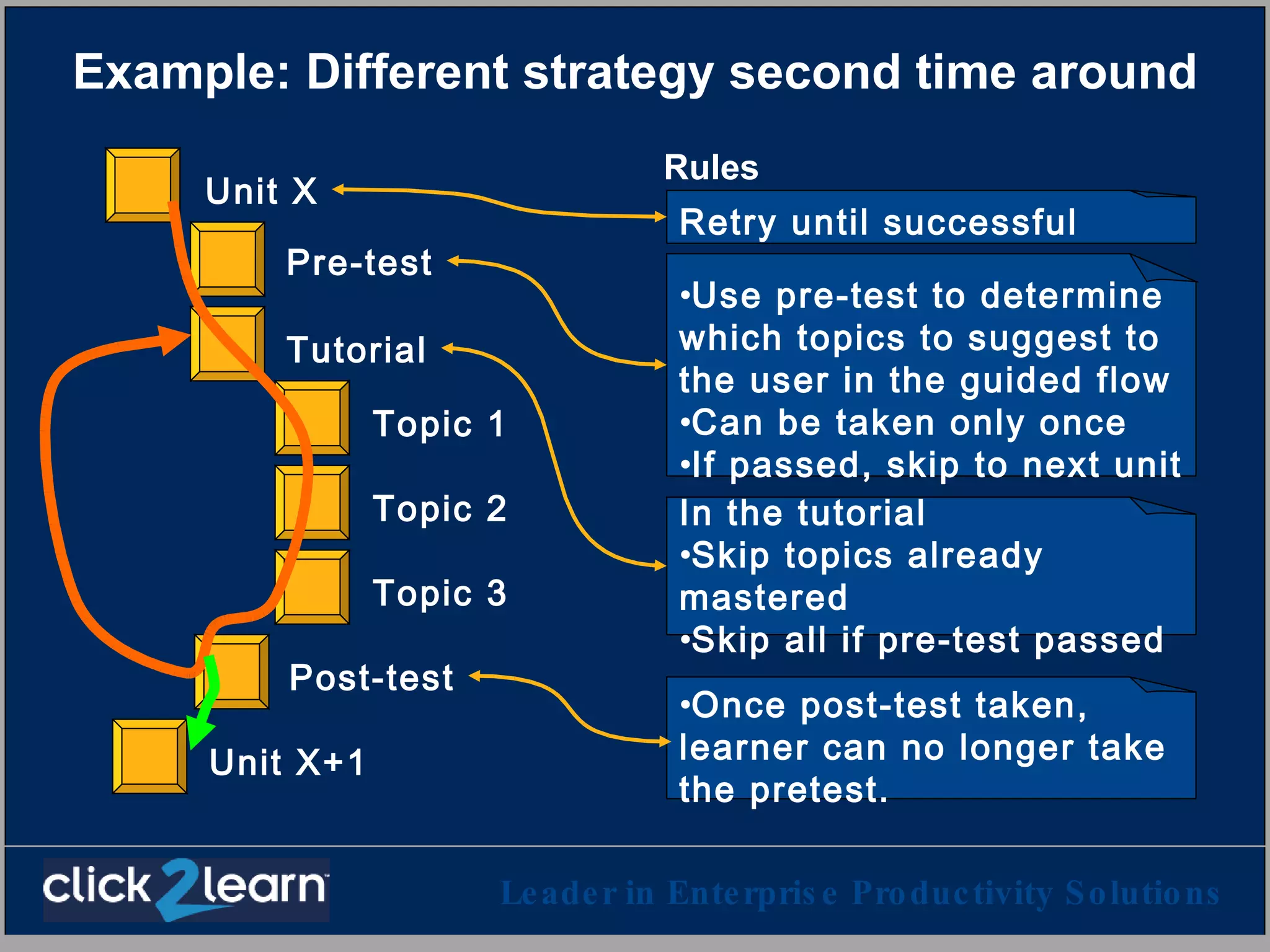

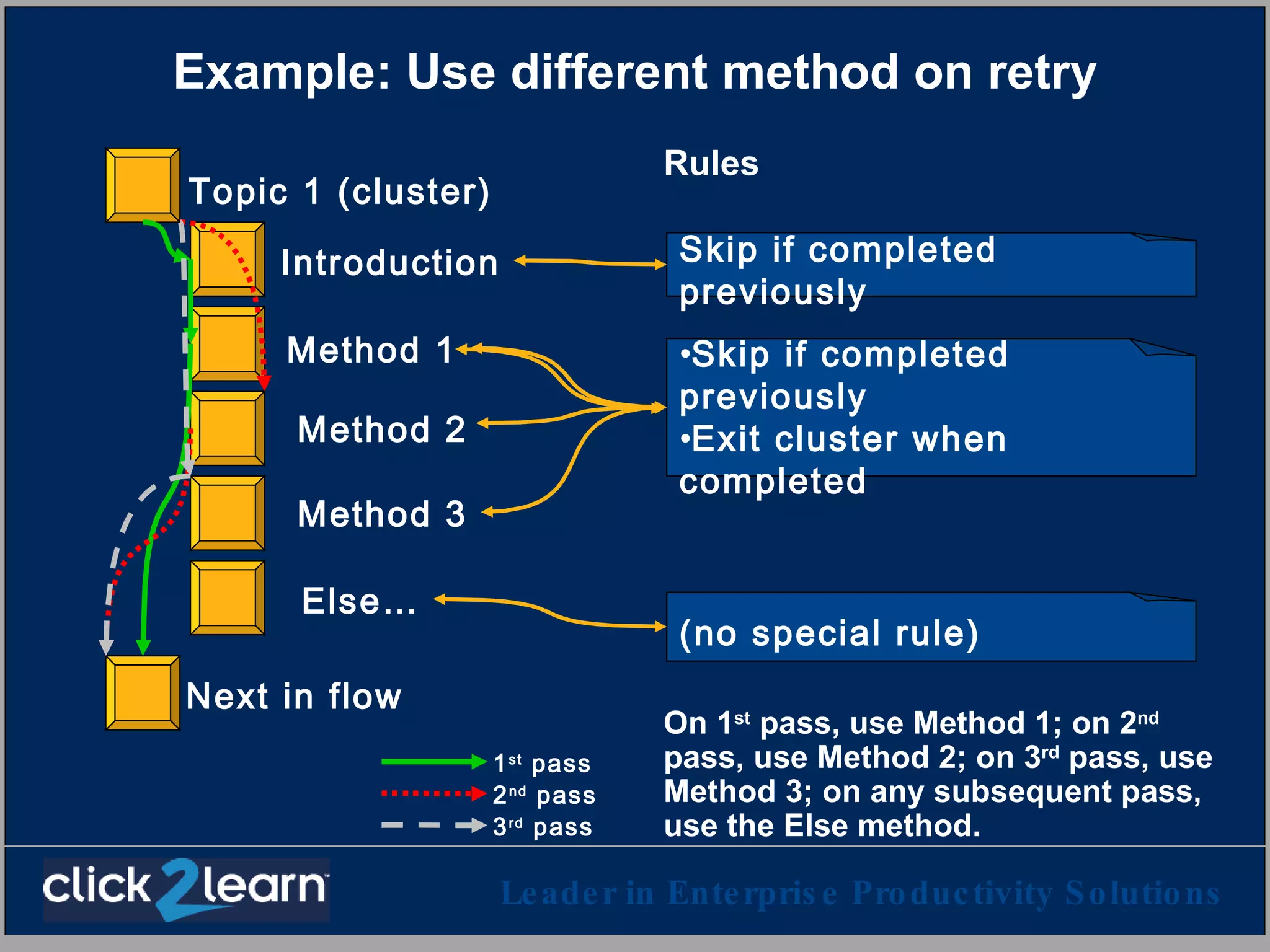

This document summarizes an online presentation on the e-learning standard SCORM and on reusable competency definitions. It discusses how SCORM lets the same packaged content be delivered through any SCORM-conformant learning management system (LMS) and how it supports sequencing of learning activities. It gives examples of how sequencing rules in SCORM 1.3 (later published as SCORM 2004) can route learners through pre-tests, topics, and assessments in different orders, depending on the skills they demonstrate and the activities they have already completed.
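The sequencing idea can be sketched in a few lines of Python. This is only an illustration, not SCORM's actual manifest format or any LMS's API: the names `Activity`, `LearnerState`, `next_activity`, `walk`, and the objective IDs `obj-a` and `obj-b` are all hypothetical. It assumes one simple rule, that a topic is skipped when the pre-test has already shown the learner has mastered its objective.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Activity:
    name: str
    objective: Optional[str] = None  # hypothetical ID of the skill this activity teaches


@dataclass
class LearnerState:
    completed: Set[str] = field(default_factory=set)  # names of finished activities
    satisfied: Set[str] = field(default_factory=set)  # objective IDs already mastered


def should_skip(activity: Activity, state: LearnerState) -> bool:
    """Assumed rule: skip an activity whose objective is already satisfied."""
    return activity.objective is not None and activity.objective in state.satisfied


def next_activity(course: List[Activity], state: LearnerState) -> Optional[Activity]:
    """Return the first activity that is neither completed nor skipped."""
    for activity in course:
        if activity.name in state.completed:
            continue
        if should_skip(activity, state):
            continue
        return activity
    return None  # nothing left to deliver


# Illustrative course: a pre-test, two topics, and a final assessment.
COURSE = [
    Activity("Pre-test"),
    Activity("Topic A", objective="obj-a"),
    Activity("Topic B", objective="obj-b"),
    Activity("Final assessment"),
]


def walk(course: List[Activity], pretest_results: Set[str]) -> List[str]:
    """Simulate a learner who completes every activity they are given.

    pretest_results holds the objectives the learner proves in the pre-test.
    """
    state = LearnerState()
    path = []
    while (activity := next_activity(course, state)) is not None:
        path.append(activity.name)
        state.completed.add(activity.name)
        if activity.name == "Pre-test":
            state.satisfied |= pretest_results
    return path


if __name__ == "__main__":
    print(walk(COURSE, set()))      # learner who proves no skills in the pre-test
    print(walk(COURSE, {"obj-a"}))  # learner who already knows Topic A
```

In this sketch, a learner who proves nothing in the pre-test sees every activity in order, while one who demonstrates `obj-a` goes straight from the pre-test to Topic B and then the final assessment. A real SCORM package would express such rules declaratively in the course manifest rather than in program code.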

![Thank you [email_address] (See next slide for some acronyms, buzzword definitions and links)](https://image.slidesharecdn.com/onlinelearningintheageofscorm-101020071238-phpapp01/75/Online-learning-in-the-age-of-scorm-44-2048.jpg)