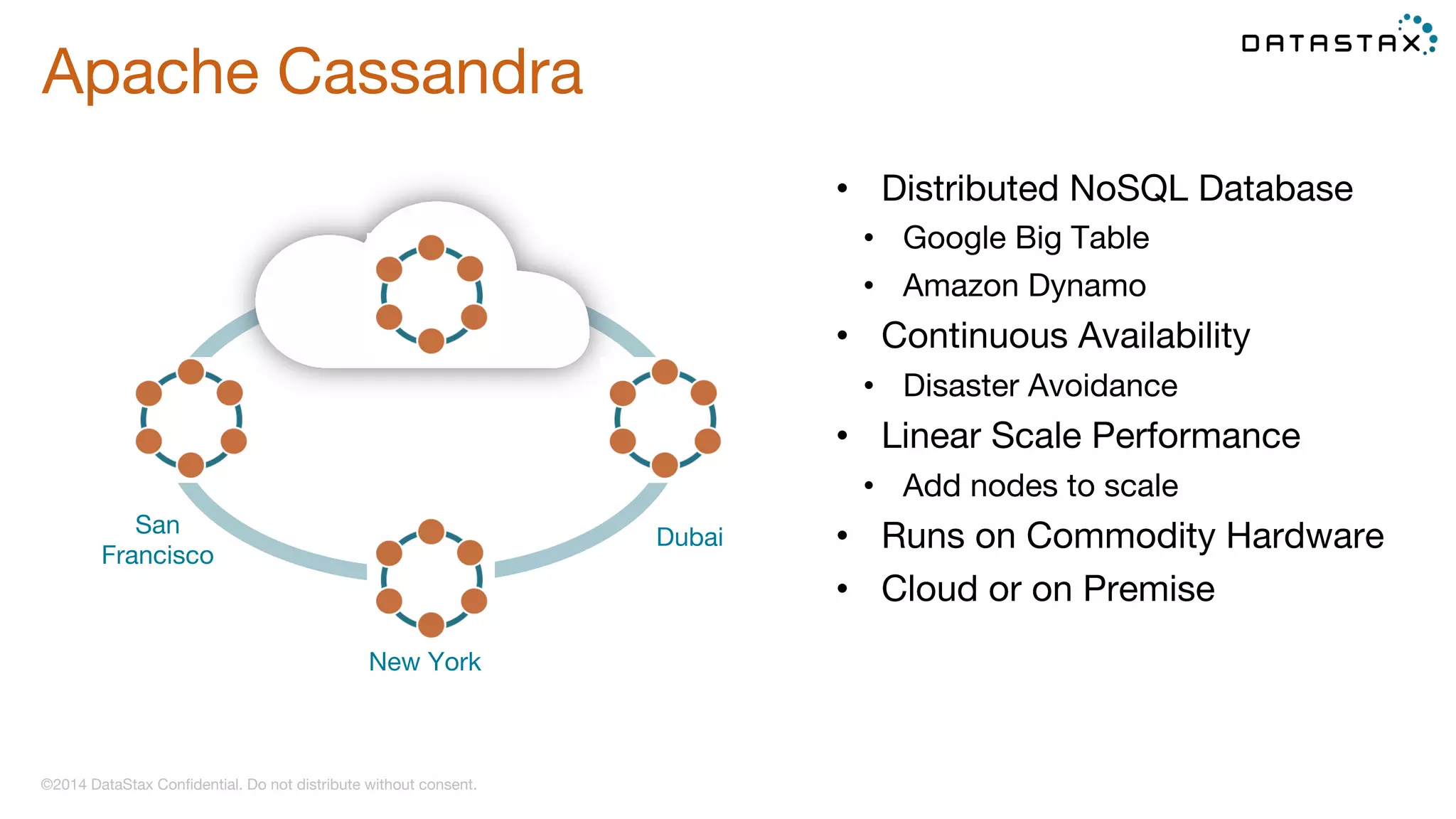

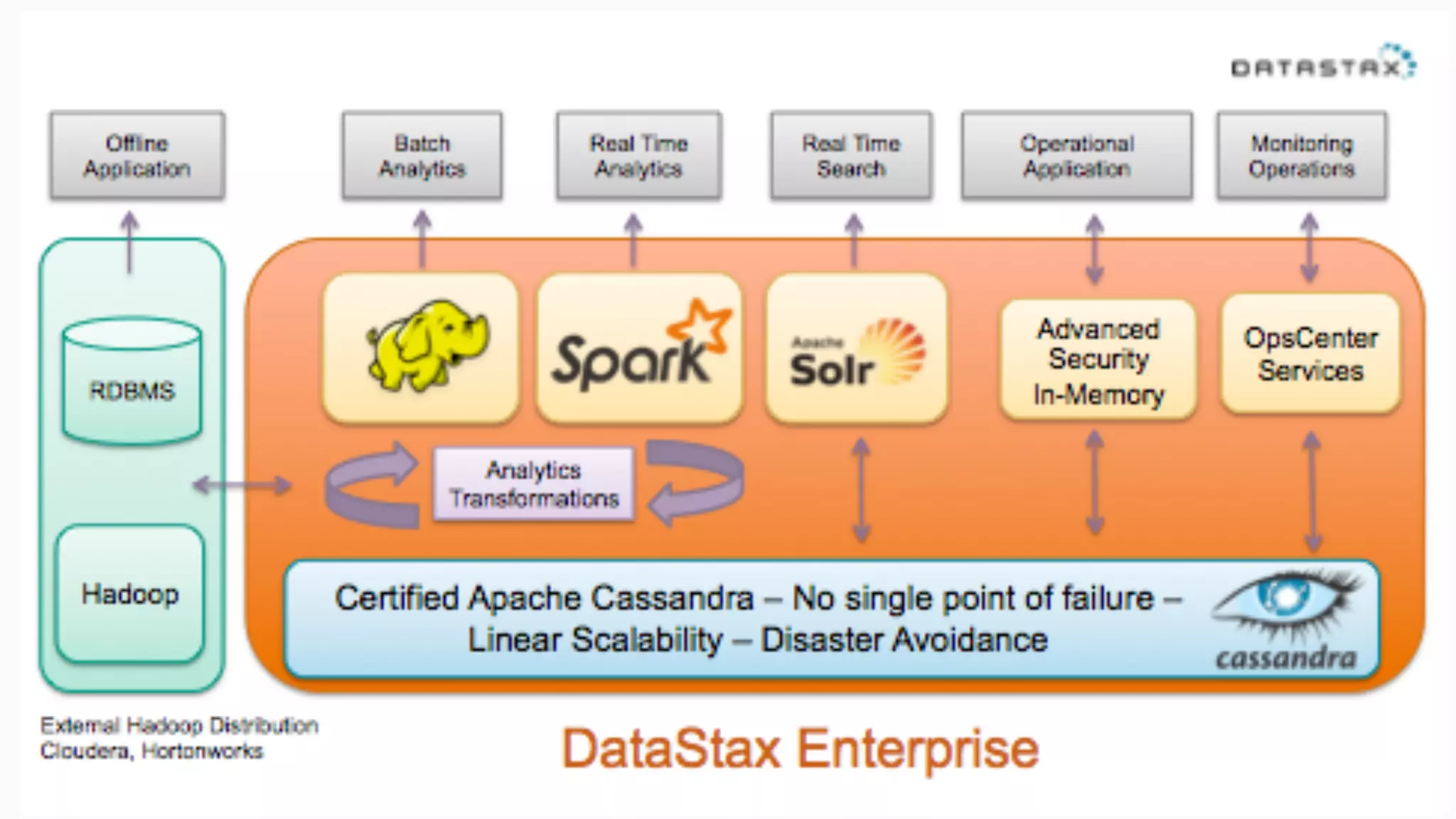

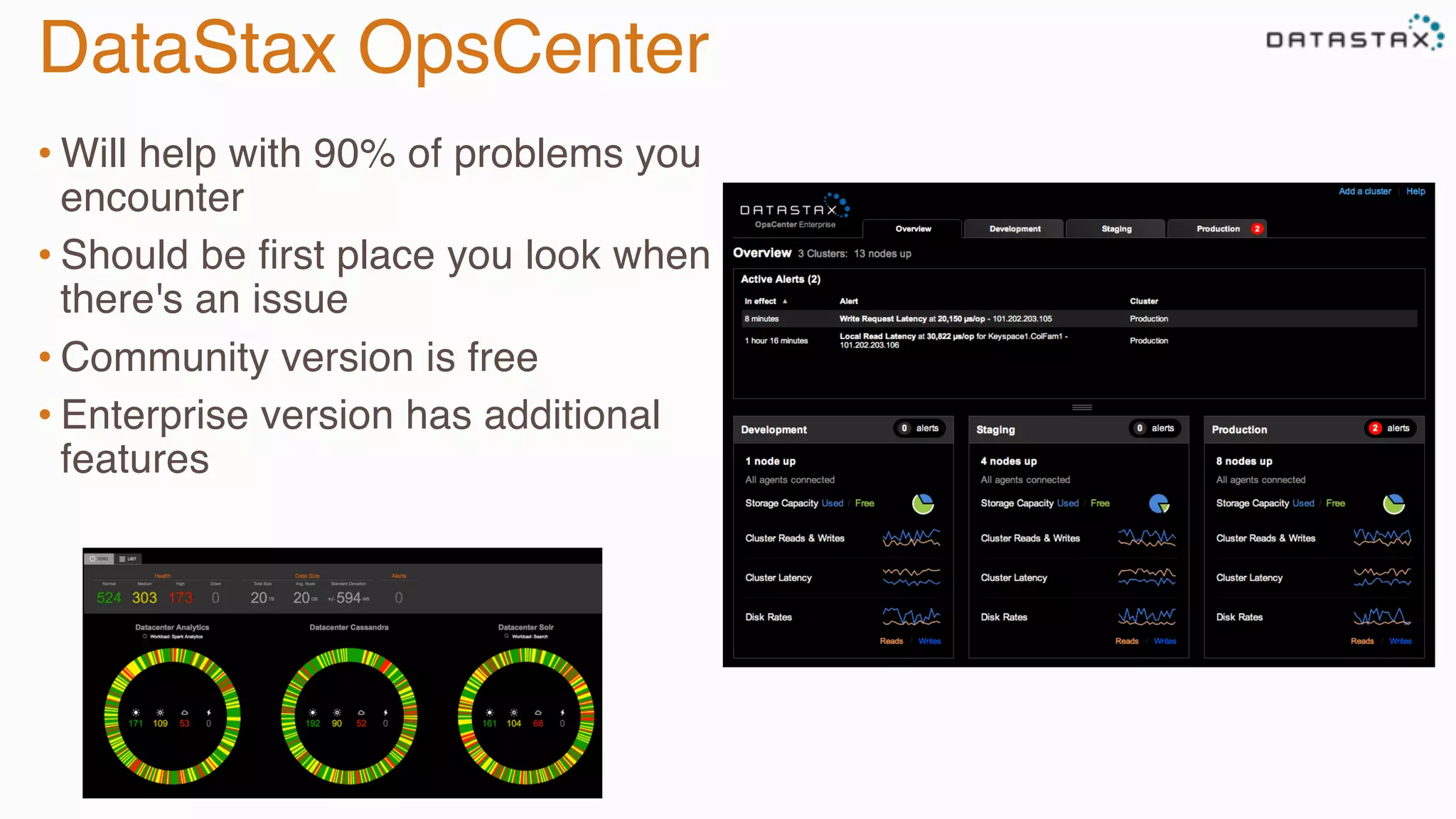

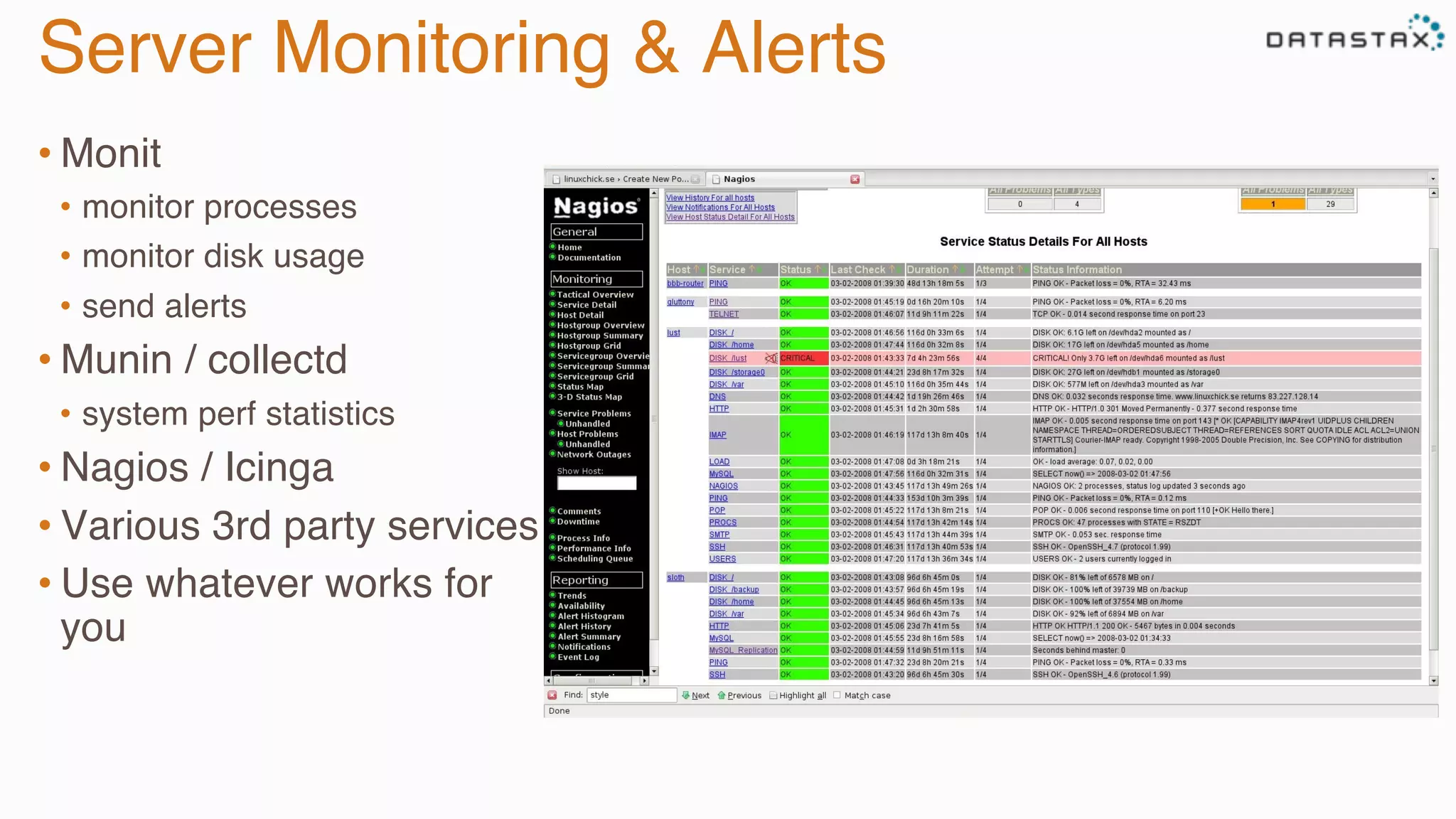

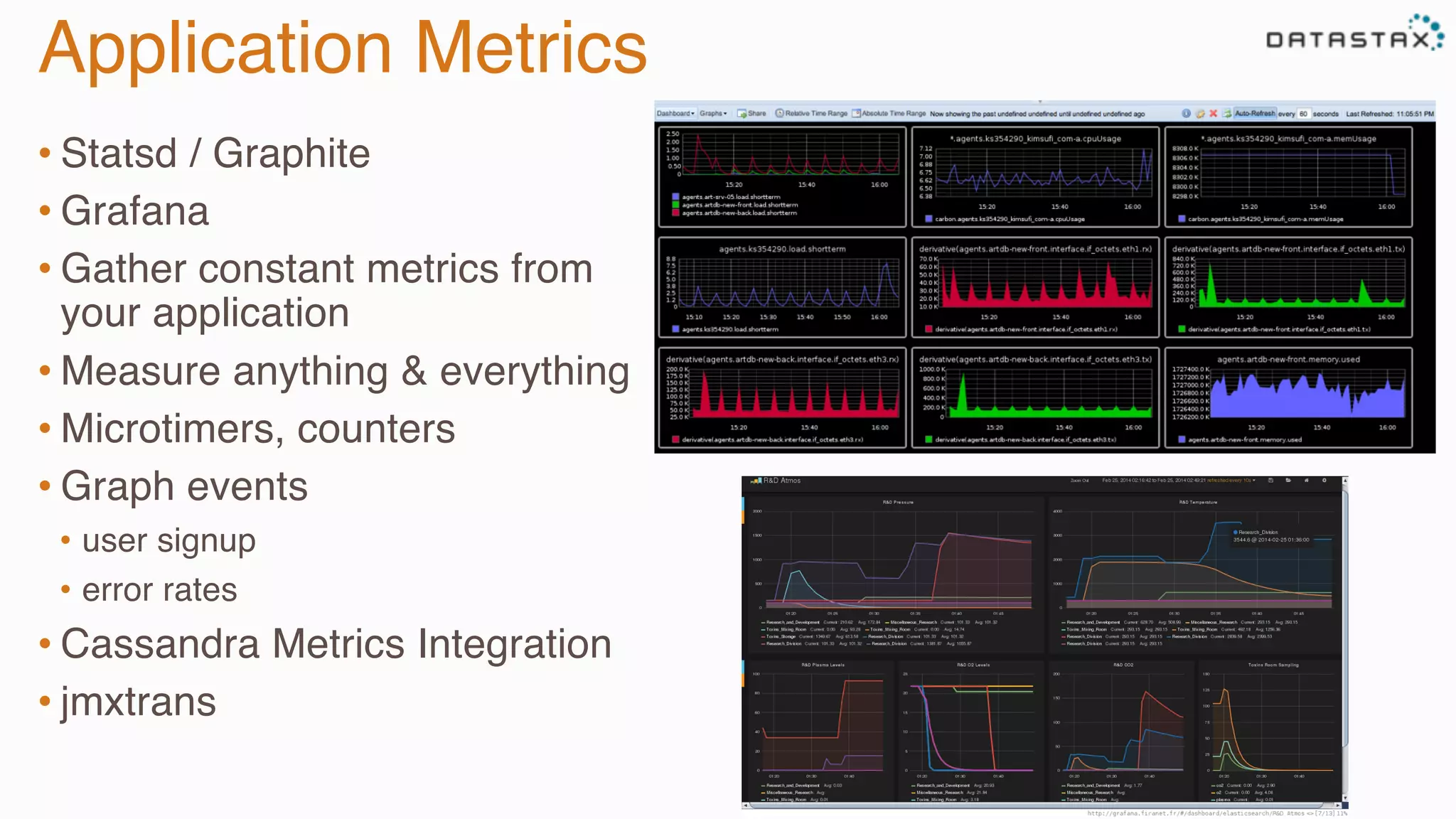

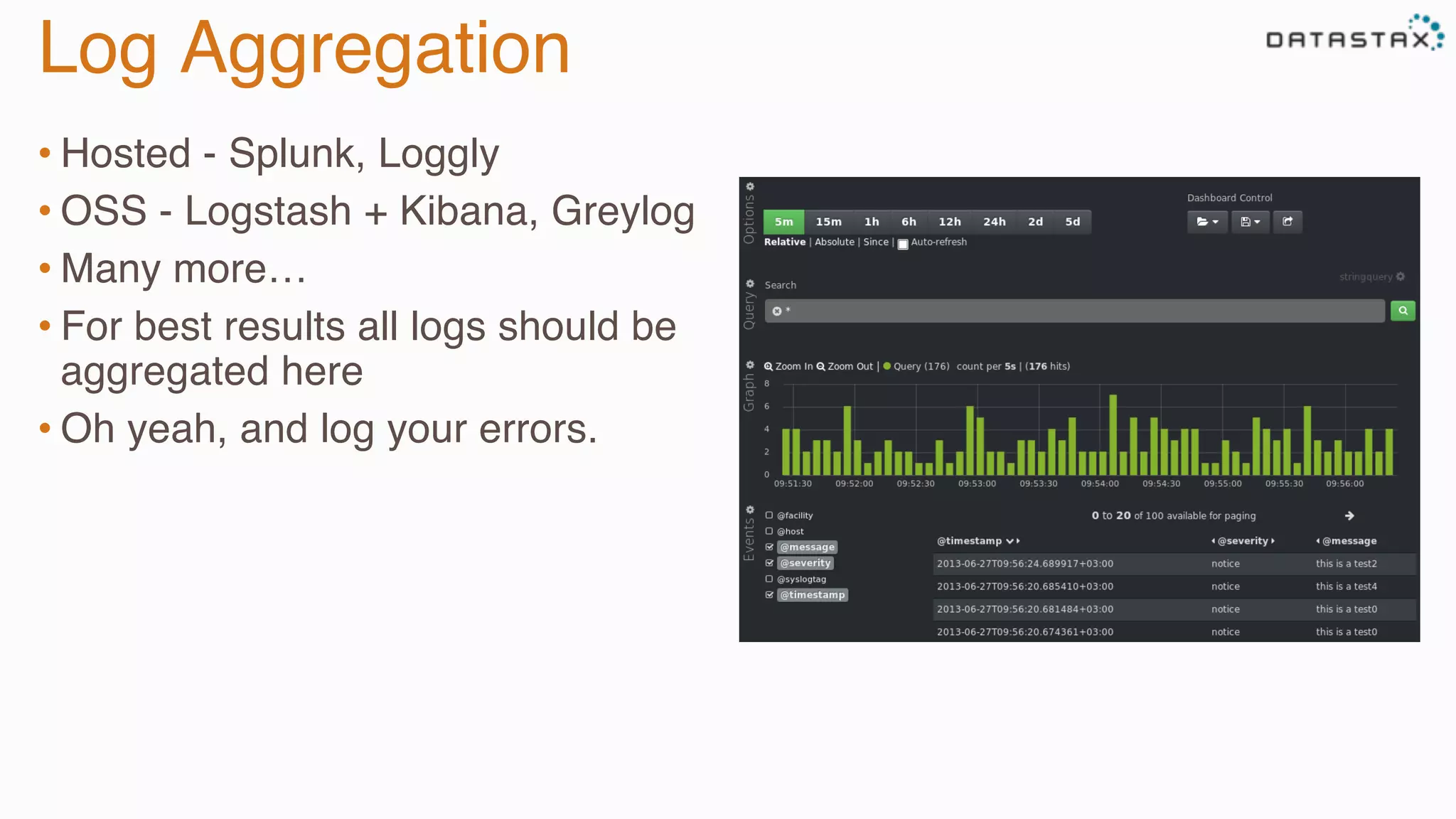

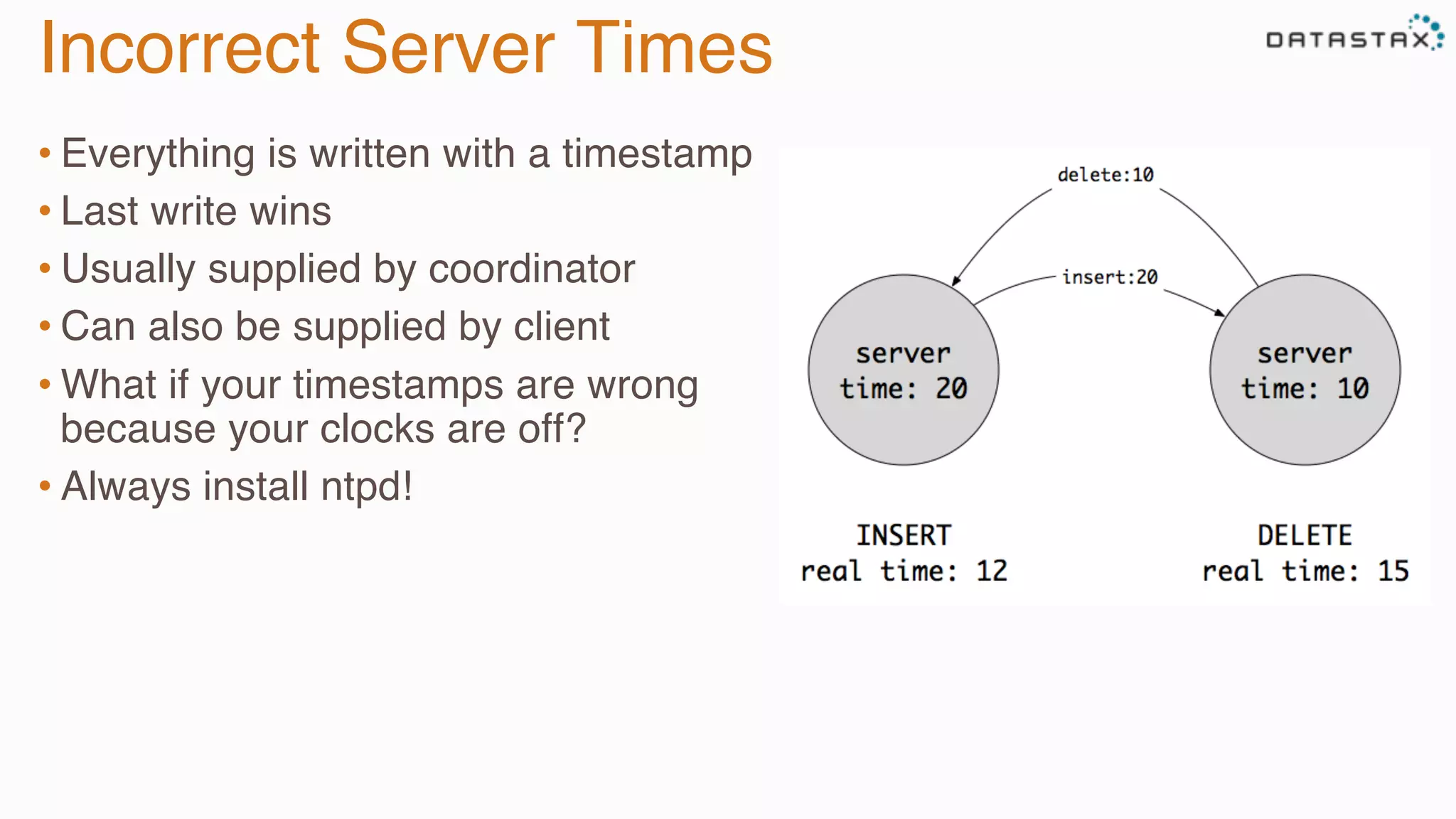

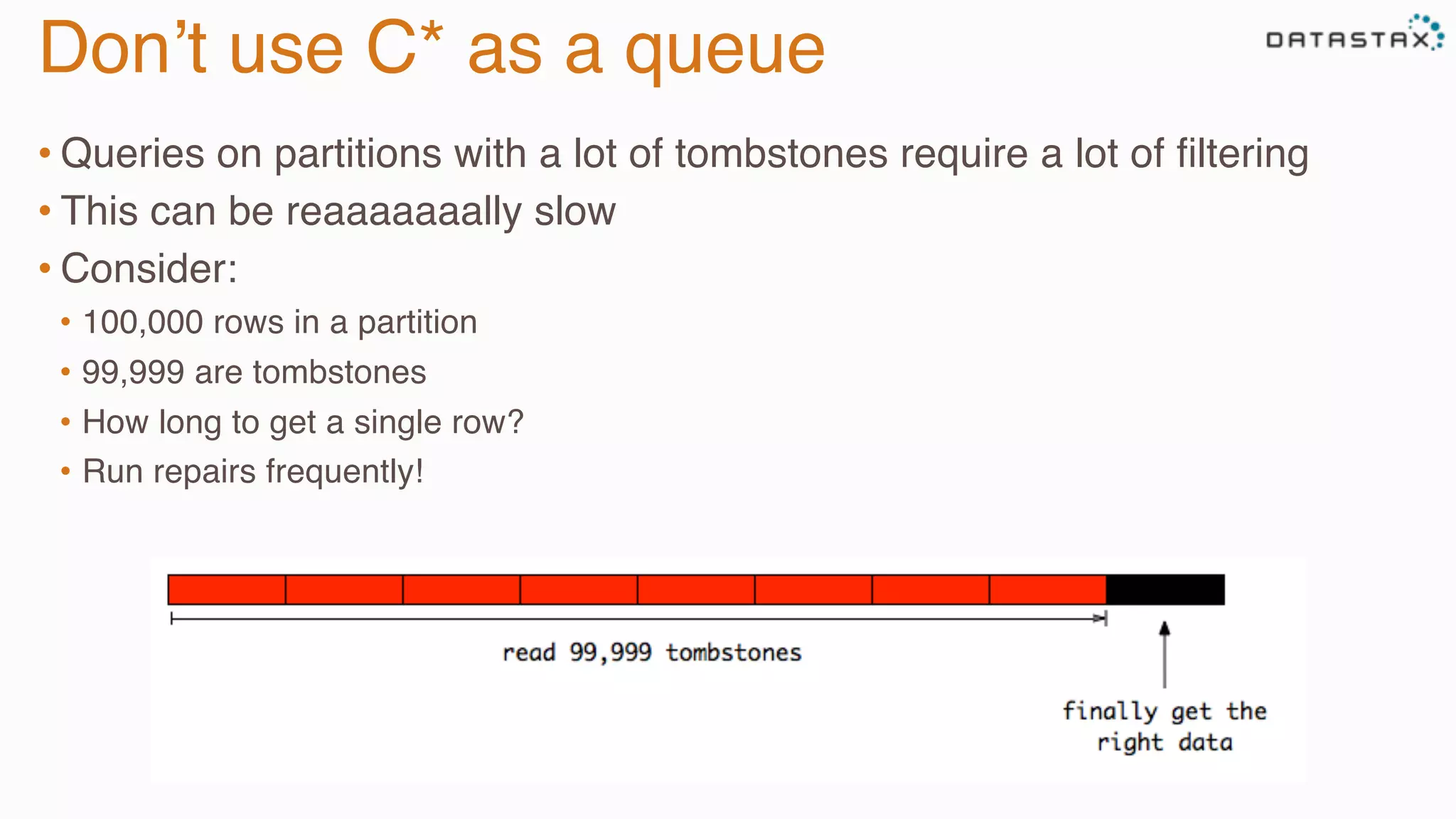

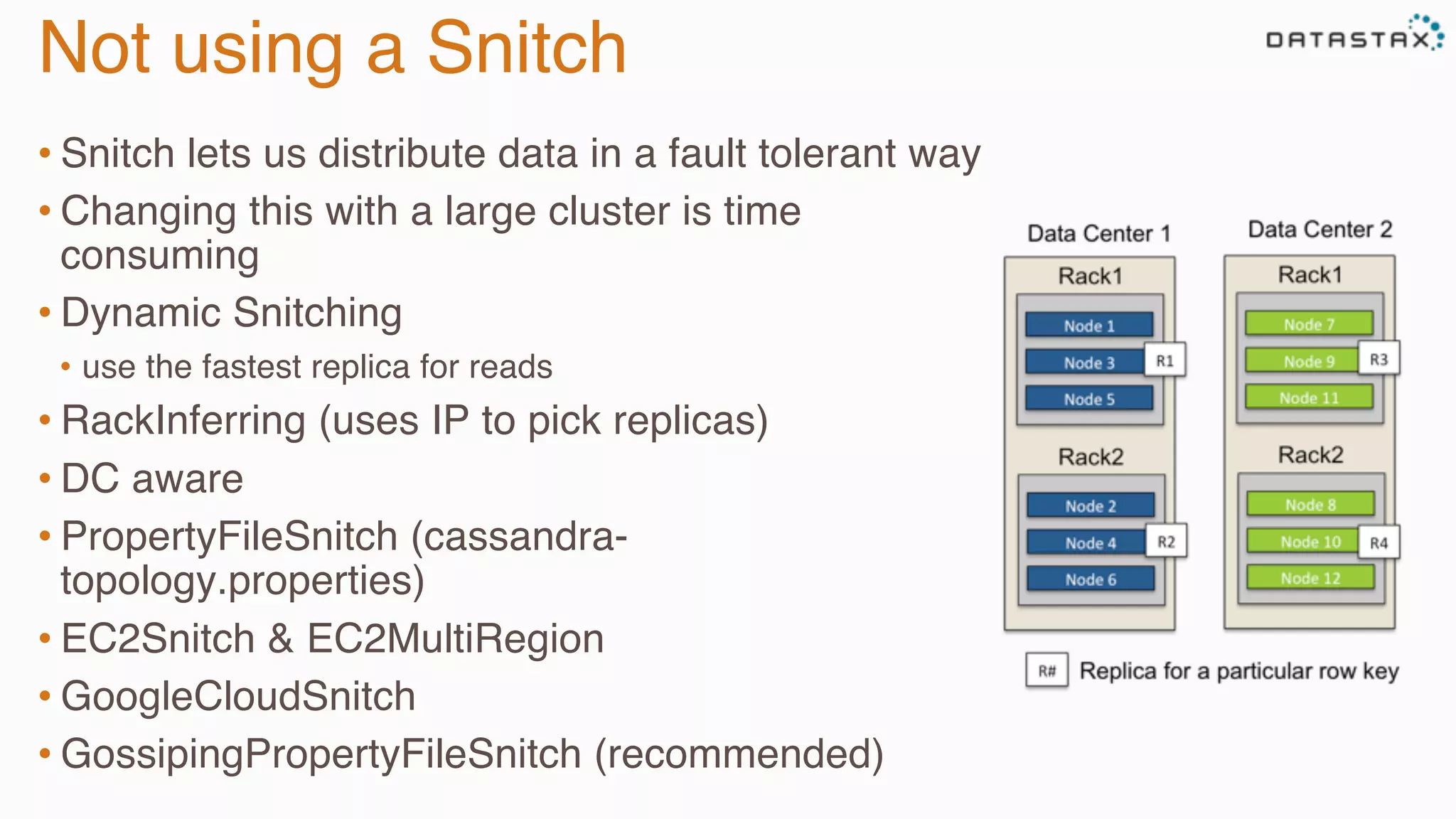

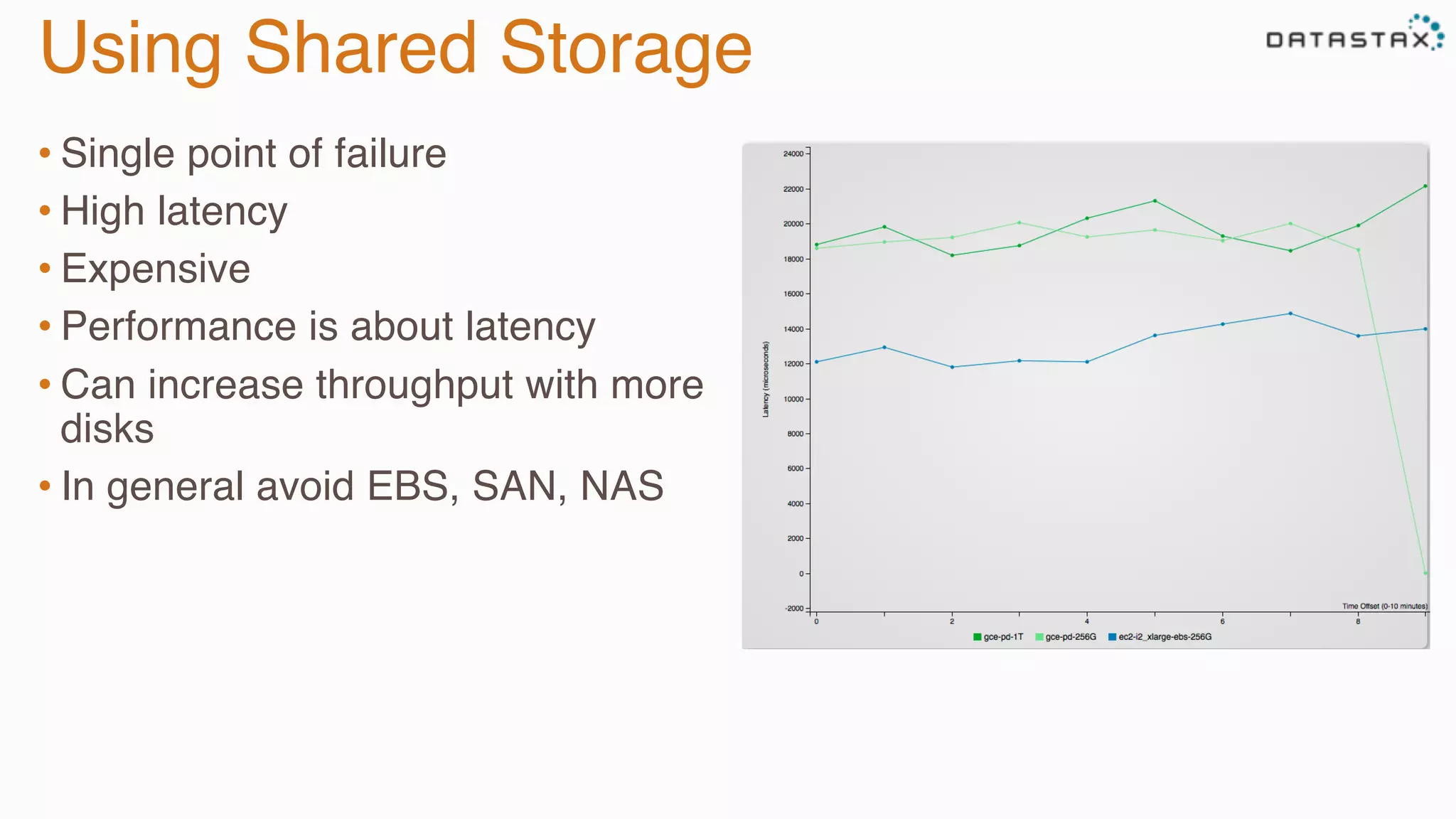

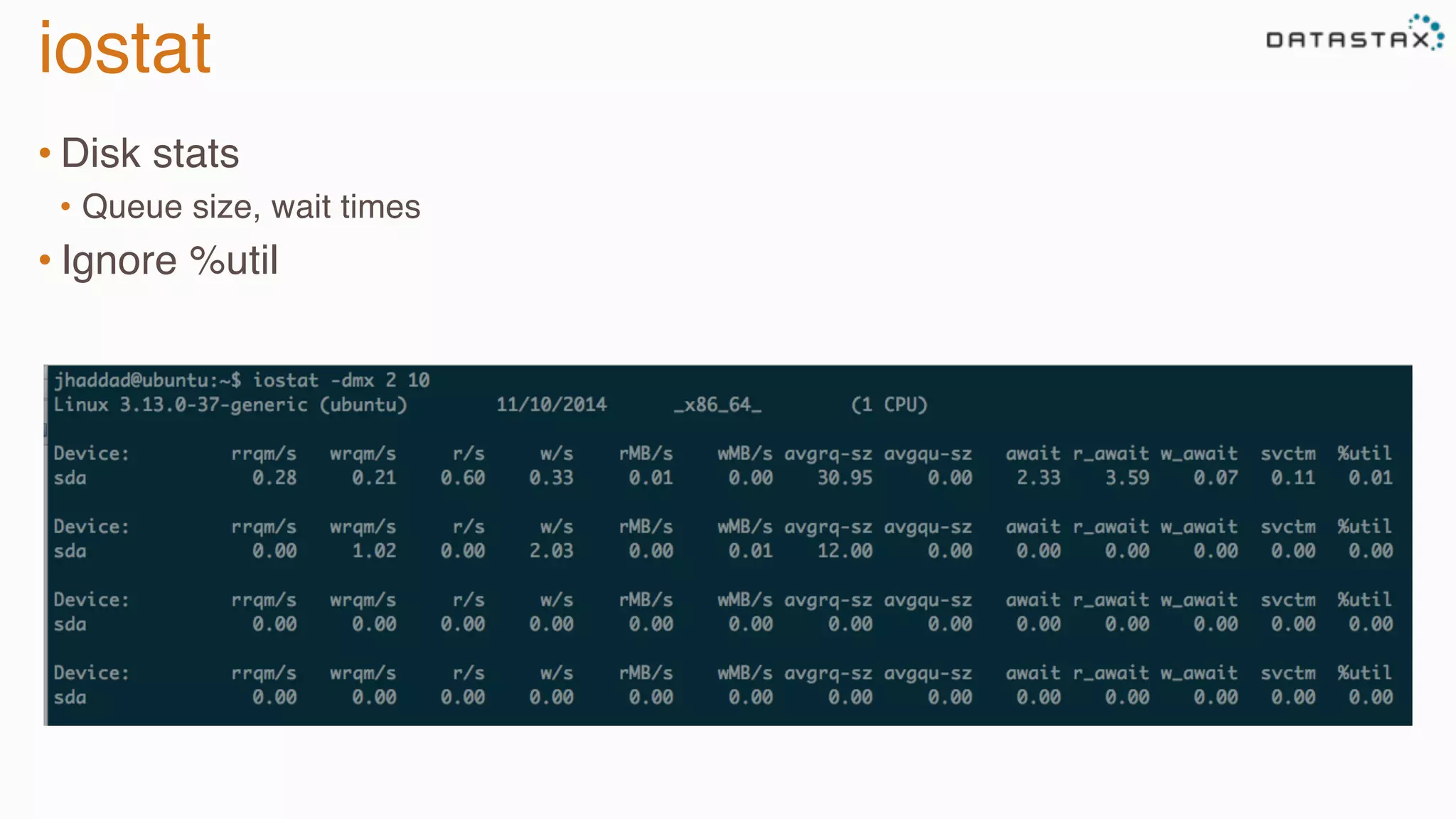

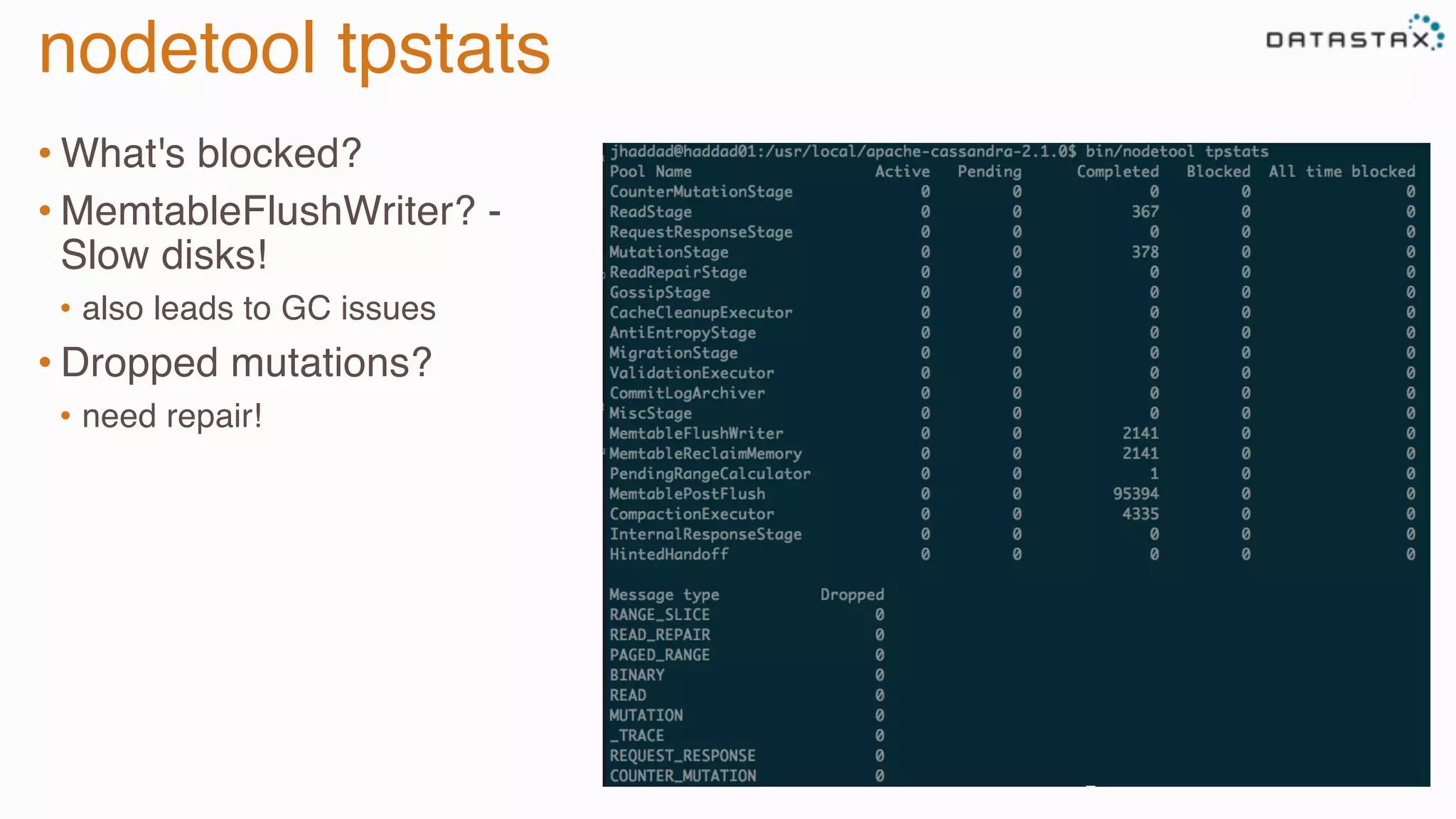

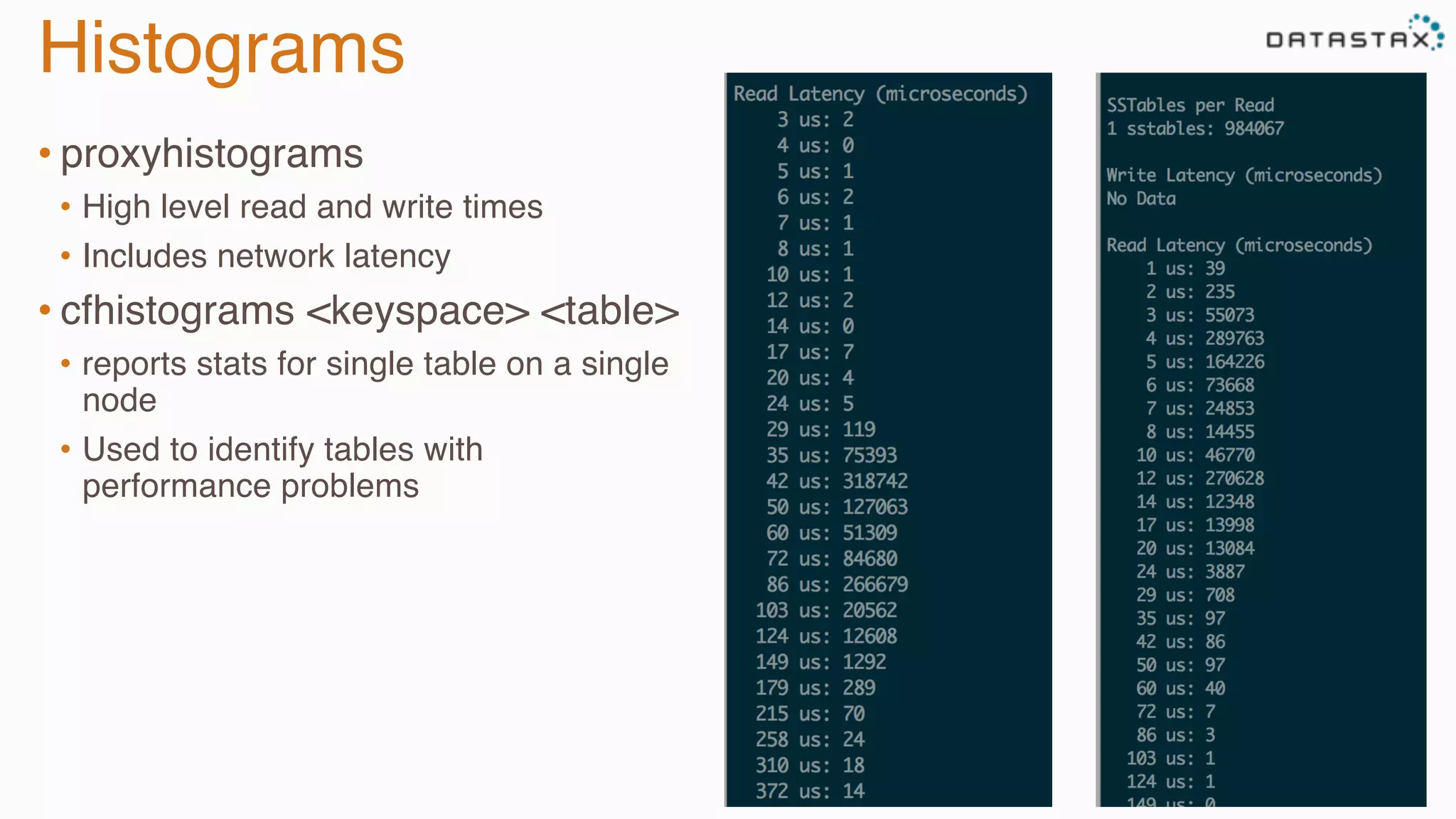

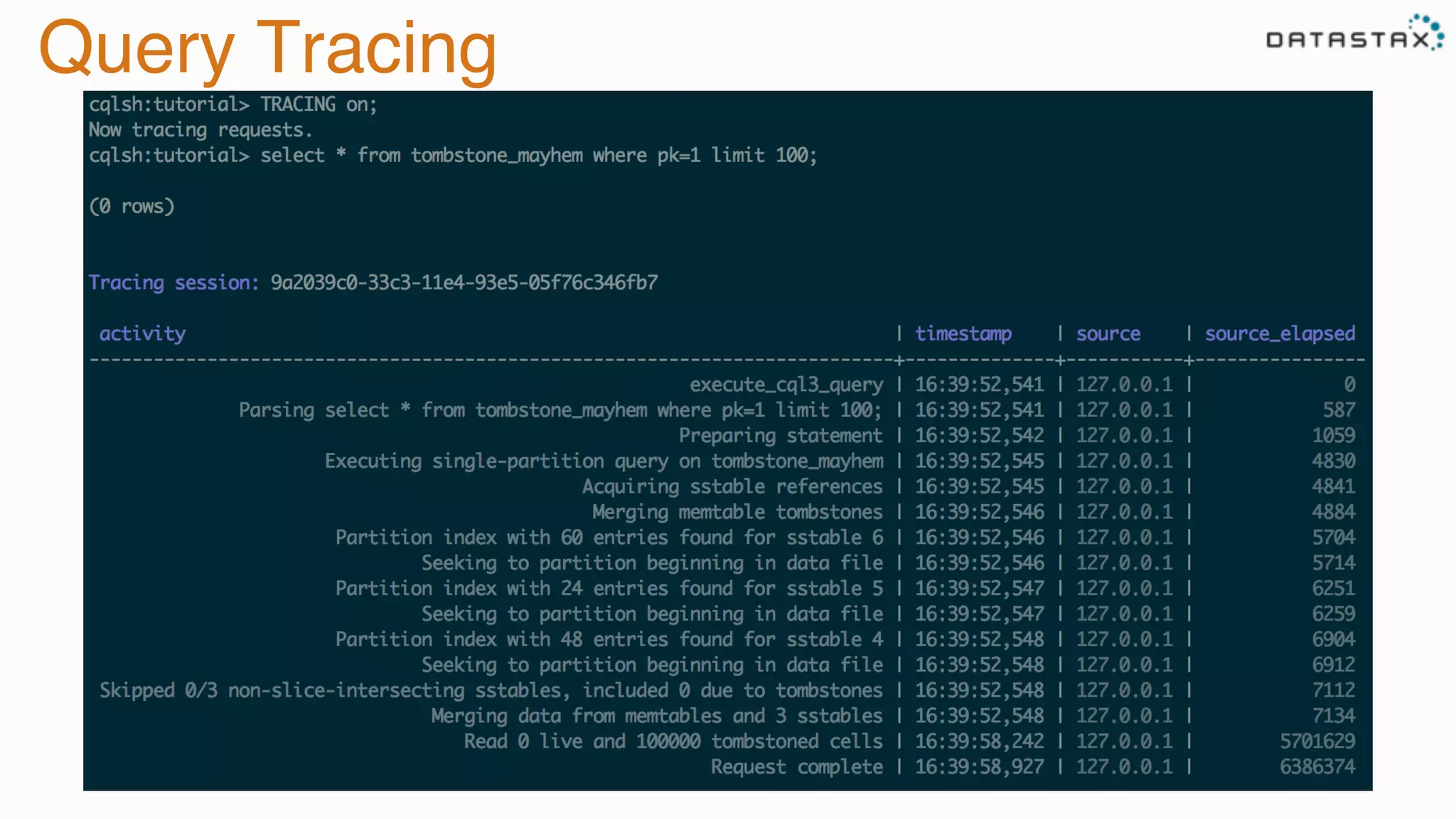

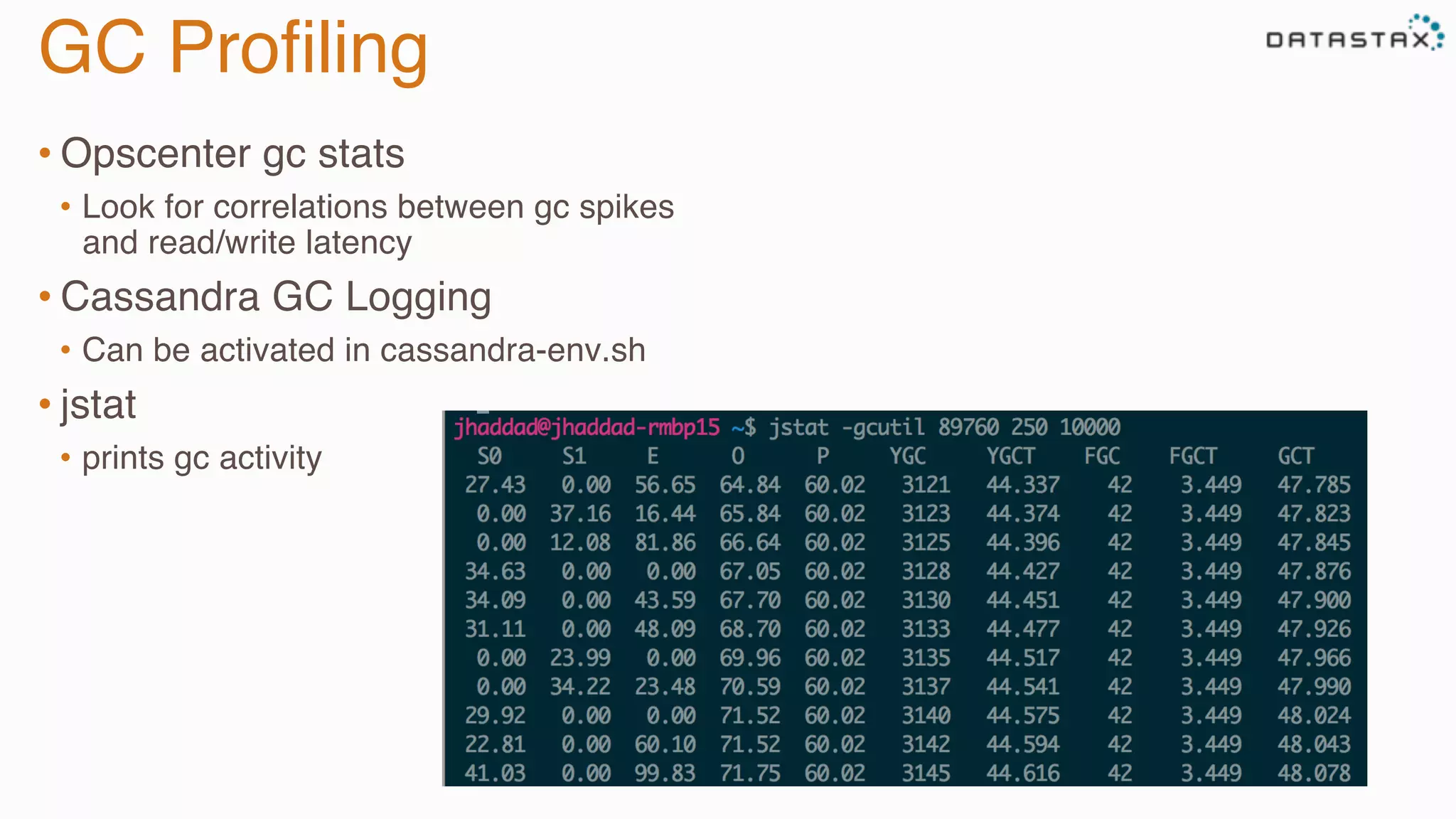

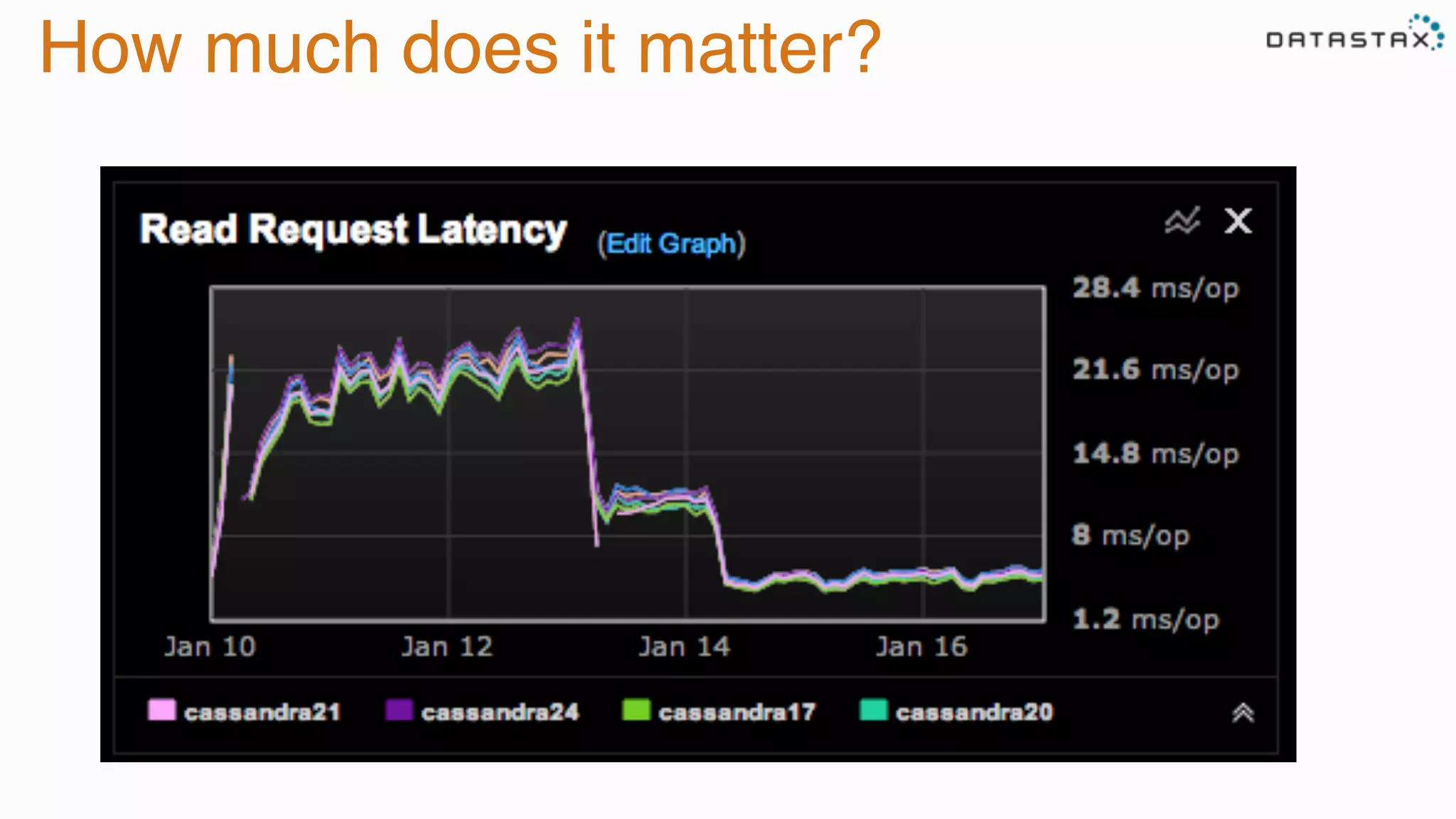

The document provides guidance on diagnosing and resolving problems in production deployments of Apache Cassandra, a distributed NoSQL database. It emphasizes preparation, monitoring, and debugging tools, and it covers common pitfalls such as incorrect server timestamps and improper use of tombstones. Recommendations include using DataStax OpsCenter, running regular maintenance such as nodetool cleanup, and monitoring key metrics to identify performance issues.
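To make the timestamp pitfall concrete: Cassandra reconciles conflicting writes to the same cell by write timestamp, so the write with the larger timestamp wins. The sketch below is a simplified model of that rule, not Cassandra's actual code. The names `Write`, `resolve`, and `demo_skew` are hypothetical, and the tie-breaking here is an assumption made for brevity. It shows how a node whose clock runs ahead can cause an older value to shadow a newer one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Write:
    """A single cell write (hypothetical model, not a Cassandra type)."""
    value: str
    timestamp_us: int  # write timestamp in microseconds


def resolve(a: Write, b: Write) -> Write:
    """Last-write-wins: keep the write with the larger timestamp.

    On a tie this sketch simply returns b; that is a simplification.
    """
    return a if a.timestamp_us > b.timestamp_us else b


def demo_skew(skew_us: int) -> str:
    """Return the surviving value when the first writer's clock is ahead by skew_us."""
    real_time_us = 1_000_000
    # The first write is stamped by a node whose clock runs ahead by skew_us.
    first = Write("old", real_time_us + skew_us)
    # The second write happens 100 ms later in real time, on an accurate clock.
    second = Write("new", real_time_us + 100_000)
    return resolve(first, second).value


if __name__ == "__main__":
    print(demo_skew(0))        # clocks agree: the later write survives
    print(demo_skew(500_000))  # 500 ms skew: the earlier write survives
```

With synchronized clocks the later write survives. With a skew larger than the gap between the two writes, the earlier write carries the larger timestamp and wins, which is why keeping server clocks synchronized (for example with NTP) matters.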