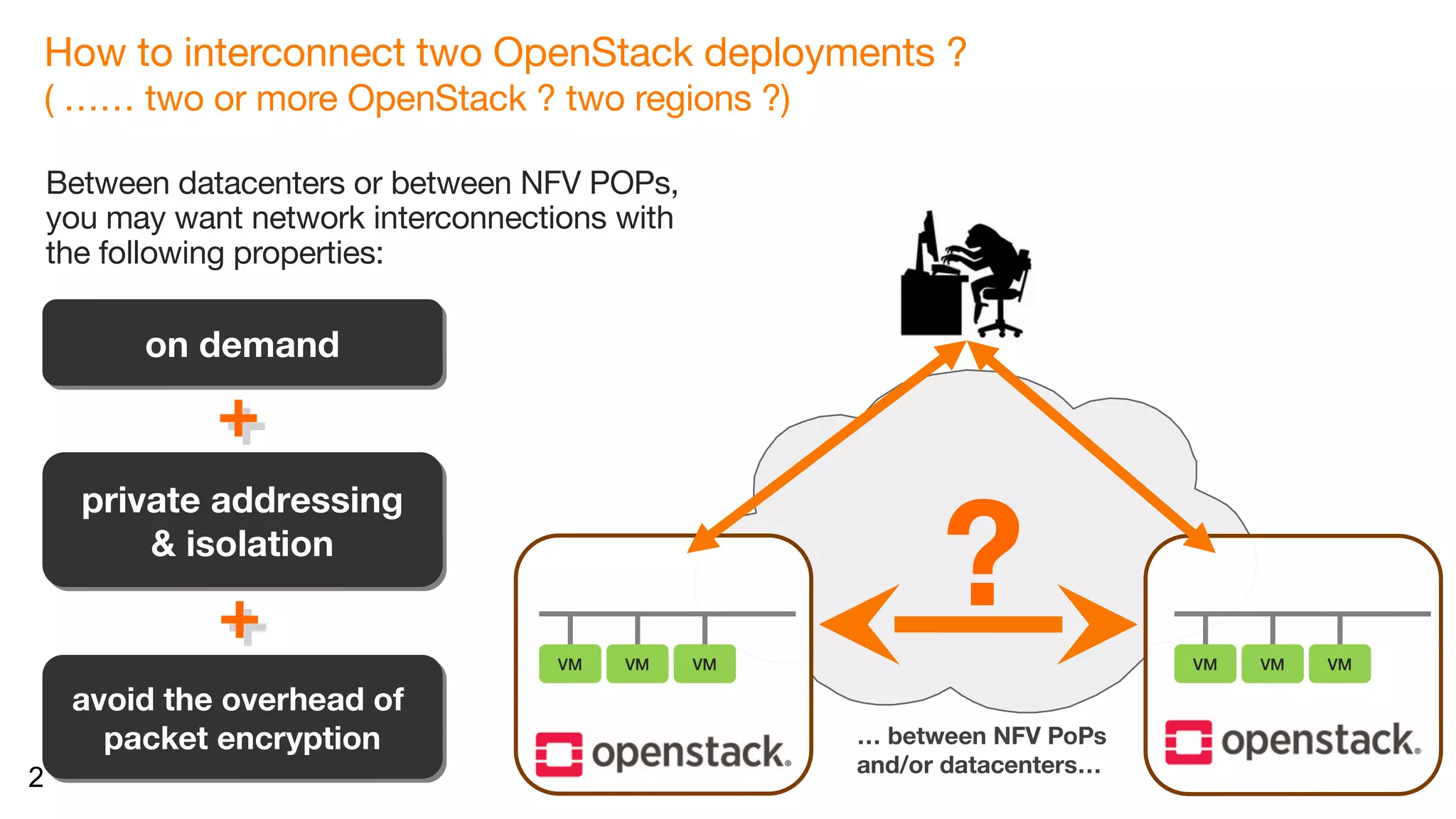

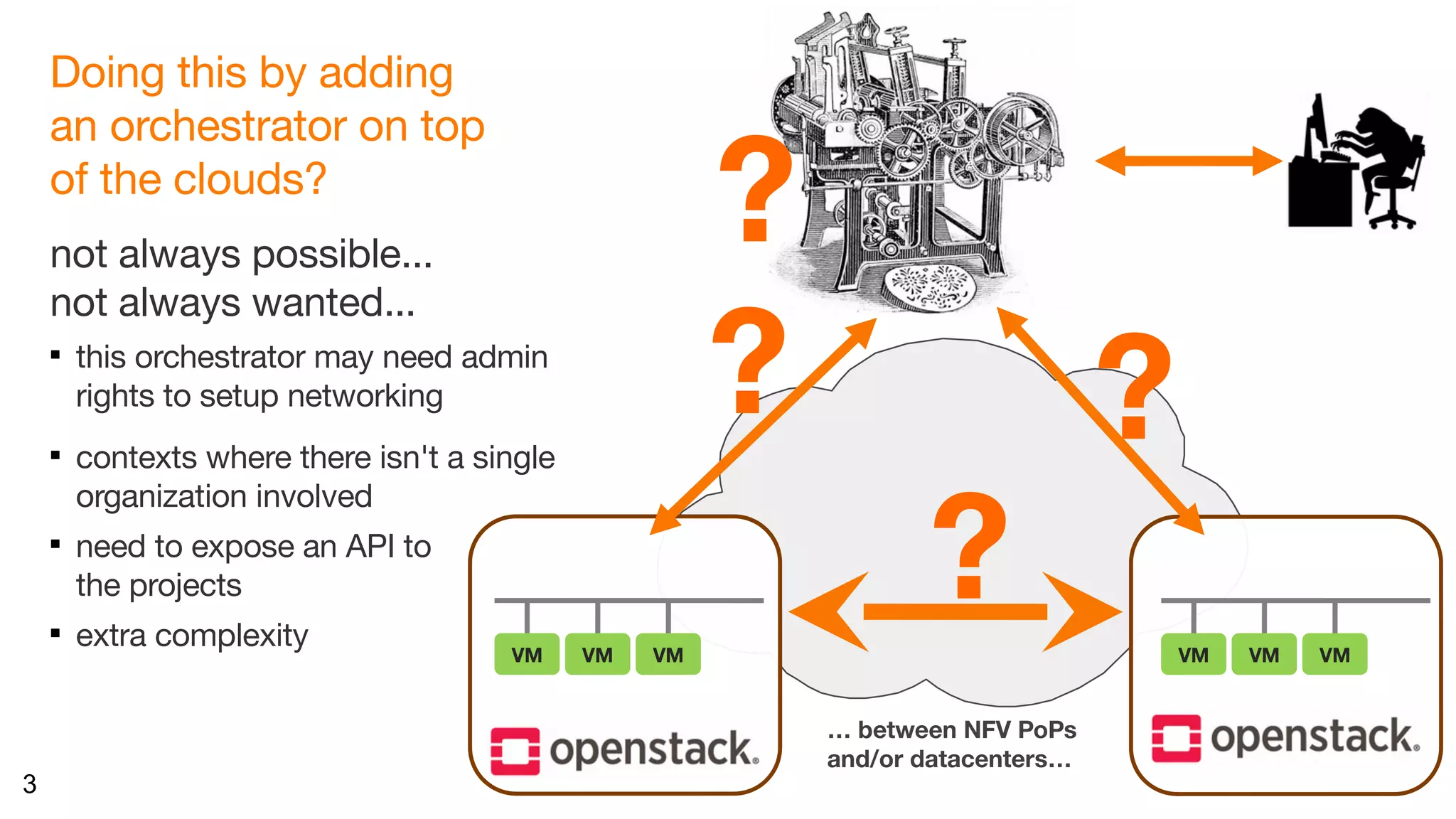

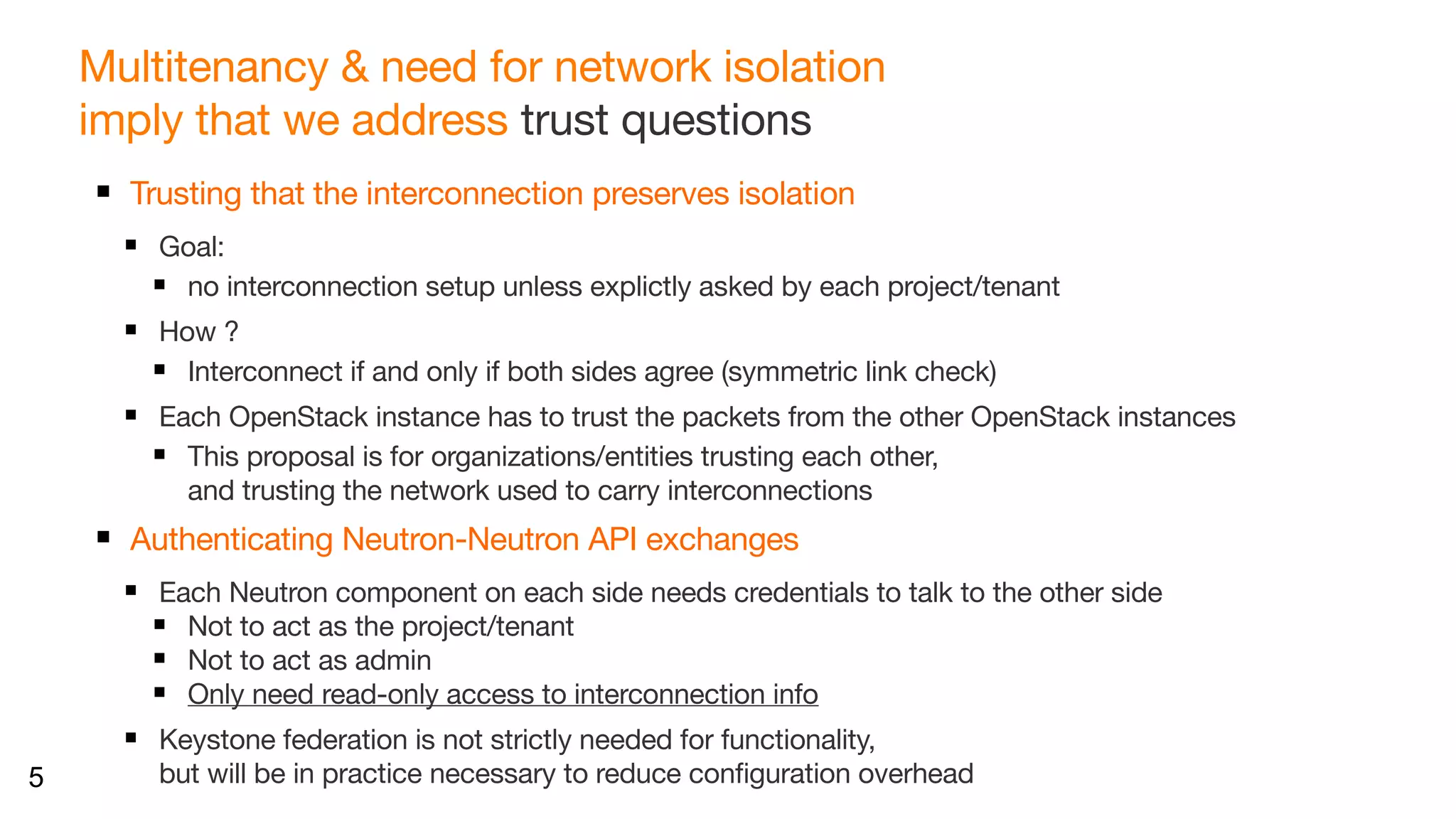

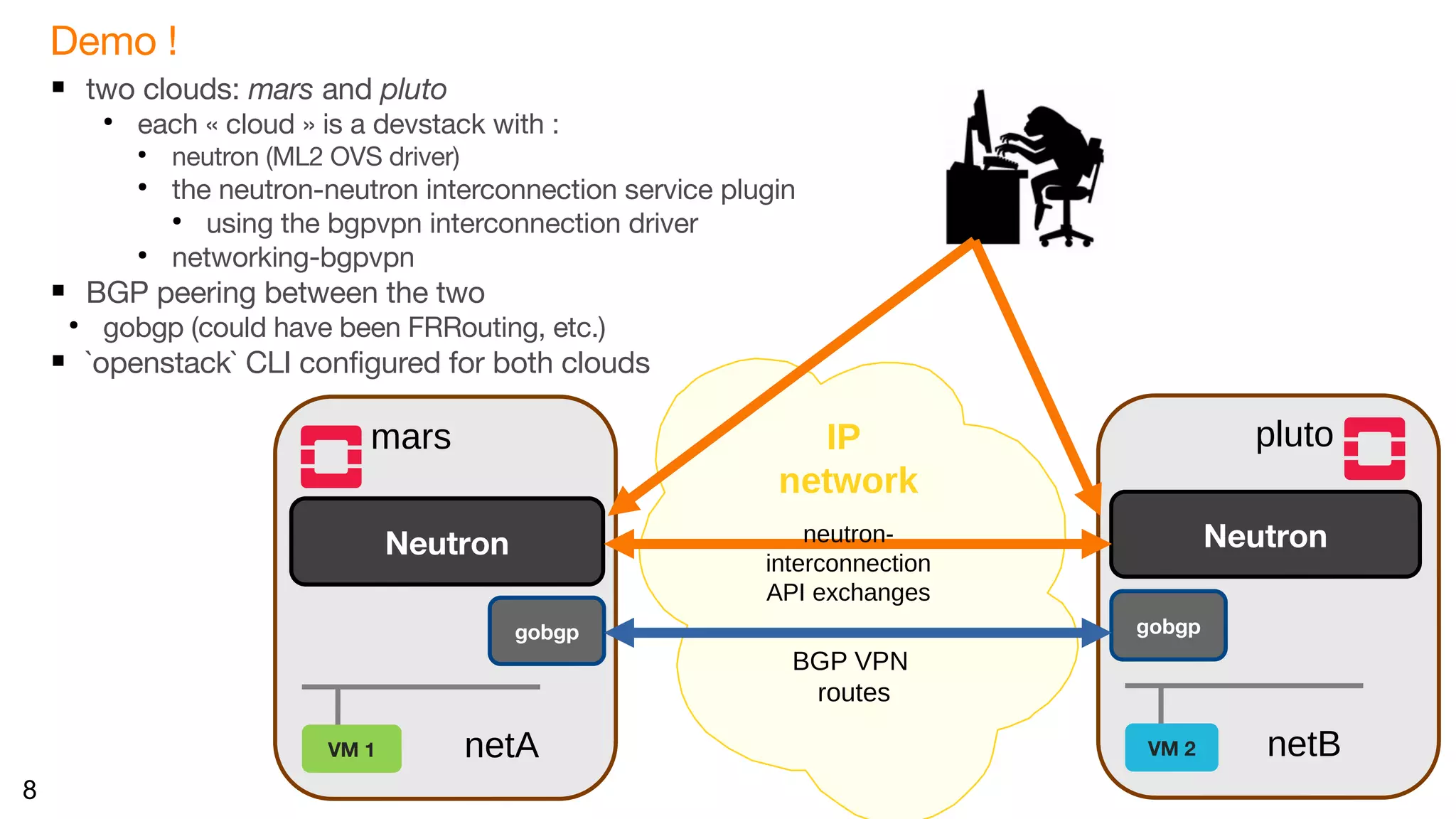

The document discusses the interconnection of multiple OpenStack deployments through the 'neutron-neutron' API, which enables on-demand networking between OpenStack instances across different regions or clouds while maintaining private addressing and isolation. It outlines various interconnection techniques and emphasizes the importance of trust among organizations involved in such connections. The proposal aims to simplify the process without needing additional orchestrators and allows development of drivers for various interconnection methods, making it adaptable to different SDN controllers.

What happened behind the scenes with this `bgpvpn` driver?

Preliminary configuration: not much! Relevant excerpt of `/etc/neutron/neutron.conf` on each side, `[neutron_interconnection]` section:

| Option | pluto | mars |
|---|---|---|
| `router_driver` | `bgpvpn` | `bgpvpn` |
| `network_l3_driver` | `bgpvpn` | `bgpvpn` |
| `network_l2_driver` | `bgpvpn` | `bgpvpn` |
| `bgpvpn_rtnn` | `5000,5999` | `3000,3999` |

Information exchanges:

- Each side advertises the BGP VPN Route Target that it uses to advertise its own routes.
- To send traffic, the other side imports the routes carrying this Route Target into the relevant network.

How is this done? On each side, the driver for the interconnection service uses the already existing Neutron BGPVPN API to create BGPVPNs and associate them with the network.

![Slide 9](https://image.slidesharecdn.com/20181114-openstacksummit-berlin-neutronneutronx-06-181120104258/75/Neutron-to-Neutron-interconnecting-multiple-OpenStack-deployments-9-2048.jpg)
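The per-side exchange on this slide can be sketched in Python. This is a minimal, hypothetical sketch, not the neutron-interconnection driver code. The following names and values are illustrative assumptions: `Side`, `parse_rtnn`, `allocate_route_target`, the `build_*_request` helpers, the AS number 64512 and the network IDs. The sketch also assumes that `bgpvpn_rtnn` is the range of Route Target numbers each deployment allocates from. Under that reading, the non-overlapping pluto and mars ranges would keep the two sides' Route Targets distinct. The sketch only builds JSON request bodies for the networking-bgpvpn REST API (`POST /v2.0/bgpvpn/bgpvpns` and `POST /v2.0/bgpvpn/bgpvpns/{bgpvpn_id}/network_associations`) and does not send them. Check the field names against the BGPVPN API reference before relying on them.

```python
import json
from dataclasses import dataclass, field


def parse_rtnn(value: str) -> tuple[int, int]:
    """Parse a 'low,high' range such as the bgpvpn_rtnn option value."""
    low, high = (int(part) for part in value.split(","))
    if low > high:
        raise ValueError(f"invalid range: {value!r}")
    return low, high


@dataclass
class Side:
    """One OpenStack deployment in the interconnection (hypothetical model)."""
    name: str
    asn: int          # assumed AS number used as the Route Target prefix
    rtnn_low: int     # assumed meaning of bgpvpn_rtnn: local RT number range
    rtnn_high: int
    next_rtnn: int = field(init=False)

    def __post_init__(self) -> None:
        self.next_rtnn = self.rtnn_low

    def allocate_route_target(self) -> str:
        """Return the next unused Route Target ('ASN:NN') from the local range."""
        if self.next_rtnn > self.rtnn_high:
            raise RuntimeError(f"{self.name}: Route Target range exhausted")
        route_target = f"{self.asn}:{self.next_rtnn}"
        self.next_rtnn += 1
        return route_target


def build_bgpvpn_request(name: str, local_rt: str, remote_rt: str,
                         vpn_type: str = "l3") -> dict:
    """Body for POST /v2.0/bgpvpn/bgpvpns.

    Exports this side's Route Target (the one it advertises to the peer)
    and imports the Route Target the peer advertised, so the peer's routes
    land in the associated network.
    """
    return {"bgpvpn": {
        "name": name,
        "type": vpn_type,
        "export_targets": [local_rt],
        "import_targets": [remote_rt],
    }}


def build_network_association_request(network_id: str) -> dict:
    """Body for POST /v2.0/bgpvpn/bgpvpns/{bgpvpn_id}/network_associations."""
    return {"network_association": {"network_id": network_id}}


if __name__ == "__main__":
    pluto = Side("pluto", 64512, *parse_rtnn("5000,5999"))
    mars = Side("mars", 64512, *parse_rtnn("3000,3999"))

    # Information exchange: each side tells the other which RT it exports with.
    pluto_rt = pluto.allocate_route_target()
    mars_rt = mars.allocate_route_target()

    # On each side, the driver creates a BGPVPN and associates the local network.
    for side, local_rt, remote_rt, net in (
        (pluto, pluto_rt, mars_rt, "net-on-pluto"),
        (mars, mars_rt, pluto_rt, "net-on-mars"),
    ):
        print(side.name)
        print(json.dumps(build_bgpvpn_request(f"interco-{side.name}", local_rt, remote_rt)))
        print(json.dumps(build_network_association_request(net)))
```

Each side exports its own Route Target and imports the peer's, which mirrors the two information-exchange bullets. In this run, pluto's BGPVPN exports `64512:5000` and imports `64512:3000`, and mars's BGPVPN does the reverse.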