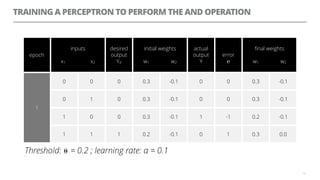

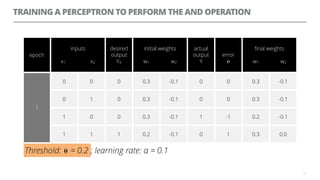

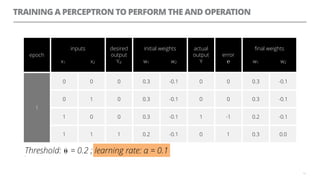

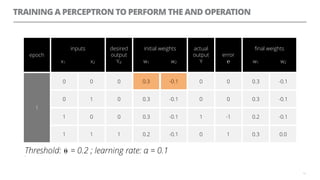

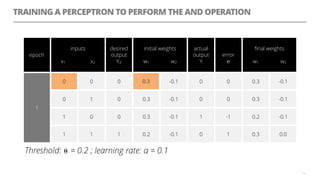

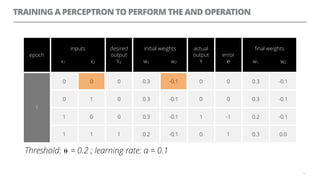

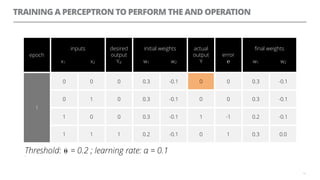

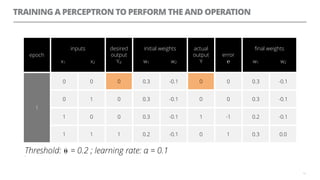

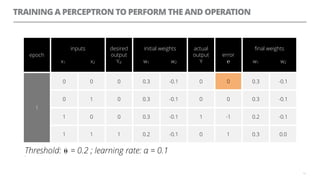

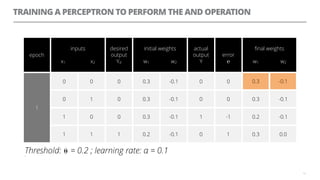

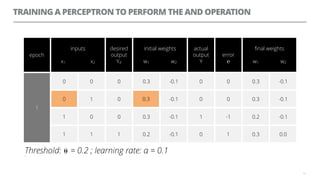

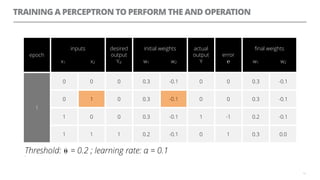

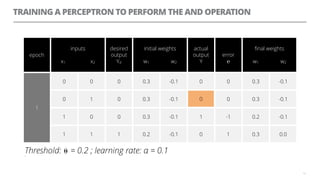

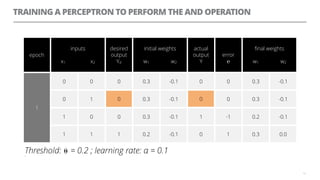

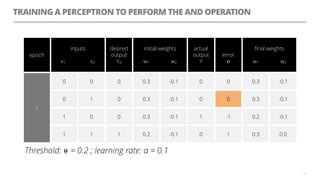

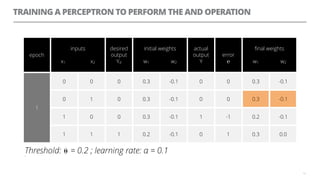

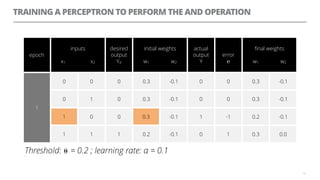

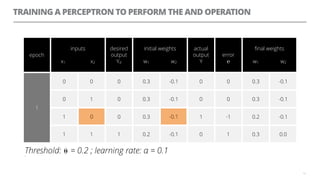

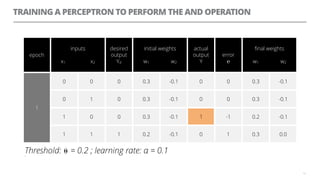

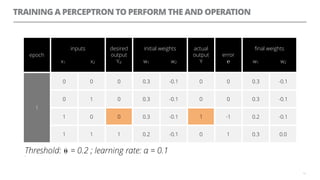

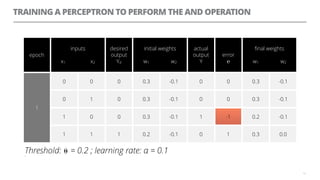

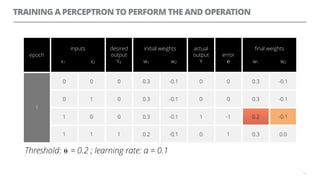

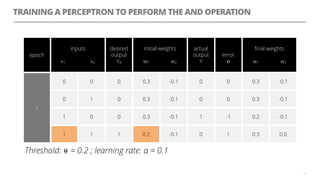

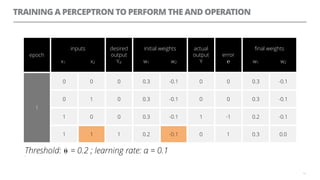

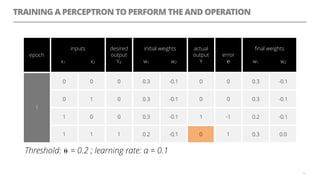

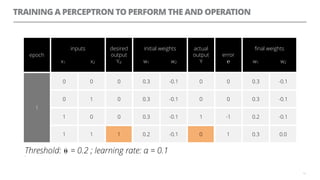

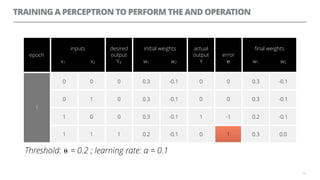

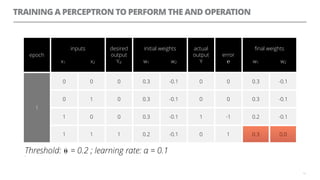

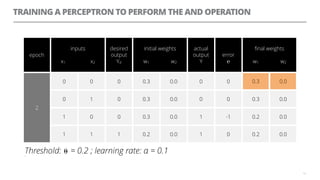

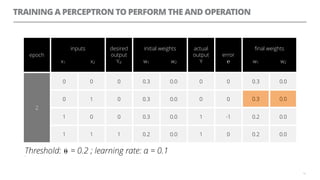

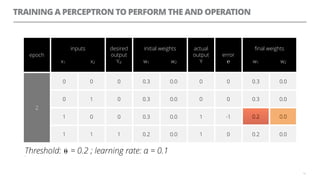

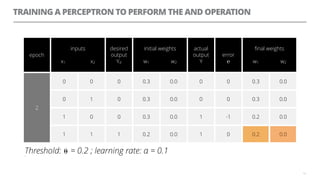

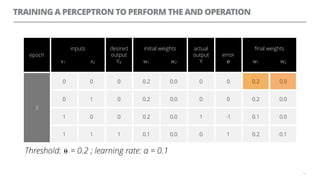

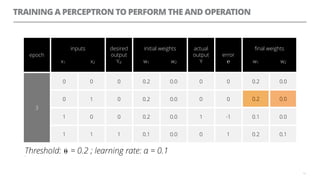

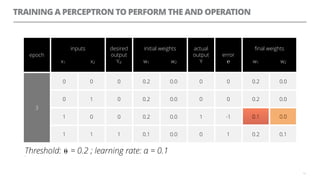

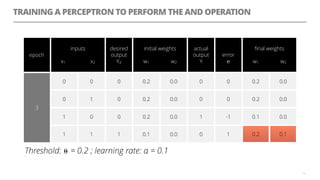

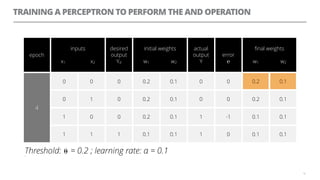

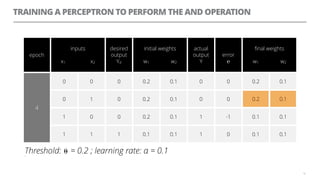

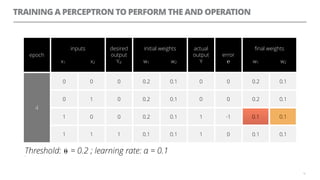

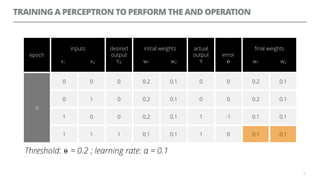

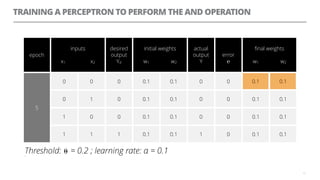

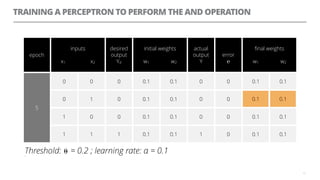

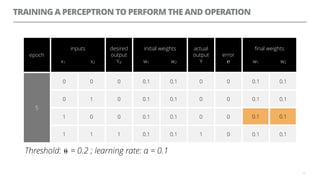

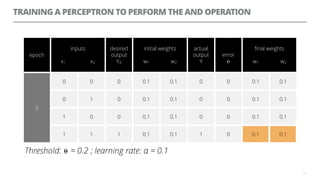

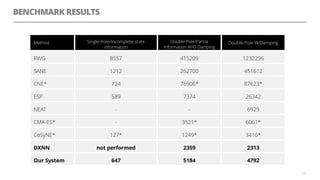

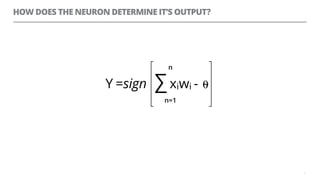

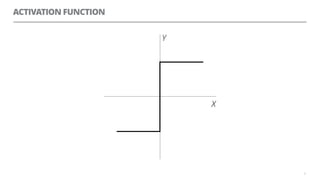

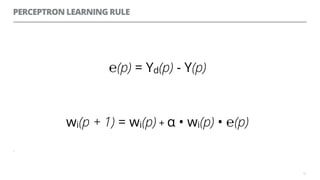

The document discusses artificial neural networks and how they can be trained with the perceptron learning algorithm. As an example, it trains a perceptron to compute the logical AND of two inputs. Over multiple epochs, the weights are adjusted according to the perceptron's output errors until it outputs 1 only when both inputs, x1 and x2, are 1.
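The error-driven adjustment can be written out with the standard perceptron equations. This is an assumption: the slides may use different symbols. Here $x_i$ are the inputs, $w_i$ the weights, $\theta$ the threshold, $\alpha$ a learning rate, $Y_d$ the desired output and $p$ the iteration. The threshold is treated as a weight on a constant input of $-1$, so it is updated with the opposite sign.

$$
X = \sum_{i=1}^{n} x_i w_i - \theta, \qquad
Y = \begin{cases} 1, & X \ge 0 \\ 0, & X < 0 \end{cases}
$$

$$
e(p) = Y_d(p) - Y(p), \qquad
w_i(p+1) = w_i(p) + \alpha\, x_i(p)\, e(p), \qquad
\theta(p+1) = \theta(p) - \alpha\, e(p)
$$

The following single AND training step uses hypothetical starting values ($w_1 = 0.3$, $w_2 = -0.1$, $\theta = 0.2$, $\alpha = 0.1$) and the input $(x_1, x_2) = (1, 0)$, for which $Y_d = 0$:

$$
\begin{aligned}
X &= 1 \cdot 0.3 + 0 \cdot (-0.1) - 0.2 = 0.1 \;\Rightarrow\; Y = 1, \quad e = 0 - 1 = -1 \\
w_1 &= 0.3 + 0.1 \cdot 1 \cdot (-1) = 0.2 \\
w_2 &= -0.1 + 0.1 \cdot 0 \cdot (-1) = -0.1 \\
\theta &= 0.2 - 0.1 \cdot (-1) = 0.3
\end{aligned}
$$

Presenting $(1, 0)$ again now gives $X = 0.2 - 0.3 = -0.1 < 0$, so $Y = 0$ as desired. Only the weight on the active input changed, because $x_2 = 0$ zeroes its update.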

![Perceptron training algorithm (flowchart): start; set weights and threshold to random values in [-0.5, 0.5]; activate the perceptron; weight training; weights converged? no: repeat; yes: stop](https://image.slidesharecdn.com/neuroevolution-160127090627/85/A-hitchhiker-s-guide-to-neuroevolution-in-Erlang-12-320.jpg)
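Below is a minimal Python sketch of the flowchart's loop, applied to the AND example. It is not the deck's own implementation, which targets Erlang. Several details are assumptions: the names `train_perceptron`, `predict` and `AND_SAMPLES`, the learning rate `alpha=0.1`, the step activation firing at a net input of 0, and the convergence test ("a full epoch with no errors").

```python
import random

# Truth table for logical AND: ((x1, x2), desired output).
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def step(net: float) -> int:
    """Hard-limiter activation: 1 if the net input is non-negative, else 0."""
    return 1 if net >= 0 else 0


def predict(weights, theta, inputs) -> int:
    """Activate the perceptron: weighted sum minus threshold, then step."""
    return step(sum(x * w for x, w in zip(inputs, weights)) - theta)


def train_perceptron(samples, alpha=0.1, max_epochs=1000, seed=0):
    """Hypothetical helper following the flowchart; returns (weights, theta, epochs)."""
    rng = random.Random(seed)
    n_inputs = len(samples[0][0])
    # Set weights and threshold to random values in [-0.5, 0.5].
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    theta = rng.uniform(-0.5, 0.5)

    for epoch in range(1, max_epochs + 1):
        errors = 0
        for inputs, desired in samples:
            e = desired - predict(weights, theta, inputs)
            if e != 0:
                errors += 1
                # Weight training: w_i += alpha * x_i * e.
                weights = [w + alpha * x * e for w, x in zip(weights, inputs)]
                # Threshold behaves like a weight on a constant input of -1.
                theta -= alpha * e
        # Weights converged? Stop after an epoch with no errors.
        if errors == 0:
            return weights, theta, epoch
    raise RuntimeError("perceptron did not converge within max_epochs")


if __name__ == "__main__":
    w, theta, epochs = train_perceptron(AND_SAMPLES)
    print(f"converged after {epochs} epochs: w={w}, theta={theta:.3f}")
    for inputs, desired in AND_SAMPLES:
        print(inputs, "->", predict(w, theta, inputs), "(desired", desired, ")")
```

Because AND is linearly separable, the perceptron convergence theorem guarantees this loop stops after finitely many updates. The `max_epochs` cap only guards against non-separable inputs such as XOR, which a single perceptron cannot learn.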