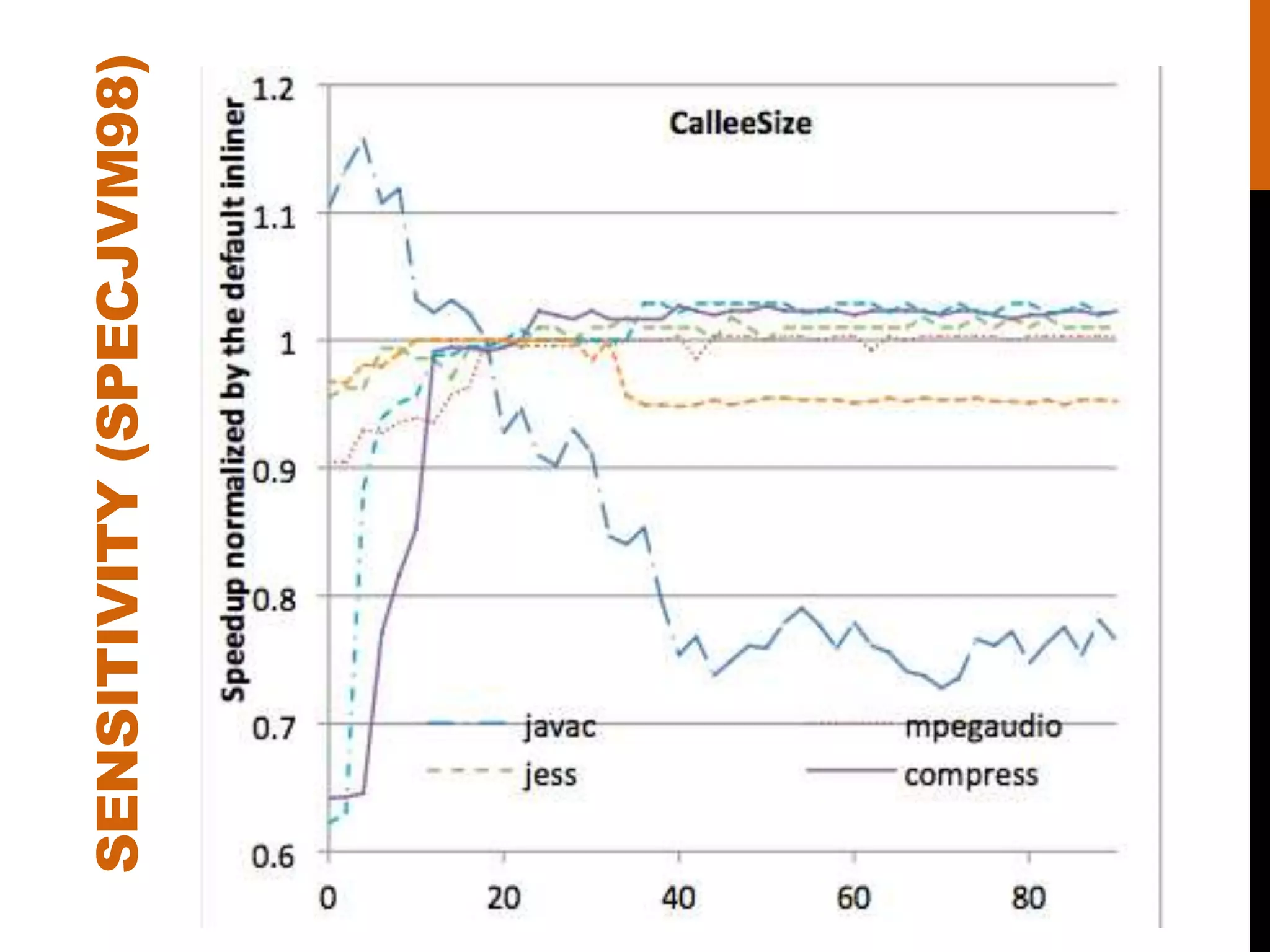

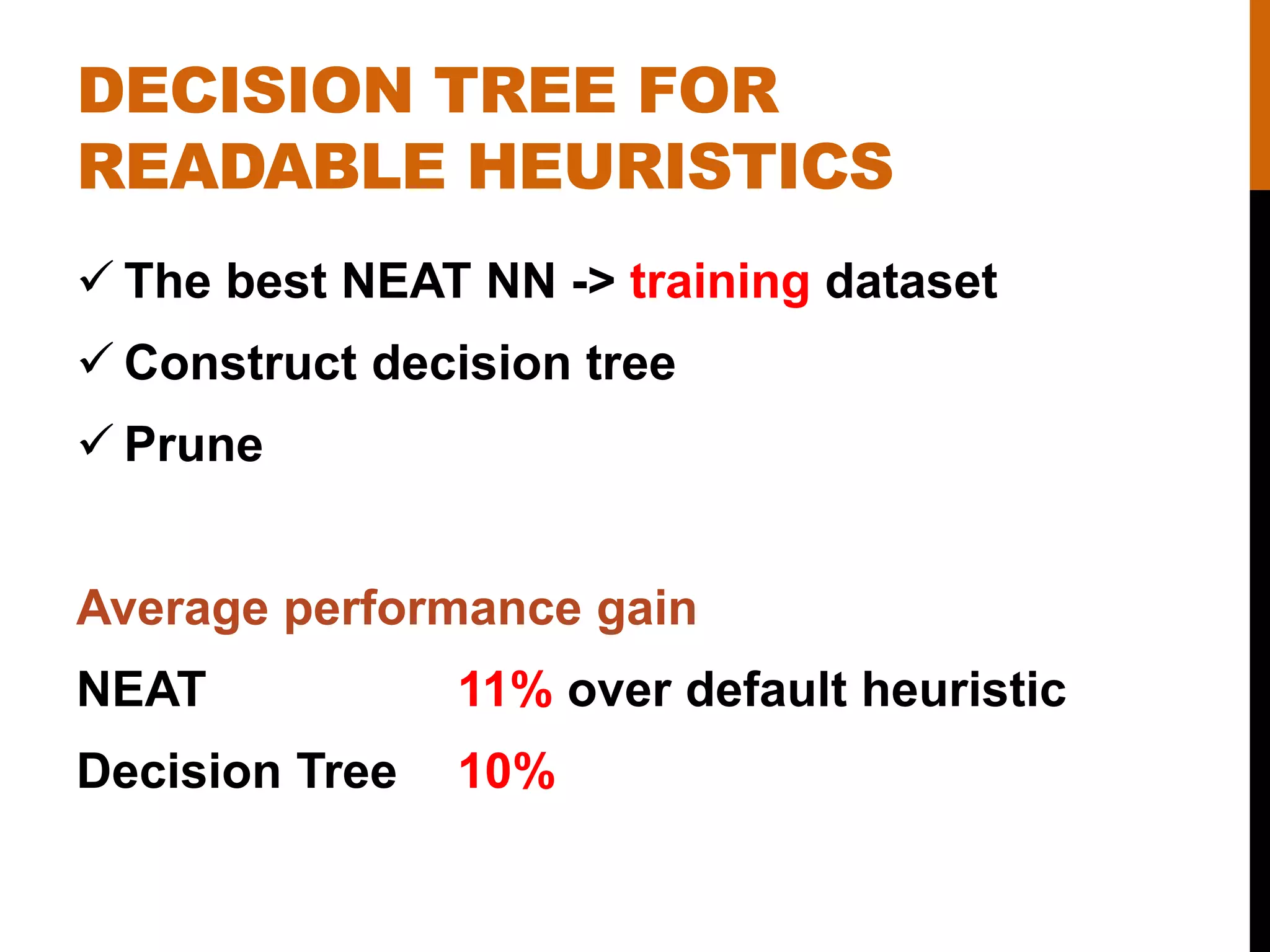

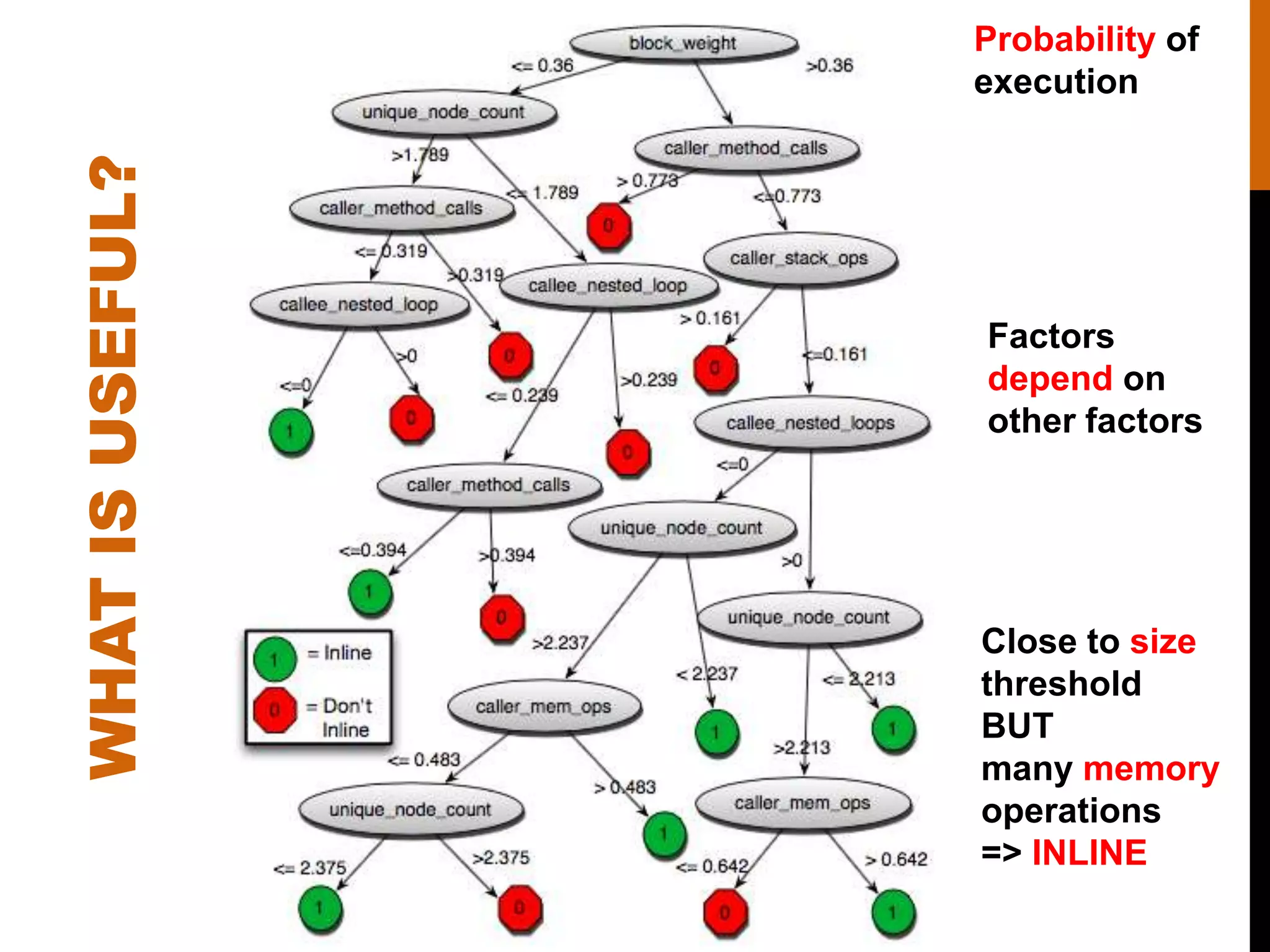

This paper proposes using machine learning to automatically construct inlining heuristics that outperform default heuristics. The researchers used NeuroEvolution of Augmenting Topologies (NEAT) to evolve neural networks that decide, from call-site features, whether a function should be inlined. Their best NEAT-generated heuristic achieved an 11% average speedup over the Java HotSpot VM heuristic. They also generated a decision tree heuristic that explains the NEAT heuristic in readable form and achieved a 10% speedup. The machine-learned heuristics outperformed the default heuristics by taking a wider range of inlining factors into account.
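
To make the idea of a learned inlining heuristic concrete, here is a minimal sketch of a decision function that maps call-site features to an inline/no-inline choice. It is an illustration only, not the paper's method: the feature names (`callee_size`, `call_depth`, `call_count`), the scaling constants, the weights, and the single-neuron structure are all assumptions made for this example. A network evolved by NEAT would have a topology and weights found by the search rather than written by hand.

```python
import math
from dataclasses import dataclass


@dataclass
class CallSite:
    # All three features are hypothetical; the summary does not list
    # the features the paper actually uses.
    callee_size: int   # size of the callee, e.g. in bytecodes
    call_depth: int    # how deep we already are in the inlining chain
    call_count: int    # profiled number of invocations of this call site


def sigmoid(x: float) -> float:
    """Squash a raw score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def should_inline(site: CallSite, weights, bias: float = 0.0,
                  threshold: float = 0.5) -> bool:
    """Single-neuron scorer: inline when the activation exceeds the threshold.

    The scaling constants below are arbitrary choices that keep the
    inputs in a similar numeric range.
    """
    features = [
        site.callee_size / 100.0,
        site.call_depth / 10.0,
        math.log1p(site.call_count) / 10.0,
    ]
    raw = sum(w * f for w, f in zip(weights, features)) + bias
    return sigmoid(raw) > threshold


# Hand-picked illustrative weights: penalize large callees and deep
# inlining chains, reward frequently executed call sites.
EXAMPLE_WEIGHTS = [-2.0, -1.0, 3.0]

hot_small = CallSite(callee_size=20, call_depth=1, call_count=10_000)
cold_large = CallSite(callee_size=400, call_depth=5, call_count=3)

print(should_inline(hot_small, EXAMPLE_WEIGHTS))
print(should_inline(cold_large, EXAMPLE_WEIGHTS))
```

In a search-based setup such as the one the summary describes, the weights (and, for NEAT, the network structure itself) would be candidates scored by measured program performance instead of fixed constants like `EXAMPLE_WEIGHTS`.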