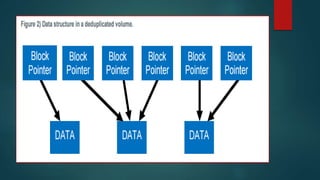

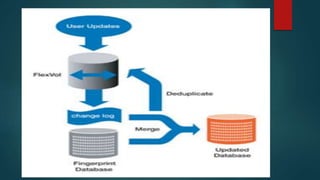

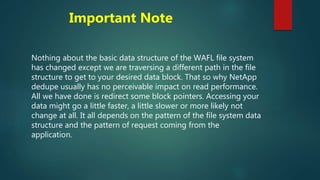

NetApp deduplication removes duplicate blocks within a flexible volume to reduce the storage capacity the volume needs. It operates on 4 KB blocks in the active file system and can be configured to run automatically or manually. Each block is identified by a fingerprint, a unique digital signature for that block. During a deduplication run, fingerprints are compared, and each duplicate block is replaced with a pointer to the existing data block, which frees the space the duplicate occupied. The deduplication metadata itself consumes space: up to 4% additional space may be needed in a volume and up to 3% in an aggregate.
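The fingerprint-and-pointer idea described above can be illustrated with a small, self-contained sketch. This is not NetApp's implementation: the SHA-256 fingerprint, the in-memory `store` and `index` structures, the byte-for-byte check before sharing a block, and the function names `deduplicate` and `restore` are all assumptions made for illustration. Only the 4 KB block size and the "replace duplicates with a pointer" behavior come from the text.

```python
import hashlib

BLOCK_SIZE = 4096  # 4 KB blocks, as stated in the text


def deduplicate(data: bytes, block_size: int = BLOCK_SIZE):
    """Split data into fixed-size blocks and keep one copy of each distinct block.

    Returns (store, pointers): store holds the unique blocks, and pointers
    holds, for every logical block, the position in store of its contents.
    """
    store = []     # unique physical blocks
    index = {}     # fingerprint -> position in store (hypothetical structure)
    pointers = []  # one entry per logical block

    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        # Assumption: SHA-256 stands in for whatever fingerprint the real system uses.
        fingerprint = hashlib.sha256(block).digest()
        pos = index.get(fingerprint)
        # Share the block only if the stored copy really matches byte for byte
        # (a safety step chosen for this sketch).
        if pos is not None and store[pos] == block:
            pointers.append(pos)
        else:
            store.append(block)
            index[fingerprint] = len(store) - 1
            pointers.append(len(store) - 1)
    return store, pointers


def restore(store, pointers) -> bytes:
    """Rebuild the original data by following each pointer."""
    return b"".join(store[p] for p in pointers)


if __name__ == "__main__":
    # Three logical blocks, the first and third identical.
    data = b"A" * BLOCK_SIZE + b"B" * BLOCK_SIZE + b"A" * BLOCK_SIZE
    store, pointers = deduplicate(data)
    print(len(store), pointers)
    print(restore(store, pointers) == data)
```

In this sketch the third block is never stored a second time; its entry in `pointers` simply refers to the copy already held in `store`, which is the space saving the paragraph describes.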