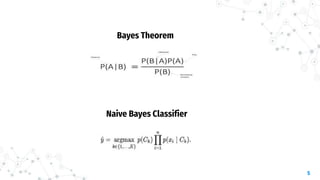

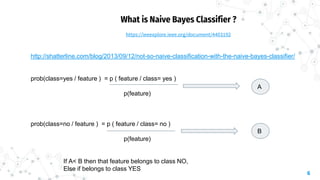

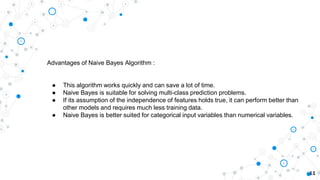

This document provides an overview of the naive Bayes algorithm. It introduces naive Bayes classification and conditional probability, then explains Bayes' theorem and how the algorithm uses conditional probabilities to classify data. Several examples are provided. The document discusses the advantages of naive Bayes, including its ability to handle multiple classes and its need for less training data, and outlines the limitations that stem from its independence assumption.
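
As a rough illustration of the idea summarized above, the following minimal sketch classifies categorical data by combining a class prior with per-feature conditional probabilities, treating the features as independent given the class. It is not taken from the document: the function names `train` and `predict`, the toy weather-style data, and the use of Laplace smoothing (the `alpha` parameter) are all assumptions made for this example.

```python
from collections import Counter, defaultdict
import math


def train(rows, labels):
    """Count class frequencies and per-class feature-value frequencies."""
    class_counts = Counter(labels)
    # value_counts[(feature_index, label)][value] -> number of occurrences
    value_counts = defaultdict(Counter)
    # vocab[feature_index] -> set of distinct values seen for that feature
    vocab = defaultdict(set)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(i, label)][value] += 1
            vocab[i].add(value)
    return class_counts, value_counts, vocab


def predict(model, row, alpha=1.0):
    """Return the label with the highest log-posterior score for `row`."""
    class_counts, value_counts, vocab = model
    total = sum(class_counts.values())
    scores = {}
    for label, n in class_counts.items():
        # Log prior: log P(class)
        score = math.log(n / total)
        for i, value in enumerate(row):
            # Log likelihood: log P(feature_i = value | class),
            # with Laplace smoothing (an assumption of this sketch)
            count = value_counts[(i, label)][value]
            score += math.log((count + alpha) / (n + alpha * len(vocab[i])))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy data: (outlook, temperature) -> play outside?
rows = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"), ("rainy", "cool")]
labels = ["no", "no", "yes", "yes"]
model = train(rows, labels)

print(predict(model, ("rainy", "cool")))
print(predict(model, ("sunny", "hot")))
```

Working in log space avoids numerical underflow when many small probabilities are multiplied, and smoothing keeps a feature value that was never seen with a given class from forcing that class's probability to zero.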