Introduction

Naive Bayes is a family of probabilistic algorithms based on Bayes' Theorem. It’s particularly suited for classification tasks and is widely used in natural language processing (NLP), spam detection, sentiment analysis, and recommendation systems.

The term “naive” comes from the assumption that features are conditionally independent given the class label, which simplifies the computation but is rarely true in real-world data. Despite this simplification, Naive Bayes often performs surprisingly well.

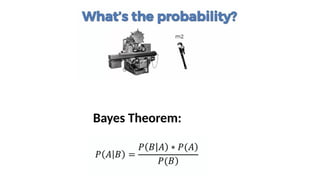

Bayes’ Theorem

At the core of Naive Bayes lies Bayes' Theorem:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

In classification terms:

$P(A \mid B)$: Posterior probability of class A given data B.

$P(B \mid A)$: Likelihood of data B given class A.

$P(A)$: Prior probability of class A.

$P(B)$: Probability of data B.
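
As a quick numeric illustration of the theorem, the sketch below computes a posterior for a two-class case, expanding $P(B)$ with the law of total probability. The function name `posterior` and all the numbers (spam rate, word frequencies) are made up for illustration.

```python
def posterior(prior_a: float, p_b_given_a: float, p_b_given_not_a: float) -> float:
    """Return P(A | B) using Bayes' Theorem.

    P(B) is expanded as P(B | A) P(A) + P(B | not A) P(not A).
    """
    evidence = p_b_given_a * prior_a + p_b_given_not_a * (1.0 - prior_a)
    return p_b_given_a * prior_a / evidence


# Hypothetical numbers: 20% of mail is spam; the word "free" appears
# in 60% of spam messages and in 5% of non-spam messages.
p_spam_given_free = posterior(prior_a=0.2, p_b_given_a=0.6, p_b_given_not_a=0.05)
print(round(p_spam_given_free, 3))  # 0.75
```

With these assumed inputs, seeing the word raises the probability of spam from the 20% prior to 75%.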

Naive Bayes Classifier: Principle

Given a set of features $X = \{x_1, x_2, \dots, x_n\}$ and a class variable $C$, Naive Bayes calculates the probability of each class $C_i$ given the features and selects the class with the highest probability:

$$\hat{C} = \arg\max_{C_i} P(C_i) \prod_{j=1}^{n} P(x_j \mid C_i)$$

This formula assumes that all features $x_j$ are independent given the class $C_i$.
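
The decision rule can be sketched in a few lines of Python. This is a minimal illustration, not a full implementation: the `predict` function, the class names, and the probability tables are all hypothetical. It sums log-probabilities instead of multiplying raw probabilities, which picks the same class (the logarithm is monotonic) while avoiding numerical underflow when there are many features.

```python
import math


def predict(priors, likelihoods, features):
    """Return the class maximizing log P(C_i) + sum_j log P(x_j | C_i)."""
    best_class, best_score = None, -math.inf
    for cls, prior in priors.items():
        score = math.log(prior)
        for x in features:
            score += math.log(likelihoods[cls][x])
        if score > best_score:
            best_class, best_score = cls, score
    return best_class


# Hypothetical, hand-picked probabilities for illustration only.
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {
    "spam": {"free": 0.30, "meeting": 0.05},
    "ham": {"free": 0.02, "meeting": 0.20},
}

print(predict(priors, likelihoods, ["free", "free"]))  # spam
print(predict(priors, likelihoods, ["meeting"]))       # ham
```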

Types of Naive Bayes Classifiers

1. Gaussian Naive Bayes

Used when features are continuous and follow a Gaussian distribution.

$$P(x_i \mid y) = \frac{1}{\sqrt{2\pi\sigma_y^2}} \exp\left(-\frac{(x_i - \mu_y)^2}{2\sigma_y^2}\right)$$
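
For a single feature, the Gaussian likelihood is just the normal density evaluated at the observed value, using the mean and variance estimated for that class. A minimal sketch follows; the function name and the example mean and variance are assumptions chosen for illustration.

```python
import math


def gaussian_likelihood(x: float, mean: float, variance: float) -> float:
    """Normal density of x for a class with the given mean and variance."""
    coefficient = 1.0 / math.sqrt(2.0 * math.pi * variance)
    exponent = -((x - mean) ** 2) / (2.0 * variance)
    return coefficient * math.exp(exponent)


# Hypothetical class statistics: mean 170, variance 25 (standard deviation 5).
density_at_mean = gaussian_likelihood(170.0, mean=170.0, variance=25.0)
density_off_mean = gaussian_likelihood(180.0, mean=170.0, variance=25.0)
print(density_at_mean > density_off_mean)  # True
```

Values closer to the class mean get a higher density, so they contribute more to that class's score.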

2. Multinomial Naive Bayes

Used for discrete features such as word counts in a document (common in text classification).

3. Bernoulli Naive Bayes

Used when features are binary (presence/absence of a feature, e.g., word in a document).
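
The multinomial and Bernoulli variants differ in how the same document is encoded as features. The sketch below shows both encodings side by side; the helper names, the vocabulary, and the sample text are illustrative assumptions.

```python
from collections import Counter


def count_features(tokens, vocabulary):
    """Multinomial-style encoding: how many times each vocabulary word occurs."""
    counts = Counter(tokens)
    return [counts[word] for word in vocabulary]


def binary_features(tokens, vocabulary):
    """Bernoulli-style encoding: 1 if the word is present, 0 otherwise."""
    present = set(tokens)
    return [1 if word in present else 0 for word in vocabulary]


vocabulary = ["free", "offer", "meeting"]
tokens = "free free offer today".split()

print(count_features(tokens, vocabulary))   # [2, 1, 0]
print(binary_features(tokens, vocabulary))  # [1, 1, 0]
```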

Applications of Naive Bayes

Text Classification: Spam filtering, sentiment analysis.

Medical Diagnosis: Predicting diseases based on symptoms.

Document Categorization: Grouping articles into categories.

Recommendation Systems: Predicting user preferences.

Credit Scoring: Classifying customers as risky or not.

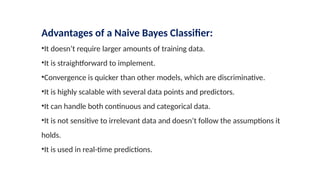

Advantages of Naive Bayes

Simple and easy to implement.

Requires relatively little training data to estimate its parameters.

Handles both continuous and discrete data.

Works well with high-dimensional data.

Robust to irrelevant features.

Disadvantages

Assumes feature independence.

Performance may degrade if features are highly correlated.

Assigns zero probability to any feature value never seen with a class during training, which zeroes out the whole product (handled using Laplace smoothing).

Laplace Smoothing

To avoid assigning zero probability to unseen features:

$$P(x_i \mid y) = \frac{\text{count}(x_i, y) + 1}{\text{count}(y) + |X|}$$

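Where $|X|$ is the total number of unique features (for text data, the vocabulary size), $\text{count}(x_i, y)$ is the number of times feature $x_i$ occurs in training examples of class $y$, and $\text{count}(y)$ is the total count of all features in class $y$.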
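
A minimal sketch of add-one smoothing for word counts is shown below. It assumes that count(y) is the total number of feature occurrences in class y; the function name and the counts are hypothetical.

```python
from collections import Counter


def laplace_likelihood(feature, class_feature_counts, vocabulary_size):
    """Add-one smoothed estimate of P(feature | class)."""
    count_xy = class_feature_counts[feature]      # Counter gives 0 for unseen features
    count_y = sum(class_feature_counts.values())  # total feature occurrences in the class
    return (count_xy + 1) / (count_y + vocabulary_size)


# Hypothetical word counts observed in the "spam" class.
vocabulary = ["free", "offer", "meeting"]
spam_counts = Counter({"free": 3, "offer": 1})

p_meeting = laplace_likelihood("meeting", spam_counts, len(vocabulary))
print(p_meeting)  # 1/7, nonzero even though "meeting" never appeared in spam
```

The smoothed estimates over the whole vocabulary still sum to 1 (here 4/7 + 2/7 + 1/7).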

Model Training and Prediction

Training Phase:

Compute prior probabilities $P(C)$

Compute conditional probabilities

�

(