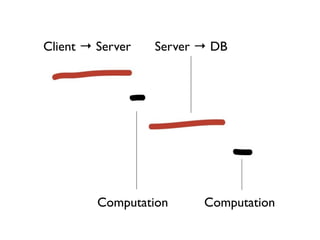

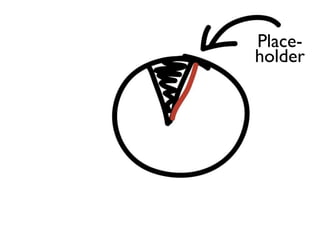

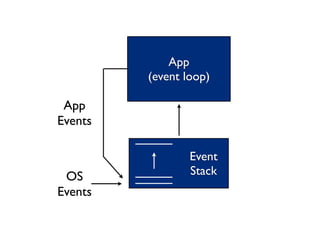

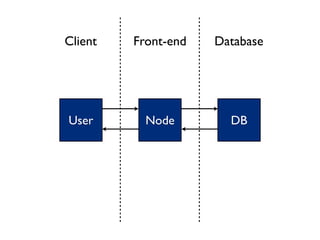

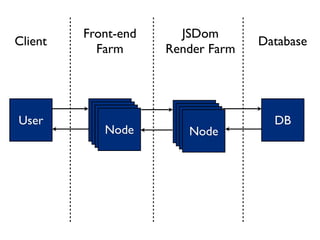

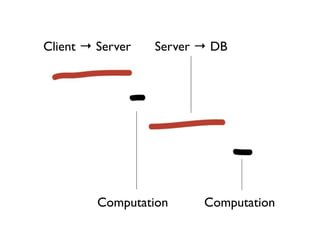

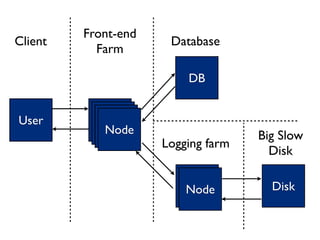

1. The document discusses multi-tiered Node.js architectures for improving scalability and efficiency. It suggests moving work that is not client-facing, such as logging and background processing, to separate "farms" (clusters of processes) so that this work does not block the main event loop.
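Below is a minimal sketch of the front-process side of a logging farm. It assumes a hypothetical `LogForwarder` class and a hypothetical `Transport` function that ships batches to the farm; the slides do not specify either. The request path only appends to an in-memory buffer, and entries are shipped in batches, either when the batch fills up or on a later event-loop turn via `setImmediate`.

```typescript
import assert from "node:assert";

// Hypothetical transport to the logging farm. In a real deployment this might
// write to a socket, a message queue, or an HTTP endpoint, and it should hand
// the batch off asynchronously rather than doing blocking I/O.
type Transport = (batch: readonly string[]) => void;

// Hypothetical helper: buffers log entries in the client-facing process and
// forwards them in batches so request handlers never wait on log I/O.
class LogForwarder {
  private buffer: string[] = [];
  private scheduled = false;
  private readonly transport: Transport;
  private readonly maxBatch: number;

  constructor(transport: Transport, maxBatch = 100) {
    assert(Number.isInteger(maxBatch) && maxBatch > 0, "maxBatch must be a positive integer");
    this.transport = transport;
    this.maxBatch = maxBatch;
  }

  // Called on the request path: an O(1) append with no I/O.
  log(entry: string): void {
    this.buffer.push(entry);
    if (this.buffer.length >= this.maxBatch) {
      this.flush();
    } else if (!this.scheduled) {
      // Defer shipping until the current event-loop turn has finished.
      this.scheduled = true;
      setImmediate(() => {
        this.scheduled = false;
        this.flush();
      });
    }
  }

  // Hands everything buffered so far to the transport in one batch.
  flush(): void {
    if (this.buffer.length === 0) return;
    const batch = this.buffer;
    this.buffer = [];
    this.transport(batch);
  }
}
```

For example, `new LogForwarder((batch) => socket.write(batch.join("\n") + "\n"), 50)` would ship up to 50 entries per write, assuming `socket` is a connected `net.Socket` to the farm. Batching trades a small delay in log delivery for fewer, cheaper writes on the main process.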

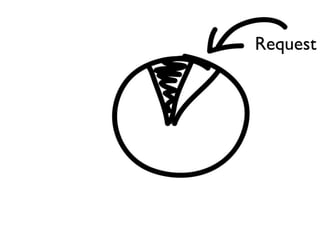

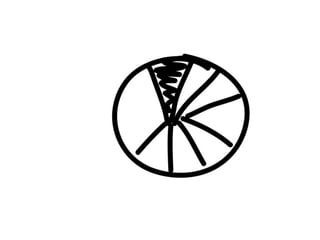

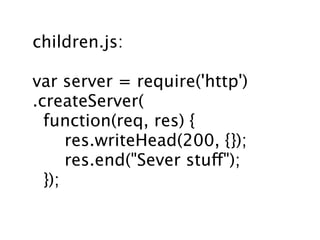

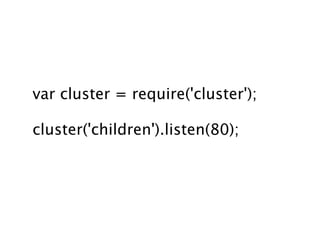

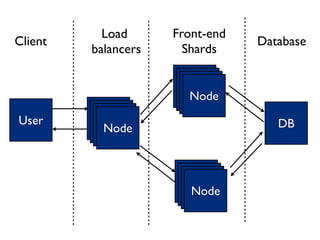

2. Another approach is to use front-end clusters, or "shards", that distribute client requests across multiple Node processes. Because each process runs its own event loop, requests can be handled in parallel across CPU cores, which improves response times.

3. The key goals are to minimize client response times by keeping the main event loop available, while maximizing server resource efficiency by moving heavy processing tasks out of the main process.
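The front-end sharding in point 2 can be sketched as two routing policies. Node's built-in `cluster` module already distributes incoming connections among forked workers (round-robin by default on most platforms other than Windows). When a front-end tier does its own dispatching, it might use sticky, key-based routing so one client always reaches the same process, or plain round-robin for stateless requests. The names `shardFor` and `RoundRobin` are hypothetical, and the FNV-1a hash is an illustrative choice, not something the slides prescribe.

```typescript
import assert from "node:assert";

// 32-bit FNV-1a hash: cheap, deterministic, and identical in every process.
function fnv1a(key: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < key.length; i++) {
    hash ^= key.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193);
  }
  return hash >>> 0; // reinterpret as an unsigned 32-bit integer
}

// Sticky routing: the same key (e.g. a user or session id) always maps to the
// same shard, so per-client state can live in a single process.
function shardFor(key: string, shardCount: number): number {
  assert(Number.isInteger(shardCount) && shardCount > 0, "shardCount must be a positive integer");
  return fnv1a(key) % shardCount;
}

// Stateless routing: hand each request to the next process in turn.
class RoundRobin<T> {
  private readonly targets: readonly T[];
  private next = 0;

  constructor(targets: readonly T[]) {
    assert(targets.length > 0, "need at least one target");
    this.targets = targets;
  }

  pick(): T {
    const target = this.targets[this.next] as T;
    this.next = (this.next + 1) % this.targets.length;
    return target;
  }
}
```

Plain modulo hashing remaps most keys when the shard count changes. If shards are added or removed often, consistent hashing is the usual alternative.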