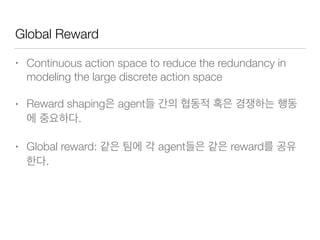

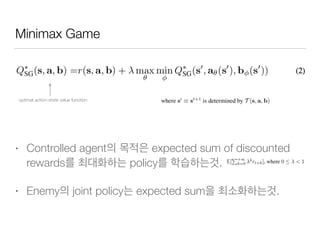

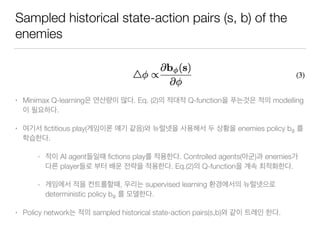

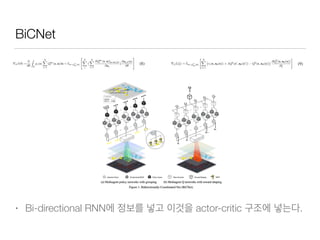

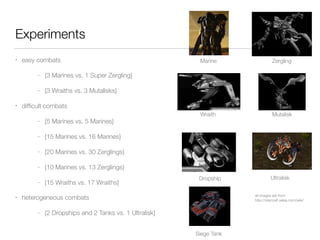

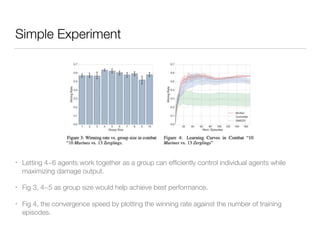

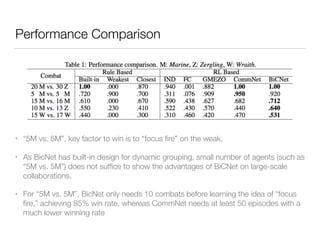

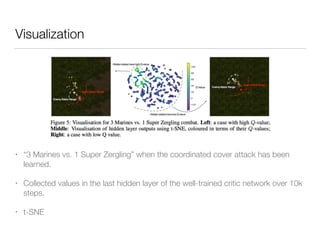

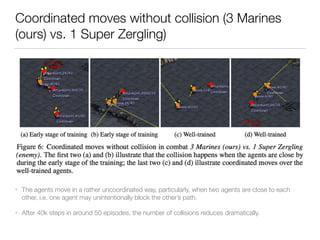

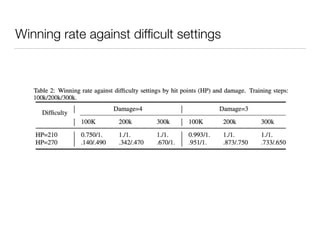

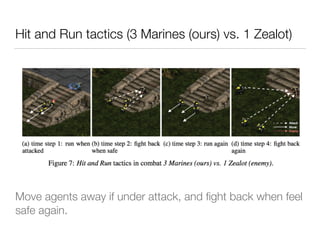

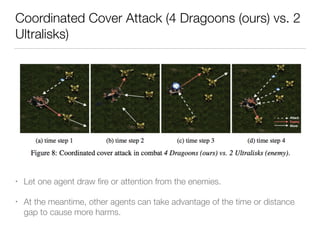

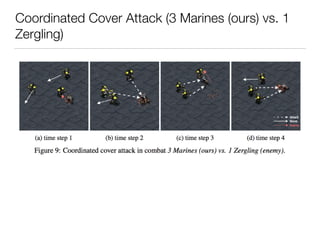

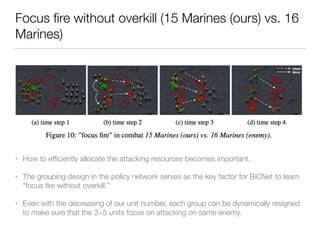

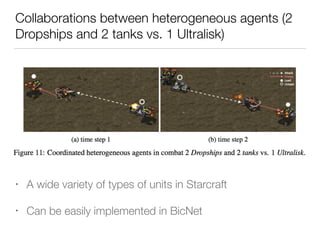

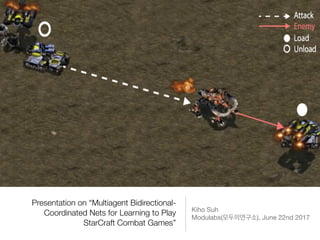

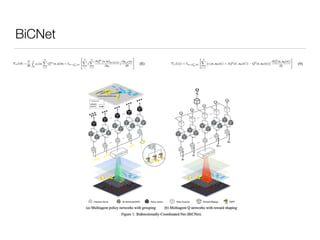

This presentation summarizes a paper on multiagent bidirectional-coordinated networks (BiCNet), an approach for training AI agents to play combat games in StarCraft. BiCNet uses bidirectional RNNs so that agents can communicate and coordinate their actions. In the reported experiments, BiCNet agents outperform independent agents and other cooperative agents across several StarCraft combat scenarios, and they develop strategies such as focus firing and coordinated attacks. The presentation also covers visualizations of agent coordination and areas for further investigation.
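To make the idea of coordinating through a bidirectional RNN concrete, here is a minimal sketch in plain Python. It is not the architecture from the paper: the scalar observations, the shared weights `w_in` and `w_rec`, and the function name `bidirectional_pass` are all assumptions chosen for illustration. It shows only the core property, namely that a forward and a backward recurrent sweep over the agent sequence let every agent's output depend on every other agent's input.

```python
import math
from typing import List


def bidirectional_pass(obs: List[float], w_in: float = 0.5, w_rec: float = 0.8) -> List[float]:
    """Toy bidirectional recurrence over a sequence of agents.

    obs[i] is a hypothetical scalar observation for agent i.
    w_in and w_rec are illustrative shared weights, not learned values.
    """
    n = len(obs)
    fwd = [0.0] * n
    bwd = [0.0] * n

    # Forward sweep: agent i receives a hidden state summarizing agents 0..i-1.
    h = 0.0
    for i in range(n):
        h = math.tanh(w_in * obs[i] + w_rec * h)
        fwd[i] = h

    # Backward sweep: agent i receives a hidden state summarizing agents i+1..n-1.
    h = 0.0
    for i in reversed(range(n)):
        h = math.tanh(w_in * obs[i] + w_rec * h)
        bwd[i] = h

    # Combine both directions into one per-agent output.
    return [f + b for f, b in zip(fwd, bwd)]


base = bidirectional_pass([0.1, 0.2, 0.3, 0.4])
changed = bidirectional_pass([0.1, 0.2, 0.3, 0.9])
# Changing only the last agent's observation also changes the first agent's output.
print(abs(base[0] - changed[0]) > 1e-6)
```

In a unidirectional recurrence the first agent would never see information from later agents; the backward sweep is what closes that gap in this sketch.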

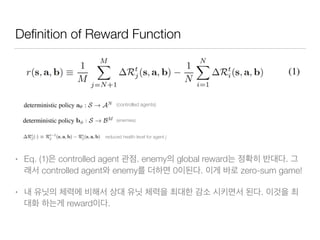

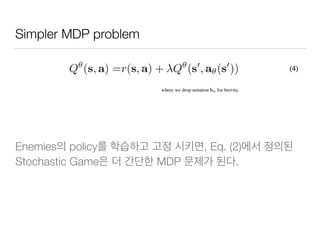

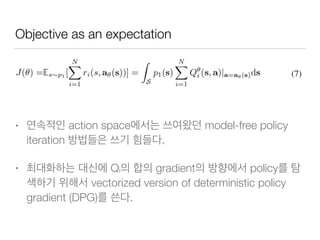

![Stochastic Game of N agents and M opponents](https://image.slidesharecdn.com/multiagentbidirectional-coordinatednetsforlearningtoplaystarcraftcombatgames-170623022918/85/Multiagent-Bidirectional-Coordinated-Nets-for-Learning-to-Play-StarCraft-Combat-Games-13-320.jpg)

Stochastic Game of N agents and M opponents

- $S$: agent state space
- $A_i$: action space of controller agent $i$, $i \in [1, N]$
- $B_j$: action space of enemy $j$, $j \in [1, M]$
- $T : S \times A^N \times B^M \to S$: deterministic environment transition function
- $R_i : S \times A^N \times B^M \to \mathbb{R}$: reward function of agent/enemy $i$, $i \in [1, N+M]$

\* agent( , ) action space .
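The stochastic game definition on this slide can be written down as a small data structure. The following is a minimal sketch, not code from the paper or the presentation: the class name `StochasticGame`, the two-number state encoding, and the toy transition and reward functions are all hypothetical. It only mirrors the signatures of the transition function T and the reward functions R_i for N controlled agents and M enemies.

```python
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

State = Tuple[int, int]   # hypothetical encoding: (total agent HP, total enemy HP)
Action = int              # hypothetical encoding: 1 = attack, 0 = idle


@dataclass(frozen=True)
class StochasticGame:
    n_agents: int    # N controlled agents
    n_enemies: int   # M enemies
    # T: maps (state, N agent actions, M enemy actions) to the next state.
    transition: Callable[[State, Sequence[Action], Sequence[Action]], State]
    # R_1..R_{N+M}: one reward per agent, then one per enemy.
    rewards: Callable[[State, Sequence[Action], Sequence[Action]], Tuple[float, ...]]

    def step(self, state: State, agent_actions: Sequence[Action],
             enemy_actions: Sequence[Action]) -> Tuple[State, Tuple[float, ...]]:
        if len(agent_actions) != self.n_agents or len(enemy_actions) != self.n_enemies:
            raise ValueError("expected one action per agent and per enemy")
        reward = self.rewards(state, agent_actions, enemy_actions)
        if len(reward) != self.n_agents + self.n_enemies:
            raise ValueError("expected N + M rewards")
        return self.transition(state, agent_actions, enemy_actions), reward


def toy_transition(state: State, a: Sequence[Action], b: Sequence[Action]) -> State:
    # Each attacking unit removes one hit point from the opposing side.
    agent_hp, enemy_hp = state
    return (max(agent_hp - sum(b), 0), max(enemy_hp - sum(a), 0))


def toy_rewards(state: State, a: Sequence[Action], b: Sequence[Action]) -> Tuple[float, ...]:
    # Agents share (attacks made - attacks received); enemies get the negative.
    diff = float(sum(a) - sum(b))
    return tuple([diff] * len(a) + [-diff] * len(b))


game = StochasticGame(n_agents=2, n_enemies=3,
                      transition=toy_transition, rewards=toy_rewards)
next_state, rewards = game.step((10, 10), [1, 1], [1, 0, 0])
print(next_state)  # (9, 8)
print(rewards)     # (1.0, 1.0, -1.0, -1.0, -1.0)
```

Because the transition is an ordinary function of the state and the joint action, calling `step` twice with the same arguments returns the same result, which matches the deterministic transition stated on the slide.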