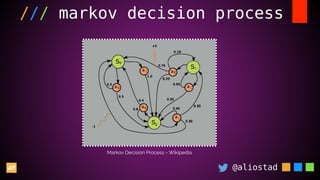

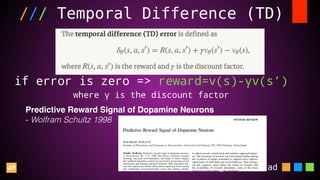

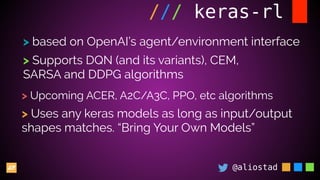

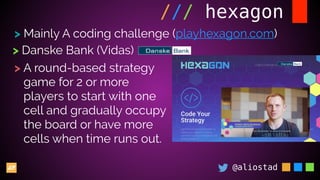

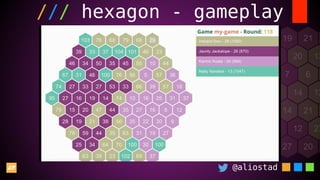

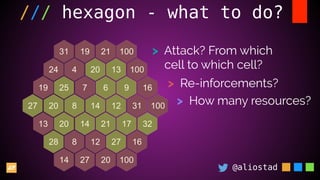

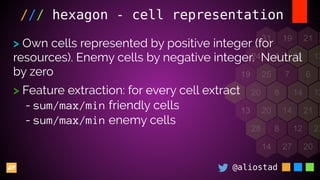

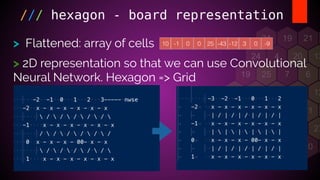

The document discusses the development of intelligent agents through deep reinforcement learning, highlighting historical milestones and foundational concepts. It covers various methods in deep learning, includes practical applications in games like Atari and Dota 2, and introduces a coding challenge called 'Hexagon' that combines strategy gaming and reinforcement learning models. Additionally, it outlines the structures and algorithms needed to implement these models effectively.

![@aliostad

/// DQN in keras-rl -2

INPUT

I N P U T

DENSE

DENSE

O U T P U T

[0.8, 0.9,…-0.3]

[0, 0, 1, 0]

DENSE

FLATTEN](https://image.slidesharecdn.com/autonomousagentswithdeepreinforcementlearning-oredev-181121135509/85/Autonomous-agents-with-deep-reinforcement-learning-Oredev-2018-29-320.jpg)

![@aliostad

/// hexagon - heuristics

self.attackPotential = self.resources *

math.sqrt(max(self.resources -

safeMin([n.resources for n in self.nonOwns]), 1)) /

math.log(sum([n.resources for n in self.enemies], 1)

+ 1, 5)

# how suitable is a cell for receiving boost

self.boostFactor = math.sqrt(sum((n.resources for n in self.enemies), 1)) *

safeMax([n.resources for n in self.enemies], 1) /

(self.resources + 1)

def getGivingBoostSuitability(self):

return (self.depth + 1) * math.sqrt(self.resources + 1) *

(1.7 if self.resources == 100 else 1)](https://image.slidesharecdn.com/autonomousagentswithdeepreinforcementlearning-oredev-181121135509/85/Autonomous-agents-with-deep-reinforcement-learning-Oredev-2018-41-320.jpg)

![@aliostad

/// hexagon - heuristics??

> Score functions are arbitrary: they do not necessarily

represent the underlying mechanics of the game

> No easy way to learn parameters and and

testing all combinations impossible

> When it does not work, it is hard to

know which parameter to tune.

Got to be a better way…

self.attackPotential = self.resources *

math.sqrt(max(self.resources -

safeMin([n.resources for n in self.nonOwns]), 1)) /

math.log(sum([n.resources for n in self.enemies], 1)

+ 1, 5)](https://image.slidesharecdn.com/autonomousagentswithdeepreinforcementlearning-oredev-181121135509/85/Autonomous-agents-with-deep-reinforcement-learning-Oredev-2018-43-320.jpg)

![@aliostad

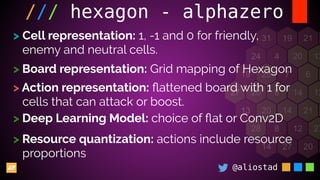

/// hexagon - alphazero

> default: python hexagon_alphazero train —radius 4

> model: python hexagon_alphazero train -m [f|cm|cam]

> default: python hexagon_alphazero test -p fm -q a

> quantization: python hexagon_alphazero -p fmz -a a -z 4

> rounds: python hexagon_alphazero -p cmz -q a -x 200

Training

Testing](https://image.slidesharecdn.com/autonomousagentswithdeepreinforcementlearning-oredev-181121135509/85/Autonomous-agents-with-deep-reinforcement-learning-Oredev-2018-51-320.jpg)