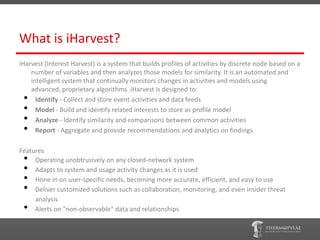

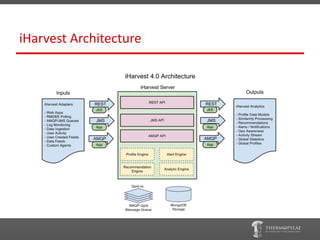

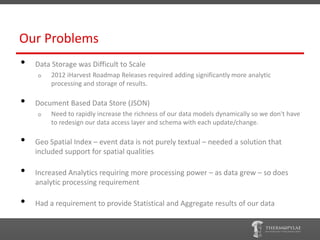

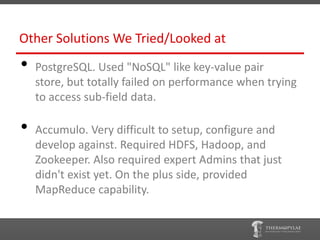

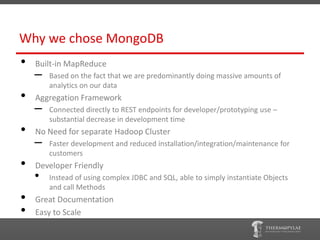

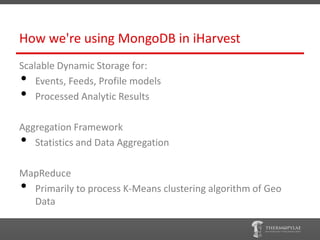

The document outlines the adoption of MongoDB in iharvest, a system developed to analyze and visualize spatio-behavioral data. MongoDB was selected for three main reasons: its scalability, its built-in MapReduce support, and its ease of use. Together these made dynamic storage and efficient analytics processing possible. The lessons learned stress two points: teams need to change their mindset when moving from relational to NoSQL databases, and they should make full use of MongoDB's own features for data processing and aggregation.
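To make the MapReduce idea concrete, here is a minimal plain-Python sketch of the pattern applied to documents that do not all share the same fields. It does not use MongoDB or its API, and it is not iharvest's actual code. The event records, field names (`zone`, `dwell_s`), and helper functions are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical spatio-behavioral events; the field names are illustrative only.
# Documents need not share a schema: some carry extra fields, some lack others.
events = [
    {"visitor": "a", "zone": "lobby", "dwell_s": 30},
    {"visitor": "b", "zone": "lobby", "dwell_s": 50, "device": "phone"},
    {"visitor": "a", "zone": "cafe", "dwell_s": 120},
    {"visitor": "c", "zone": "cafe"},  # no dwell time recorded
]


def map_event(doc):
    """Map step: emit one (key, value) pair per event that has a dwell time."""
    if "dwell_s" in doc:
        yield doc["zone"], {"count": 1, "total_s": doc["dwell_s"]}


def reduce_values(key, values):
    """Reduce step: combine partial results for one key.

    The output has the same shape as each input value, so the reducer
    can safely be applied again to its own results.
    """
    return {
        "count": sum(v["count"] for v in values),
        "total_s": sum(v["total_s"] for v in values),
    }


def map_reduce(docs, mapper, reducer):
    """Group mapped values by key, then reduce each group."""
    grouped = defaultdict(list)
    for doc in docs:
        for key, value in mapper(doc):
            grouped[key].append(value)
    return {key: reducer(key, values) for key, values in grouped.items()}


dwell_by_zone = map_reduce(events, map_event, reduce_values)
```

Under these assumptions, `dwell_by_zone` maps each zone to an event count and a total dwell time, and the event without a `dwell_s` field is simply skipped by the map step instead of causing an error.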