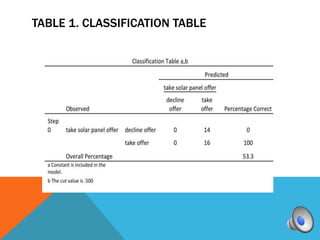

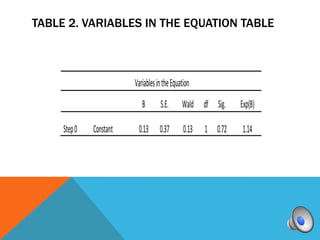

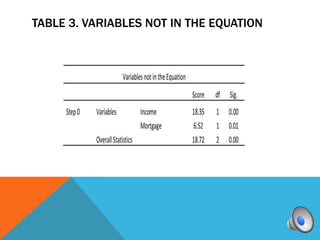

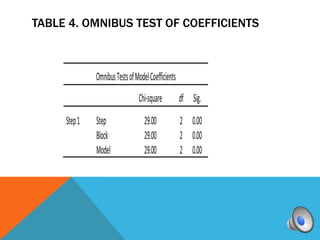

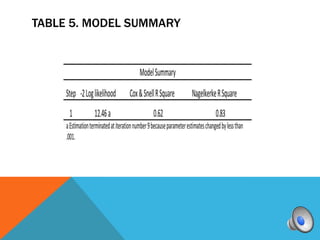

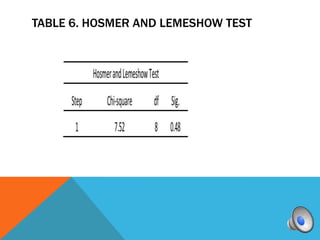

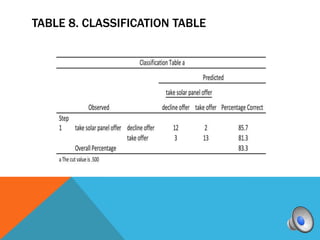

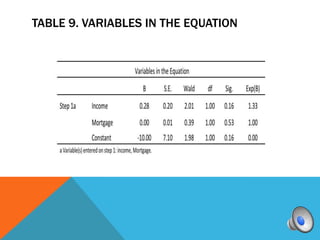

A logistic regression was conducted to predict whether homeowners would accept or decline a solar panel offer based on household income and monthly mortgage. The full model fit the data better than the constant-only model. Although neither predictor was individually significant, the model correctly classified 83.3% of cases overall. Higher income was associated with higher odds of accepting the offer, though this effect was not statistically significant on its own.
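
To make the quantities in this summary concrete, the following minimal sketch shows how a fitted logistic model yields predicted probabilities, an overall classification rate, and an odds ratio. Every number in it is hypothetical: the intercept, the coefficients, the five households, and the units (income and mortgage in thousands of dollars) are invented for illustration and are not taken from the analysis above. The helper names `predict_probability`, `classification_accuracy`, and `odds_ratio` are likewise assumptions, not part of any statistics package.

```python
import math


def predict_probability(intercept, coefs, features):
    """Logistic model: p = 1 / (1 + exp(-(b0 + sum(b_i * x_i))))."""
    logit = intercept + sum(b * x for b, x in zip(coefs, features))
    return 1.0 / (1.0 + math.exp(-logit))


def classification_accuracy(intercept, coefs, rows, outcomes, cutoff=0.5):
    """Share of cases whose predicted class (p >= cutoff) matches the outcome."""
    correct = 0
    for features, actual in zip(rows, outcomes):
        p = predict_probability(intercept, coefs, features)
        predicted = 1 if p >= cutoff else 0
        correct += predicted == actual
    return correct / len(outcomes)


def odds_ratio(coef):
    """Multiplicative change in the odds for a one-unit increase in a predictor."""
    return math.exp(coef)


# Hypothetical coefficients (NOT the values from the reported model).
intercept = -4.0
coefs = [0.08, -0.5]  # [income in $1000s, monthly mortgage in $1000s]

# Hypothetical households: [income, mortgage]; outcome 1 = accepted, 0 = declined.
rows = [[40, 1.0], [55, 1.2], [70, 1.5], [85, 1.1], [30, 0.8]]
outcomes = [0, 1, 1, 1, 0]

accuracy = classification_accuracy(intercept, coefs, rows, outcomes)
income_or = odds_ratio(coefs[0])

print(f"Overall correct classification: {accuracy:.1%}")
print(f"Odds ratio for income: {income_or:.3f}")
```

An odds ratio above 1 for income corresponds to the pattern described above, where higher income goes with higher odds of accepting the offer. The overall classification rate is simply the proportion of cases whose predicted class at the 0.5 cutoff matches the observed outcome.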