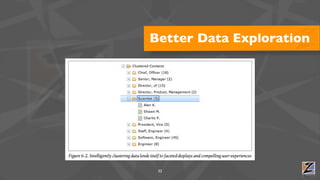

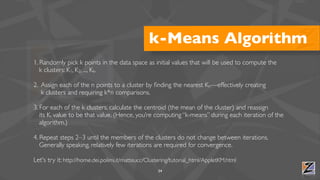

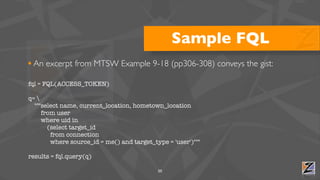

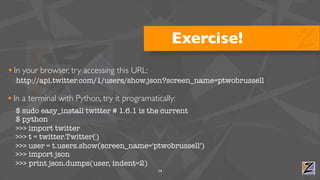

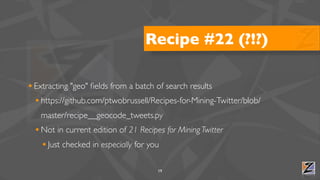

This document provides an overview and schedule for a workshop on analyzing and visualizing geo data from social media sources. It covers extracting geo data from microformats, Twitter, LinkedIn, and Facebook. It then walks through hands-on exercises that analyze Twitter data with Python scripts and visualize the results. It also introduces clustering approaches for grouping geo data.

![A Tweet as JSON](https://image.slidesharecdn.com/miningthegeoneedlesinthesocialhaystack-where20-2011-110419104714-phpapp02/85/Mining-the-Geo-Needles-in-the-Social-Haystack-13-320.jpg)

A tweet as JSON (abridged). The "geo" field lists [latitude, longitude], and longitudes west of Greenwich are negative:

```
{
  "user" : {
    "name" : "Matthew Russell",
    "description" : "Author of Mining the Social Web; International Sex Symbol",
    "location" : "Franklin, TN",
    "screen_name" : "ptwobrussell",
    ...
  },
  "geo" : { "type" : "Point", "coordinates" : [36.166, -86.784] },
  "text" : "Franklin, TN is the best small town in the whole wide world #WIN",
  ...
}
```
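The following is a minimal Python 3 sketch that pulls the point out of a tweet shaped like this one. It assumes the field layout shown: an abridged copy of the tweet with the elided fields dropped, and the longitude written as negative because Tennessee lies west of Greenwich. `extract_point` is a hypothetical helper, not a function from the workshop's scripts. It returns `None` when a tweet carries no "geo" value, which is the common case.

```python
import json

# Abridged copy of the tweet above; elided fields are simply omitted.
TWEET_JSON = """
{
  "user": {
    "name": "Matthew Russell",
    "location": "Franklin, TN",
    "screen_name": "ptwobrussell"
  },
  "geo": {"type": "Point", "coordinates": [36.166, -86.784]},
  "text": "Franklin, TN is the best small town in the whole wide world #WIN"
}
"""


def extract_point(tweet):
    """Return (latitude, longitude) from a tweet's "geo" field, or None.

    Hypothetical helper. It assumes the "geo" layout shown above, where
    "coordinates" is ordered [latitude, longitude].
    """
    geo = tweet.get("geo")
    if not geo or geo.get("type") != "Point":
        return None  # no geo data attached to this tweet
    lat, lon = geo["coordinates"]
    return lat, lon


tweet = json.loads(TWEET_JSON)
print(tweet["user"]["screen_name"], extract_point(tweet))
```

Because "geo" is often missing or null, the helper checks for that case before unpacking the coordinates rather than indexing into the field directly.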

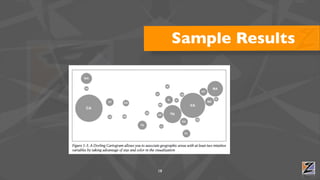

![Sample Results](https://image.slidesharecdn.com/miningthegeoneedlesinthesocialhaystack-where20-2011-110419104714-phpapp02/85/Mining-the-Geo-Needles-in-the-Social-Haystack-20-320.jpg)

Sample results:

- Unfortunately (???), "geo" data for tweets seems really scarce: only 3 of the 200 values below are not `None`.
- Does it vary according to a particular user's privacy mindset?
- Examining only the Twitter users who enable "geo" would be interesting in and of itself.

```
[None, None, ..., None,   # 72 x None
 {u'type': u'Point', u'coordinates': [32.802900000000001, -96.828100000000006]},
 {u'type': u'Point', u'coordinates': [33.793300000000002, -117.852]},
 None, None, ..., None,   # 52 x None
 {u'type': u'Point', u'coordinates': [35.512099999999997, -97.631299999999996]},
 None, None, ..., None]   # 73 x None
```
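The scarcity of geo data in the sample results can be measured directly. Below is a minimal Python 3 sketch. It assumes the list holds one "geo" value per tweet. The `u''` prefixes in the slide's output come from Python 2, and the sketch drops them. The code rebuilds a list with the same 200-entry shape and the three Points shown, filters out the `None` entries, and reports the share of results that carry geo data. `geo_points` is a hypothetical helper name, not part of the workshop code.

```python
# Rebuild a list shaped like the sample results: 200 entries, of which
# only three carry a "geo" Point (coordinate values copied from the slide).
results = (
    [None] * 72
    + [
        {"type": "Point", "coordinates": [32.802900000000001, -96.828100000000006]},
        {"type": "Point", "coordinates": [33.793300000000002, -117.852]},
    ]
    + [None] * 52
    + [{"type": "Point", "coordinates": [35.512099999999997, -97.631299999999996]}]
    + [None] * 73
)


def geo_points(values):
    """Hypothetical helper: keep (lat, lon) tuples from non-empty Point values."""
    return [
        tuple(g["coordinates"])
        for g in values
        if g and g.get("type") == "Point"
    ]


points = geo_points(results)
share = len(points) / len(results)
print(f"{len(points)} of {len(results)} results have geo data ({share:.1%})")
for lat, lon in points:
    print(f"{lat:.4f}, {lon:.4f}")
```

Filtering down to the non-`None` values like this is also a natural first step toward the idea in the last bullet: studying only the users who enable "geo".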