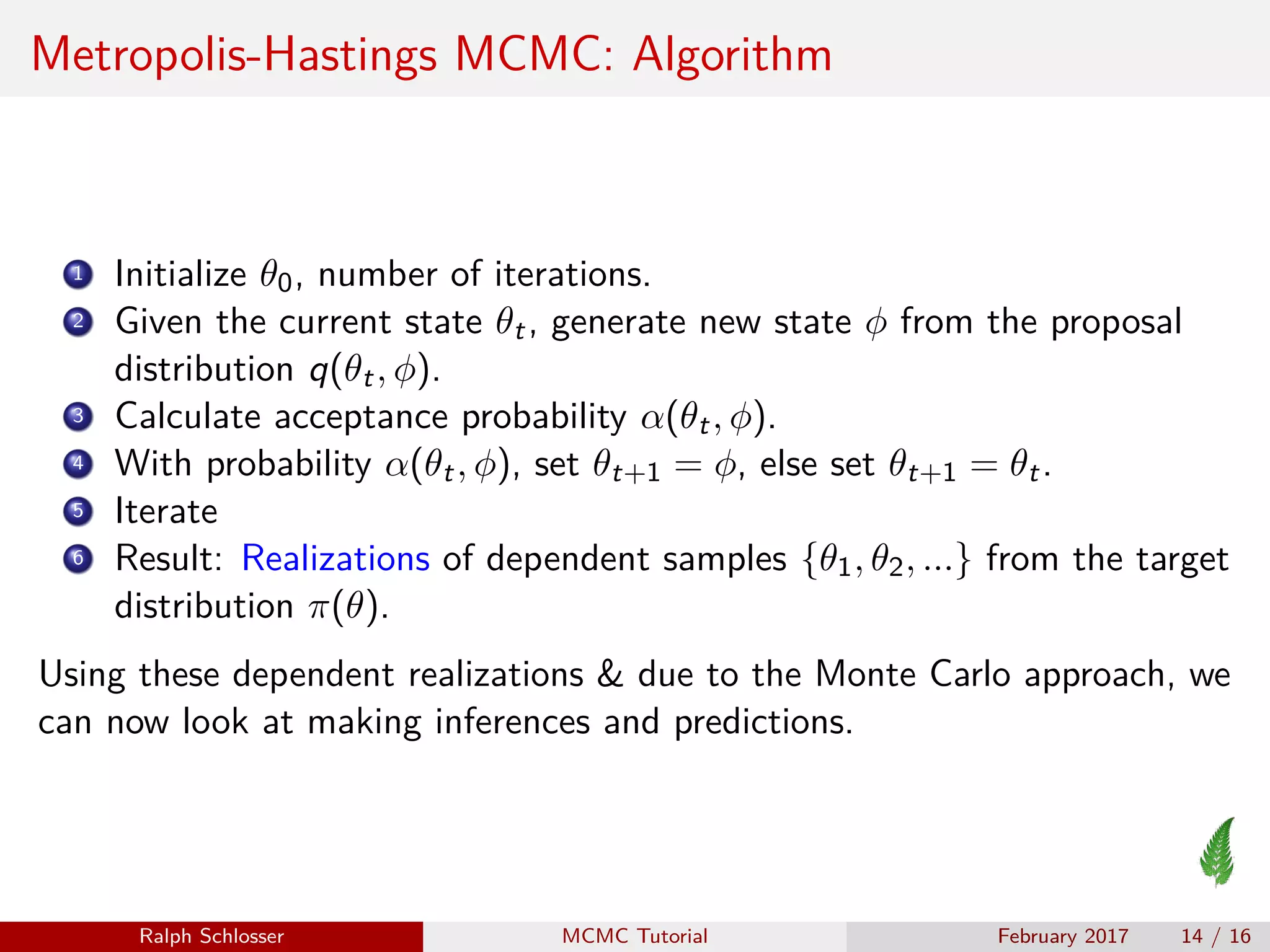

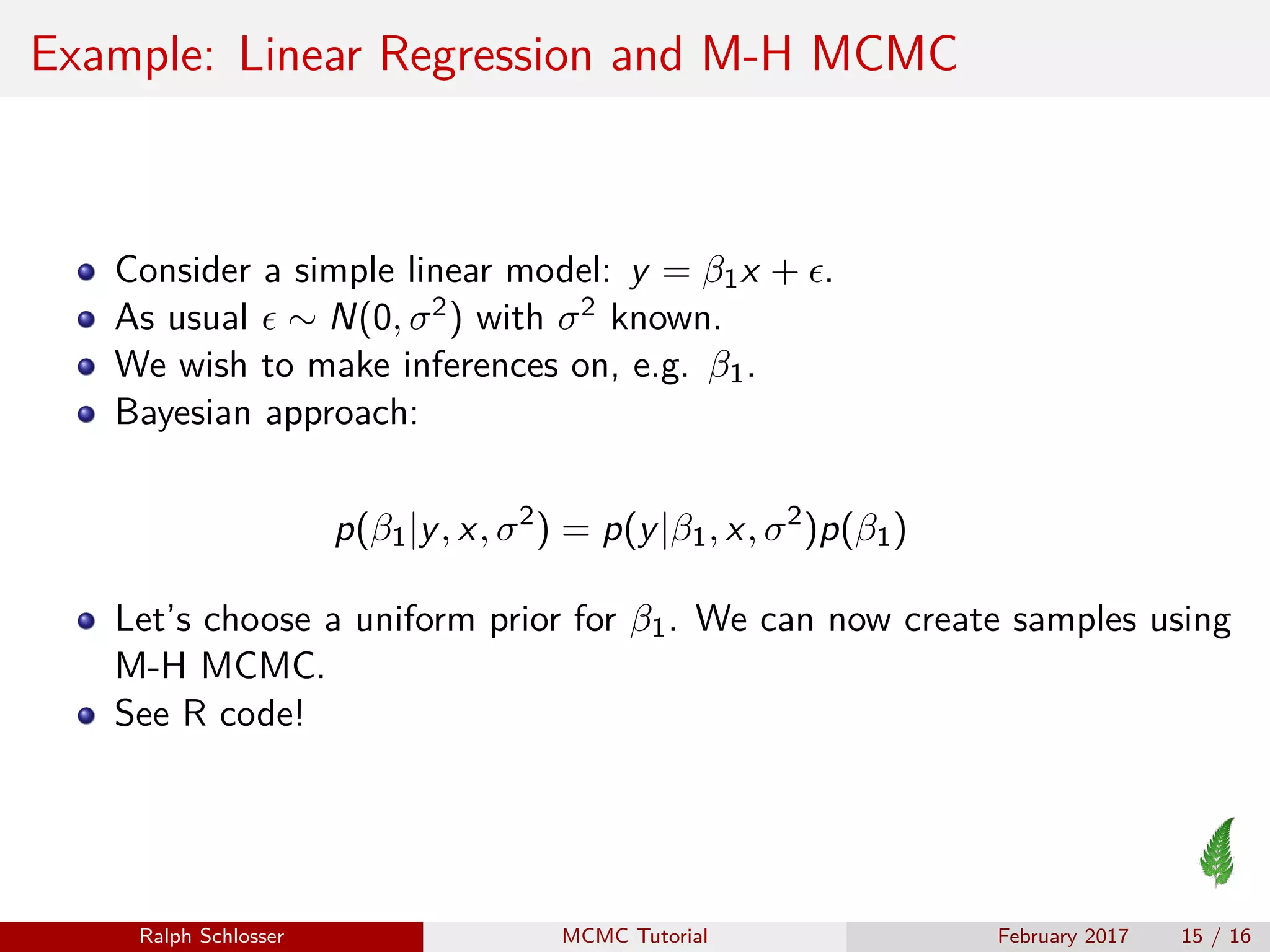

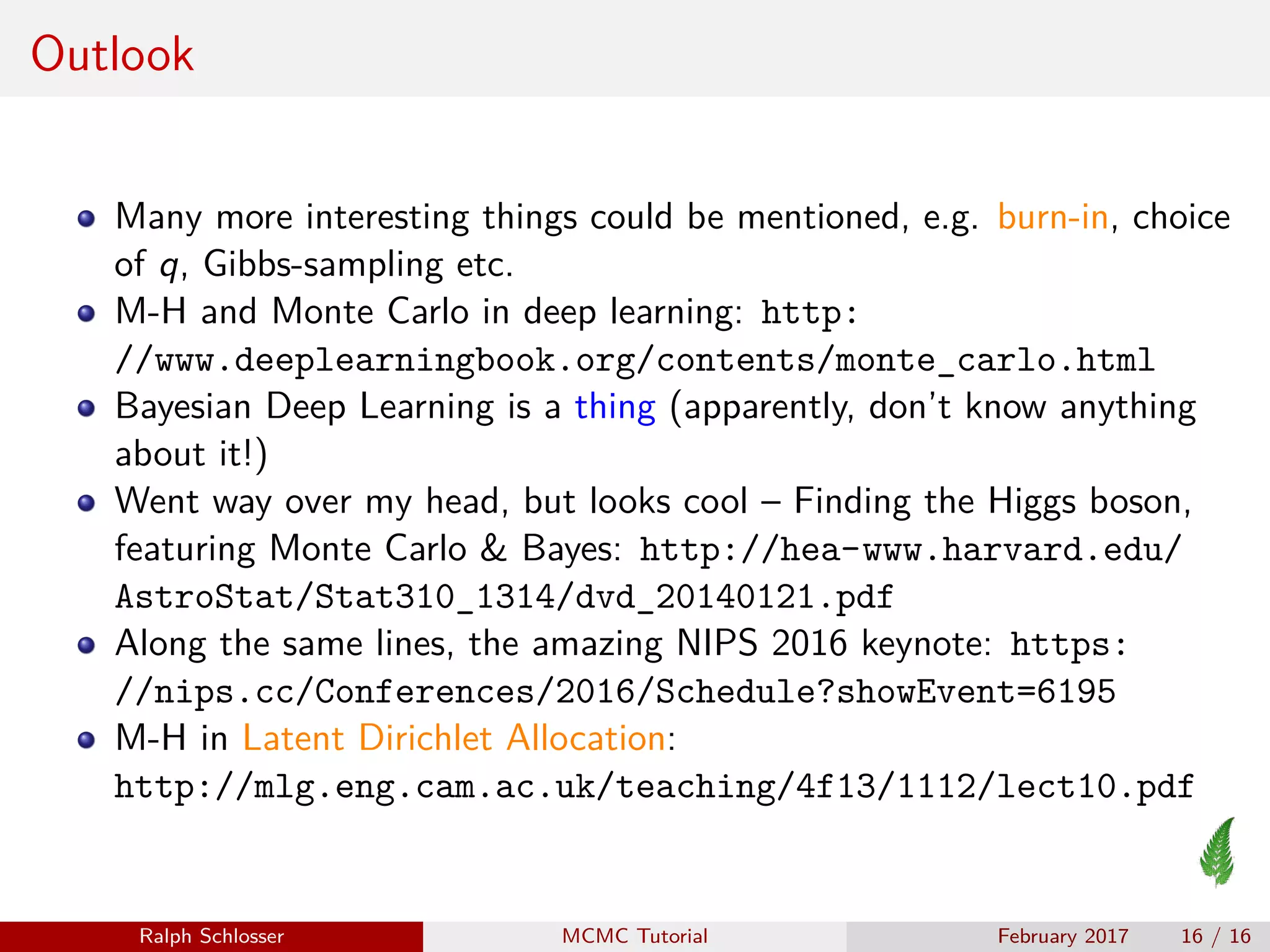

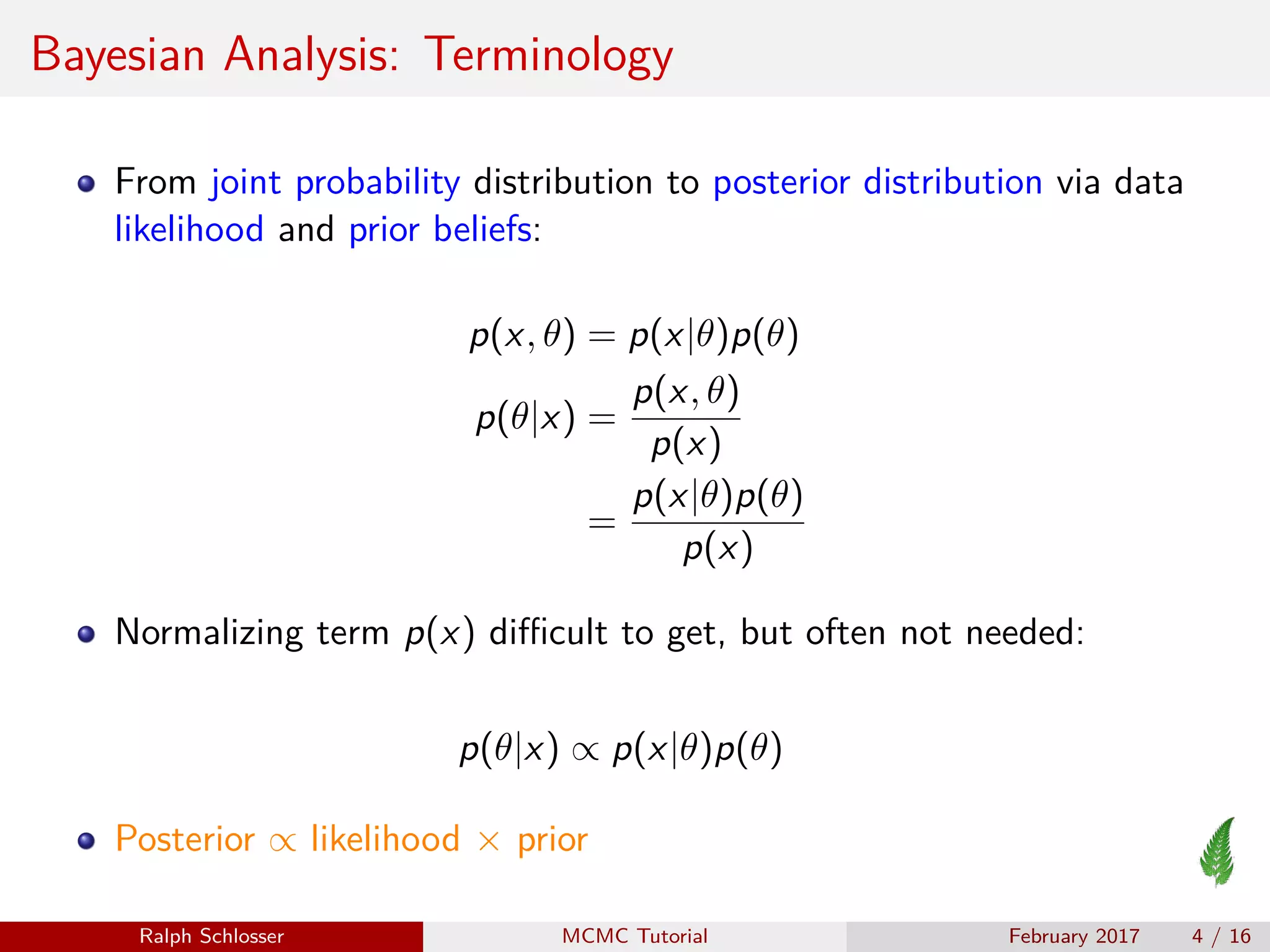

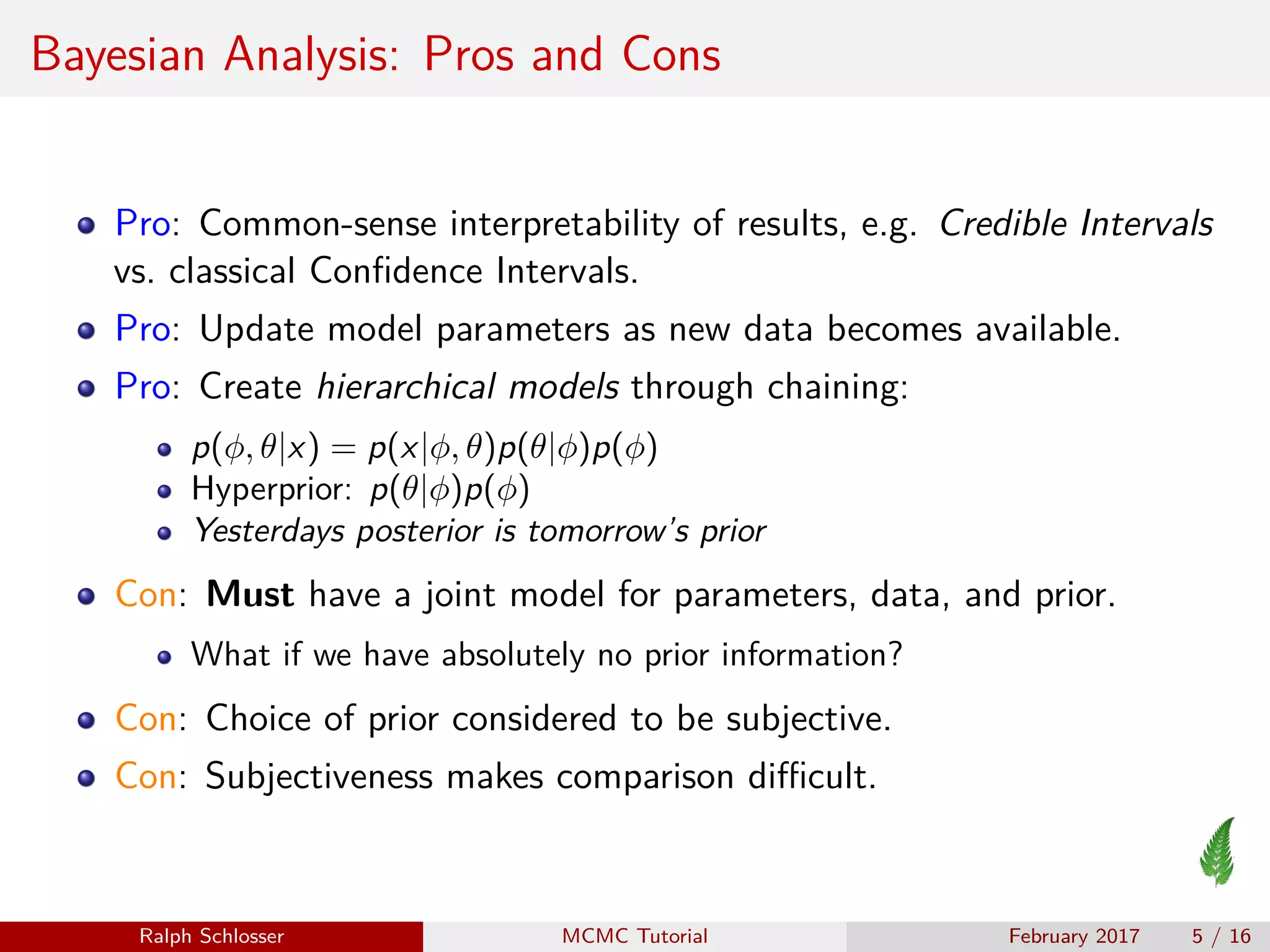

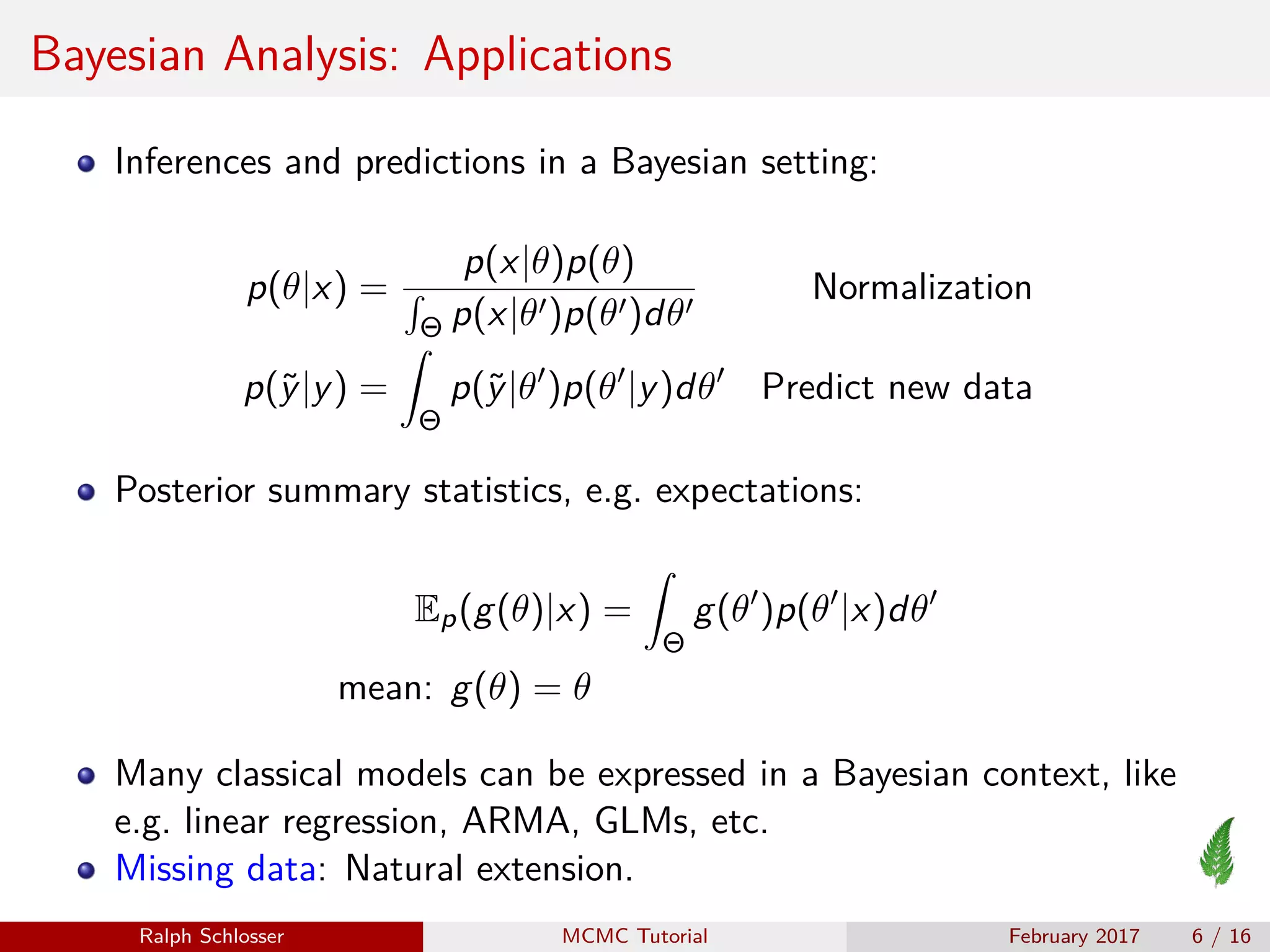

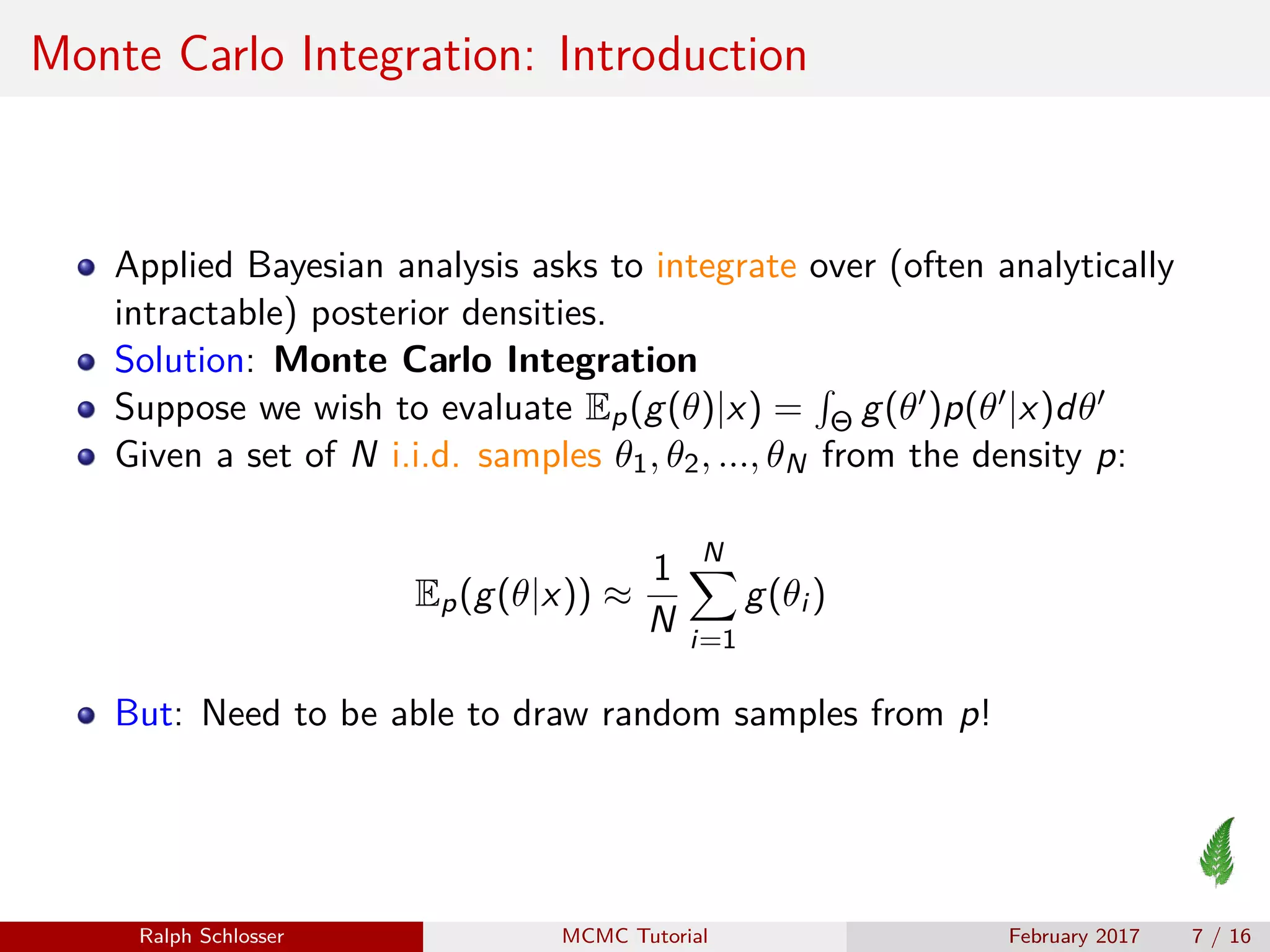

This document introduces Bayesian analysis and Metropolis-Hastings Markov chain Monte Carlo (MCMC). It explains the foundations of Bayesian analysis and how MCMC sampling methods such as Metropolis-Hastings can draw samples from posterior distributions that cannot be computed analytically. The Metropolis-Hastings algorithm constructs a Markov chain whose stationary distribution is the target distribution, so that once the chain has converged its states can be treated as (correlated) draws from that target. The document also includes an example of linear regression in a Bayesian framework, fitted with MCMC.
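To make the Markov chain construction concrete, here is a minimal random-walk Metropolis-Hastings sketch in R. It is an illustration rather than the tutorial's own code: the function name `metropolis_hastings`, its arguments, the standard normal target in `log_target`, and the proposal standard deviation of 2.4 are all assumptions chosen for this example.

```r
# Random-walk Metropolis-Hastings sketch (illustrative, not the tutorial's code).
# Assumed target: a standard normal, specified only up to a constant
# through its log-density.
log_target <- function(x) -0.5 * x^2

metropolis_hastings <- function(log_target, n_iter, init, proposal_sd) {
  chain <- numeric(n_iter)
  current <- init
  current_lp <- log_target(current)
  n_accept <- 0
  for (i in seq_len(n_iter)) {
    # Symmetric Gaussian proposal, so the proposal densities cancel
    # in the acceptance ratio.
    proposal <- rnorm(1, mean = current, sd = proposal_sd)
    proposal_lp <- log_target(proposal)
    # Accept with probability min(1, target(proposal) / target(current)),
    # evaluated on the log scale for numerical stability.
    if (log(runif(1)) < proposal_lp - current_lp) {
      current <- proposal
      current_lp <- proposal_lp
      n_accept <- n_accept + 1
    }
    chain[i] <- current  # a rejected proposal repeats the current state
  }
  list(chain = chain, accept_rate = n_accept / n_iter)
}

set.seed(1)
fit <- metropolis_hastings(log_target, n_iter = 20000, init = 0,
                           proposal_sd = 2.4)
draws <- fit$chain[-(1:2000)]  # discard an assumed burn-in of 2000 steps
mh_mean <- mean(draws)         # should be close to 0
mh_sd <- sd(draws)             # should be close to 1
mh_prob <- mean(draws <= 0.5)  # should be close to pnorm(0.5)
```

Because only the difference of log-densities enters the acceptance step, the normalising constant of the target is never needed, which is what makes the method usable for posteriors known only up to proportionality.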

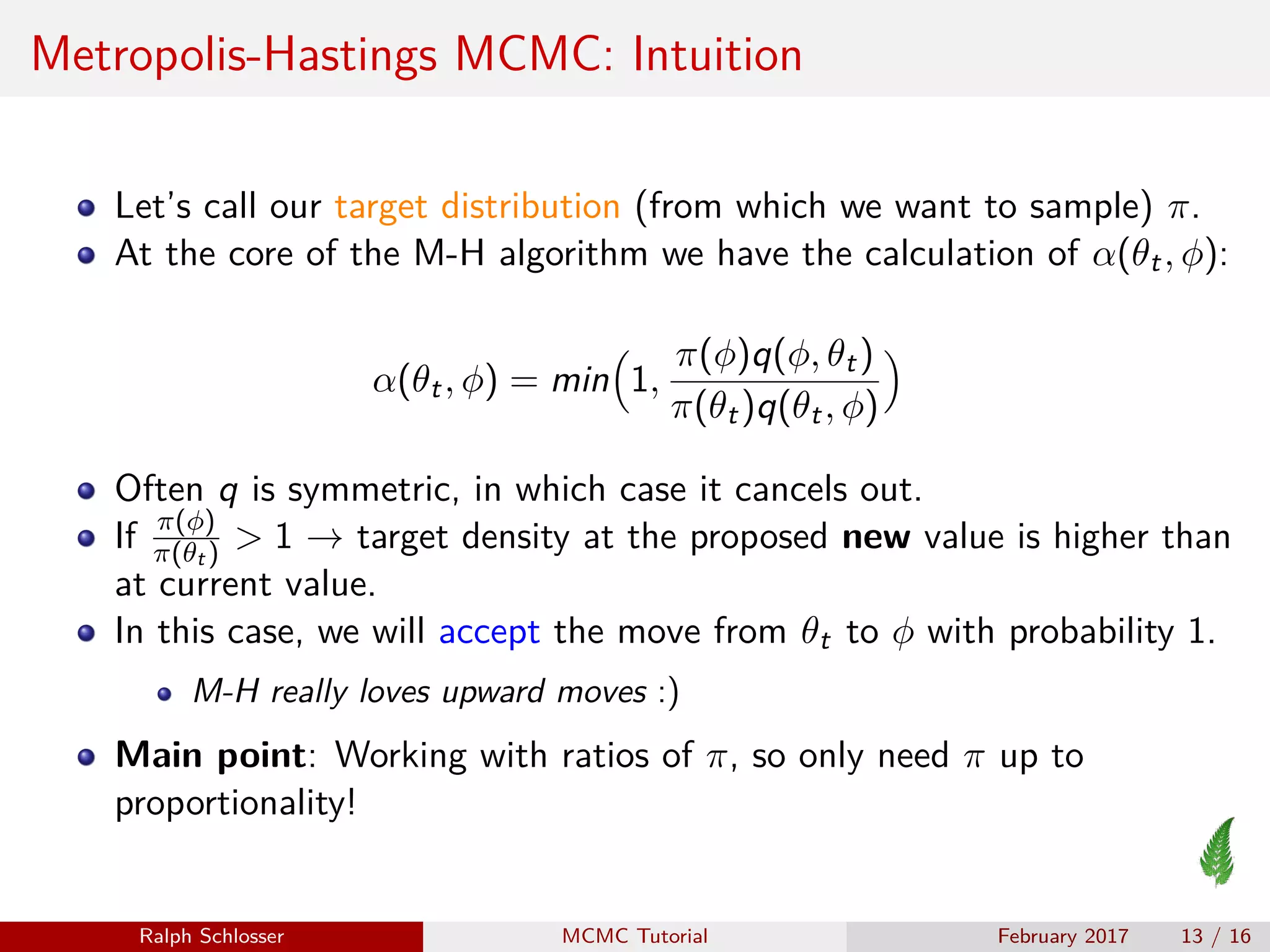

![Monte Carlo Integration: Example. Ralph Schlosser, MCMC Tutorial, February 2017, slide 8 of 16](https://image.slidesharecdn.com/mcmc-tutorial-170215100830/75/Metropolis-Hastings-MCMC-Short-Tutorial-8-2048.jpg)

Monte Carlo integration example: simulate $N = 10000$ draws from a univariate standard normal, i.e. $X \sim N(0, 1)$. Let $p(x)$ be the normal density. Then:

$$P(X \le 0.5) = \int_{-\infty}^{0.5} p(x)\,dx$$

    set.seed(123)
    data <- rnorm(n = 10000)
    prob.in <- data <= 0.5
    sum(prob.in) / 10000
    ## [1] 0.694
    pnorm(0.5)
    ## [1] 0.6914625
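The Monte Carlo estimate is itself a random quantity, so it helps to attach an error bar to it. The sketch below is an addition to the slide, not part of it: it repeats the simulation and computes the binomial standard error $\sqrt{\hat{p}(1 - \hat{p})/N}$ with an approximate 95% interval. The variable names `p_hat`, `se`, and `ci` are illustrative.

```r
# Monte Carlo estimate of P(X <= 0.5) with a standard error (illustrative sketch).
set.seed(123)
n <- 10000
draws <- rnorm(n)

# The proportion of draws at or below 0.5 estimates the integral.
p_hat <- mean(draws <= 0.5)

# Each indicator is a Bernoulli draw, so the estimator has the
# binomial standard error sqrt(p * (1 - p) / n).
se <- sqrt(p_hat * (1 - p_hat) / n)

# Approximate 95% interval from the normal approximation.
ci <- p_hat + c(-1, 1) * qnorm(0.975) * se

exact <- pnorm(0.5)  # closed-form value for comparison
```

The standard error shrinks at rate $1/\sqrt{N}$, so halving the error requires roughly four times as many draws.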