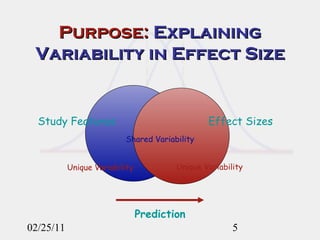

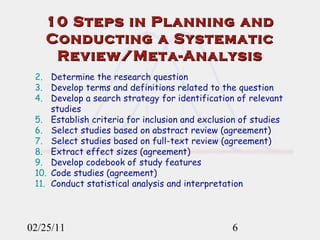

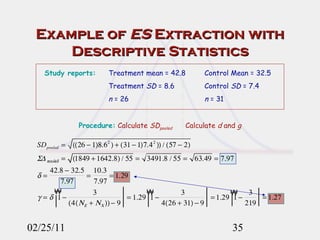

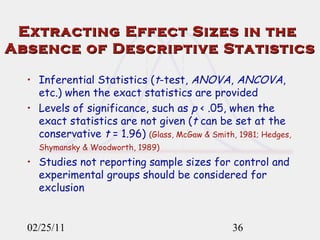

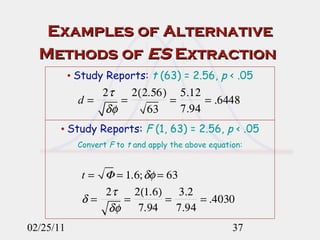

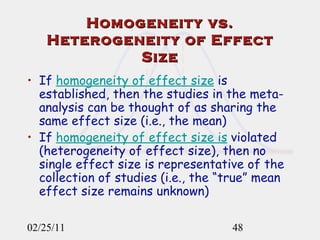

This document outlines the steps involved in conducting a systematic review and meta-analysis: defining a research question, developing search strategies to identify relevant studies, establishing inclusion and exclusion criteria, selecting studies, extracting effect sizes from them, and running a statistical analysis to summarize the results. The goal is to synthesize research evidence in a transparent, reproducible manner that answers the research question.
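As a rough illustration of the last two steps (extracting effect sizes and summarizing them statistically), the sketch below computes a bias-corrected standardized mean difference (Hedges' g) for each study and pools the values with inverse-variance weights under a fixed-effect model. The function names and the study figures are hypothetical and chosen only for illustration; the slides do not prescribe this particular implementation, and a real analysis would typically also consider heterogeneity and random-effects models.

```python
import math


def hedges_g(mean_t, mean_c, sd_t, sd_c, n_t, n_c):
    """Return (g, variance of g) for a treatment vs. control comparison."""
    df = n_t + n_c - 2
    # Pooled within-group standard deviation.
    sd_pooled = math.sqrt(((n_t - 1) * sd_t**2 + (n_c - 1) * sd_c**2) / df)
    d = (mean_t - mean_c) / sd_pooled
    # Small-sample correction factor (common approximation).
    j = 1 - 3 / (4 * df - 1)
    var_d = (n_t + n_c) / (n_t * n_c) + d**2 / (2 * (n_t + n_c))
    return j * d, j**2 * var_d


def fixed_effect_pool(effects):
    """Inverse-variance weighted mean of (g, variance) pairs, with its standard error."""
    weights = [1 / var for _, var in effects]
    pooled = sum(w * g for w, (g, _) in zip(weights, effects)) / sum(weights)
    se = math.sqrt(1 / sum(weights))
    return pooled, se


if __name__ == "__main__":
    # Hypothetical study summaries: (mean_t, mean_c, sd_t, sd_c, n_t, n_c).
    studies = [
        (10.0, 8.0, 2.0, 2.0, 20, 20),
        (52.0, 50.0, 8.0, 9.0, 45, 40),
        (3.1, 3.0, 0.6, 0.5, 30, 32),
    ]
    effects = [hedges_g(*s) for s in studies]
    pooled, se = fixed_effect_pool(effects)
    # Approximate 95% confidence interval using the normal distribution.
    print(f"pooled g = {pooled:.3f}, 95% CI = [{pooled - 1.96 * se:.3f}, {pooled + 1.96 * se:.3f}]")
```

Weighting each study by the inverse of its variance gives larger, more precise studies more influence on the summary estimate, which is the usual rationale for this pooling scheme.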

![02/25/11 Preliminary Searches. Reference Sources. Purpose: To obtain definitions for the terms creativity, critical thinking, decision making, divergent thinking, intelligence, problem solving, reasoning, thinking. Sources: Bailin, S. (1998). Critical Thinking: Philosophical Issues. [CD-ROM] Education: The Complete Encyclopedia. Elsevier Science, Ltd. Barrow, R., & Milburn, G. (1990). A critical dictionary of educational concepts: An appraisal of selected ideas and issues in educational theory and practice (2nd ed.). Hertfordshire, UK: Harvester Wheatsheaf. Colman (2001). Dictionary of Psychology (complete reference to be obtained). Corsini, R. J. (1999). The dictionary of psychology. Philadelphia, PA: Brunner/Mazel. Dejnoka, E. L., & Kapel, D. E. (1991). American educator’s encyclopedia. Westport, CT: Greenwood Press. …… (see handout)](https://image.slidesharecdn.com/bernardwademetaanalysisworkshop06copia-111102124148-phpapp02/85/meta-analysis-14-320.jpg)

![Selected References. Bernard, R. M., Abrami, P. C., Lou, Y., Borokhovski, E., Wade, A., Wozney, L., Wallet, P. A., Fiset, M., & Huang, B. (2004). How Does Distance Education Compare to Classroom Instruction? A Meta-Analysis of the Empirical Literature. Review of Educational Research, 74(3), 379-439. Glass, G. V., McGaw, B., & Smith, M. L. (1981). Meta-analysis in social research. Beverly Hills, CA: Sage. Hedges, L. V., & Olkin, I. (1985). Statistical methods for meta-analysis. Orlando, FL: Academic Press. Hedges, L. V., Shymansky, J. A., & Woodworth, G. (1989). A practical guide to modern methods of meta-analysis. [ERIC Document Reproduction Service No. ED 309 952].](https://image.slidesharecdn.com/bernardwademetaanalysisworkshop06copia-111102124148-phpapp02/85/meta-analysis-54-320.jpg)