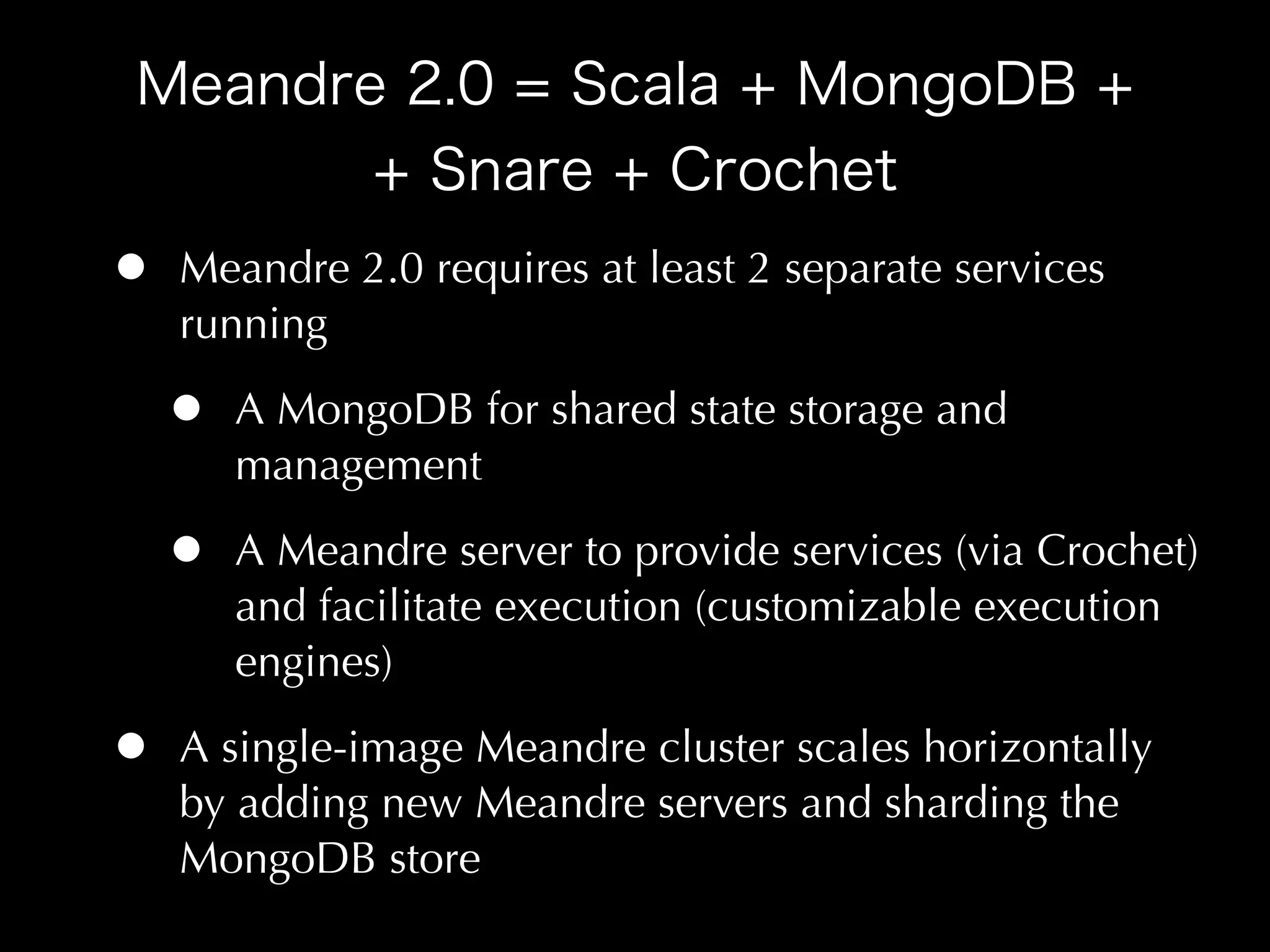

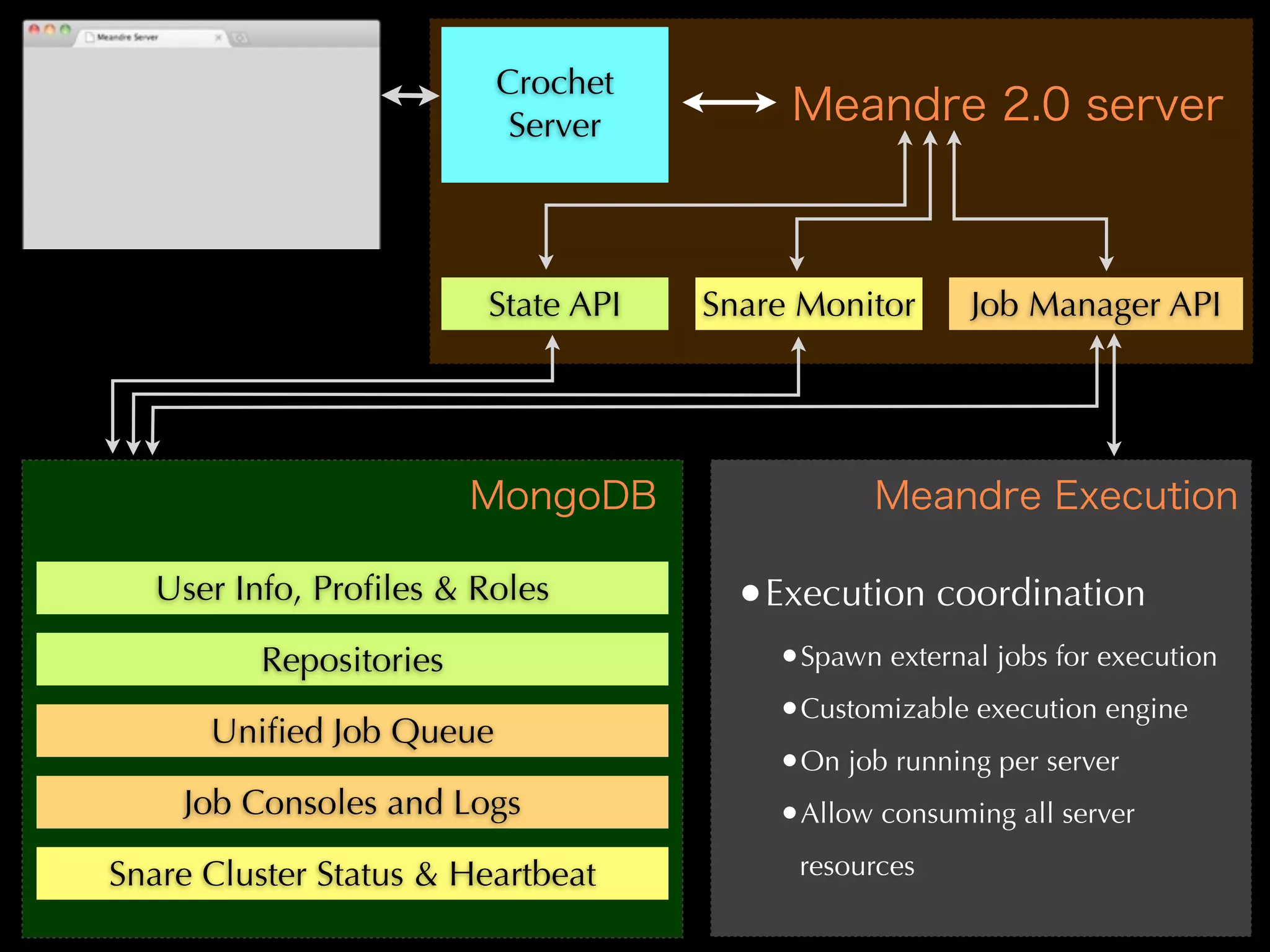

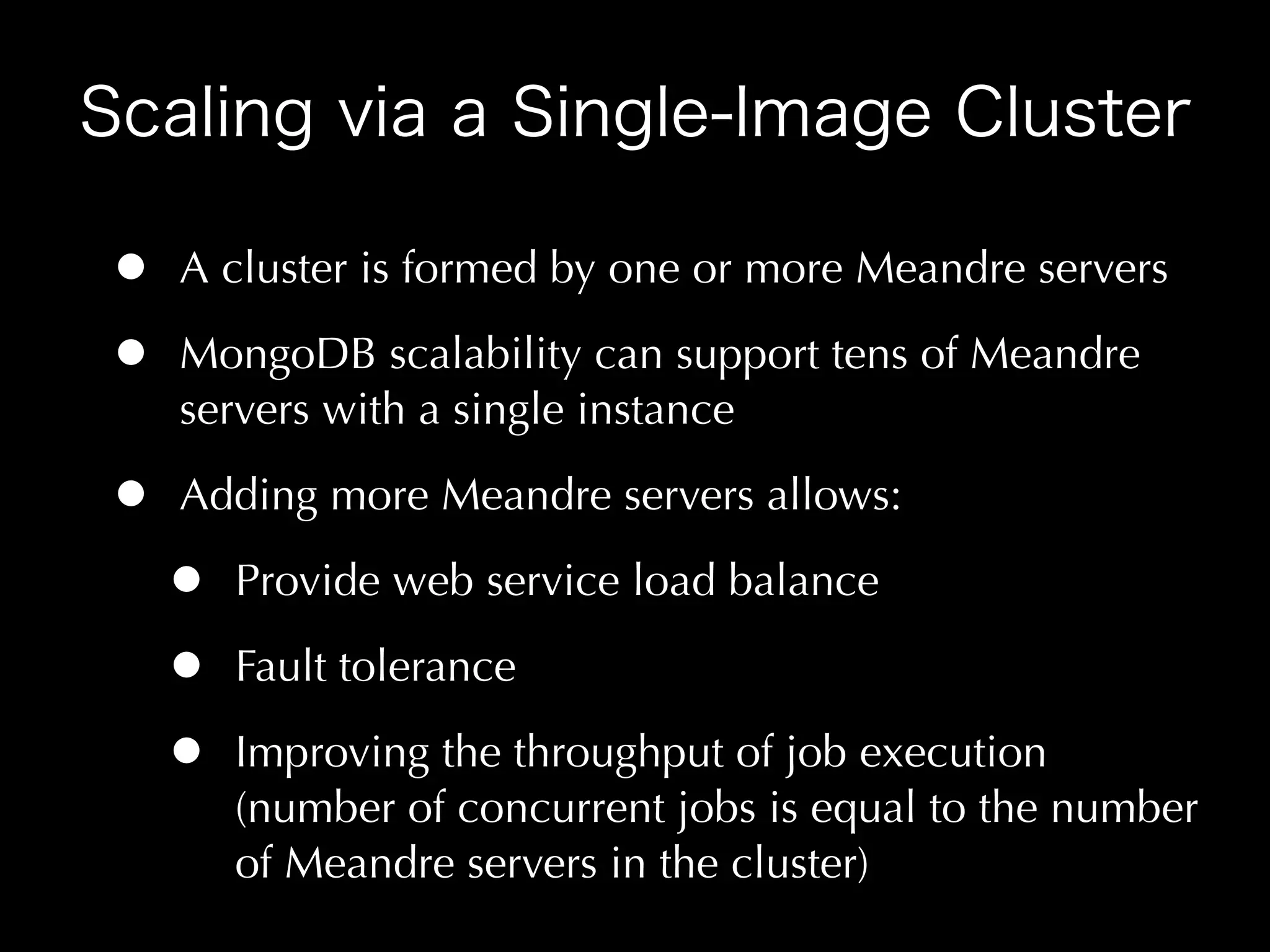

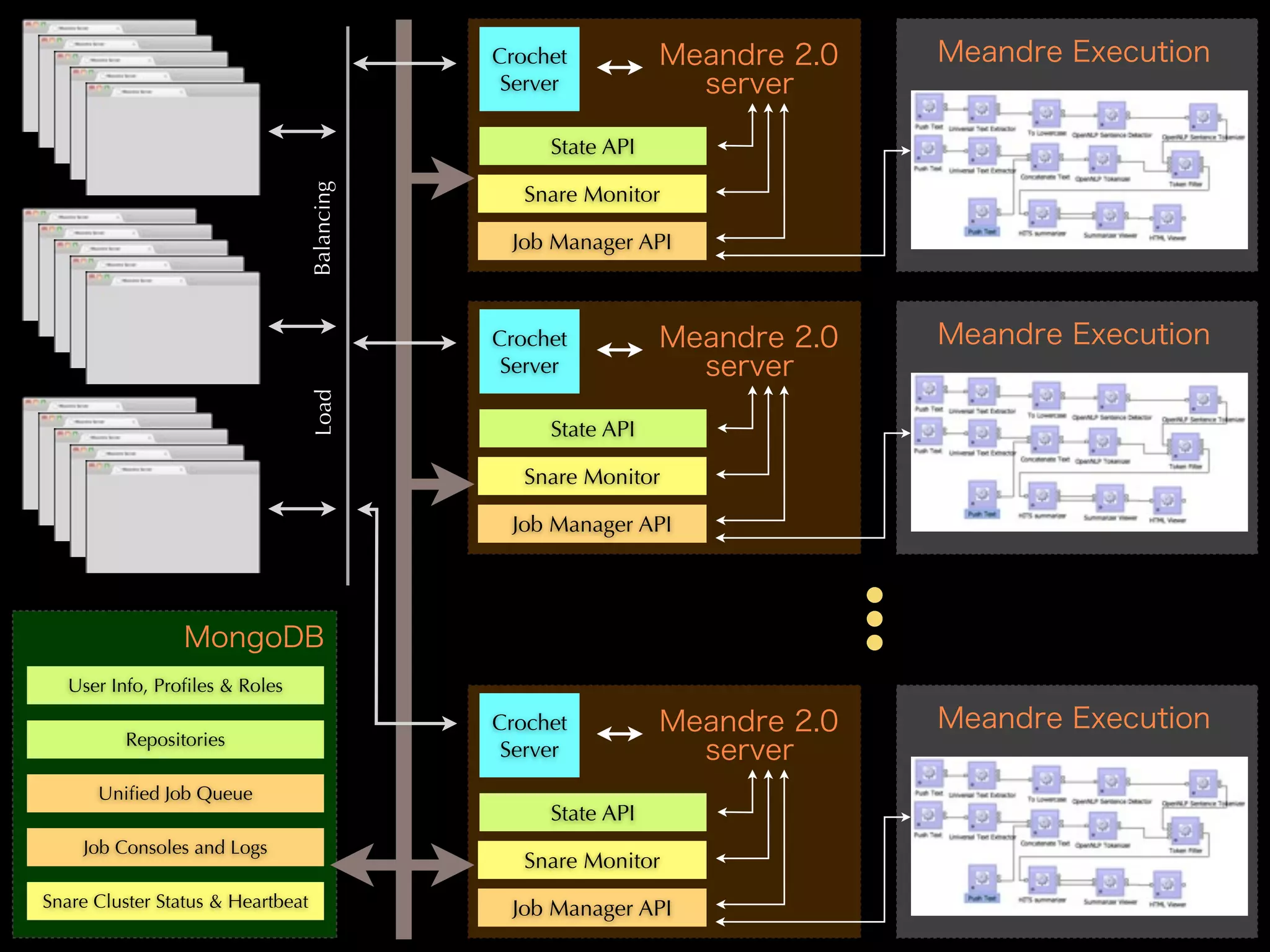

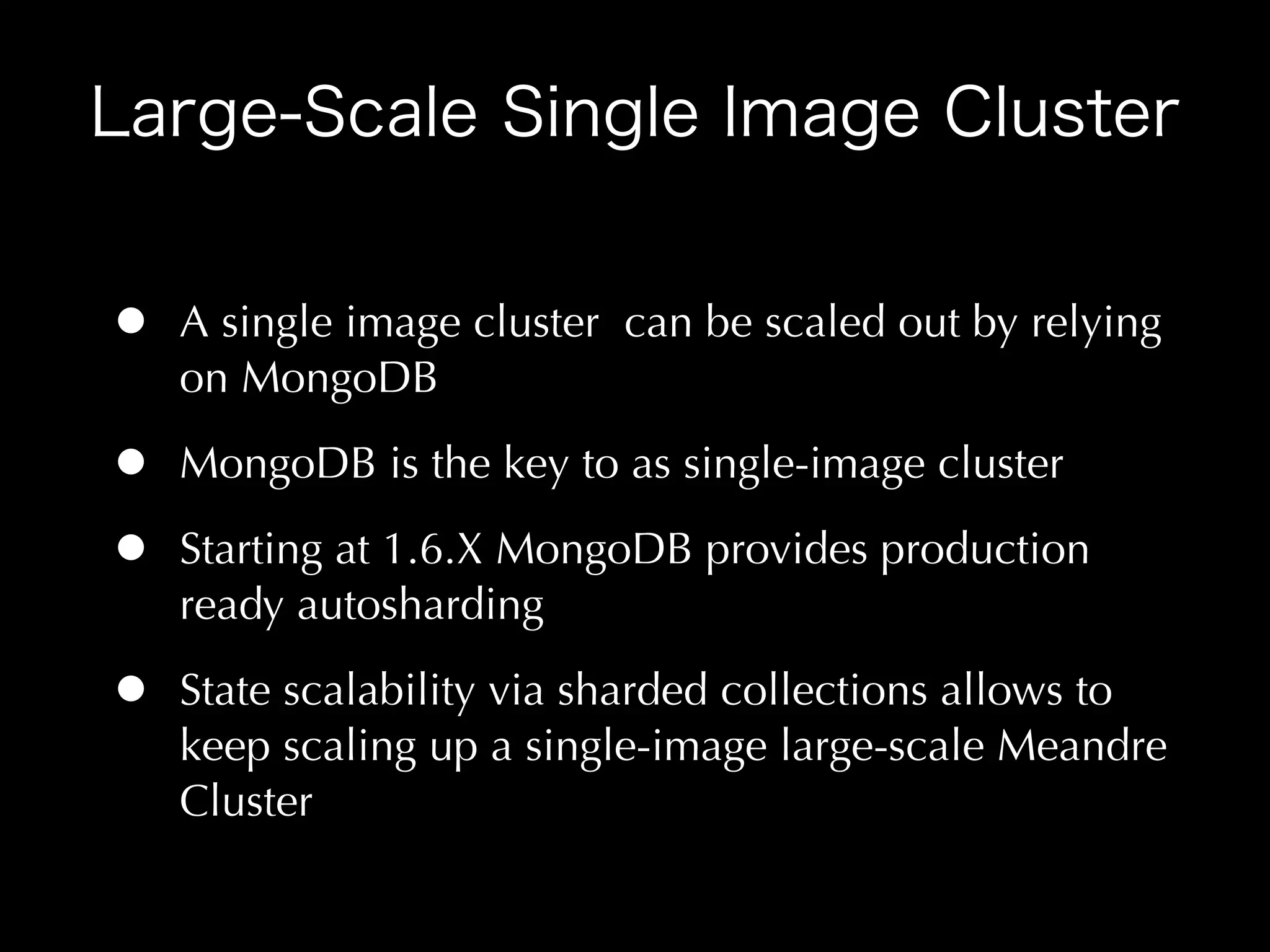

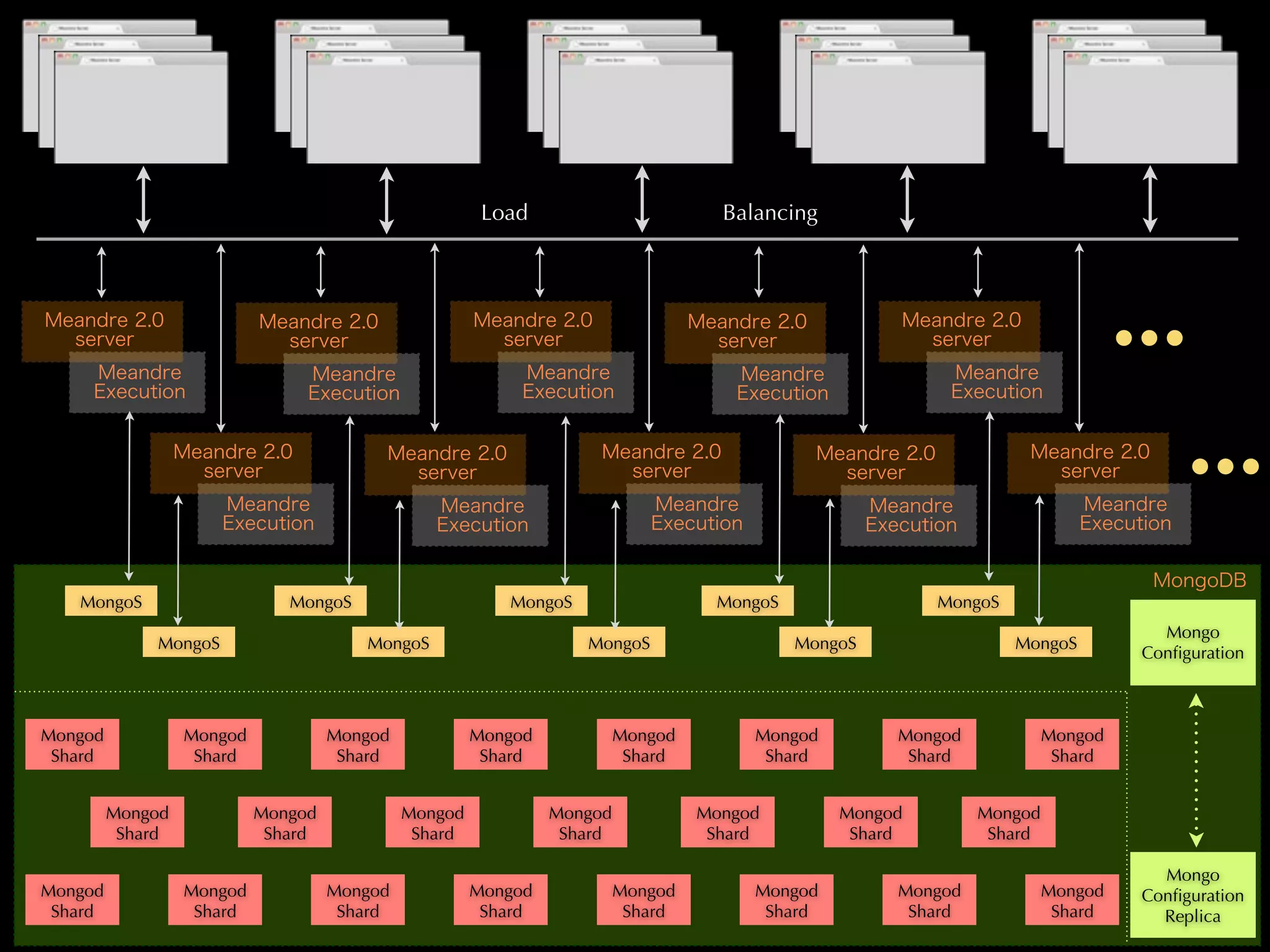

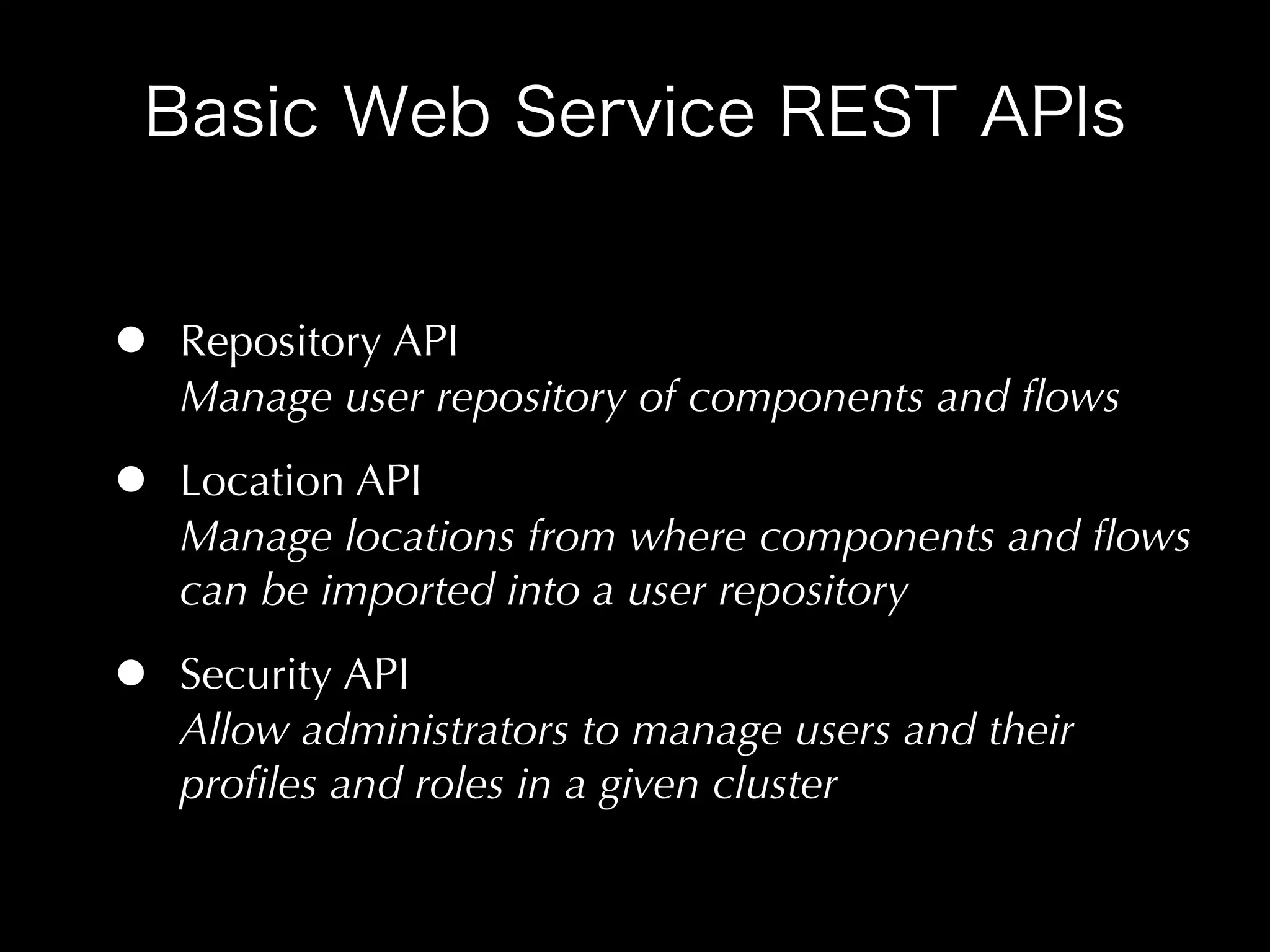

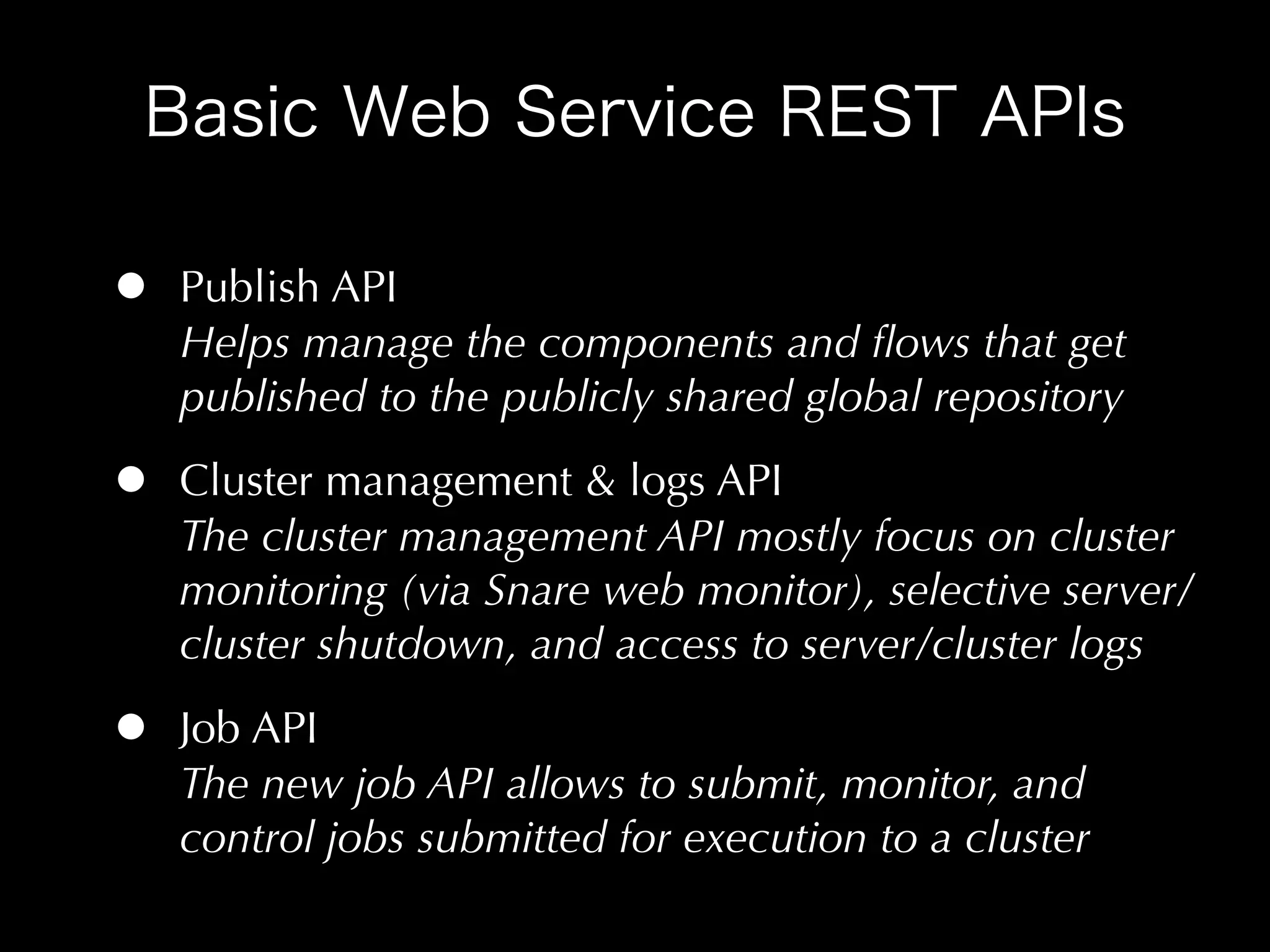

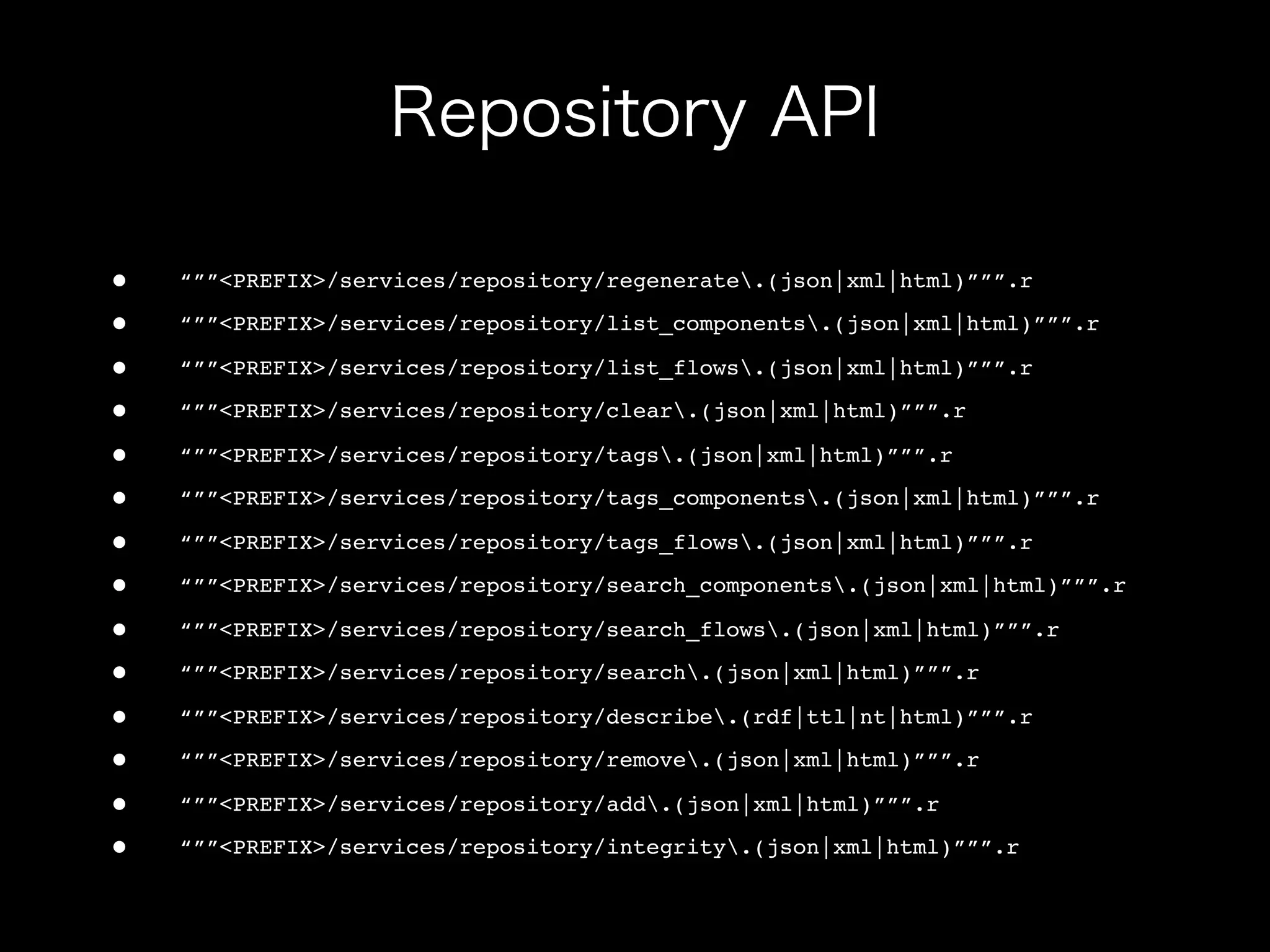

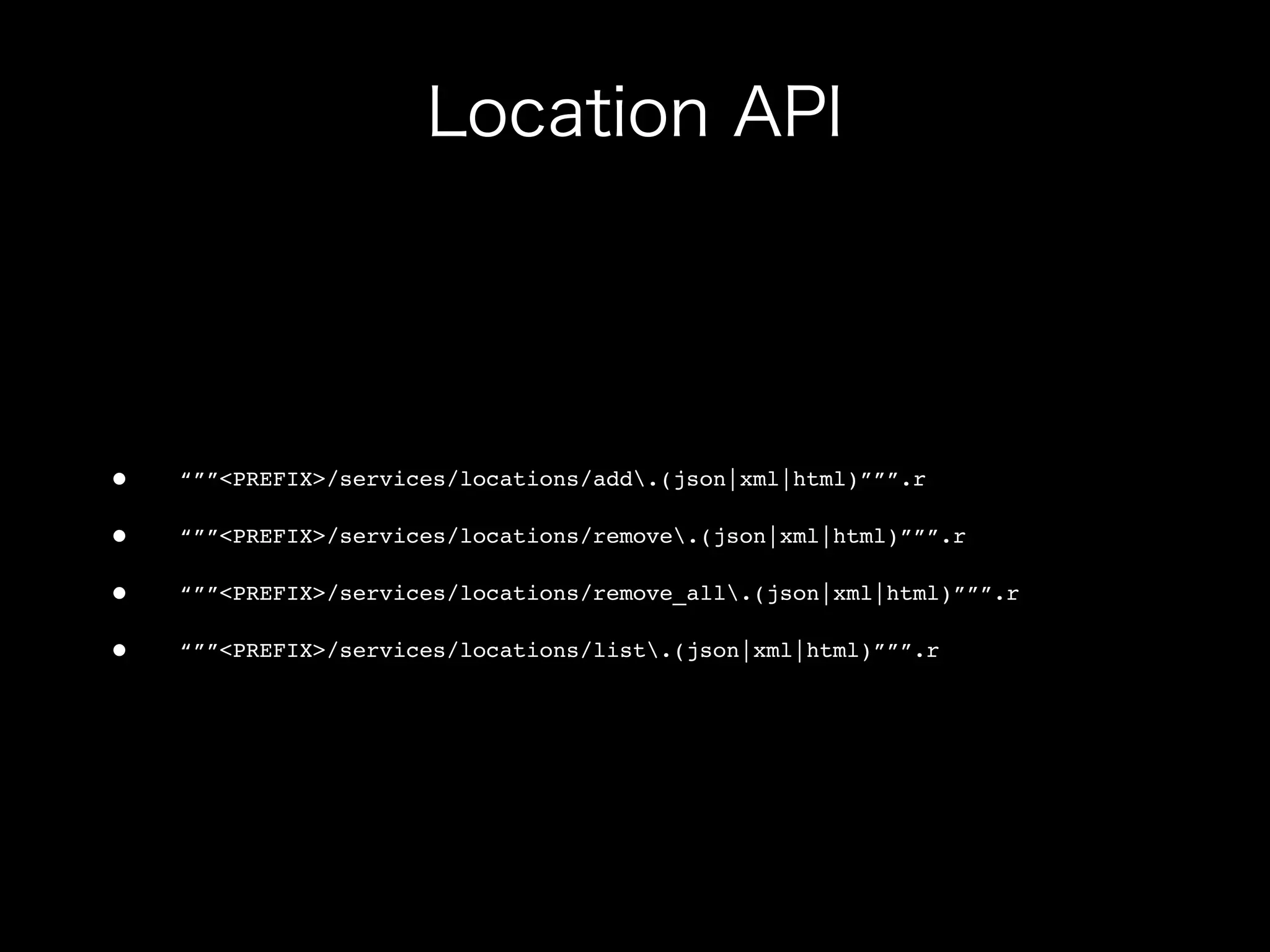

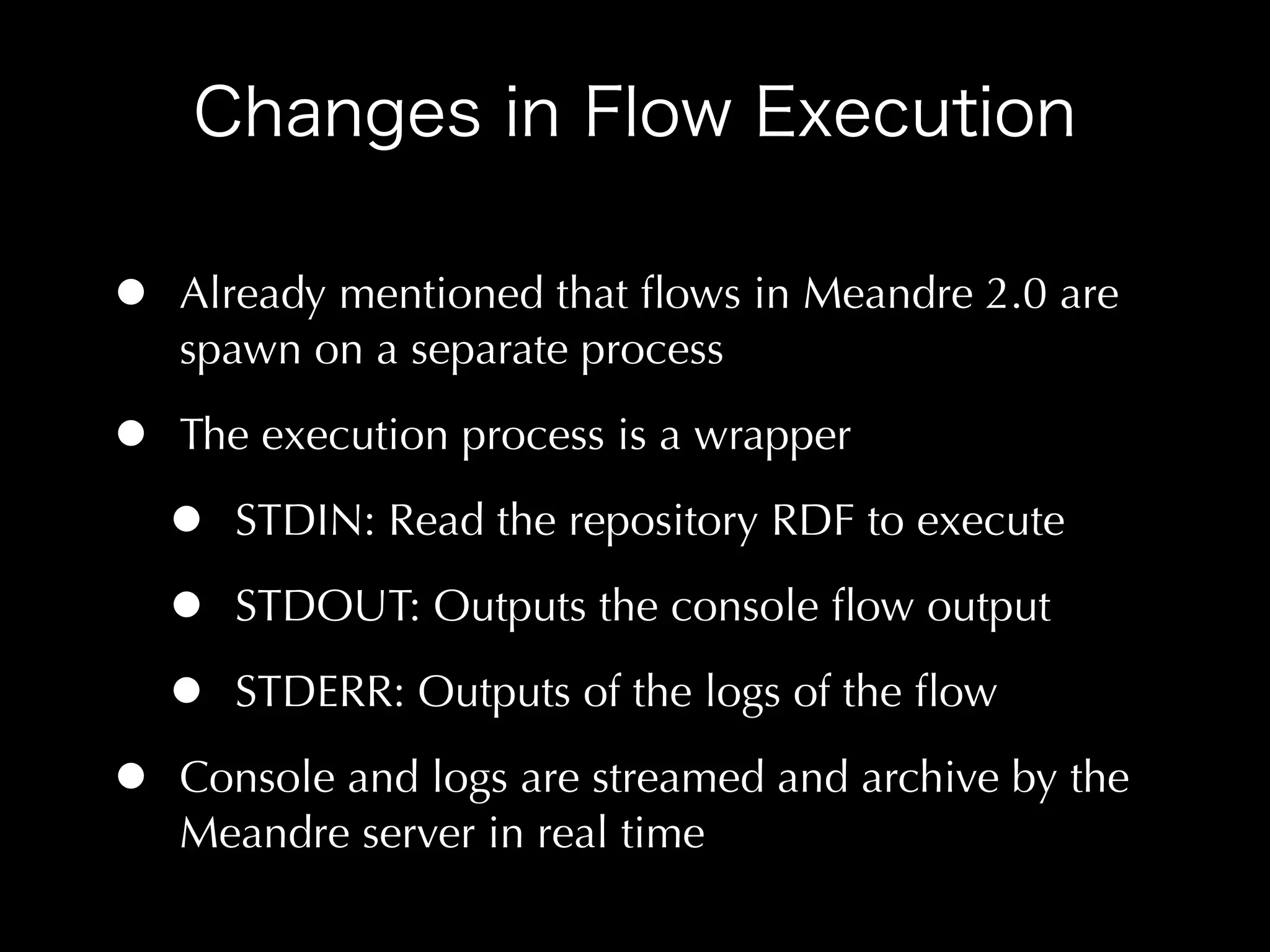

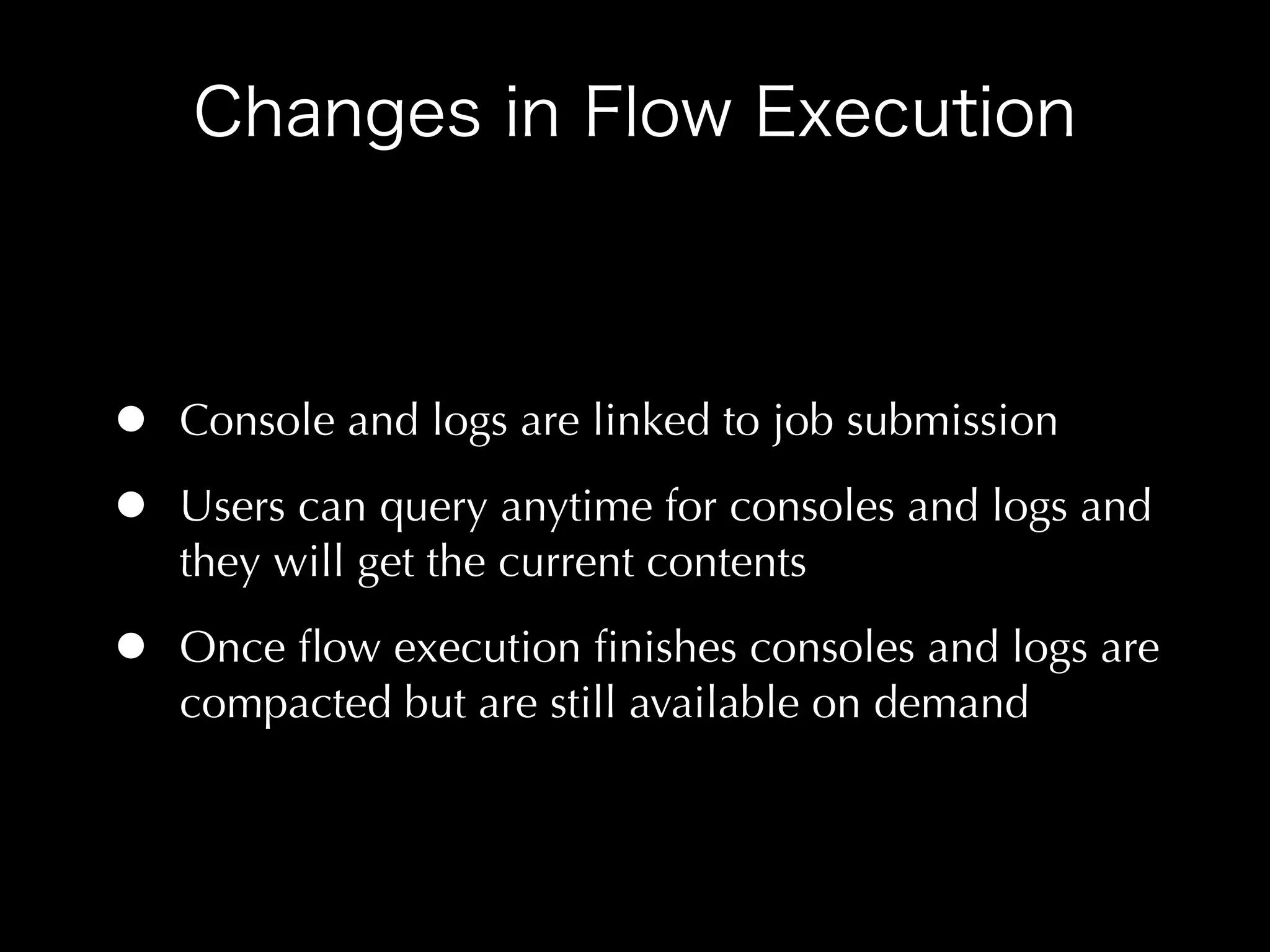

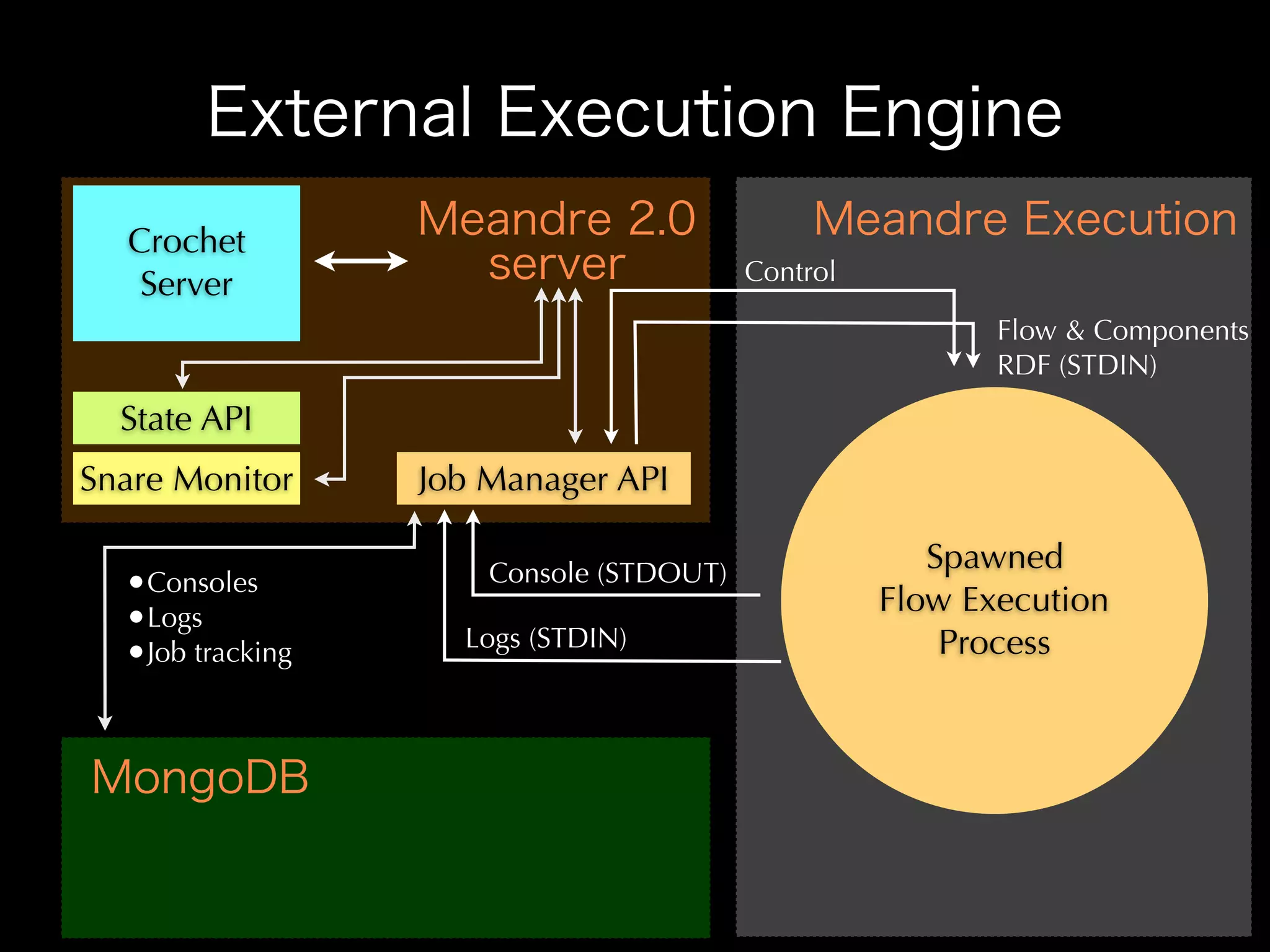

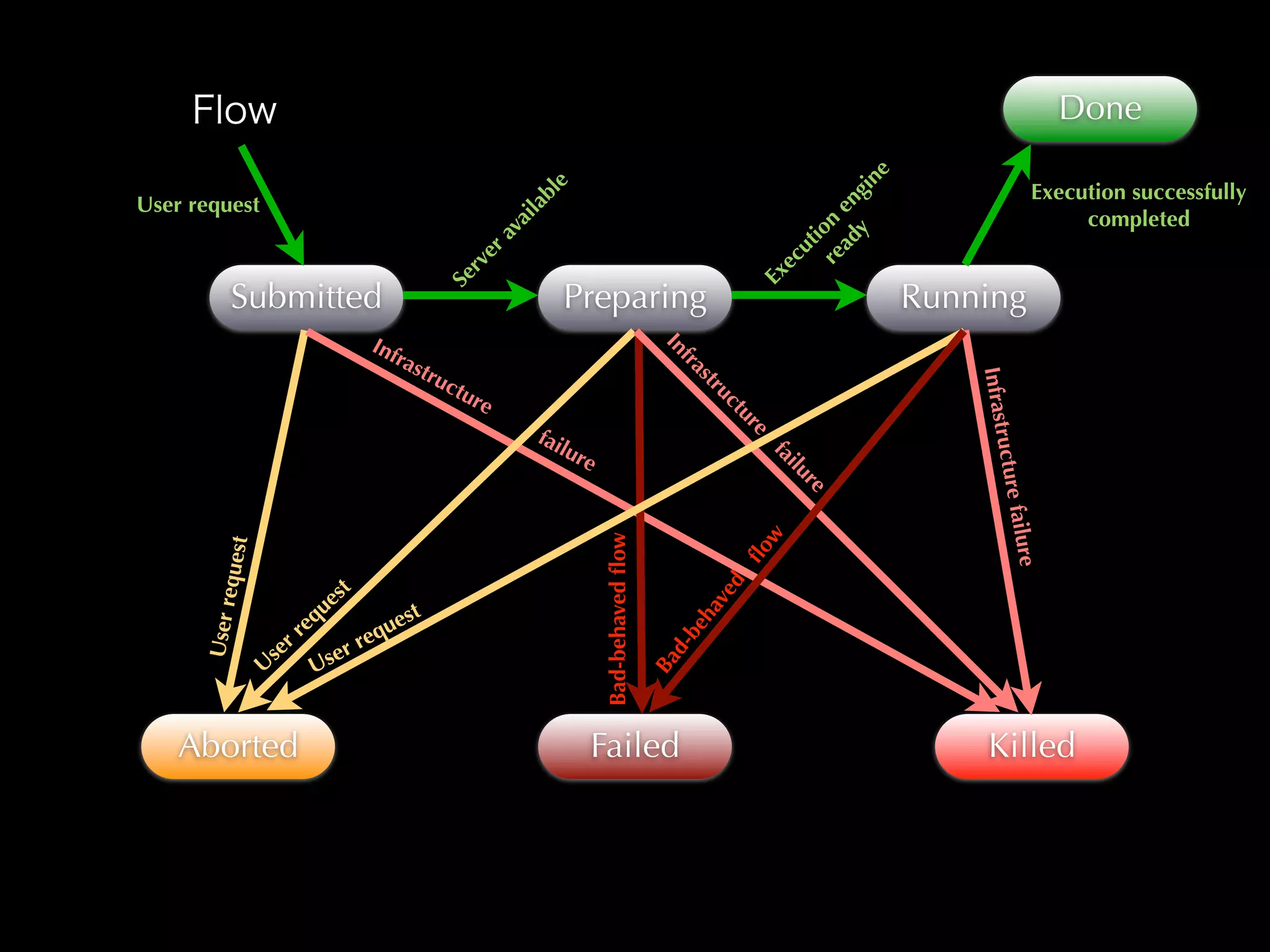

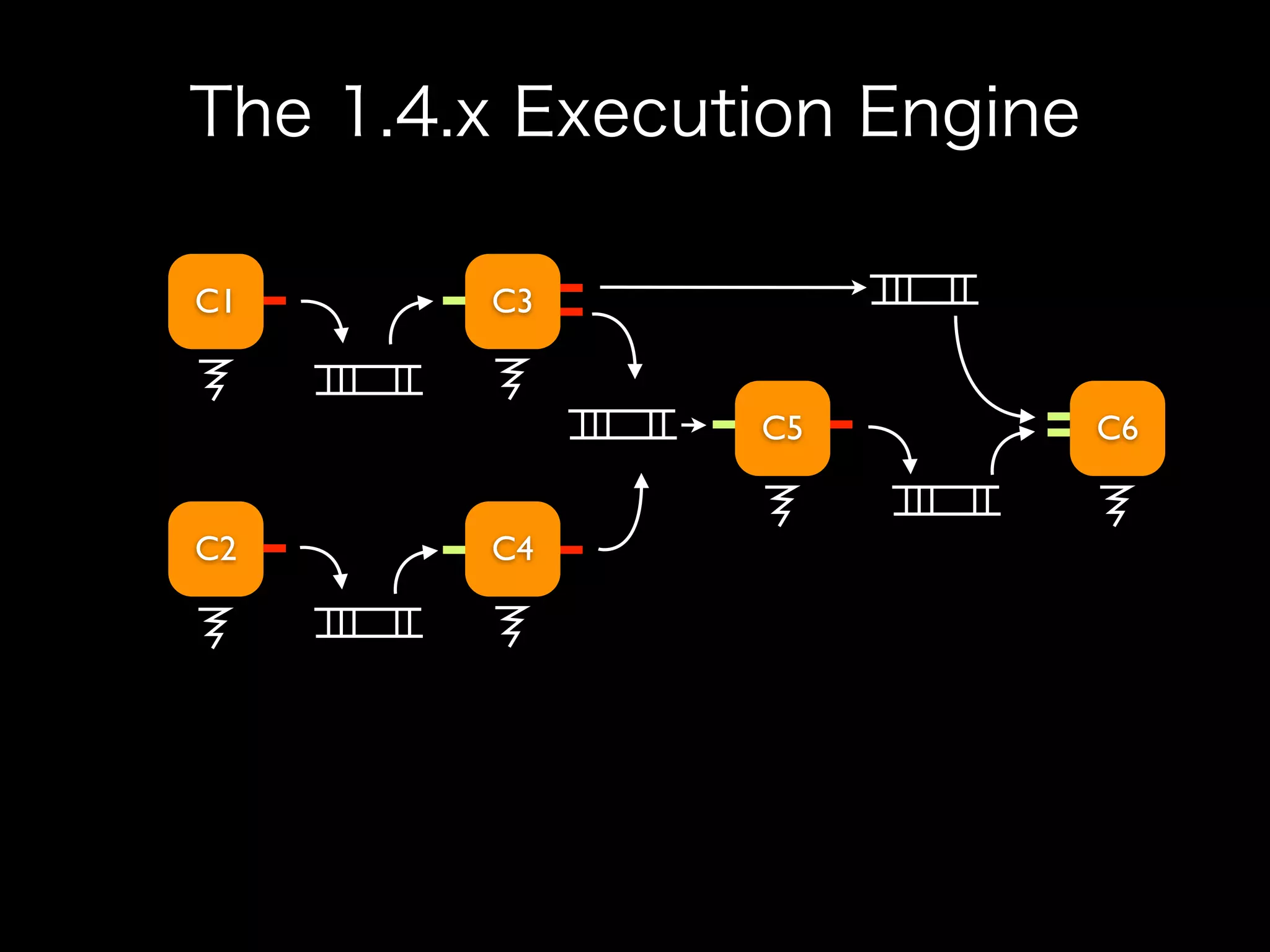

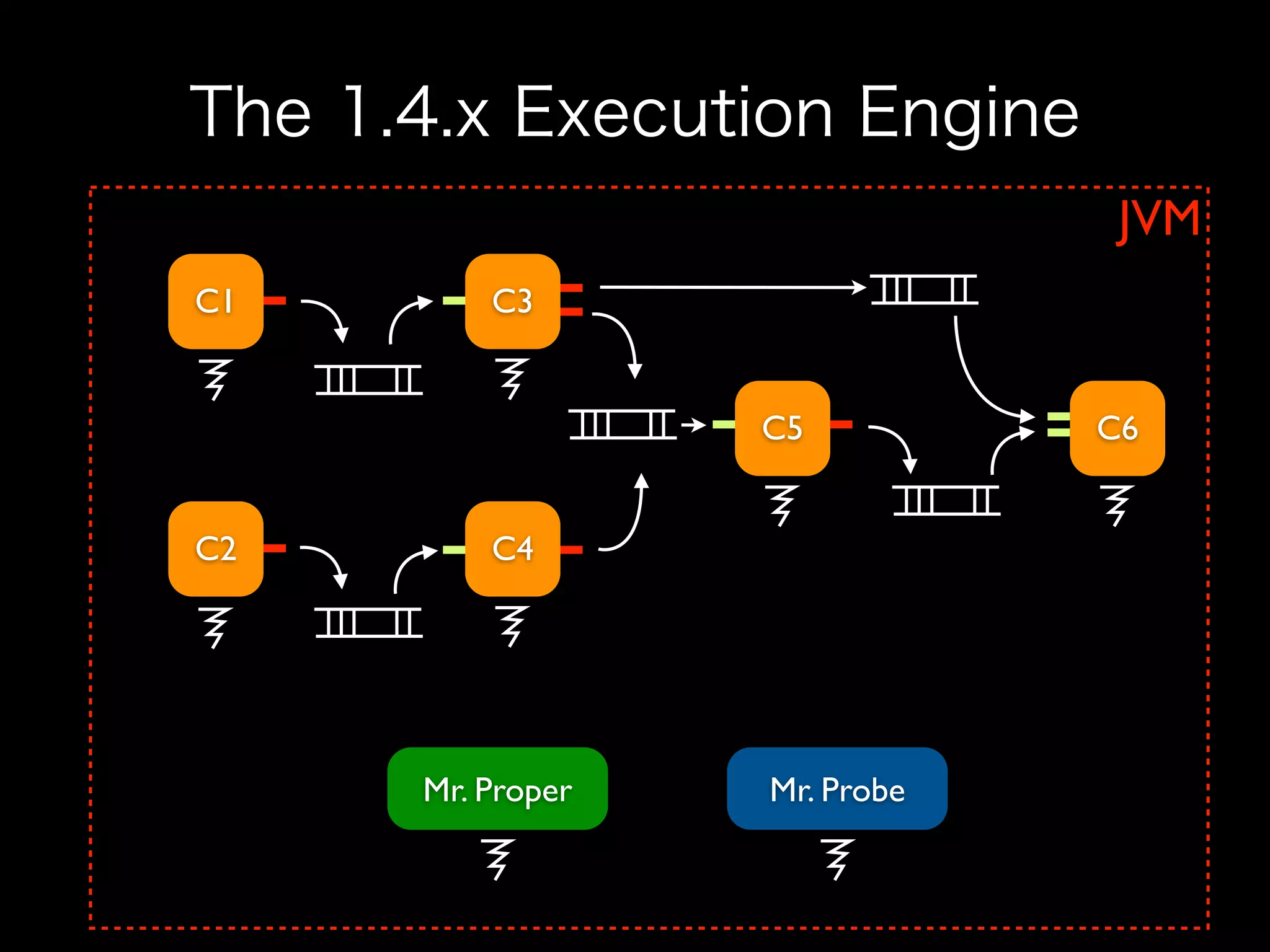

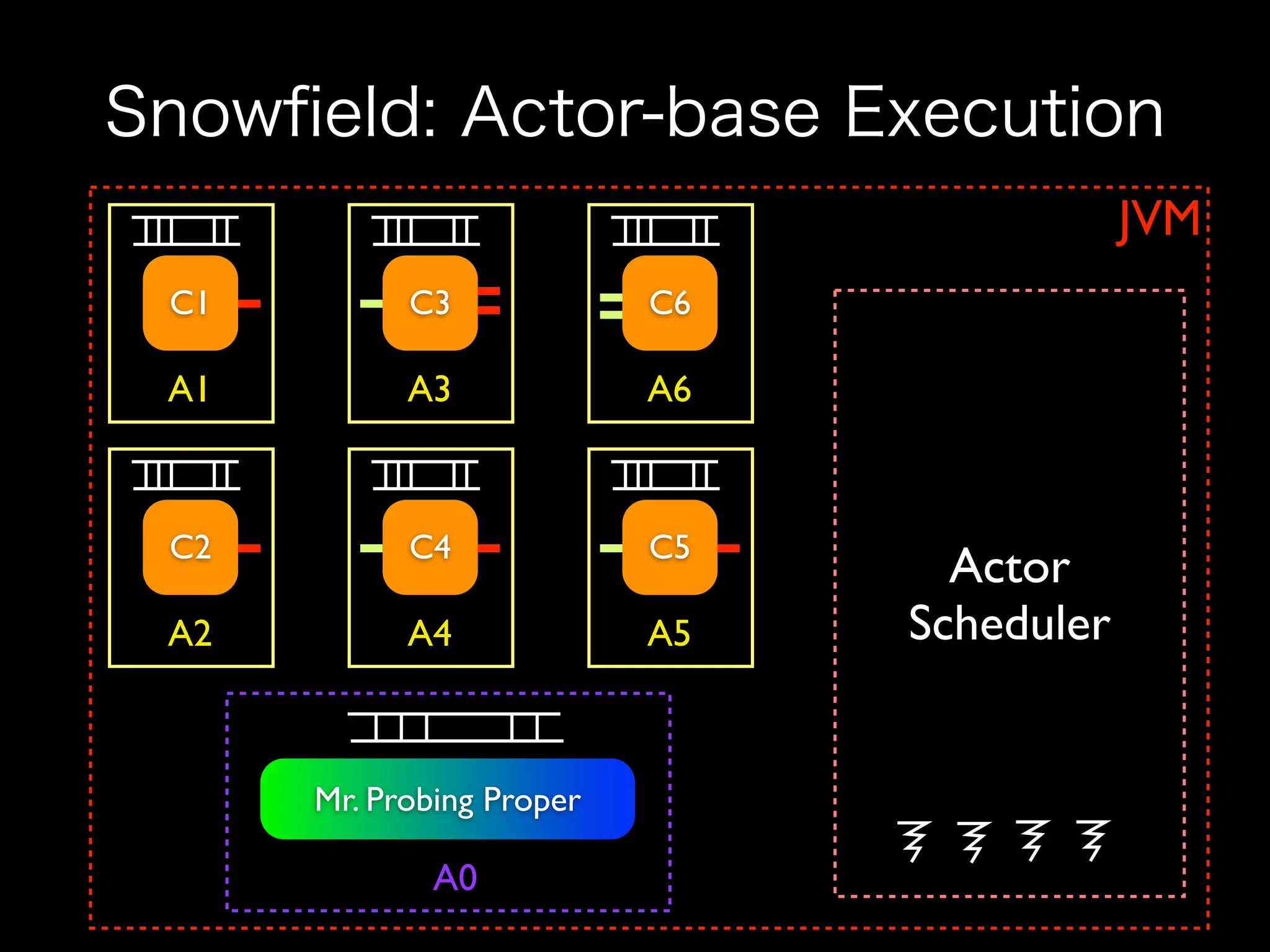

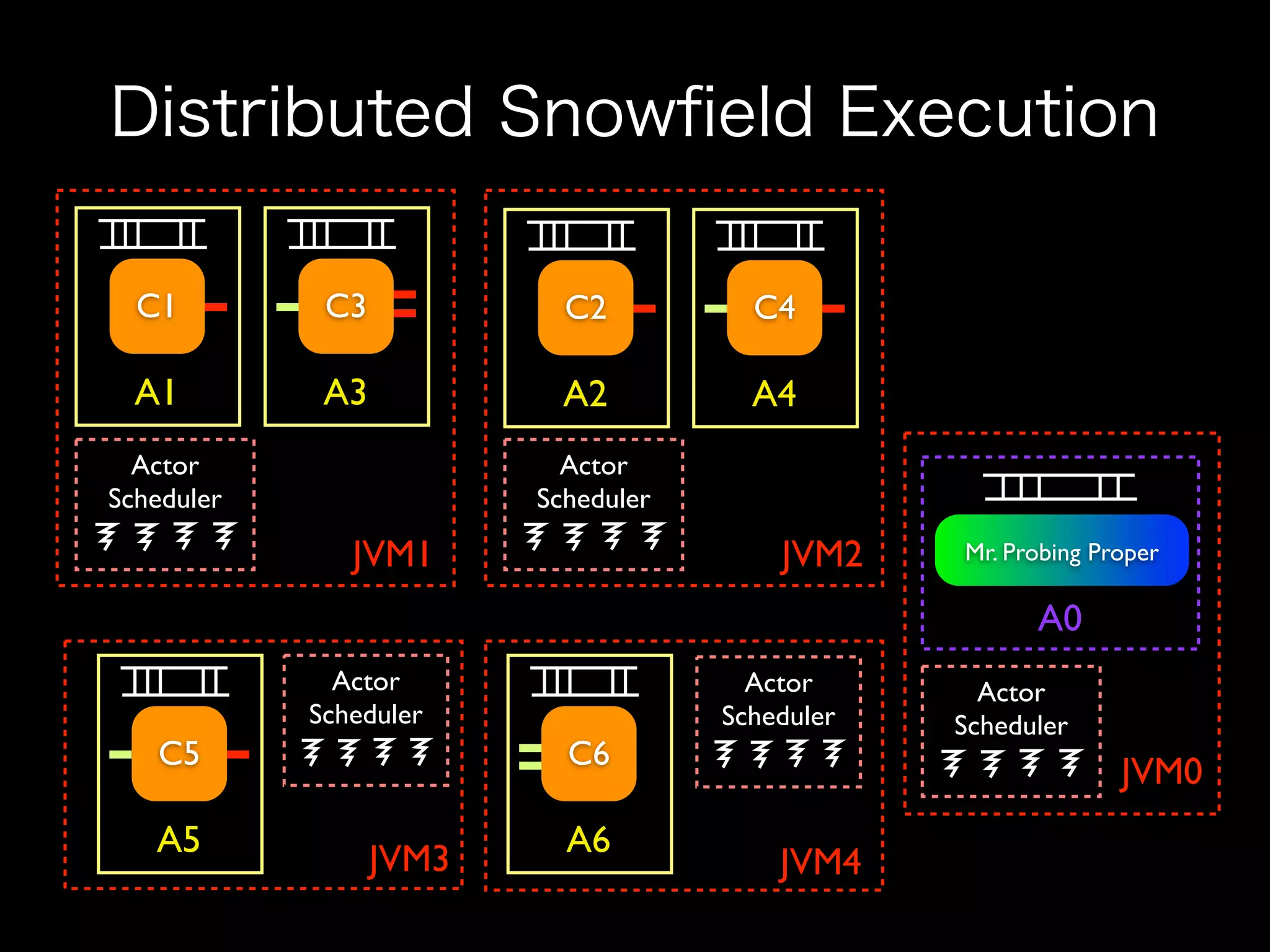

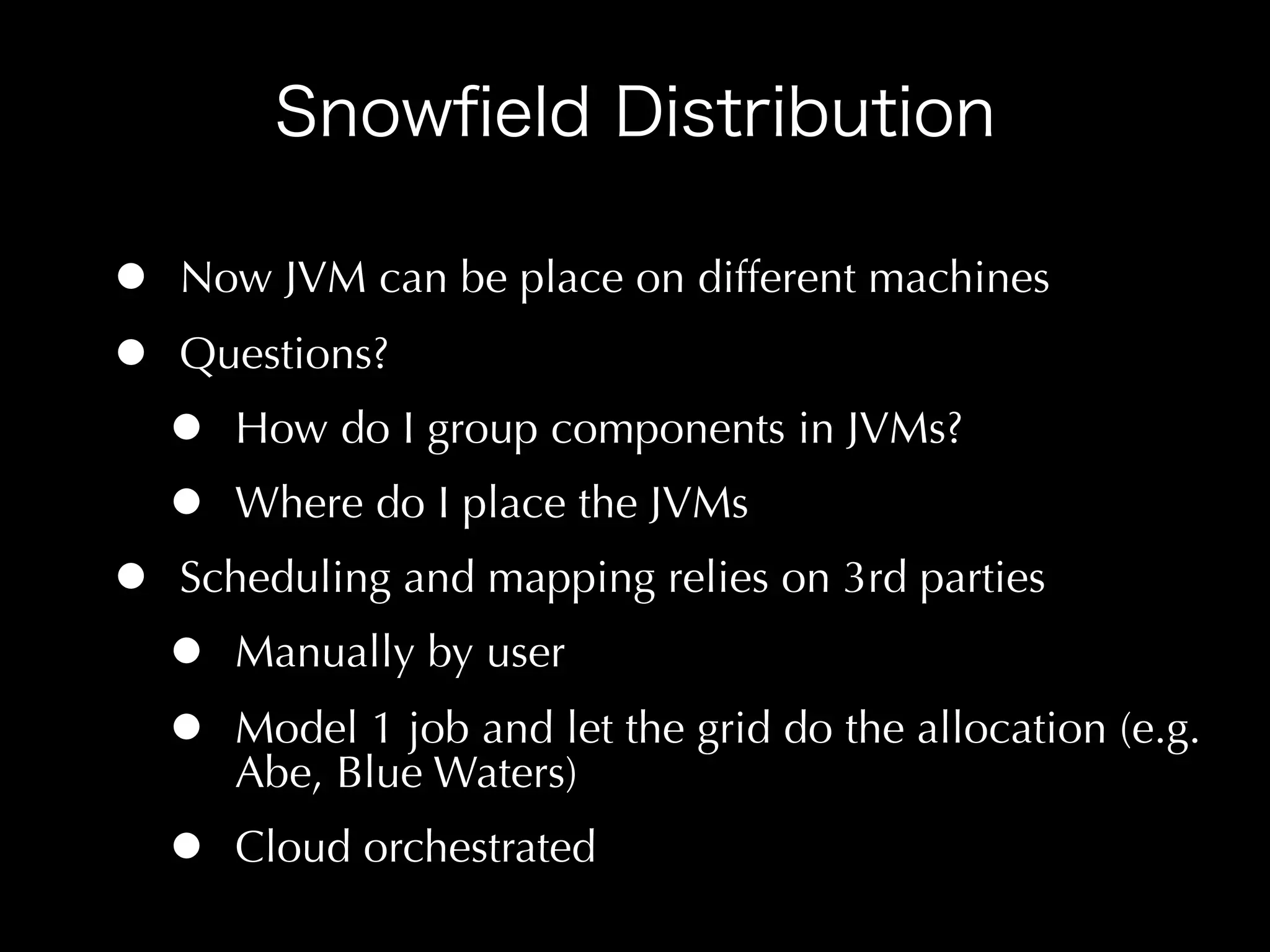

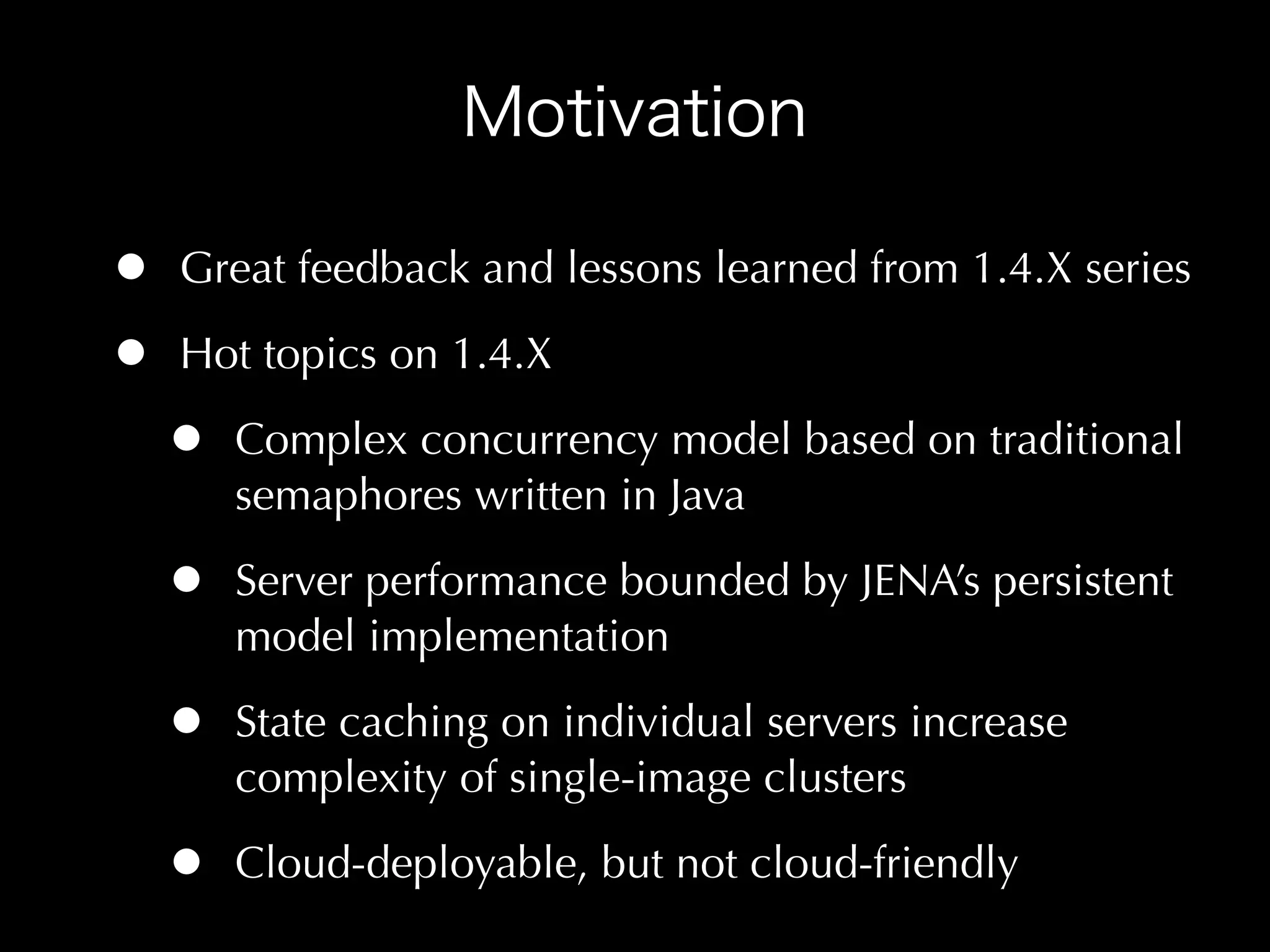

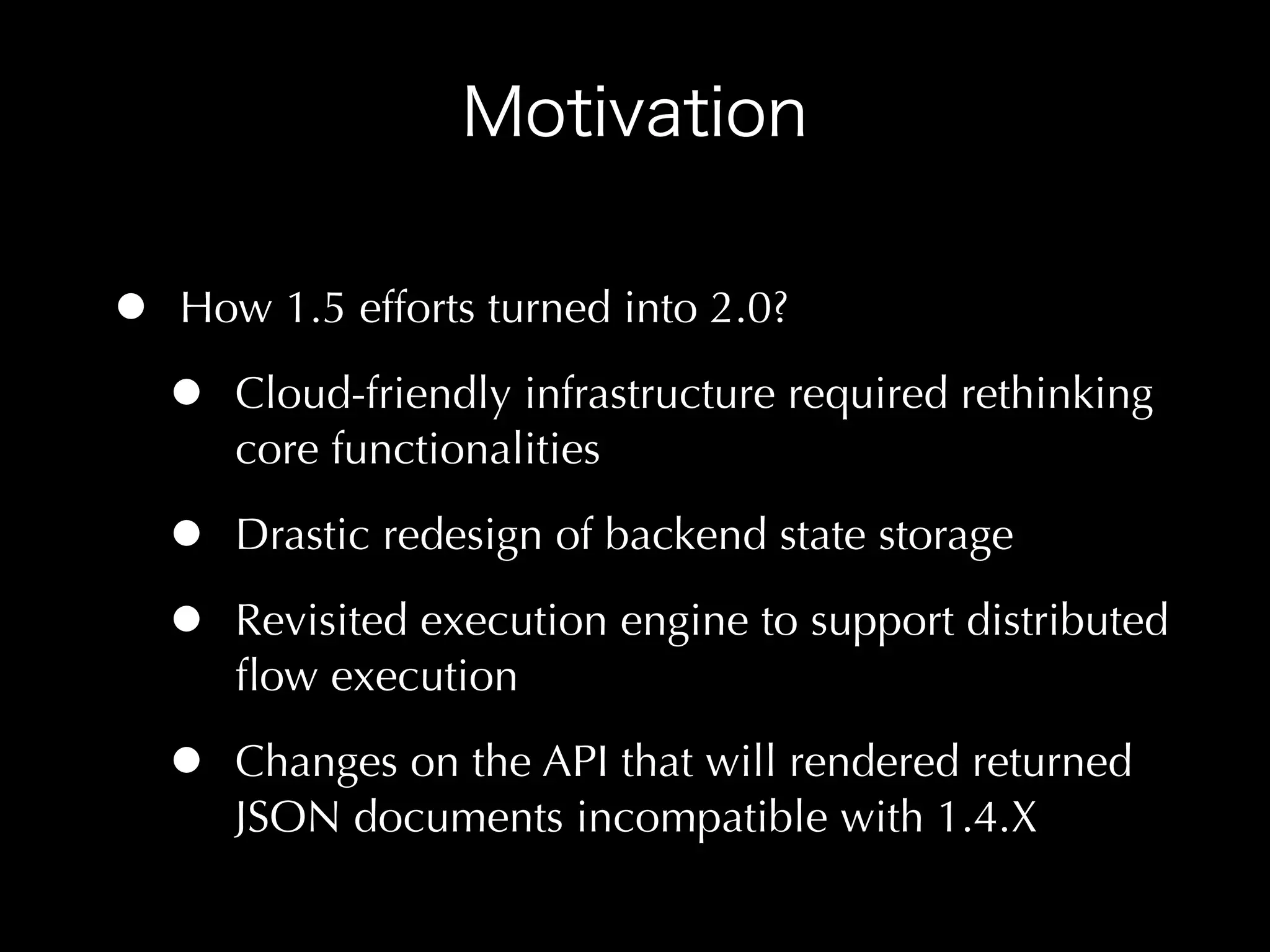

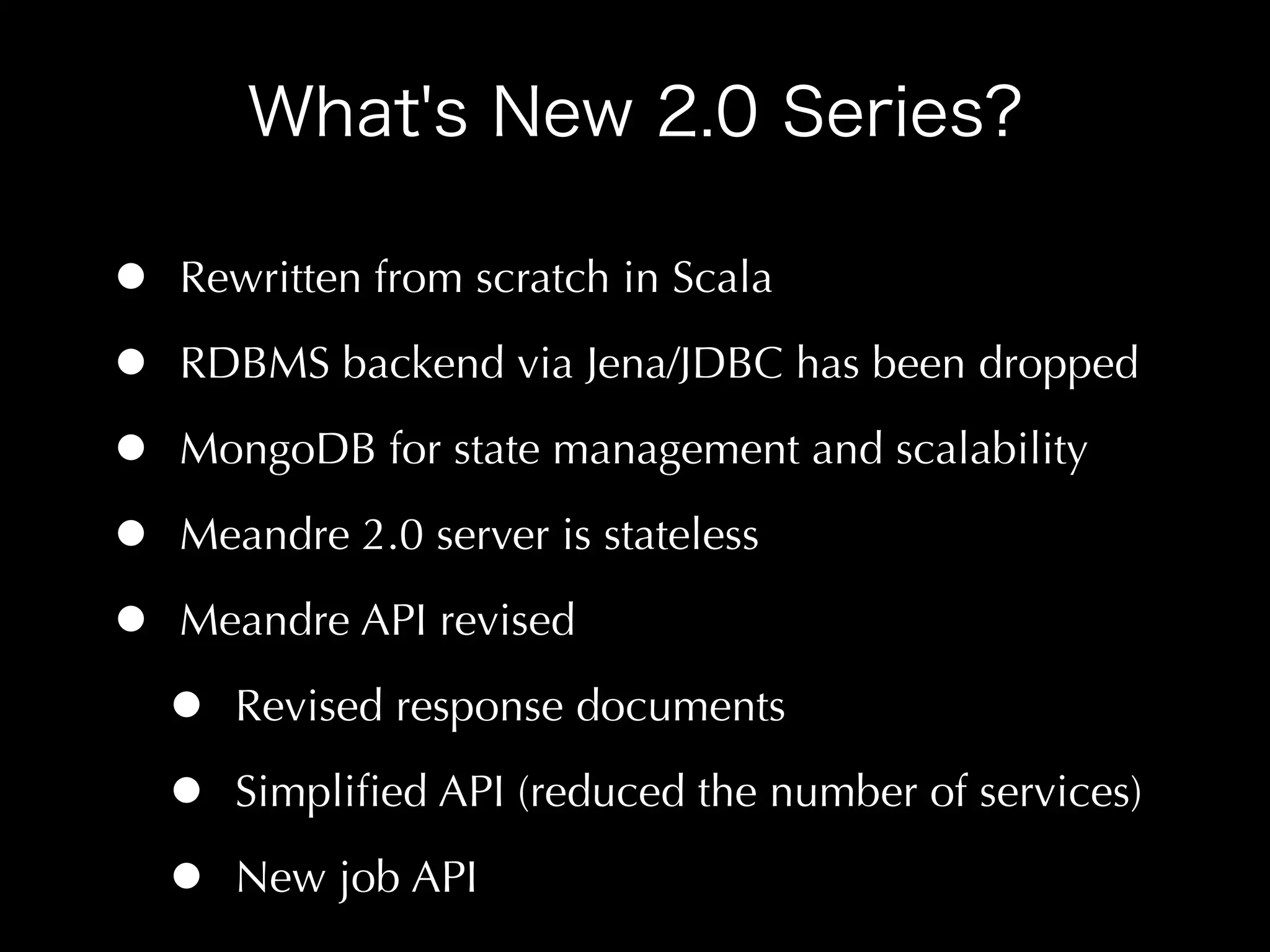

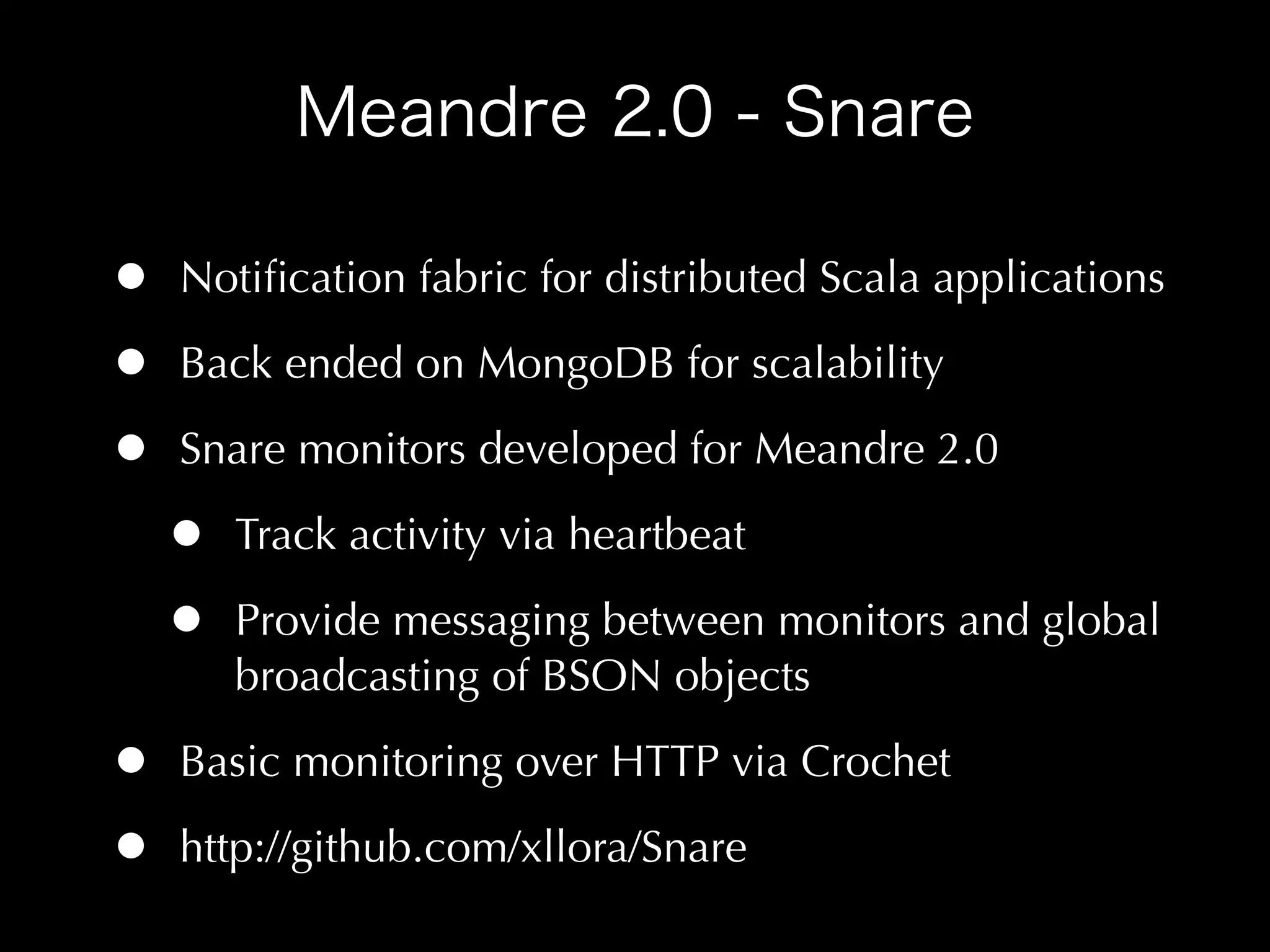

This deck covers the evolution from Meandre 1.4.x to Meandre 2.0: a redesigned backend storage layer, a move from an RDBMS to MongoDB for easier management and scalability, and a simplified, stateless server architecture. New features include a refined job API, cloud-ready infrastructure, and a concurrency model built on Scala actors to support distributed execution. Meandre 2.0 is designed for scalable, efficient flow execution, with jobs and state managed across multiple servers.
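To make the stateless-server idea concrete, here is a minimal sketch in which servers keep no job state of their own and coordinate only through a shared store. Everything in it is an assumption for illustration: `Job`, `JobStore`, `Server`, the status strings, and the in-memory `TrieMap` (standing in for the MongoDB backend) are hypothetical names, not Meandre 2.0's actual API or schema.

```scala
import scala.collection.concurrent.TrieMap

// Hypothetical job record; the fields are illustrative, not Meandre's schema.
final case class Job(id: String, flow: String, status: String, owner: Option[String])

// Stand-in for the shared backend store. An in-memory concurrent map
// keeps the sketch self-contained; it is not a MongoDB client.
final class JobStore {
  private val jobs = TrieMap.empty[String, Job]

  // Register a new job in the "Queued" state.
  def submit(id: String, flow: String): Job = {
    val job = Job(id, flow, "Queued", None)
    jobs.put(id, job)
    job
  }

  // Atomically claim a queued job. The compare-and-replace fails,
  // returning false, if another server changed the record first.
  def claim(id: String, server: String): Boolean =
    jobs.get(id) match {
      case Some(j) if j.status == "Queued" =>
        jobs.replace(id, j, j.copy(status = "Running", owner = Some(server)))
      case _ => false
    }

  def status(id: String): Option[String] = jobs.get(id).map(_.status)
}

// A server holds no job state; it only reads from and writes to the store,
// so any server can answer a query about any job.
final class Server(val name: String, store: JobStore) {
  def tryRun(id: String): Boolean = store.claim(id, name)
  def query(id: String): Option[String] = store.status(id)
}
```

Because all state lives in the store, a second server asking about a job claimed by the first sees the same status, and only one of two competing `tryRun` calls for the same job succeeds.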

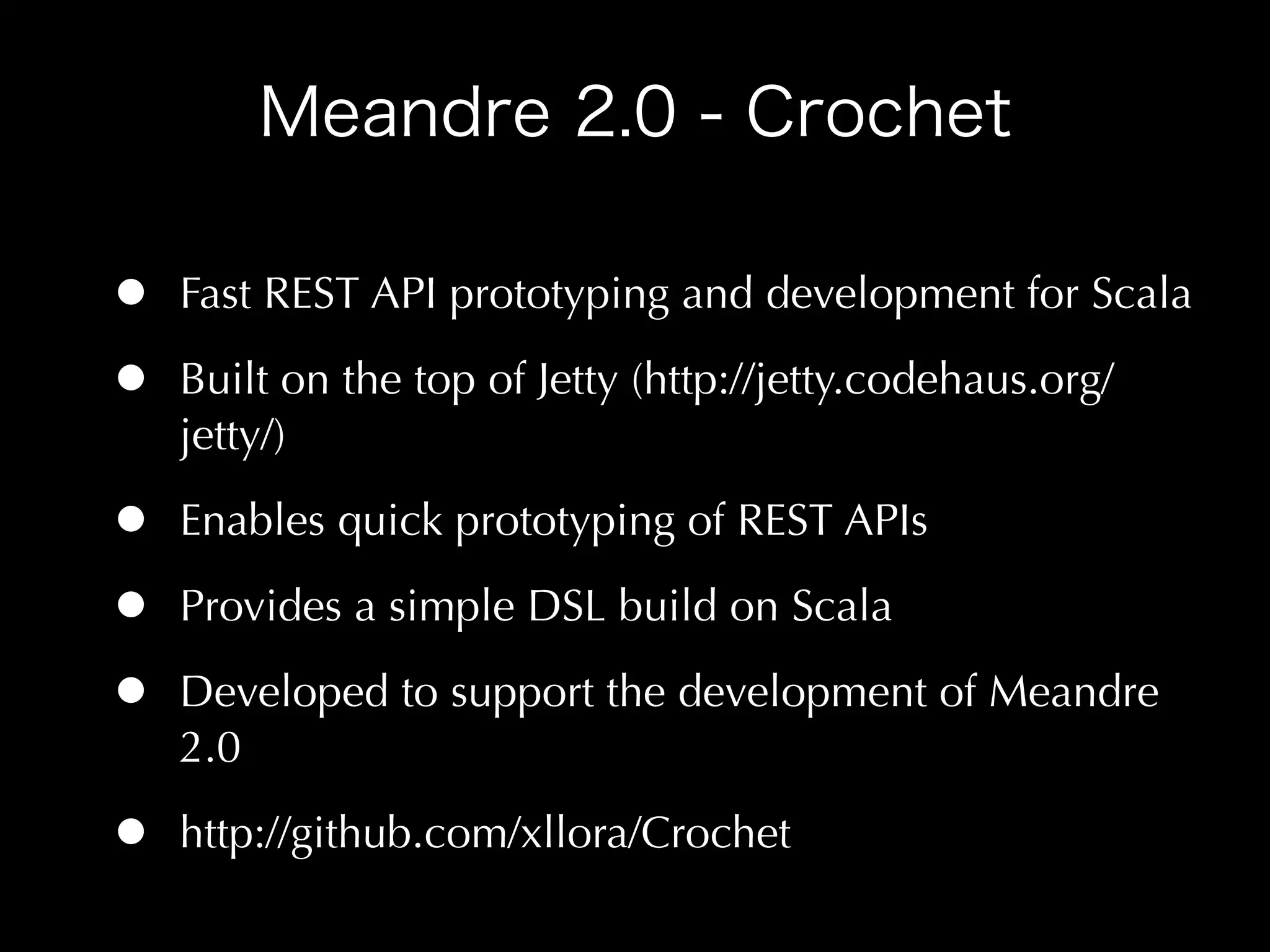

![Snare REPL session](https://image.slidesharecdn.com/meandre2-0alpha-preview-100715140140-phpapp01/75/Meandre-2-0-Alpha-Preview-12-2048.jpg)

    scala> import snare.tools.Implicits._

    scala> val monitors = (1 to 3).toList.map(
             i => snare.Snare(
               "me_"+i,
               "my_pool",
               (o)=>{println(o);true}
             )
           )

    scala> monitors.map(_.activity=true)
    2010.01.28 16:47:05.222::INFO:[EVTL] Notification event loop engaged for 230815e0-30cc-3afe-99ac-936d497d1282
    2010.01.28 16:47:05.231::INFO:[EVTL] Notification event loop engaged for baec232f-d74d-3fd1-ad3a-caf362f58b7d
    2010.01.28 16:47:05.236::INFO:[EVTL] Notification event loop engaged for d057fcde-fd10-3edd-9fd2-cfe464c6971c
    2010.01.28 16:47:08.136::INFO:[HRTB] Heartbeat engaged for baec232f-d74d-3fd1-ad3a-caf362f58b7d
    2010.01.28 16:47:08.136::INFO:[HRTB] Heartbeat engaged for 230815e0-30cc-3afe-99ac-936d497d1282
    2010.01.28 16:47:08.136::INFO:[HRTB] Heartbeat engaged for d057fcde-fd10-3edd-9fd2-cfe464c6971c

    scala> monitors(0).broadcast("""{"msg":"Fooo!!!"}""")

    scala> monitors(0).notifyPeer(
             "230815e0-30cc-3afe-99ac-936d497d1282",
             """{"msg":"Fooo!!!"}"""
           )
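
The session above creates three monitors in one pool, activates them, then sends one message to every peer and one to a single peer by ID. The following sketch mimics that interaction pattern in plain Scala so it can be run without the library. It is not Snare's implementation: `Pool`, `Monitor`, the `active` flag, and the return values are hypothetical, delivery is an in-process method call rather than a network notification, and deriving each ID with `UUID.nameUUIDFromBytes` is only a convenience to make IDs reproducible.

```scala
import java.util.UUID
import scala.collection.mutable

// Hypothetical in-memory pool; peers are looked up by their UUID string.
final class Pool(val name: String) {
  private val peers = mutable.LinkedHashMap.empty[String, Monitor]

  def register(m: Monitor): Unit = peers.put(m.uuid, m)

  // Deliver msg to every active peer; returns how many callbacks accepted it.
  def broadcast(msg: String): Int =
    peers.values.count(p => p.active && p.callback(msg))

  // Deliver msg to one peer; false if the peer is unknown, inactive,
  // or its callback rejects the message.
  def notifyPeer(uuid: String, msg: String): Boolean =
    peers.get(uuid).exists(p => p.active && p.callback(msg))
}

// Hypothetical monitor: a label, the pool it joins, and a callback that
// handles an incoming message and reports whether it was accepted.
final class Monitor(val label: String, pool: Pool, val callback: String => Boolean) {
  // Name-based UUID so the same pool and label always give the same ID.
  val uuid: String =
    UUID.nameUUIDFromBytes(s"${pool.name}/$label".getBytes("UTF-8")).toString

  // Inactive monitors receive nothing until switched on.
  var active: Boolean = false

  pool.register(this)

  def broadcast(msg: String): Int = pool.broadcast(msg)
  def notifyPeer(peer: String, msg: String): Boolean = pool.notifyPeer(peer, msg)
}
```

With three monitors registered and activated, `monitors(0).broadcast(msg)` invokes all three callbacks (the sender included, in this sketch), while `monitors(0).notifyPeer(monitors(1).uuid, msg)` invokes only the second monitor's callback.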