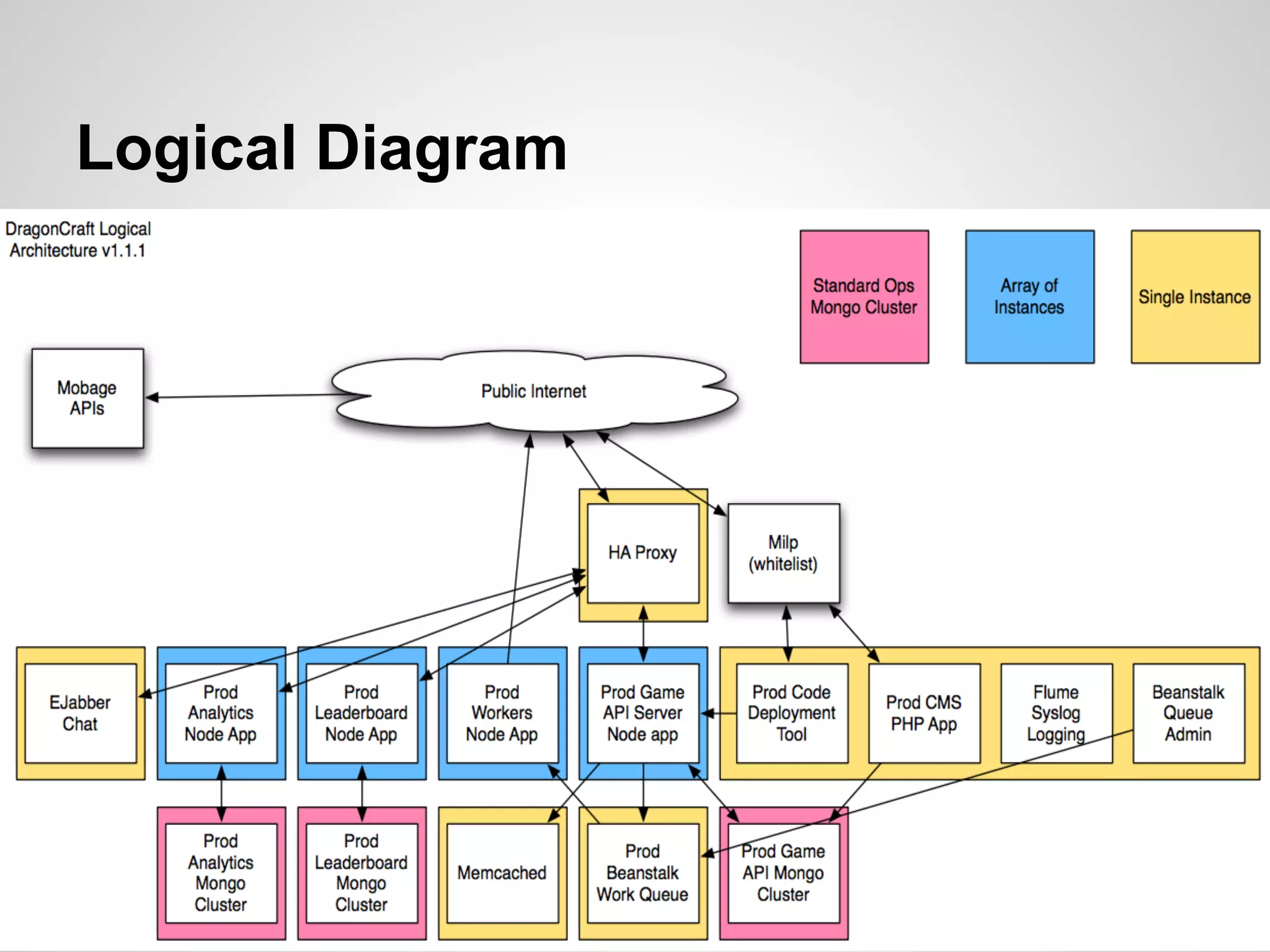

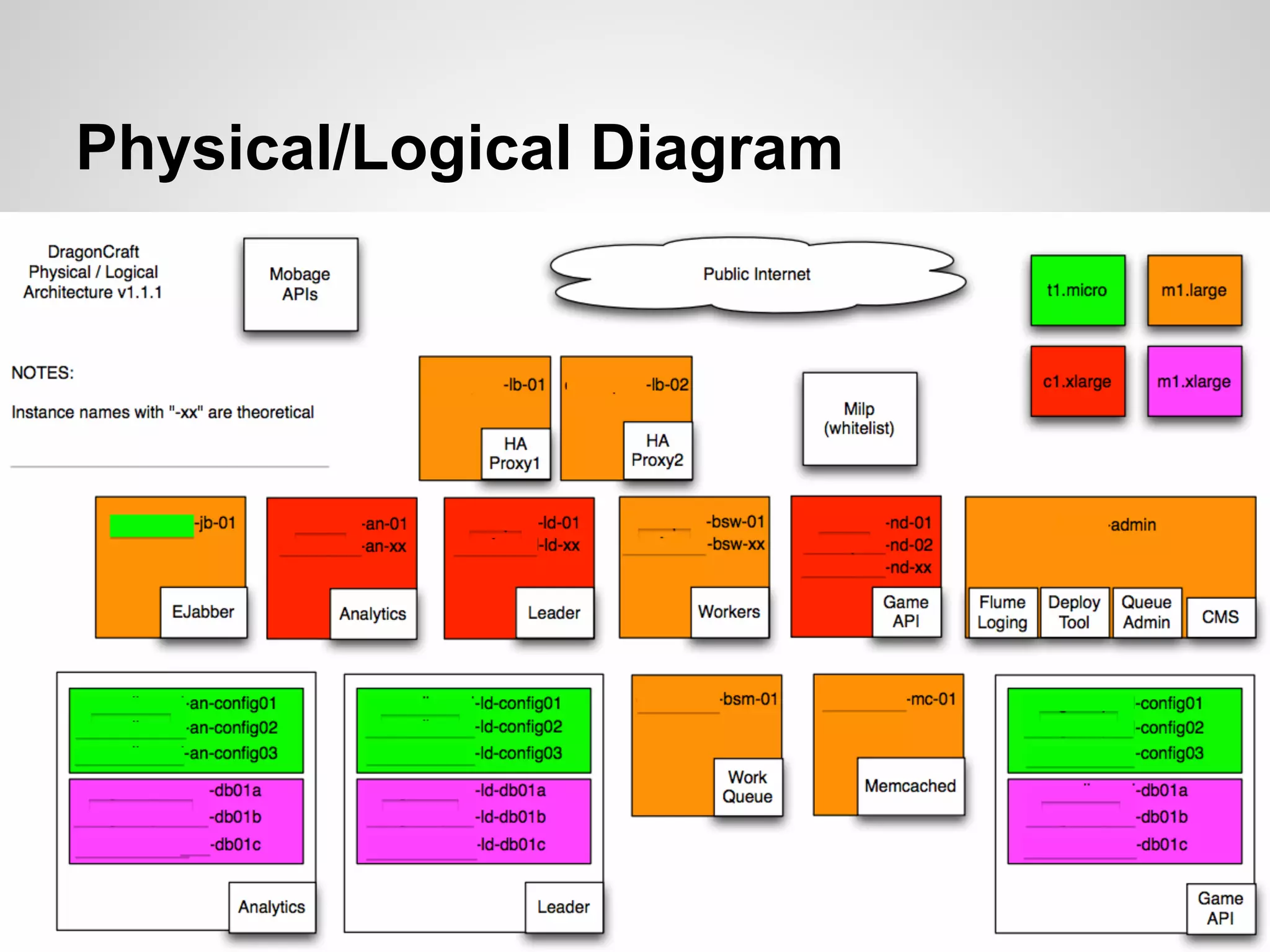

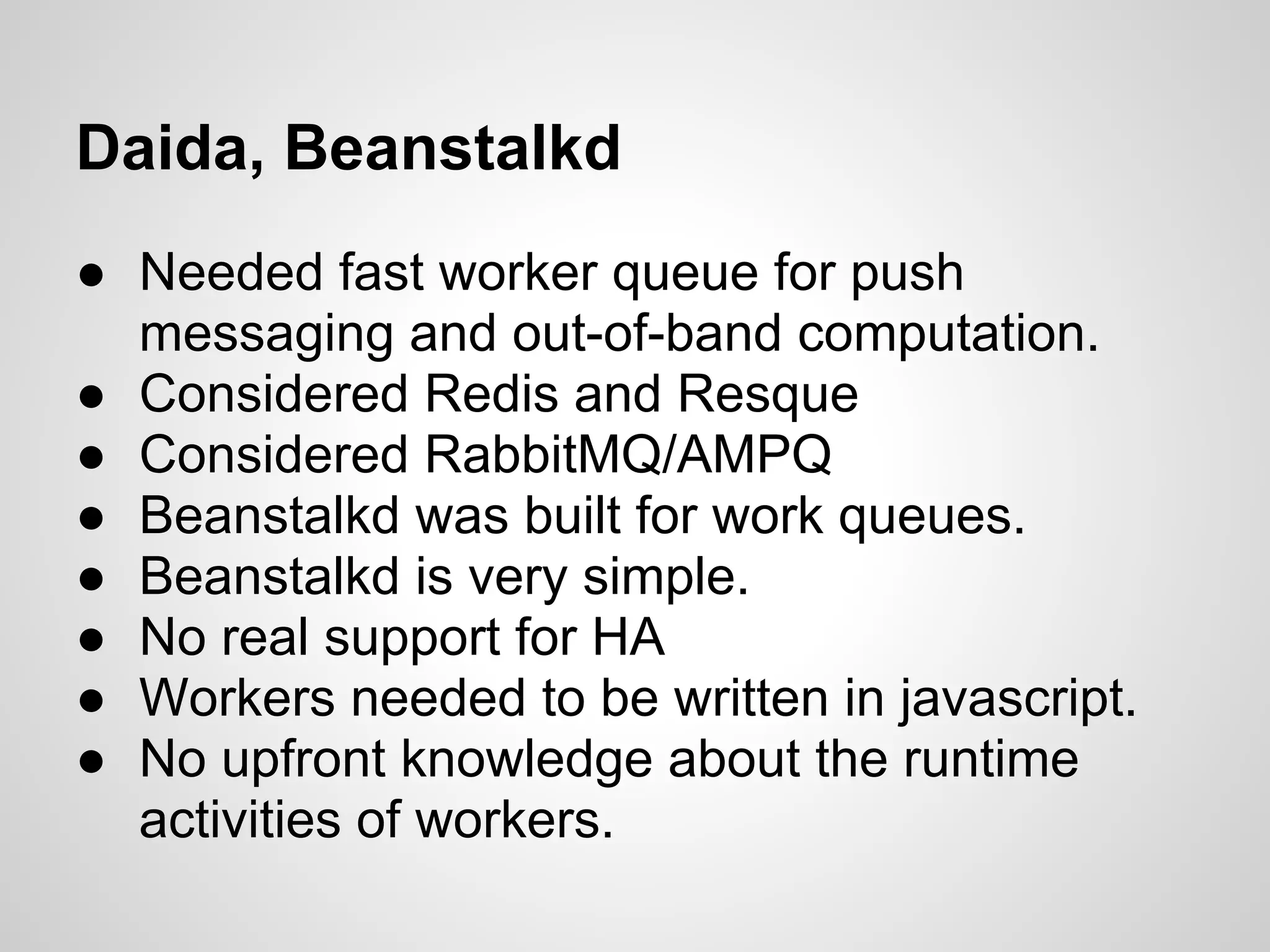

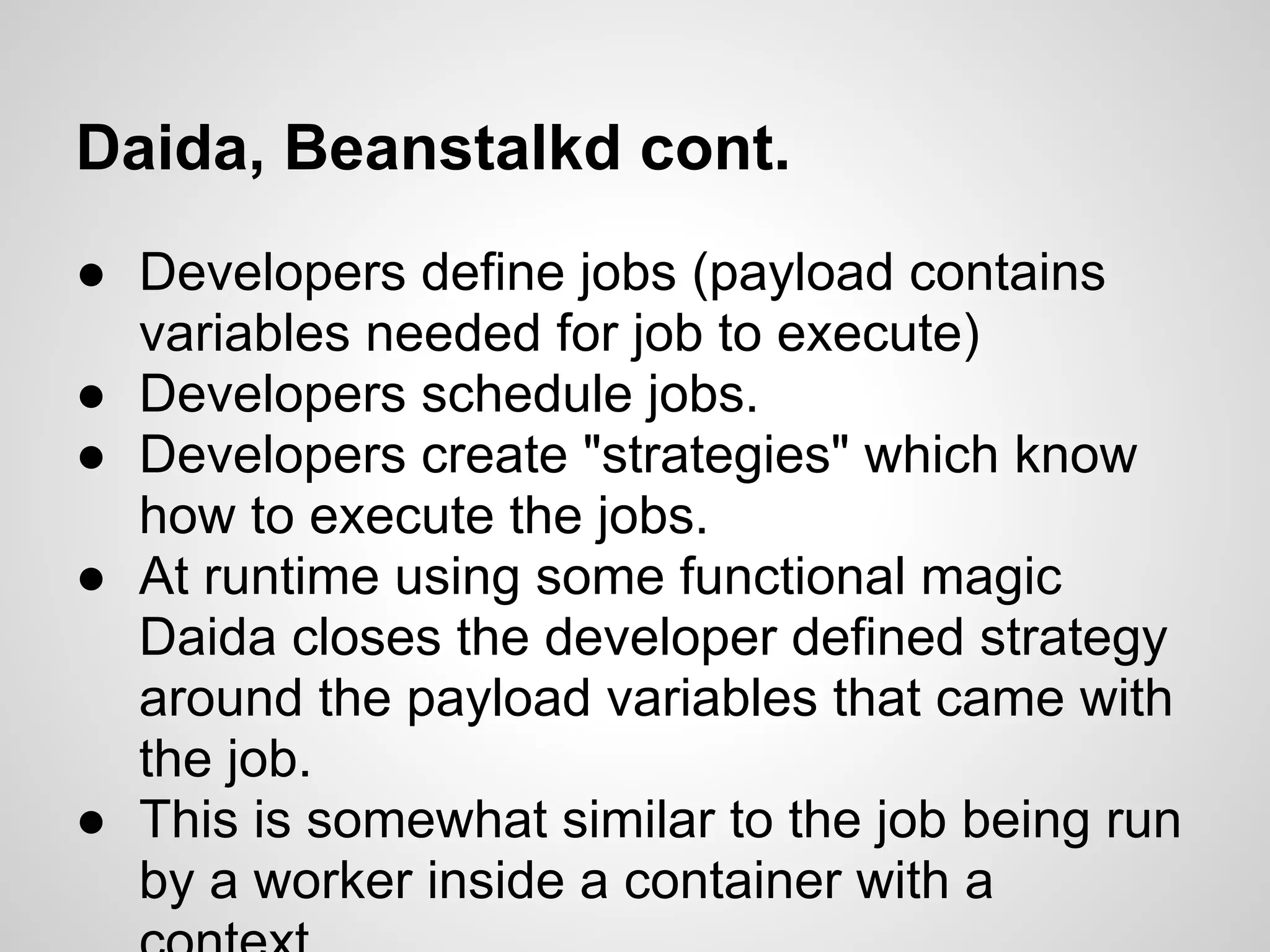

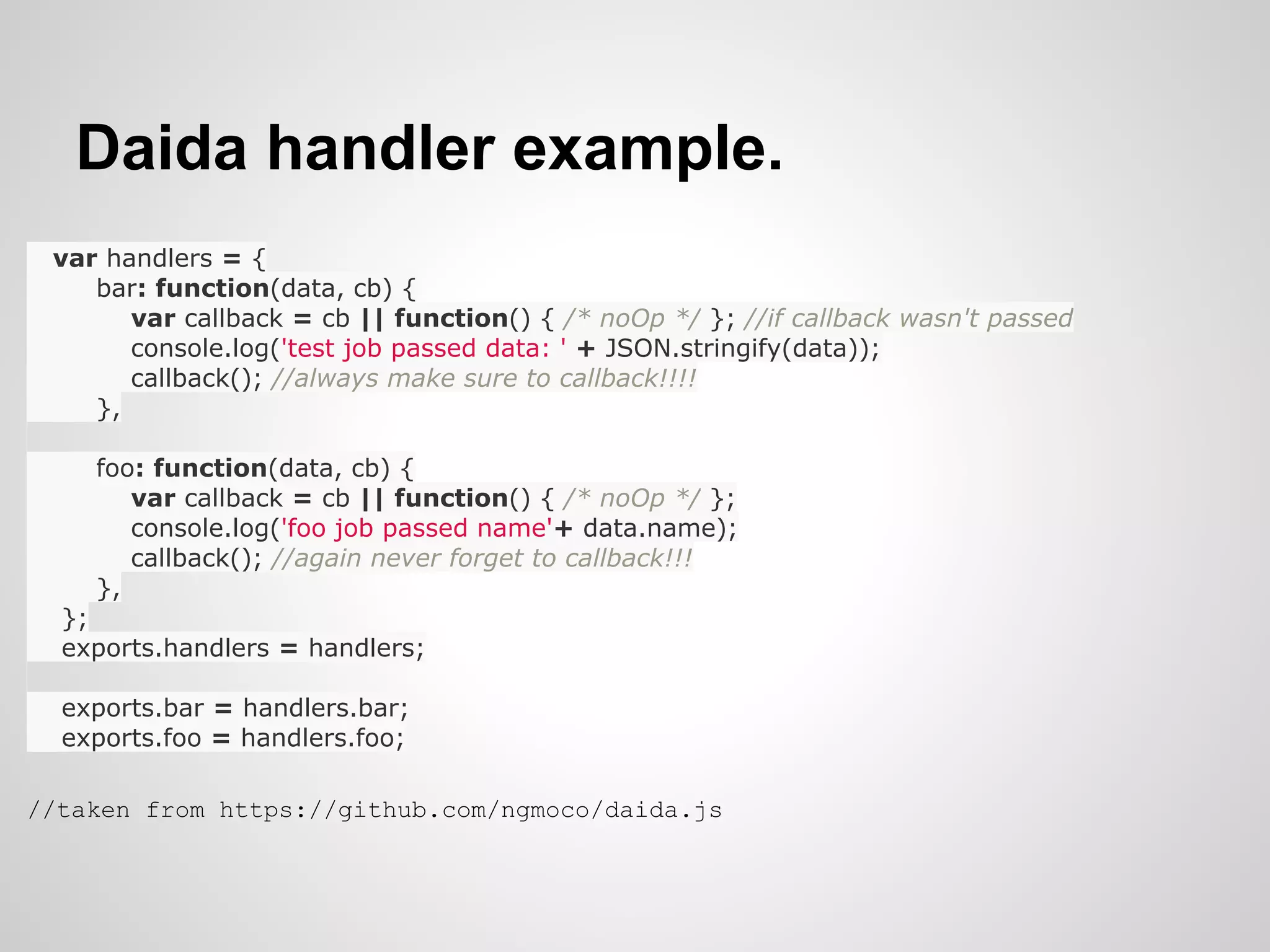

The document gives an architectural overview of Dragoncraft by Freeverse Inc., focusing on the use of Node.js, MongoDB, and EC2 to build RESTful, HTTP-based games for handheld clients. It also covers infrastructure management, including deployment strategies, backup processes, and a centralized logging system, and addresses the challenges of scaling and performance testing. Finally, it highlights the services and libraries that support the platform, emphasizing efficient job scheduling and execution with tools such as Beanstalkd and Megaphone.

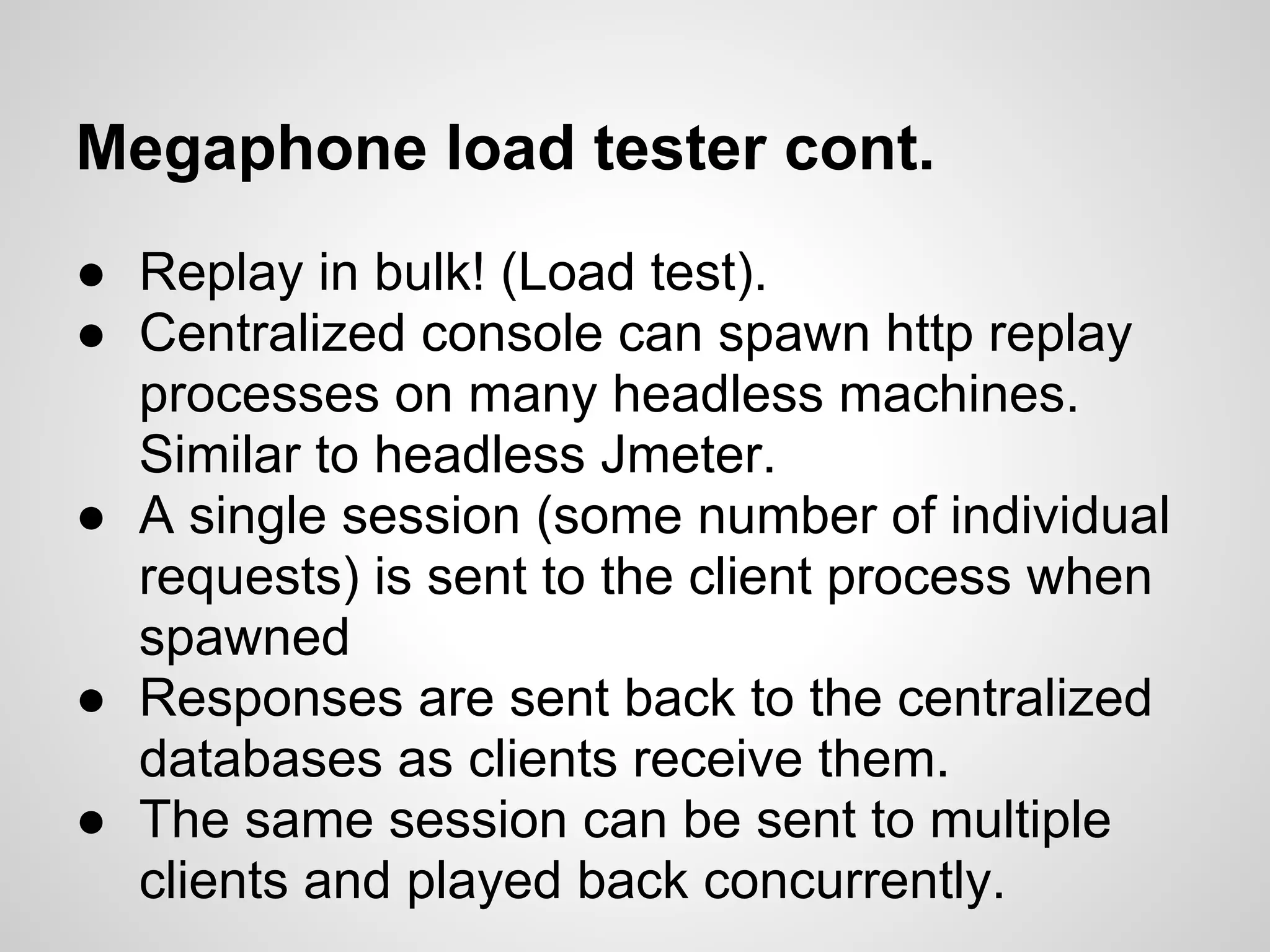

Ex.: session handler script for manipulating requests at runtime.

![%% This module contains the functions to manipulate req/resp for the dcraft_session1 playback

-module(dcraft_session1).

-include("blt_otp.hrl").

-export([ create_request/1, create_request/2, create_request/3]).

-record(request, { url, verb, body_vars}).

-record(response, {request_number, response_obj}).

create_request(Request) -> create_request(Request, []).

create_request(Request, Responses) -> create_request(Request, Responses, 0).

create_request(#request{url="http://127.0.0.1:8080/1.2.1/dragoncraft/player/sanford/mission/"++OldMissionId} = Request, Responses, RequestNumber) ->

?DEBUG_MSG("~p Request for wall Found!~n", [?MODULE]),

[LastResponseRecord|RestResponses] = Responses,

{{_HttpVer, _ResponseCode, _ResponseDesc}, _Headers, ResponseBodyRaw} = LastResponseRecord#response.response_obj,

{ok, ResponseBodyObj} = json:decode(ResponseBodyRaw),

ResponseKVs = element(1, ResponseBodyObj),

[Response_KV1 | [Response_KV2 | Response_KV_Rest ]] = ResponseKVs,

Response_KV2_Key = element(1, Response_KV2),

Response_KV2_Val = element(2, Response_KV2),

ResponseDataObj = element(1, Response_KV2_Val),

[ResponseDataKV | ResponseDataKVRest ] = ResponseDataObj,

ResponseData_KV_Key = element(1, ResponseDataKV), %<<"identifier">>

ResponseData_KV_Val = element(2, ResponseDataKV),

MissionId = binary_to_list(ResponseData_KV_Val),

Replaced = re:replace(Request#request.url, OldMissionId, MissionId++"/wall"),

[ReHead|ReRest] = Replaced,

[ReTail] = ReRest,

?DEBUG_MSG("~p replaced head is ~p and tail ~p ~n", [?MODULE, ReHead, ReTail]),

NewUrl = binary_to_list(ReHead)++binary_to_list(ReTail),

NewRequest = Request#request{url=NewUrl};

create_request(Request, Responses, RequestNumber) -> Request.](https://image.slidesharecdn.com/dragoncrafttechtalk-120629190137-phpapp02/75/Dragoncraft-Architectural-Overview-23-2048.jpg)
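The slide's handler reaches the mission identifier by position (second key of the body, first key of the nested object) and then patches the URL with a regex over the old id. As a rough sketch of the same idea, the identifier could instead be looked up by key and the new URL built from the fixed prefix. Everything here is an assumption for illustration: the module name `mission_url_sketch`, the functions `find_identifier/1` and `wall_url/2`, and the premise that the JSON decoder returns objects as `{[{Key, Value}, ...]}` tuples (the shape the `element/2` calls on the slide imply). Only the `<<"identifier">>` key comes from the slide itself.

```erlang
-module(mission_url_sketch).
-export([find_identifier/1, wall_url/2]).

%% Hypothetical helpers for illustration; not part of dcraft_session1.

%% Look up <<"identifier">> in a decoded JSON object of the assumed form
%% {[{Key, Value}, ...]}, searching nested objects depth-first.
%% JSON arrays are not searched. Returns {ok, Binary} or error.
find_identifier({Props}) when is_list(Props) ->
    case lists:keyfind(<<"identifier">>, 1, Props) of
        {_Key, Id} when is_binary(Id) ->
            {ok, Id};
        _ ->
            find_in_values([V || {_K, V} <- Props])
    end;
find_identifier(_Other) ->
    error.

%% Try each value in turn until one contains an identifier.
find_in_values([]) ->
    error;
find_in_values([V | Rest]) ->
    case find_identifier(V) of
        {ok, Id} -> {ok, Id};
        error -> find_in_values(Rest)
    end.

%% Build the wall URL from the fixed URL prefix (a string) and the
%% identifier found in the previous response, without using a regex.
wall_url(Prefix, DecodedBody) ->
    case find_identifier(DecodedBody) of
        {ok, Id} -> {ok, Prefix ++ binary_to_list(Id) ++ "/wall"};
        error -> error
    end.
```

A call such as `mission_url_sketch:wall_url(Prefix, Body)` returns `error` when no identifier is present, so a caller could fall back to the unmodified request, as the last clause of `create_request/3` does on the slide.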