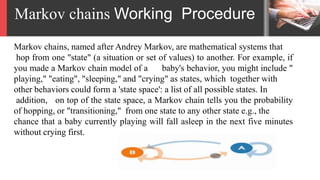

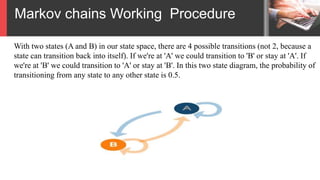

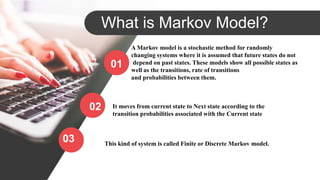

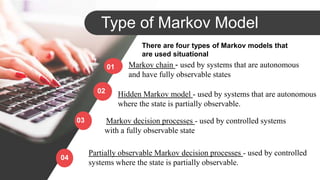

This presentation introduces Markov models. A Markov model is a stochastic model of a randomly changing system that moves between states, where the probability of moving to the next state depends only on the current state, not on the states that came before it. There are four main types of Markov models: Markov chains, hidden Markov models, Markov decision processes, and partially observable Markov decision processes. A Markov chain is defined by a set of possible states and the transition probabilities between them, so the probability of moving from the current state to any given next state can be read directly from those transition probabilities.
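As a minimal sketch of the "states plus transition probabilities" idea, the following Python snippet simulates a small Markov chain. The two weather states, their probabilities, and the names `TRANSITIONS`, `next_state`, `simulate`, and `step_distribution` are all hypothetical and chosen only for illustration; they do not come from the presentation.

```python
import random

# Hypothetical two-state chain. Each row lists the probabilities of the
# next state given the current state, and each row sums to 1.
TRANSITIONS = {
    "sunny": {"sunny": 0.8, "rainy": 0.2},
    "rainy": {"sunny": 0.4, "rainy": 0.6},
}


def next_state(current, rng):
    """Sample the next state using only the current state's row."""
    row = TRANSITIONS[current]
    states = list(row)
    weights = [row[s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]


def simulate(start, steps, seed=0):
    """Return a path of `steps` transitions beginning at `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        # Only path[-1] is consulted; earlier states are never used.
        path.append(next_state(path[-1], rng))
    return path


def step_distribution(dist):
    """Push a probability distribution over states forward by one step."""
    out = {state: 0.0 for state in TRANSITIONS}
    for state, p in dist.items():
        for target, q in TRANSITIONS[state].items():
            out[target] += p * q
    return out


if __name__ == "__main__":
    print(simulate("sunny", 10))
    print(step_distribution({"sunny": 1.0, "rainy": 0.0}))
```

Note that `next_state` receives only the current state, which is how the sketch encodes the assumption that history beyond the current state does not matter.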

![Markov property](https://image.slidesharecdn.com/markov-180404004320/85/Markov-Model-chains-4-320.jpg)

Markov property

- Markov property: the current state of the system depends only on the previous state of the system.
- The state of the system at time T+1 depends on the state of the system at time T.

Diagram: a chain of states X at times t = 1, 2, 3, 4, 5.
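The slide's statement can be written more formally as follows. This is the standard textbook form of the Markov property for a discrete-time process, not a formula taken from the slide; the symbols $X_t$ (the state at time $t$) and $x_1, \dots, x_{t+1}$ (particular state values) are notation assumed here.

$$
P(X_{t+1} = x_{t+1} \mid X_t = x_t, X_{t-1} = x_{t-1}, \dots, X_1 = x_1) = P(X_{t+1} = x_{t+1} \mid X_t = x_t)
$$

In words: once the state at time $t$ is known, the earlier states $X_1, \dots, X_{t-1}$ add no further information about the state at time $t+1$.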