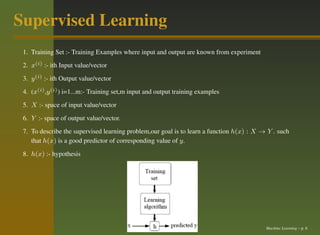

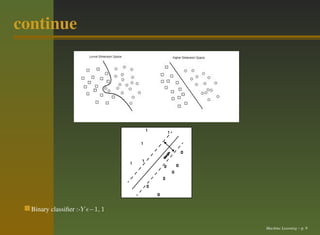

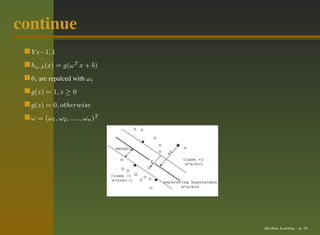

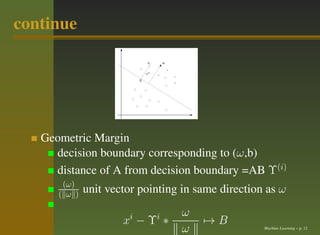

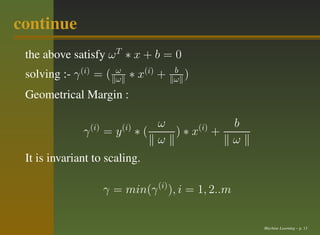

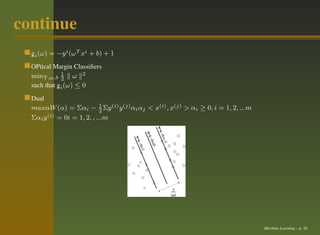

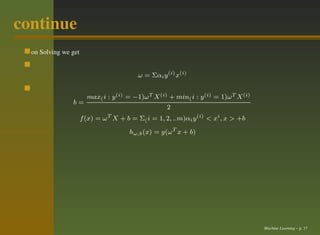

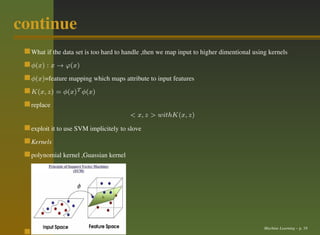

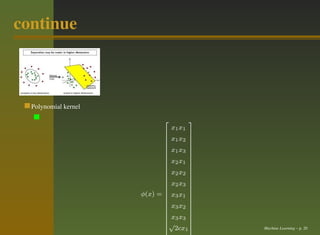

This document outlines a talk on machine learning and support vector machines (SVMs). It begins with an introduction to machine learning, whose goal is to let computers learn from examples rather than being explicitly programmed. It then surveys the types of machine learning problems, including supervised learning, where labeled training data is provided. SVMs are introduced as a supervised method for classification and regression that finds an optimal separating hyperplane in a feature space. The document then outlines kernels and how they implicitly map data into a higher-dimensional space where linear separation becomes possible; polynomial and Gaussian kernels are briefly described. Applications mentioned include natural language processing, data mining, speech recognition, and web classification.
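
To make the kernel idea concrete, here is a minimal sketch in plain Python. It is not taken from the talk. The function names, the parameters `degree`, `coef0`, and `gamma`, and the XOR-style toy points are all assumptions chosen for illustration. The sketch uses common textbook forms of the polynomial and Gaussian kernels, checks that a degree-2 polynomial kernel equals an ordinary dot product in a three-dimensional feature space, and shows that points which no line separates in two dimensions are split by a plane after the mapping.

```python
import math


def dot(x, y):
    """Ordinary dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(x, y))


def polynomial_kernel(x, y, degree=2, coef0=0.0):
    """Polynomial kernel k(x, y) = (x . y + coef0) ** degree (assumed form)."""
    return (dot(x, y) + coef0) ** degree


def gaussian_kernel(x, y, gamma=1.0):
    """Gaussian (RBF) kernel k(x, y) = exp(-gamma * ||x - y||^2) (assumed form)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def phi_degree2(x):
    """Explicit feature map for the 2-D kernel (x . y) ** 2.

    Maps (x1, x2) to (x1^2, sqrt(2) * x1 * x2, x2^2), so that
    dot(phi(x), phi(y)) equals (x . y) ** 2.
    """
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


# The kernel computes the feature-space dot product without building phi.
x, y = (1.0, 2.0), (3.0, -1.0)
implicit = polynomial_kernel(x, y)               # works in the original 2-D space
explicit = dot(phi_degree2(x), phi_degree2(y))   # works in the 3-D feature space

# XOR-style toy data (illustrative only): no single line separates the
# two classes in 2-D, but the sign of the second mapped coordinate does.
xor_points = [
    ((1.0, 1.0), 1),
    ((-1.0, -1.0), 1),
    ((1.0, -1.0), -1),
    ((-1.0, 1.0), -1),
]
separated = all(phi_degree2(p)[1] * label > 0 for p, label in xor_points)

print(math.isclose(implicit, explicit), separated)
```

In the mapped space the hyperplane with normal vector (0, 1, 0) and zero offset separates the toy classes, which is the sense in which a kernel can turn a nonlinear problem into a linear one. A full SVM would additionally choose the hyperplane that maximizes the margin, which this sketch does not attempt.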