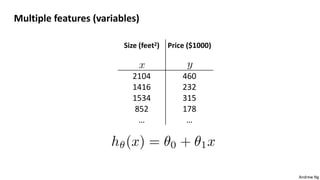

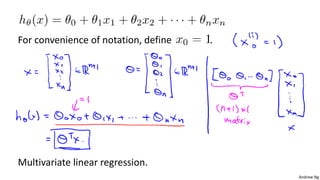

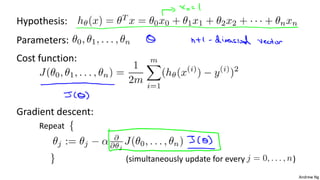

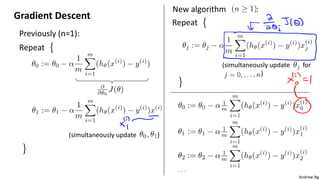

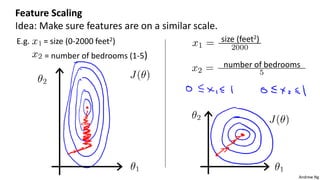

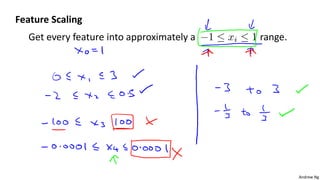

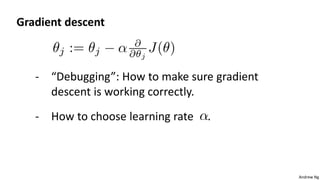

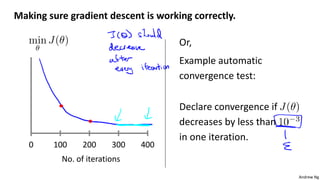

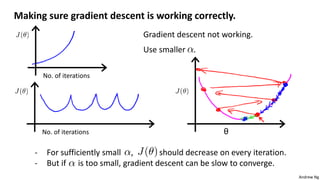

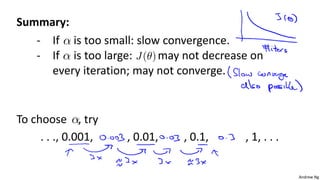

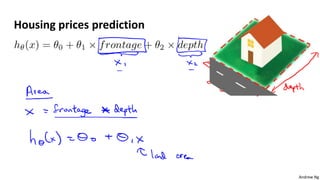

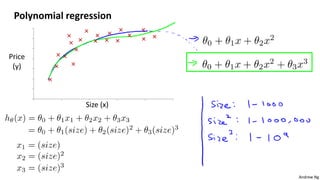

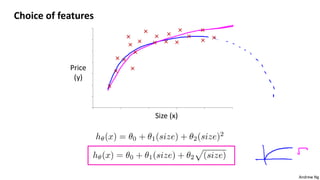

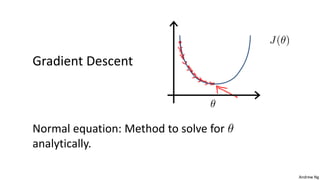

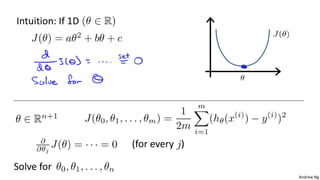

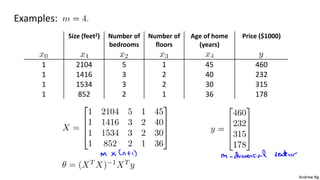

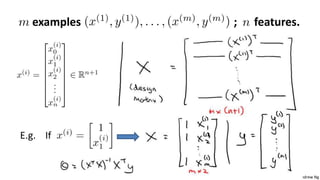

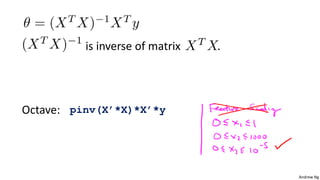

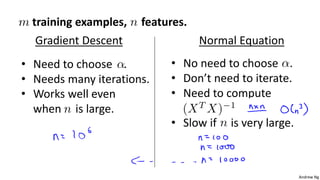

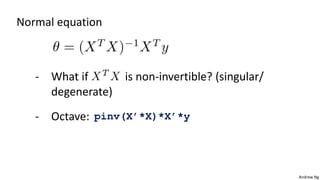

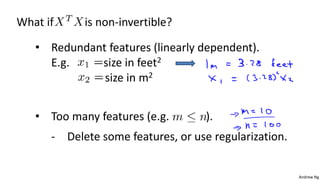

This document discusses linear regression with multiple variables. It introduces notation for multiple features and describes how gradient descent updates all parameters simultaneously on each iteration. Feature scaling brings features with very different ranges onto comparable scales, which helps gradient descent converge faster. The document also discusses how to choose an appropriate learning rate for gradient descent. It covers polynomial regression, which fits curved relationships by adding powers of existing features as new features. Finally, it describes solving for the parameters analytically with the normal equation, and the problems that arise when the matrix in that equation is non-invertible.
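The techniques summarized above fit together in one small sketch. The pure-Python example below uses a made-up, noise-free housing-style dataset and the standard library only. It mean-normalizes the features, runs batch gradient descent with simultaneous parameter updates, and solves the same problem with the normal equation. The helper names (`scale_features`, `gradient_descent`, `normal_equation`, `polynomial_features`), the learning rate `alpha=0.1`, and the iteration count are hypothetical choices, not values taken from the slides. Recording the cost on every iteration is one way to check the learning rate: if the cost ever increases, `alpha` is probably too large.

```python
from statistics import mean, pstdev


def scale_features(rows):
    """Mean-normalize every feature column: x -> (x - mu) / sigma."""
    cols = list(zip(*rows))
    mus = [mean(c) for c in cols]
    sigmas = [pstdev(c) or 1.0 for c in cols]  # avoid dividing by zero on constant columns
    scaled = [[(x - mu) / s for x, mu, s in zip(row, mus, sigmas)] for row in rows]
    return scaled, mus, sigmas


def add_bias(rows):
    """Prepend x_0 = 1 to every example so theta_0 acts as the intercept."""
    return [[1.0] + list(row) for row in rows]


def polynomial_features(values, degree):
    """Polynomial regression: map one feature x to [x, x^2, ..., x^degree]."""
    return [[v ** p for p in range(1, degree + 1)] for v in values]


def predict(theta, x):
    """Hypothesis h_theta(x) = theta^T x."""
    return sum(t * xi for t, xi in zip(theta, x))


def gradient_descent(X, y, alpha=0.1, iters=2000):
    """Batch gradient descent on J(theta) = 1/(2m) * sum (h(x) - y)^2.

    Returns the final theta and the cost recorded before each step.
    """
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    history = []
    for _ in range(iters):
        errors = [predict(theta, x) - yi for x, yi in zip(X, y)]
        history.append(sum(e * e for e in errors) / (2 * m))  # J(theta) before this step
        grads = [sum(e * x[j] for e, x in zip(errors, X)) / m for j in range(n)]
        # Every theta_j is computed from the same errors, so the update is simultaneous.
        theta = [t - alpha * g for t, g in zip(theta, grads)]
    return theta, history


def normal_equation(X, y):
    """Solve (X^T X) theta = X^T y by Gauss-Jordan elimination with partial pivoting.

    Raises ValueError when X^T X is singular, e.g. redundant (linearly
    dependent) features or more features than training examples.
    """
    n = len(X[0])
    A = [[sum(x[i] * x[j] for x in X) for j in range(n)] for i in range(n)]
    b = [sum(x[i] * yi for x, yi in zip(X, y)) for i in range(n)]
    tol = 1e-12 * max(abs(v) for row in A for v in row)  # relative singularity threshold
    M = [row + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) <= tol:
            raise ValueError("X^T X is non-invertible")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [a - f * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


if __name__ == "__main__":
    # Made-up data: [size in sq ft, bedrooms], targets from y = 50 + 0.1*size + 20*bedrooms.
    rows = [[2104, 3], [1416, 2], [1534, 3], [852, 2], [3000, 4]]
    y = [50 + 0.1 * s + 20 * b for s, b in rows]

    scaled, mus, sigmas = scale_features(rows)
    theta_gd, history = gradient_descent(add_bias(scaled), y)
    theta_ne = normal_equation(add_bias(rows), y)  # no scaling needed here

    new = [1650, 3]
    new_scaled = [(x - mu) / s for x, mu, s in zip(new, mus, sigmas)]
    print("gradient descent:", predict(theta_gd, [1.0] + new_scaled))
    print("normal equation: ", predict(theta_ne, [1.0] + new))
    print("first/last cost: ", history[0], history[-1])
```

Gradient descent runs on the scaled features, while the normal equation is solved on the raw features because it does not need scaling. Both should give approximately the same prediction for a new example. Gauss-Jordan elimination stands in for the matrix inverse here to keep the sketch dependency-free; in practice a linear-algebra library routine would be the usual choice.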