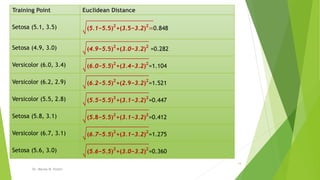

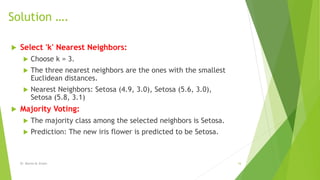

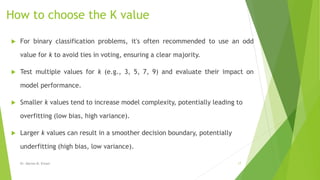

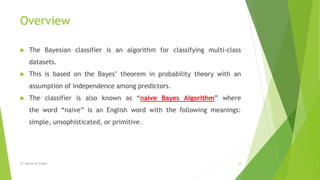

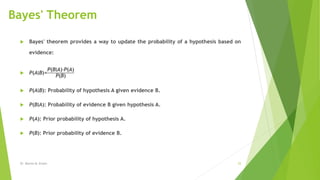

The document discusses the k-nearest neighbors (KNN) algorithm and the Naive Bayes classification method. KNN is a supervised learning algorithm for classification and regression: it predicts from the k training points closest to the query, taking a majority vote among them for classification or averaging their values for regression. Naive Bayes applies Bayes' theorem to pick the most probable class given the observed feature values, assuming the features are conditionally independent given the class. Key points covered include the choice of k in KNN, the calculation of Euclidean distance, and the feature-independence assumption in Naive Bayes.
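The two methods summarized above can be illustrated with a minimal sketch. It is not taken from the document itself: the function names (`euclidean_distance`, `knn_predict`, `naive_bayes_predict`), the toy data, and the use of Laplace smoothing (`alpha`) in the Naive Bayes part are all assumptions made for illustration. The KNN part assumes numeric features and a majority vote; the Naive Bayes part assumes categorical features.

```python
import math
from collections import Counter, defaultdict


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length numeric vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    distances = sorted(
        (euclidean_distance(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


def naive_bayes_predict(rows, labels, query, alpha=1.0):
    """Return the class maximizing log P(class) + sum_i log P(feature_i | class).

    Features are treated as independent given the class (the "naive"
    assumption). `alpha` is a Laplace smoothing constant, an assumption of
    this sketch, so that unseen feature values do not yield probability 0.
    """
    class_counts = Counter(labels)
    # value_counts[(class, feature index)][value] = occurrences in training data
    value_counts = defaultdict(Counter)
    # distinct values seen for each feature index, used by the smoothing term
    vocab = defaultdict(set)
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(label, i)][value] += 1
            vocab[i].add(value)

    best_label, best_score = None, -math.inf
    for label, count in class_counts.items():
        score = math.log(count / len(labels))  # log prior
        for i, value in enumerate(query):
            numerator = value_counts[(label, i)][value] + alpha
            denominator = count + alpha * len(vocab[i])
            score += math.log(numerator / denominator)  # log likelihood
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical toy data for illustration only.
points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
point_labels = ["A", "A", "A", "B", "B", "B"]
knn_result = knn_predict(points, point_labels, query=(2, 2), k=3)

weather = [("sunny", "hot"), ("sunny", "mild"), ("rainy", "mild"), ("rainy", "cool")]
play = ["no", "no", "yes", "yes"]
nb_result = naive_bayes_predict(weather, play, query=("sunny", "hot"))
```

The choice of `k` matters in practice: a small `k` makes the vote sensitive to noisy neighbors, while a large `k` smooths over local structure. An odd `k` is a common way to avoid ties in two-class problems.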